How-to: Add Data Registry tools to the CrewAI template

After you clone the DataRobot agentic templates repository and create an agentic workflow from the CrewAI agent template, you can modify the template to create a simple agent that searches the Data Registry, reads a dataset, and explains its contents. To do this, the agentic workflow calls two deployed DataRobot global agentic tools: one to search the Data Registry for a dataset and one to read that dataset.

Deploy the global agentic tools

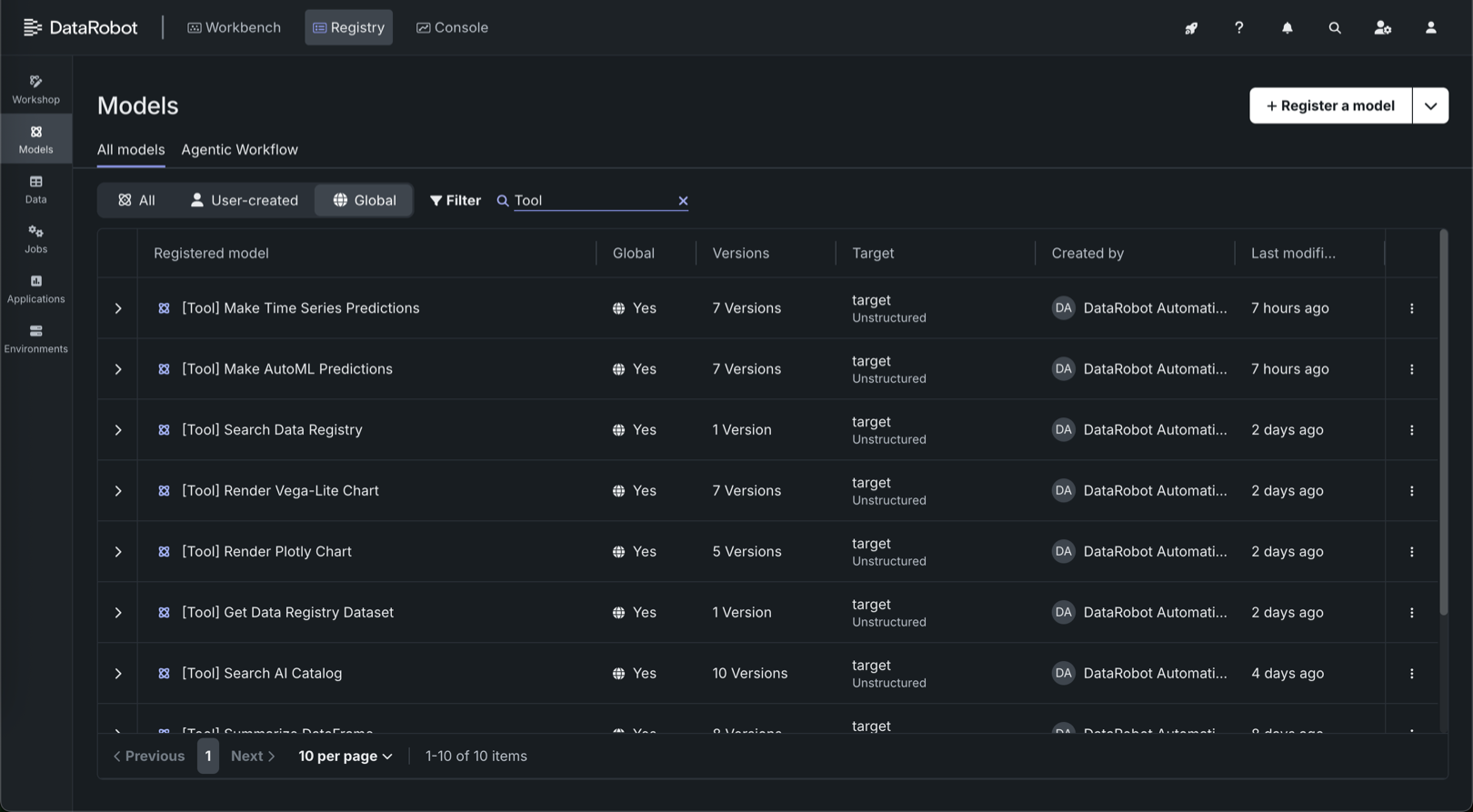

This walkthrough assumes you've deployed the Search Data Registry and Get Data Registry Dataset global tools from Registry.

Deploying the global agentic tools

These tools are deployed the same way as any other registered model. Because they are unstructured models, only a limited set of deployment settings is available; one of them is Choose prediction environment. Selecting a DataRobot Serverless environment is recommended.

After deploying the Data Registry agentic tools, save the deployment IDs from the deployment Overview tab or URL. These values are required for agentic workflow development (through a .env file), in the metadata for the custom agentic workflow in Workshop (through a model-metadata.yaml file) and, eventually, in production.

Clone the datarobot-agent-templates repository

To start building an agentic workflow, clone the datarobot-agent-templates public repository to DataRobot. This repository provides ready-to-use templates for building and deploying AI agents with multi-agent frameworks, so you can set up your own agents with minimal configuration. To clone version 11.4.0 and change to the new directory, run the following commands:

git clone --branch 11.4.0 --depth 1 https://github.com/datarobot-community/datarobot-agent-templates.git

cd datarobot-agent-templates

datarobot-agent-templates version

This walkthrough uses version 11.4.0 of the datarobot-agent-templates repository. Ensure that the workspace used for this walkthrough is on that version. Newer versions may not be compatible with the code provided below.

GitHub clone URL

For more information on cloning a GitHub repository, see the GitHub documentation.

Set up the environment

In the new directory containing the datarobot-agent-templates repository, use the command below to copy and rename the provided template environment file (.env.template). In this file, define the necessary environment variables.

cp .env.template .env

In the new .env file, enter your DATAROBOT_API_TOKEN and DATAROBOT_ENDPOINT. Then, enter any string to define a PULUMI_CONFIG_PASSPHRASE.

DataRobot credentials in codespaces

If you are using a DataRobot codespace, remove the DATAROBOT_API_TOKEN and DATAROBOT_ENDPOINT environment variables from the file, as they already exist in the codespace environment.

Next, add the following two environment variables to define the deployment IDs copied from the deployed Search Data Registry and Get Data Registry Dataset global agentic tools.

# Data Registry tool deployment IDs

DATA_REGISTRY_SEARCH_TOOL_DEPLOYMENT_ID=<YOUR_SEARCH_TOOL_DEPLOYMENT_ID>

DATA_REGISTRY_READ_TOOL_DEPLOYMENT_ID=<YOUR_READ_TOOL_DEPLOYMENT_ID>

The agent.py file loads these values with dotenv, which reads the key-value pairs from the .env file.
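As a minimal sketch of the check this step implies, the function below reads the two deployment ID variables from a mapping (the process environment by default) and fails early if either one is missing or still holds the `<YOUR_...>` placeholder. The function name `read_tool_deployment_ids` is hypothetical and is not part of the template; it assumes the .env file has already been loaded, for example with `load_dotenv()` from the python-dotenv package.

```python
import os
from typing import Dict, Mapping, Optional

# Variable names taken from the .env entries in this walkthrough.
REQUIRED_KEYS = (
    "DATA_REGISTRY_SEARCH_TOOL_DEPLOYMENT_ID",
    "DATA_REGISTRY_READ_TOOL_DEPLOYMENT_ID",
)


def read_tool_deployment_ids(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return both tool deployment IDs, or raise if any is unset.

    Hypothetical helper: assumes the .env file was already loaded into the
    environment (for example, by python-dotenv's load_dotenv()).
    """
    env = os.environ if env is None else env
    missing = [
        key
        for key in REQUIRED_KEYS
        # Treat an empty value or an unedited "<YOUR_...>" placeholder as missing.
        if not env.get(key) or env[key].startswith("<")
    ]
    if missing:
        raise RuntimeError("Missing deployment IDs in .env: " + ", ".join(missing))
    return {key: env[key] for key in REQUIRED_KEYS}
```

Calling `read_tool_deployment_ids()` once at startup surfaces a misconfigured .env file before any tool call is attempted.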

Run the CrewAI quickstart

Next, use task start to run quickstart.py, selecting the CrewAI template.

task start

To select the CrewAI template, press 1 and then press Enter to confirm your selection.

task start

task: [start] uv run quickstart.py


--------------------------------------------------------

Quickstart for DataRobot AI Agents

--------------------------------------------------------

Checking environment setup for required pre-requisites...

All pre-requisites are installed.

You will now select an agentic framework to use for this project.

For more information on the different agentic frameworks please go to:

https://github.com/datarobot-community/datarobot-agent-templates/blob/main/docs/getting-started.md

Please select an agentic framework to use:

1. agent_crewai

2. agent_generic_base

3. agent_langgraph

4. agent_llamaindex

5. agent_nat

Enter your choice (1-5): 1

Next, press Y and then Enter to install prerequisites and set up environments for the selected agent:

Would you like to setup the uv python environments and install pre-requisites now?

(y/n): y

Running these commands configures the environment for the agent_crewai template, removes all unnecessary files, and prepares the virtualenv to install the additional libraries required to run the selected agent template.

You can refresh the installed environment at any time by running:

task install

Optionally, before customizing the agent template, run the agent without modification. To test the code, use the following command:

task agent:cli START_DEV=1 -- execute --user_prompt 'Hi, how are you?'

You can also send a structured query as a prompt if your agent requires it.

task agent:cli START_DEV=1 -- execute --user_prompt '{"topic":"Generative AI"}'
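If you generate these commands from a script, quoting a JSON prompt by hand is easy to get wrong. The sketch below builds the same command line with the standard library's `shlex.join`, which applies POSIX shell quoting. The function name `build_execute_command` is hypothetical; the task name and arguments are copied from the commands above.

```python
import json
import shlex
from typing import Any, Dict, List, Union


def build_execute_command(user_prompt: Union[str, Dict[str, Any]]) -> str:
    """Return a shell-safe `task agent:cli ... execute` command line.

    A dict prompt is serialized to compact JSON first; a string is used as-is.
    """
    if not isinstance(user_prompt, str):
        user_prompt = json.dumps(user_prompt, separators=(",", ":"))
    argv: List[str] = [
        "task", "agent:cli", "START_DEV=1", "--",
        "execute", "--user_prompt", user_prompt,
    ]
    # shlex.join quotes each argument for a POSIX shell (not for cmd.exe or PowerShell).
    return shlex.join(argv)


print(build_execute_command({"topic": "Generative AI"}))
# task agent:cli START_DEV=1 -- execute --user_prompt '{"topic":"Generative AI"}'
```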

Now you can customize the code of your agent in the agent_crewai/agentic_workflow directory. In this walkthrough, the environment remains unchanged.

View all task commands

To view the tasks available before you run task start, run the task command:

❯ task

task: Available tasks for this project:

* default: ℹ️ Show all available tasks (run `task --list-all` to see hidden tasks)

* install: Install dependencies for all agent components and infra (aliases: req, install-all)

* start: ‼️ Quickstart for DataRobot Agent Templates ‼️

To view the tasks available after you run task start and select a framework, run the task command again:

❯ task

task: Available tasks for this project:

* default: ℹ️ Show all available tasks (run `task --list-all` to see hidden tasks)

* install: 🛠️ Install all dependencies for agent and infra

* agent:install: 🛠️ [agent_crewai] Install agent uv dependencies (aliases: agent:req)

* agent:add-dependency: 🛠️ [agent_crewai] Add provided packages as a new dependency to an agent

* agent:cli: 🖥️ [agent_crewai] Run the CLI with provided arguments

* agent:dev: 🔨 [agent_crewai] Run the development server

* agent:dev-stop: 🛑 [agent_crewai] Stop the development server

* agent:chainlit: 🛝 Run the Chainlit playground

* agent:create-docker-context: 🐳 [agent_crewai] Create the template for a local docker_context image

* agent:build-docker-context: 🐳 [agent_crewai] Build the Docker image

* infra:install: 🛠️ [infra] Install infra uv dependencies

* infra:build: 🔵 Deploy only playground testing resources with pulumi

* infra:deploy: 🟢 Deploy all resources with pulumi

* infra:refresh: ⚪️ Refresh and sync local pulumi state

* infra:destroy: 🔴 Teardown all deployed resources with pulumi

❯ task --list-all

task: Available tasks for this project:

* build:

* default: ℹ️ Show all available tasks (run `task --list-all` to see hidden tasks)

* deploy:

* destroy:

* install: 🛠️ Install all dependencies for agent and infra

* agent:add-dependency: 🛠️ [agent_crewai] Add provided packages as a new dependency to an agent

* agent:build-docker-context: 🐳 [agent_crewai] Build the Docker image

* agent:chainlit: 🛝 Run the Chainlit playground

* agent:cli: 🖥️ [agent_crewai] Run the CLI with provided arguments

* agent:create-docker-context: 🐳 [agent_crewai] Create the template for a local docker_context image

* agent:dev: 🔨 [agent_crewai] Run the development server

* agent:dev-stop: 🛑 [agent_crewai] Stop the development server

* agent:install: 🛠️ [agent_crewai] Install agent uv dependencies (aliases: agent:req)

* agent:lint:

* agent:lint-check:

* agent:test:

* agent:test-coverage:

* agent:update:

* infra:build: 🔵 Deploy only playground testing resources with pulumi

* infra:deploy: 🟢 Deploy all resources with pulumi

* infra:destroy: 🔴 Teardown all deployed resources with pulumi

* infra:info:

* infra:init:

* infra:install: 🛠️ [infra] Install infra uv dependencies

* infra:install-pulumi-plugin:

* infra:lint:

* infra:lint-check:

* infra:pulumi:

* infra:refresh: ⚪️ Refresh and sync local pulumi state

* infra:select:

* infra:select-env-stack:

* infra:test:

* infra:test-coverage:

In addition, to view all available agent CLI commands, run task agent:cli.

Customize the CrewAI template files

To customize the default CrewAI template to create a Data Registry Search and Summarize agentic workflow, open the agent_crewai/agentic_workflow directory and make the following changes to the agentic workflow artifacts:

- Modify the agent.py file.
- Create a tool_deployment.py file.
- Create a tool_data_registry_search.py file.
- Create a tool_data_registry_read.py file.
- Modify the model-metadata.yaml file.

Modify the agent.py file

Replace the contents of the CrewAI template's agent.py file with the code below. This replaces the previous planning, writing, and editing agents and tasks with tools to search and read datasets from the Data Registry, followed by new agents and tasks to use these tools to carry out the search, read, and edit workflow.


Modified file: agent.py

(Code listing for agent.py: 350 lines, not shown.)

Create the tool_deployment.py file

To implement deployed tools in an agentic workflow, create a tool_deployment.py file in the agent_crewai/agentic_workflow directory. After you create the file, add the contents below.

New file: tool_deployment.py

(Code listing for tool_deployment.py: 15 lines, not shown.)
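To illustrate the general pattern of calling a deployed tool, here is a rough, framework-free sketch. It is not the template's tool_deployment.py. Everything in it is an assumption made for illustration: the `DeployedTool` class, the `Transport` callable signature, and the `{"payload": ...}` request shape. The actual HTTP or SDK call is deliberately left to an injected function, so the sketch asserts nothing about the DataRobot API.

```python
import json
import os
from dataclasses import dataclass
from typing import Any, Callable, Dict, Mapping

# Hypothetical transport: (deployment_id, JSON request body) -> JSON response body.
Transport = Callable[[str, str], str]


@dataclass
class DeployedTool:
    """Hypothetical wrapper that routes a payload to one deployed tool."""

    name: str
    env_var: str          # environment variable that holds the deployment ID
    transport: Transport  # injected function that performs the real request

    def deployment_id(self, env: Mapping[str, str] = os.environ) -> str:
        value = env.get(self.env_var, "")
        if not value:
            raise RuntimeError(f"{self.env_var} is not set")
        return value

    def call(
        self, payload: Dict[str, Any], env: Mapping[str, str] = os.environ
    ) -> Dict[str, Any]:
        # The request shape is an assumption; adapt it to the deployed tool's contract.
        body = json.dumps({"payload": payload})
        return json.loads(self.transport(self.deployment_id(env), body))
```

Injecting the transport keeps the wrapper testable without network access: a test can pass a function that echoes the request instead of contacting a deployment.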

Create the tool_data_registry_search.py file

Create a tool_data_registry_search.py file in the agent_crewai/agentic_workflow directory. The updated agent.py file imports the SearchDataRegistryTool class defined in this file using an import statement: from tool_data_registry_search import SearchDataRegistryTool. After you create the file, add the contents below.

New file: tool_data_registry_search.py

(Code listing for tool_data_registry_search.py: 37 lines, not shown.)

Create the tool_data_registry_read.py file

Create a tool_data_registry_read.py file in the agent_crewai/agentic_workflow directory. The updated agent.py file imports the ReadDataRegistryTool class defined in this file using an import statement: from tool_data_registry_read import ReadDataRegistryTool. After you create the file, add the contents below.

New file: tool_data_registry_read.py

(Code listing for tool_data_registry_read.py: 69 lines, not shown.)

Modify the model-metadata.yaml file

While this step isn't required to use this agent locally or in a codespace, it's important to modify the existing model-metadata.yaml file for use in an agentic playground or in production.

(Code listing for model-metadata.yaml: 14 lines, not shown.)

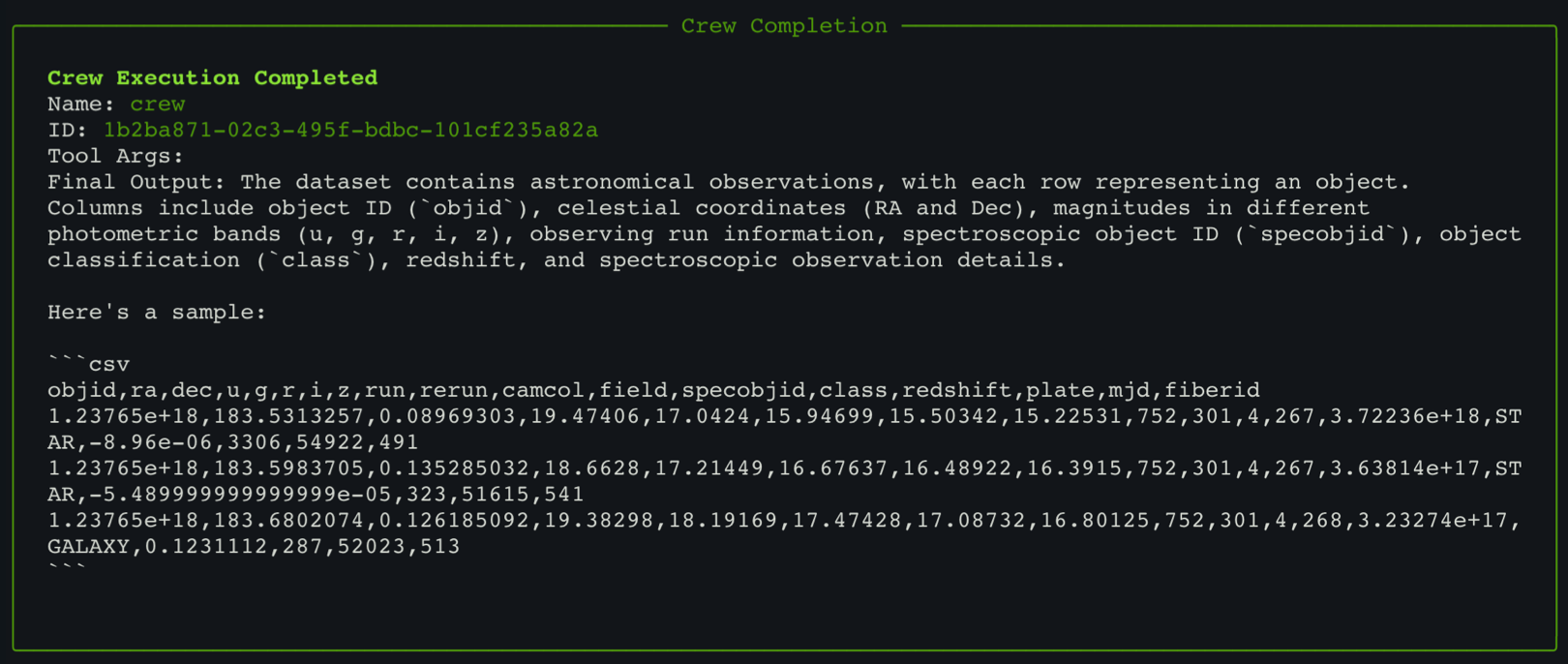

Test the modified agentic workflow

After making all necessary modifications and additions to the agentic workflow in the agent_crewai/agentic_workflow directory, test the workflow with the following command. Modify the --user_prompt argument to target a dataset present in the Data Registry.

task agent:cli START_DEV=1 -- execute --user_prompt 'Describe a space dataset.'

You can also send a structured query as a prompt. Again, modify the --user_prompt argument to target a dataset present in the Data Registry.

task agent:cli START_DEV=1 -- execute --user_prompt '{"dataset_topic":"Space", "question": "Please describe the dataset and show a sample of a few rows"}'
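The two prompt styles above can be normalized into one shape before they reach the agents. The sketch below shows one way to do that, assuming the structured prompt uses the `dataset_topic` and `question` keys from the example command. The function name `parse_user_prompt` and the default question are hypothetical, and the modified agent.py may handle prompts differently.

```python
import json
from typing import Dict

# Hypothetical fallback used when a structured prompt omits "question".
DEFAULT_QUESTION = "Please describe the dataset."


def parse_user_prompt(user_prompt: str) -> Dict[str, str]:
    """Normalize a plain-text or JSON prompt to dataset_topic/question keys."""
    try:
        data = json.loads(user_prompt)
    except json.JSONDecodeError:
        data = None  # Not JSON: treat it as a plain-text prompt below.

    if isinstance(data, dict) and "dataset_topic" in data:
        return {
            "dataset_topic": str(data["dataset_topic"]),
            "question": str(data.get("question", DEFAULT_QUESTION)),
        }
    # Plain text (or JSON without the expected key): use the full prompt for both fields.
    return {"dataset_topic": user_prompt, "question": user_prompt}
```

For example, `parse_user_prompt('{"dataset_topic":"Space"}')` returns the topic `Space` with the default question, while a plain sentence is passed through unchanged in both fields.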

Troubleshooting

If you encounter issues while testing an agentic workflow, updating the agent environments and dependencies often helps. To do this, use the task install (or task setup), task agent:install, and task agent:update commands. To list these and other available commands, run the task command.

If you see a connection error when testing, ensure you're using START_DEV=1 in the command, or start the dev server separately with task agent:dev in a different terminal window.

Next steps

After successfully testing the updated agentic workflow for searching the Data Registry and summarizing a dataset, send the workflow to Workshop to connect it to an agentic playground, or deploy the workflow.