How-to: Modify the CrewAI template with AI assistance¶

This walkthrough demonstrates how to use AI-assisted development environments, such as Cursor or Windsurf, to migrate locally developed agentic workflows into a template from the DataRobot Agent Templates repository. By leveraging your existing AI development tools with any agentic framework—such as LangGraph, CrewAI, or LlamaIndex—together with the DataRobot Agentic AI Platform, you can accelerate the iterative process of building, governing, and deploying agentic workflows into production, supported by capabilities like systematic evaluation, governance, security, tracing, and lifecycle management. While this example uses Cursor, the same principles apply to other AI coding assistants. For a simpler example that does not include AI assistance, see the custom agent integration walkthrough.

Clone the datarobot-agent-templates repository¶

To begin migrating your custom agent code, clone the datarobot-agent-templates public repository. This repository contains ready-to-use templates for building and deploying AI agents with various multi-agent frameworks. These templates streamline the process of setting up agents with minimal configuration requirements.

Run the following commands from the command line or terminal in your development environment:

git clone --branch 11.4.0 --depth 1 https://github.com/datarobot-community/datarobot-agent-templates.git

cd datarobot-agent-templates

datarobot-agent-templates version

This walkthrough uses version 11.4.0 of the datarobot-agent-templates repository. Ensure that the workspace used for this walkthrough is on that version. Newer versions may not be compatible with the code provided below.

GitHub clone URL

For more information on cloning a GitHub repository, see the GitHub documentation.

Integrate the custom agent code with AI assistance¶

When migrating a locally developed agentic workflow into the DataRobot agent template, the DataRobot Agent Templates repository accelerates development by providing boilerplate code, testing functionality, and deployment pipelines. If a template exists for your agent framework, use the existing template. DataRobot provides agentic workflow templates for CrewAI, LangGraph, and LlamaIndex. If no template exists for your framework, use the Generic Base template.

Set up the environment¶

Before migrating your custom code into a template, set up the CrewAI template to bootstrap the implementation. In the datarobot-agent-templates repository directory, open the terminal and use the command below to copy and rename the provided template environment file (.env.template). This file will contain the necessary environment variables for your agent.

cp .env.template .env

Locate and open the new .env file and configure the following variables:

DataRobot credentials in codespaces

If you are using a DataRobot codespace, remove the DATAROBOT_API_TOKEN and DATAROBOT_ENDPOINT environment variables from the file, as they already exist in the codespace environment.

- Define the DATAROBOT_API_TOKEN and DATAROBOT_ENDPOINT environment variables to enable the local agent to communicate with DataRobot.
- Provide any string to define a PULUMI_CONFIG_PASSPHRASE, which allows Pulumi to manage the state of agent assets in DataRobot.
- Comment out LLM_DEPLOYMENT_ID, since the DataRobot LLM gateway is used for this example.
- Comment out DATAROBOT_DEFAULT_EXECUTION_ENVIRONMENT to allow DataRobot to build a new environment with the necessary Python libraries for the agent workflow.

# Refer to https://docs.datarobot.com/en/docs/api/dev-learning/api-quickstart.html#create-a-datarobot-api-key

# and https://docs.datarobot.com/en/docs/api/dev-learning/api-quickstart.html#retrieve-the-api-endpoint

# Can be deleted on a DataRobot codespace

DATAROBOT_API_TOKEN=

DATAROBOT_ENDPOINT=

# Required, unless logged in to Pulumi cloud. Choose your own alphanumeric passphrase to be used for encrypting Pulumi config

PULUMI_CONFIG_PASSPHRASE=

# If empty, a new use case will be created

DATAROBOT_DEFAULT_USE_CASE=

# To create a new LLM Deployment instead of using the LLM Gateway, set this to false.

# If you are using the LLM Gateway you must enable the `ENABLE_LLM_GATEWAY` feature flag in the DataRobot UI.

USE_DATAROBOT_LLM_GATEWAY=true

# Set this to an existing LLM deployment ID if you want to use a custom LLM deployment

# instead of creating new

# LLM_DEPLOYMENT_ID=

# Specify the default execution environment for agents.

# The default agents environment is "[DataRobot] Python 3.11 GenAI Agents", you can alternatively replace

# this with the ID of an existing execution environment. If you leave this empty, a new execution environment

# will be created for each agent.

# DATAROBOT_DEFAULT_EXECUTION_ENVIRONMENT="[DataRobot] Python 3.11 GenAI Agents"

These environment variables are loaded into the agent.py file using dotenv (implemented in the Taskfile) to read the key-value pairs from the .env file.
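To illustrate what that loading step amounts to, the following is a minimal standard-library sketch that parses `.env`-style text into key-value pairs and reports which of the variables this walkthrough asks you to define are still empty. It is not the template's own mechanism (the template relies on dotenv through the Taskfile), and the helper names `parse_env_text` and `missing_required` are hypothetical, introduced only for illustration.

```python
from pathlib import Path

# Variables this walkthrough asks you to define.
# (In a DataRobot codespace, the first two already exist in the environment.)
REQUIRED_KEYS = (
    "DATAROBOT_API_TOKEN",
    "DATAROBOT_ENDPOINT",
    "PULUMI_CONFIG_PASSPHRASE",
)


def parse_env_text(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_required(values: dict[str, str]) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")  # assumed to sit in the repository root
    if env_path.exists():
        parsed = parse_env_text(env_path.read_text())
        print("Missing or empty:", missing_required(parsed))
```

Because commented-out lines are skipped, `LLM_DEPLOYMENT_ID` and `DATAROBOT_DEFAULT_EXECUTION_ENVIRONMENT` do not appear in the parsed result when they are commented out as described above.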

Run the CrewAI quickstart¶

Next, use task start to run quickstart.py and select the CrewAI template.

task start

To select the CrewAI template, press 1 and then press Enter to confirm your selection.

task start

task: [start] uv run quickstart.py

[ASCII-art banner]

--------------------------------------------------------

Quickstart for DataRobot AI Agents

--------------------------------------------------------

Checking environment setup for required pre-requisites...

All pre-requisites are installed.

You will now select an agentic framework to use for this project.

For more information on the different agentic frameworks please go to:

https://github.com/datarobot-community/datarobot-agent-templates/blob/main/docs/getting-started.md

Please select an agentic framework to use:

1. agent_crewai

2. agent_generic_base

3. agent_langgraph

4. agent_llamaindex

5. agent_nat

Enter your choice (1-5): 1

Next, press Y and then press Enter to install prerequisites and set up environments for the selected agent:

Would you like to setup the uv python environments and install pre-requisites now?

(y/n): y

Running these commands configures the environment for the agent_crewai template, removes unnecessary files, and prepares the virtual environment to install the additional libraries required to run the selected agent template.

You can refresh the installed environment at any time by running:

task install

Optionally, before customizing the agent template, run the agent without modification to test the code:

task agent:cli START_DEV=1 -- execute --user_prompt 'Hi, how are you?'

View all task commands

Before running task start, to view available tasks for the project, run the task command as shown below:

❯ task

task: Available tasks for this project:

* default: ℹ️ Show all available tasks (run `task --list-all` to see hidden tasks)

* install: Install dependencies for all agent components and infra (aliases: req, install-all)

* start: ‼️ Quickstart for DataRobot Agent Templates ‼️

After running task start and selecting a framework, to view available tasks for the project, run the task command as shown below:

❯ task

task: Available tasks for this project:

* default: ℹ️ Show all available tasks (run `task --list-all` to see hidden tasks)

* install: 🛠️ Install all dependencies for agent and infra

* agent:install: 🛠️ [agent_crewai] Install agent uv dependencies (aliases: agent:req)

* agent:add-dependency: 🛠️ [agent_crewai] Add provided packages as a new dependency to an agent

* agent:cli: 🖥️ [agent_crewai] Run the CLI with provided arguments

* agent:dev: 🔨 [agent_crewai] Run the development server

* agent:dev-stop: 🛑 [agent_crewai] Stop the development server

* agent:chainlit: 🛝 Run the Chainlit playground

* agent:create-docker-context: 🐳 [agent_crewai] Create the template for a local docker_context image

* agent:build-docker-context: 🐳 [agent_crewai] Build the Docker image

* infra:install: 🛠️ [infra] Install infra uv dependencies

* infra:build: 🔵 Deploy only playground testing resources with pulumi

* infra:deploy: 🟢 Deploy all resources with pulumi

* infra:refresh: ⚪️ Refresh and sync local pulumi state

* infra:destroy: 🔴 Teardown all deployed resources with pulumi

❯ task --list-all

task: Available tasks for this project:

* build:

* default: ℹ️ Show all available tasks (run `task --list-all` to see hidden tasks)

* deploy:

* destroy:

* install: 🛠️ Install all dependencies for agent and infra

* agent:add-dependency: 🛠️ [agent_crewai] Add provided packages as a new dependency to an agent

* agent:build-docker-context: 🐳 [agent_crewai] Build the Docker image

* agent:chainlit: 🛝 Run the Chainlit playground

* agent:cli: 🖥️ [agent_crewai] Run the CLI with provided arguments

* agent:create-docker-context: 🐳 [agent_crewai] Create the template for a local docker_context image

* agent:dev: 🔨 [agent_crewai] Run the development server

* agent:dev-stop: 🛑 [agent_crewai] Stop the development server

* agent:install: 🛠️ [agent_crewai] Install agent uv dependencies (aliases: agent:req)

* agent:lint:

* agent:lint-check:

* agent:test:

* agent:test-coverage:

* agent:update:

* infra:build: 🔵 Deploy only playground testing resources with pulumi

* infra:deploy: 🟢 Deploy all resources with pulumi

* infra:destroy: 🔴 Teardown all deployed resources with pulumi

* infra:info:

* infra:init:

* infra:install: 🛠️ [infra] Install infra uv dependencies

* infra:install-pulumi-plugin:

* infra:lint:

* infra:lint-check:

* infra:pulumi:

* infra:refresh: ⚪️ Refresh and sync local pulumi state

* infra:select:

* infra:select-env-stack:

* infra:test:

* infra:test-coverage:

In addition, to view all available agent CLI commands, run task agent:cli.

Define the context and prompt for AI-assisted coding¶

Custom agent code developed locally, outside the DataRobot agent template, can be integrated into the agent_crewai template with minimal modification. In the datarobot-agent-templates repository, locate the agent_crewai/ directory (all other template directories were removed by the task start command). In the agent_crewai/ directory, create the my_agent directory and add the following file:

Agent file: internet-research-agent.py

[Code listing: internet-research-agent.py, 203 lines]

Copy code from this walkthrough

This walkthrough requires copying large code blocks to modify the existing template. To copy the full contents of a code snippet, click Copy to clipboard in the upper-right corner of the snippet.

Next, locate the agent_crewai/agentic_workflow/ directory and review the contents. These files define the agent that will be sent to DataRobot:

agentic_workflow/

├── __init__.py # Package initialization

├── agent.py # Main agent implementation

├── config.py # Configuration management

├── crewai_event_listener.py # Event listener for pipeline interactions

├── custom.py # DataRobot integration hooks

├── mcp_client.py # MCP server integration (optional)

└── model-metadata.yaml # Agent metadata configuration

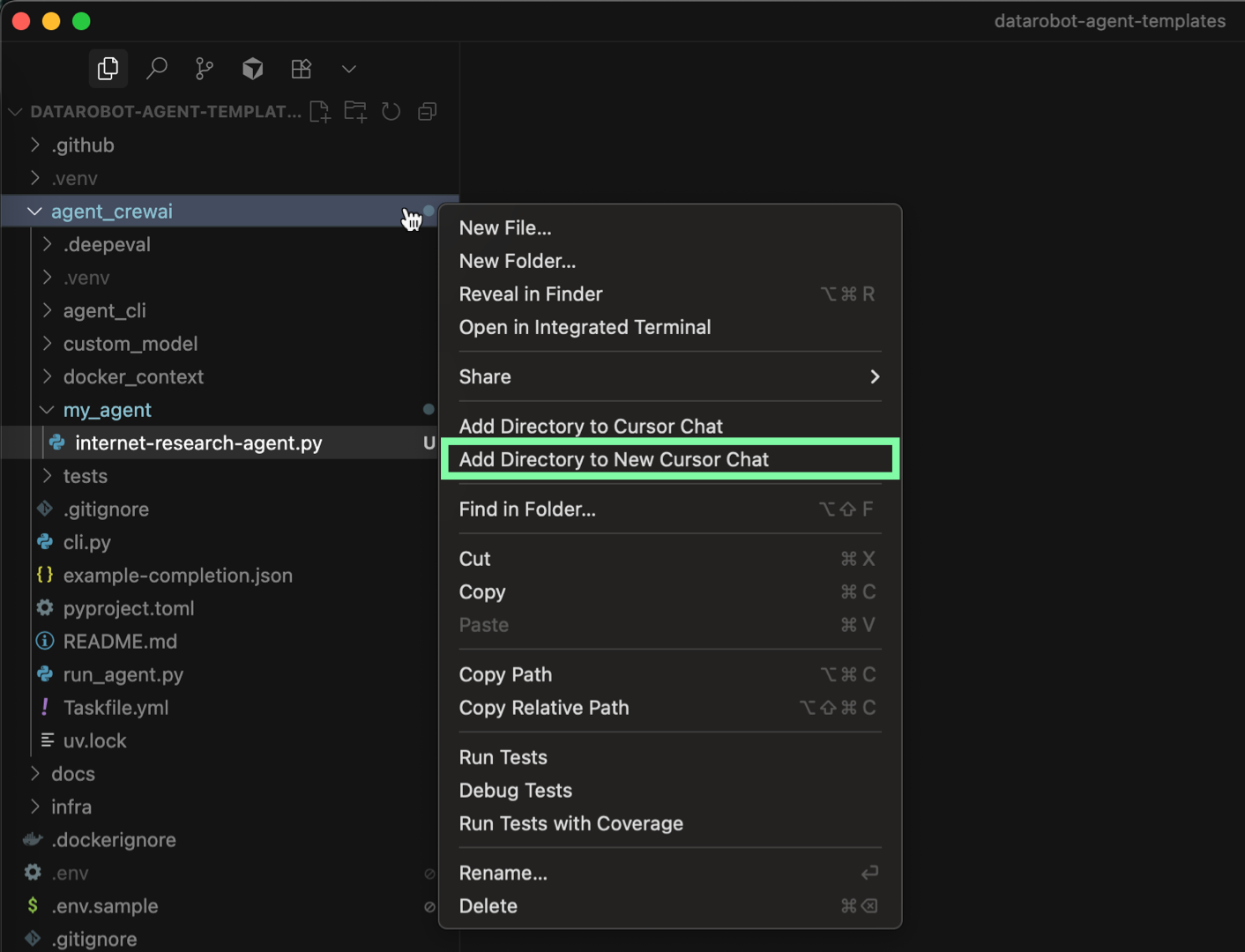
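Before starting the migration, it can help to confirm that the template directory contains the files listed above. The following is a small sketch of such a check; the file names come from the listing, while the function name `missing_workflow_files` and the relative path used in the example run are assumptions for illustration.

```python
from pathlib import Path

# File names taken from the agentic_workflow/ listing above.
EXPECTED_FILES = (
    "__init__.py",
    "agent.py",
    "config.py",
    "crewai_event_listener.py",
    "custom.py",
    "mcp_client.py",
    "model-metadata.yaml",
)


def missing_workflow_files(workflow_dir: Path) -> list[str]:
    """Return the expected file names that are not present in workflow_dir."""
    return [name for name in EXPECTED_FILES if not (workflow_dir / name).is_file()]


if __name__ == "__main__":
    # Assumes the script is run from the root of datarobot-agent-templates.
    missing = missing_workflow_files(Path("agent_crewai") / "agentic_workflow")
    print("Missing files:", missing or "none")
```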

This migration process customizes the agent code in the agent_crewai/agentic_workflow directory, primarily agent.py. To provide the context required for your AI-assisted development environment to modify the agent code, add the agent_crewai/ directory to the chat context. In Cursor, for example, right-click agent_crewai and select Add Directory to New Cursor Chat:

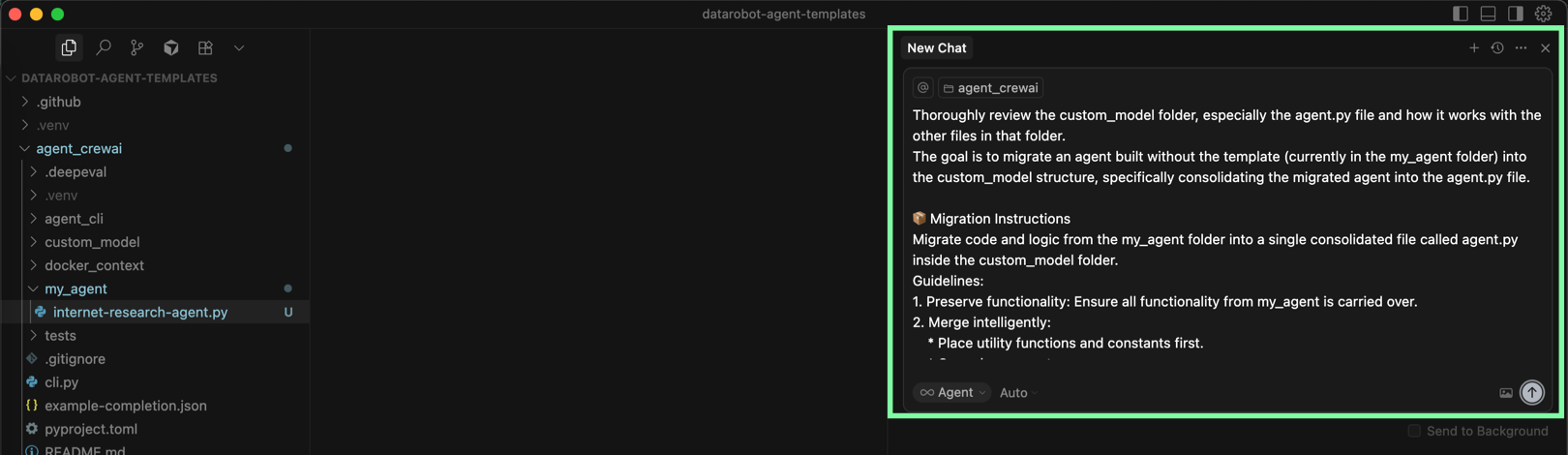

Next, in the new chat with the agent_crewai/ directory in context (including the my_agent directory containing the locally developed agent), use the following prompt to migrate the new agent to the CrewAI agent template:

Thoroughly review the agentic_workflow folder, especially the agent.py file and how it works with the other files in that folder.

The goal is to migrate an agent built without the template (currently in the my_agent folder) into the agentic_workflow structure, specifically consolidating the migrated agent into the agent.py file.

📦 Migration Instructions

Migrate code and logic from the my_agent folder into a single consolidated file called agent.py inside the agentic_workflow folder.

Guidelines:

1. Preserve functionality: Ensure all functionality from my_agent is carried over.

2. Merge intelligently:

* Place utility functions and constants first.

* Core classes next.

* Entrypoints last.

3. Simplify definitions: Remove unnecessary definitions of agents, tasks, and tools from the original agent.py in agentic_workflow.

4. Gateway integration: Ensure the migrated agent uses the LLM gateway defined in the function llm_with_datarobot_llm_gateway(self) -> LLM in the original agent.py.

5. Naming consistency: If duplicate/overlapping names exist, rename them carefully to avoid conflicts.

6. Docstring header: At the top of agent.py, include a clear docstring summarizing the agent's purpose.

7. Self-contained: After migration, agentic_workflow/agent.py should be runnable standalone without referencing the original my_agent folder.

📥 Dependency Management with uv

Any new dependencies introduced by the migration must be installed and aligned with the project’s virtual environment using uv.

Steps:

1. Navigate to the agent project directory: cd datarobot-agent-templates/agent_crewai

2. Add each new dependency: uv add <package>[extras]==<version>. For example: uv add perplexityai

3. Sync dependencies to the venv: uv sync

🔹 Docker Dependency Management (CRITICAL)

To prevent runtime errors like ModuleNotFoundError: No module named 'X' when running inside Docker:

1. Add new dependencies to agent_crewai/pyproject.toml (via uv add).

2. If you need a custom Docker context, run task create-docker-context and then add dependencies to the generated docker_context/requirements.in file.

✅ Post-Migration Test

After migration and dependency resolution, run the test to confirm the agent works:

From the root of datarobot-agent-templates, run:

task agent:cli START_DEV=1 -- execute --user_prompt "{user_prompt}"

* Replace {user_prompt} with a meaningful test prompt aligned with the agent’s purpose and task descriptions (e.g., if the agent is a research assistant, use: “Summarize the latest research on climate change adaptation strategies.”).

Allow the AI-assisted coding environment to run any required commands so that it can install requirements, make other necessary changes to the agent template, and test the resulting agent.

Test the modified agentic workflow¶

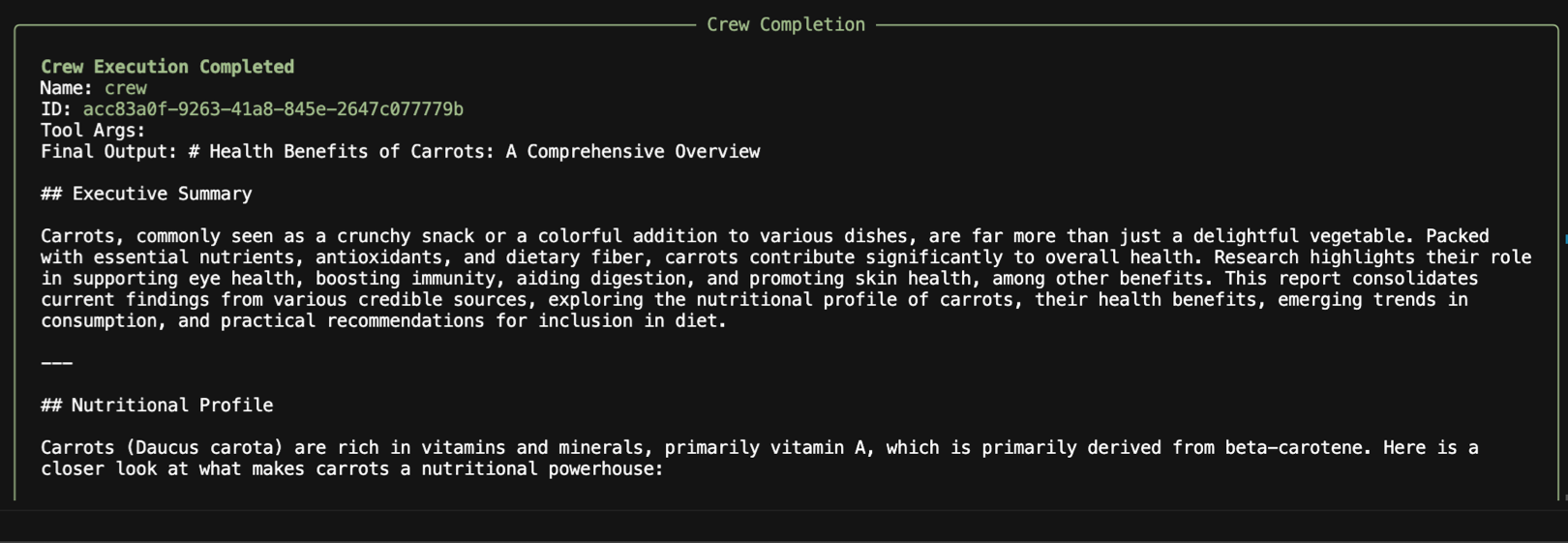

When the AI-assisted migration is complete, Cursor (or your AI-assisted coding environment) runs the post-migration test specified in the prompt. To test the workflow manually, run the following command in the terminal:

task agent:cli START_DEV=1 -- execute --user_prompt "Health benefits of carrots"
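If you want to run the same test with several prompts, the command can be assembled in Python so that each prompt is passed as a single argument without manual shell quoting. This is a sketch only: the argument order mirrors the command above, and the helper names `build_agent_cli_command` and `run_agent_cli` are hypothetical.

```python
import shlex
import subprocess


def build_agent_cli_command(user_prompt: str) -> list[str]:
    """Build the argument list for the manual test command shown above."""
    return [
        "task", "agent:cli", "START_DEV=1",
        "--", "execute", "--user_prompt", user_prompt,
    ]


def run_agent_cli(user_prompt: str) -> int:
    """Run the command from the repository root and return its exit code."""
    command = build_agent_cli_command(user_prompt)
    print("Running:", shlex.join(command))
    # check=False: return the exit code instead of raising on failure.
    return subprocess.run(command, check=False).returncode
```

Calling `run_agent_cli("Health benefits of carrots")` from the root of datarobot-agent-templates is intended to be equivalent to the manual command; it requires the `task` executable to be available on your PATH.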

Troubleshooting

If you encounter issues while testing an agentic workflow, it can be helpful to update agent environments and dependencies. To do this, you can use the task install (or task setup), task agent:install, and task agent:update commands. For more information on these commands and others, run the task command.

If you see a connection error when testing, ensure you're using START_DEV=1 in the command, or start the dev server separately with task agent:dev in a different terminal window.
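When a test fails with an error such as ModuleNotFoundError, a quick way to narrow down the cause is to check which top-level modules are importable in the active environment. The following sketch uses only the standard library; the function name `find_missing_modules` and the module names passed to it are placeholders for whatever your migrated agent.py imports.

```python
from importlib.util import find_spec


def find_missing_modules(module_names: list[str]) -> list[str]:
    """Return the top-level module names that cannot be found for import."""
    return [name for name in module_names if find_spec(name) is None]


if __name__ == "__main__":
    # Placeholder names: replace with the top-level imports of your agent.
    print("Not importable:", find_missing_modules(["json", "crewai"]))
```

Run the check with the agent's own interpreter (for example, from the agent_crewai directory with `uv run python`), since a module installed elsewhere on your machine does not help the agent's virtual environment. Note that an import name can differ from the package name you pass to `uv add`.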

Next steps¶

After successfully testing the updated agentic workflow, send the workflow to Workshop to connect it to an agentic playground or deploy the workflow.