GenAI workflow overview

This section provides a generalized discussion of the generative LLM building workflow, which can include:

- Creating and versioning vector databases.

- Creating LLM blueprints.

- Chatting with and comparing LLM blueprints.

- Applying evaluation metrics and creating compliance tests.

- Preparing LLM blueprints for deployment.

Tip

For a hands-on experience, try the GenAI how-to.

See the full documentation for information on using your own data and LLMs, working with code instead of the UI, and working with NVIDIA NIM.

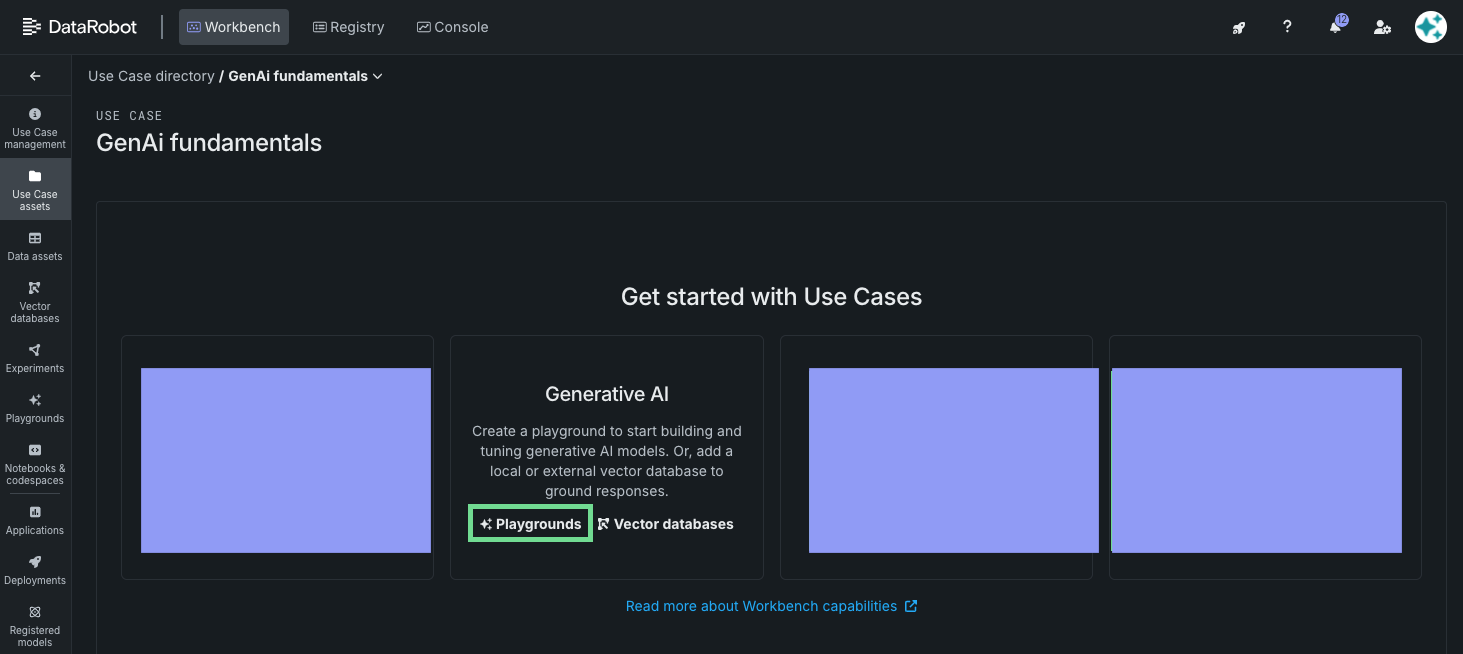

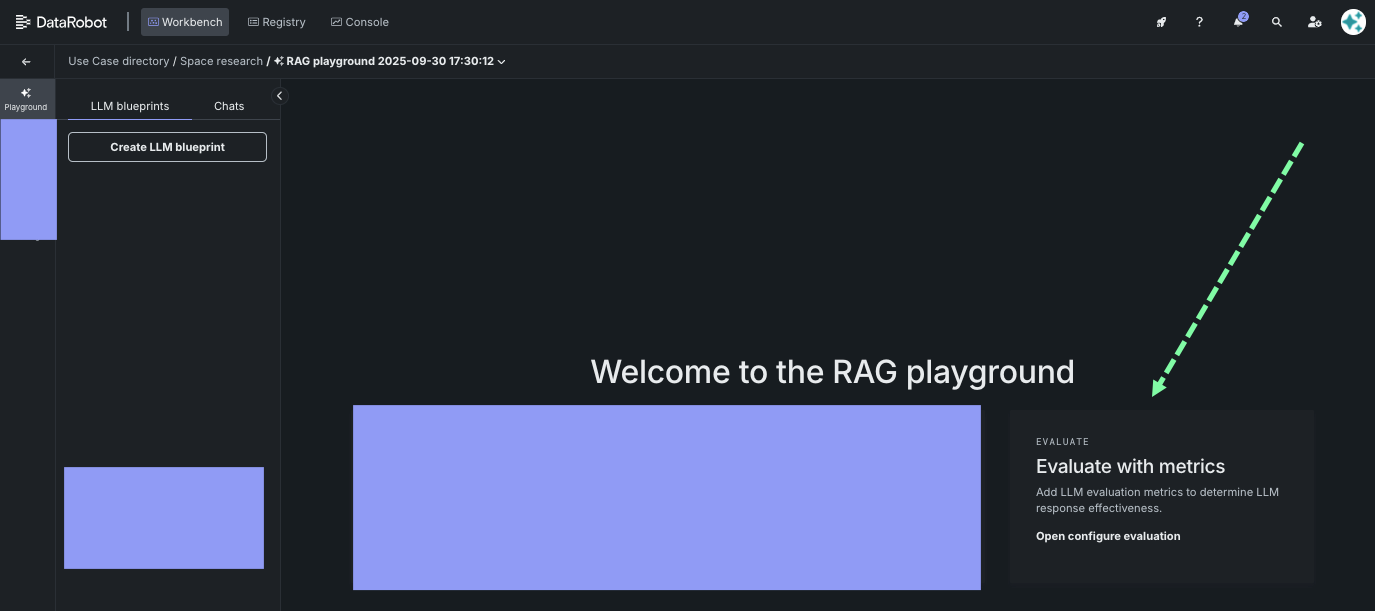

Get started

It all begins by creating a Use Case and adding a RAG playground. A playground is a dedicated LLM-focused experimentation environment within Workbench, where you can build, review, compare, evaluate, and deploy.

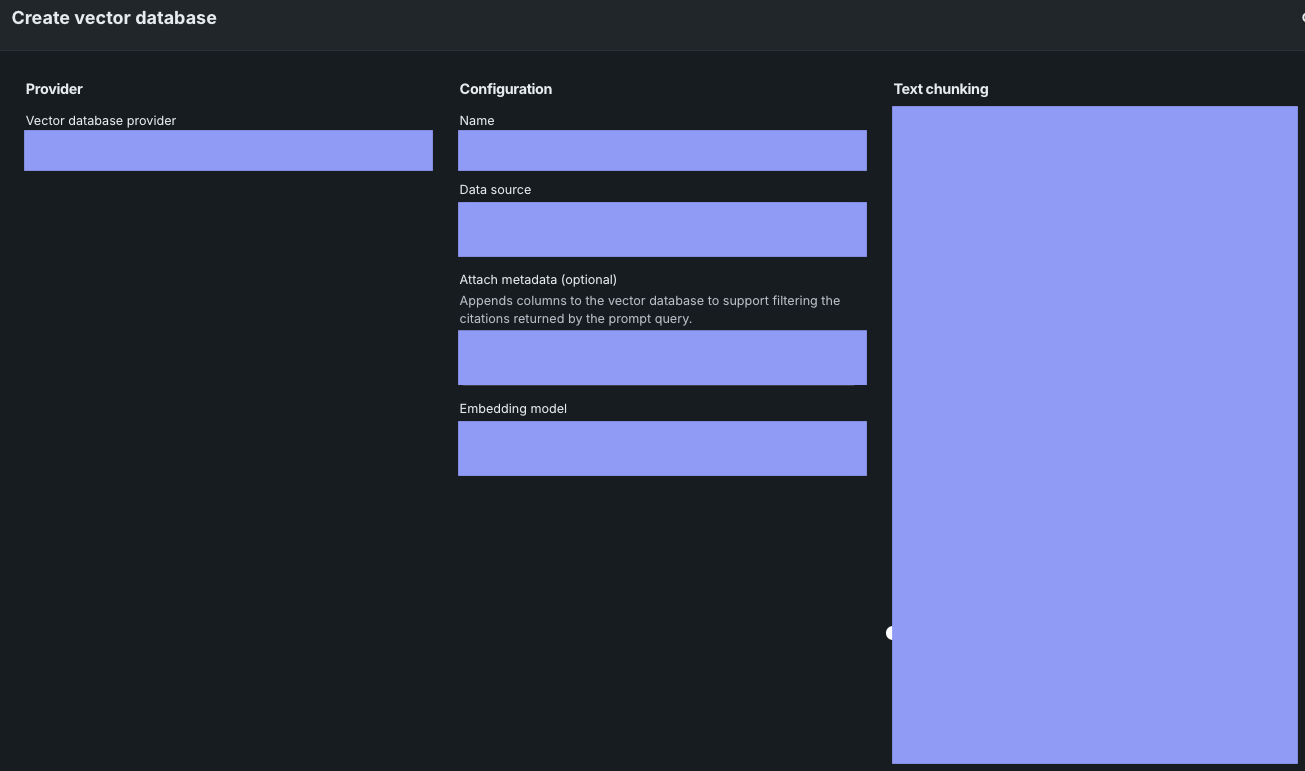

Create a vector database

Once your playground is set up, optionally add a vector database. The role of the vector database is to enrich the prompt with relevant context before it is sent to the LLM. When creating a vector database, you:

- Choose a provider.

- Add data.

- Set a basic configuration and text chunking details.
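To make the chunking and prompt-enrichment ideas concrete, here is a minimal, library-free sketch of what happens conceptually. It is not DataRobot's implementation. The helper names (`chunk_words`, `retrieve`, `build_grounded_prompt`), the `chunk_size`/`chunk_overlap` parameters, and the word-overlap scoring are all hypothetical. Word overlap stands in for the embedding similarity a real vector database would use.

```python
import re


def chunk_words(text: str, chunk_size: int = 50, chunk_overlap: int = 10) -> list[str]:
    """Split text into chunks of chunk_size words; consecutive chunks share chunk_overlap words."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("require 0 <= chunk_overlap < chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def _tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, used for the toy similarity score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Rank chunks by word overlap with the query (a stand-in for embedding similarity)."""
    query_tokens = _tokens(query)
    ranked = sorted(chunks, key=lambda c: len(query_tokens & _tokens(c)), reverse=True)
    return ranked[:top_k]


def build_grounded_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    """Prepend the retrieved context to the user's question before it is sent to the LLM."""
    context = "\n".join(retrieve(query, chunks, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = "Vector databases store embeddings of your documents. " * 3
    chunks = chunk_words(docs, chunk_size=8, chunk_overlap=2)
    print(build_grounded_prompt("What do vector databases store?", chunks, top_k=1))
```

The overlap between chunks is the design choice worth noting: it reduces the chance that a relevant sentence is split across two chunks and lost to retrieval.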

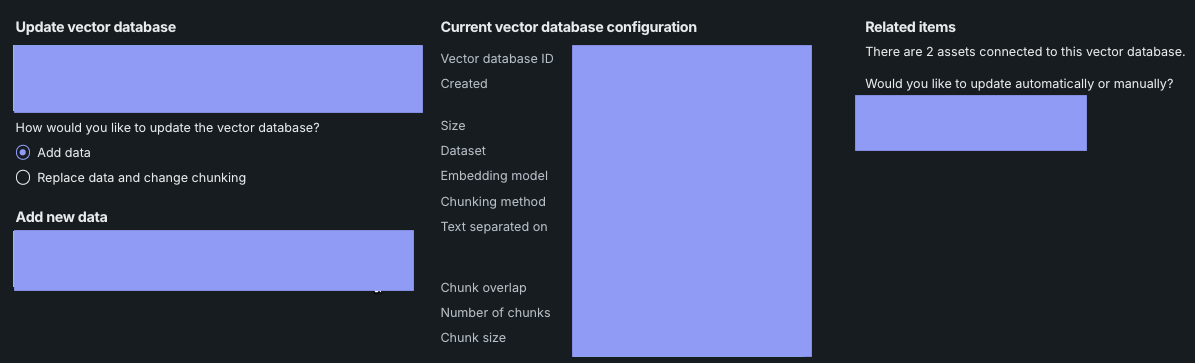

Vector databases can be versioned to make sure the most up-to-date data is available to ground LLM responses.
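One way to picture versioning is as an append-only list of immutable snapshots: adding data produces a new version rather than changing an existing one, so an LLM blueprint can either pin a known version or follow the latest. The `VersionedVectorStore` class below is a hypothetical illustration of that idea, not a DataRobot API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Version:
    """An immutable snapshot of the chunks stored at one point in time."""
    number: int
    chunks: tuple[str, ...]


@dataclass
class VersionedVectorStore:
    """Hypothetical append-only store: each data addition creates a new version."""
    versions: list[Version] = field(default_factory=list)

    def add_data(self, new_chunks: list[str]) -> Version:
        """Create a new version containing the previous chunks plus the new ones."""
        previous = self.versions[-1].chunks if self.versions else ()
        version = Version(len(self.versions) + 1, previous + tuple(new_chunks))
        self.versions.append(version)
        return version

    def latest(self) -> Version:
        if not self.versions:
            raise LookupError("no versions have been created yet")
        return self.versions[-1]

    def get(self, number: int) -> Version:
        """Look up a specific (1-based) version, e.g. one pinned by a blueprint."""
        if not 1 <= number <= len(self.versions):
            raise LookupError(f"version {number} does not exist")
        return self.versions[number - 1]


if __name__ == "__main__":
    store = VersionedVectorStore()
    store.add_data(["policy 2023"])
    store.add_data(["policy 2024"])
    print(store.latest())
```

Because old versions are never mutated, a blueprint that pinned version 1 keeps producing reproducible responses while newer blueprints pick up fresher data.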

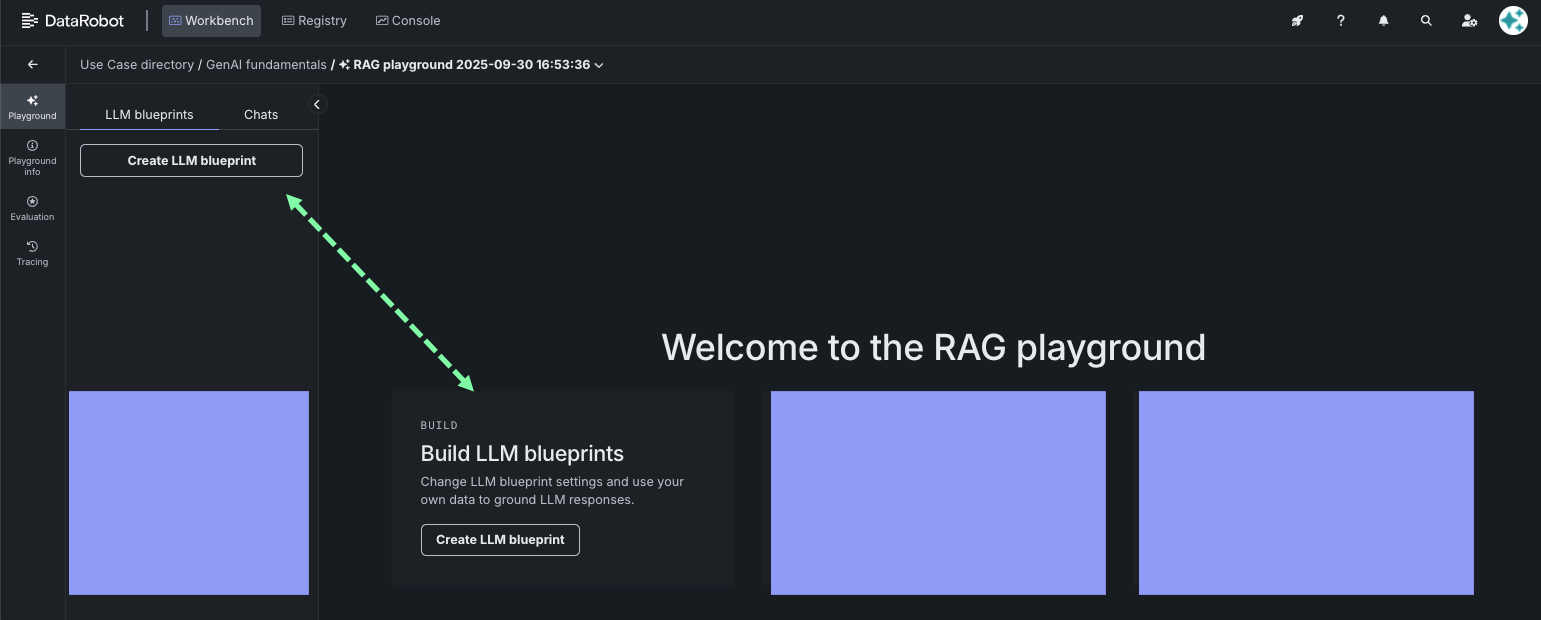

Build LLM blueprints

An LLM blueprint represents the full context needed to generate a response from an LLM, including the selected LLM, its configuration, and, optionally, a vector database and prompting strategy. The responses it produces can then be compared within the playground.
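As a mental model, an LLM blueprint can be treated as a single bundle of everything that shapes a response. The sketch below is hypothetical: the field names, default values, and the `call_llm` client signature are assumptions for illustration, not DataRobot's schema. It shows how the pieces combine into the final prompt sent to the model.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical client signature: (model_name, prompt, temperature) -> response text
LLMClient = Callable[[str, str, float], str]


@dataclass(frozen=True)
class LLMBlueprint:
    """Hypothetical bundle of everything needed to generate one response."""
    name: str
    llm: str  # placeholder model identifier, not a real DataRobot model name
    system_prompt: str = "You are a helpful assistant."
    temperature: float = 0.2
    retriever: Optional[Callable[[str], list[str]]] = None  # optional vector database lookup

    def build_prompt(self, user_prompt: str) -> str:
        """Assemble system prompt, optional retrieved context, and the user prompt."""
        parts = [self.system_prompt]
        if self.retriever is not None:
            parts.append("Context:\n" + "\n".join(self.retriever(user_prompt)))
        parts.append(f"User: {user_prompt}")
        return "\n\n".join(parts)

    def respond(self, user_prompt: str, call_llm: LLMClient) -> str:
        """Send the assembled prompt to the configured LLM via the supplied client."""
        return call_llm(self.llm, self.build_prompt(user_prompt), self.temperature)


if __name__ == "__main__":
    grounded = LLMBlueprint("grounded", "example-llm", retriever=lambda q: ["doc A"])
    print(grounded.build_prompt("What does doc A say?"))
```

Making the blueprint immutable mirrors the idea that a saved blueprint is a fixed recipe: changing any setting yields a different blueprint to compare against.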

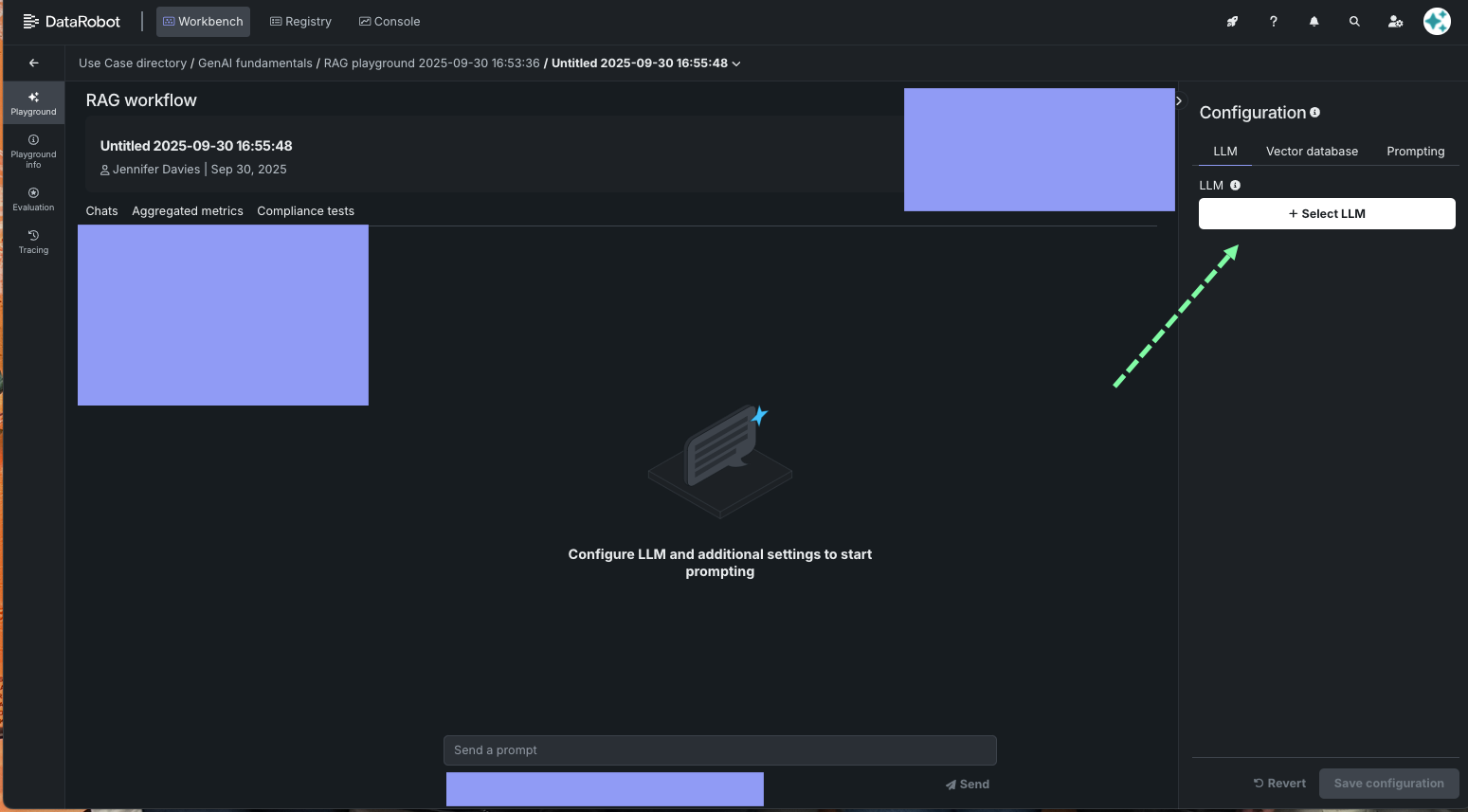

When you create an LLM blueprint, its configuration panel opens in the playground. Select an LLM to get started and then set the configuration options.

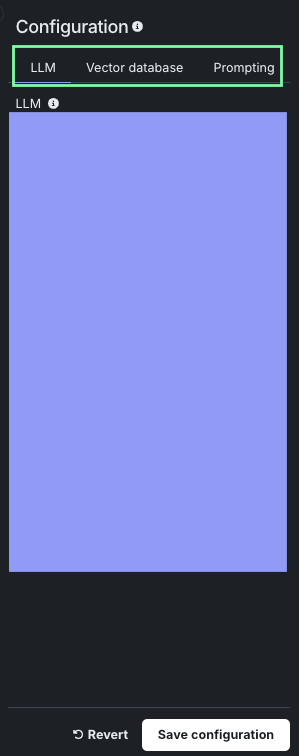

In the configuration panel, optionally add a vector database and set the prompting strategy.

After you save, the new LLM blueprint is listed on the left.

Chat and compare LLM blueprints

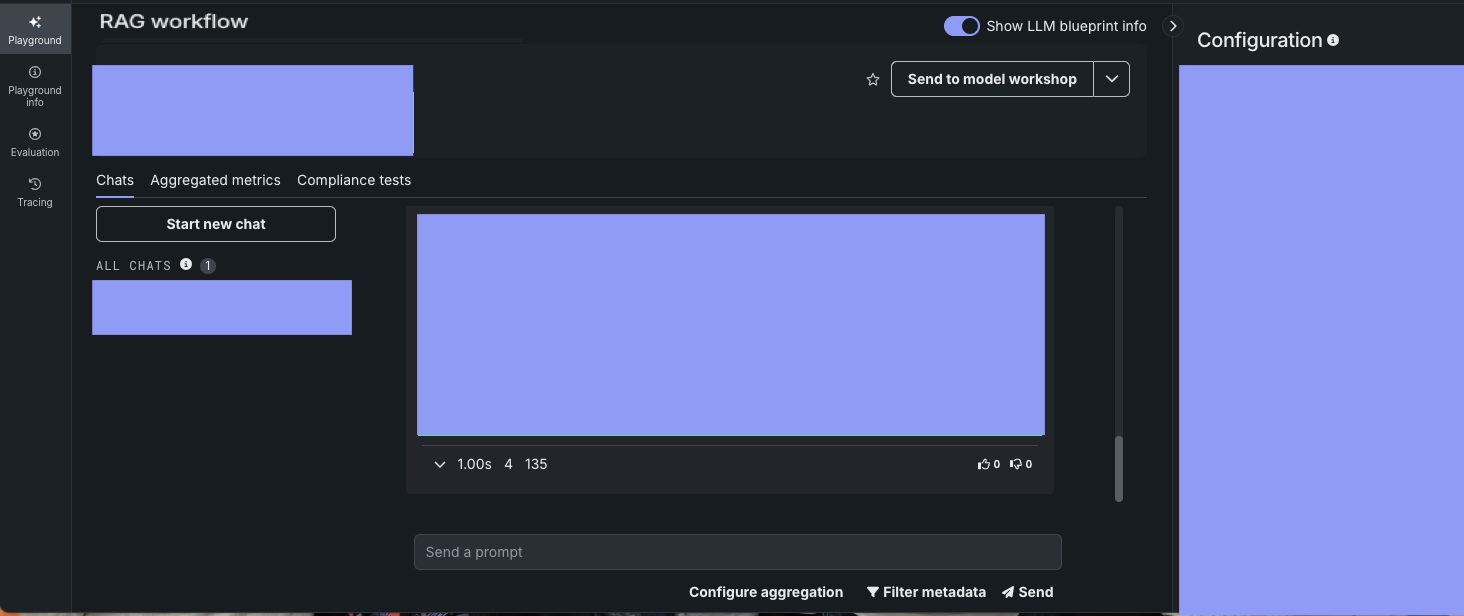

Once the LLM blueprint configuration is saved, try sending it prompts (RAG chatting) to determine whether further refinements are needed.

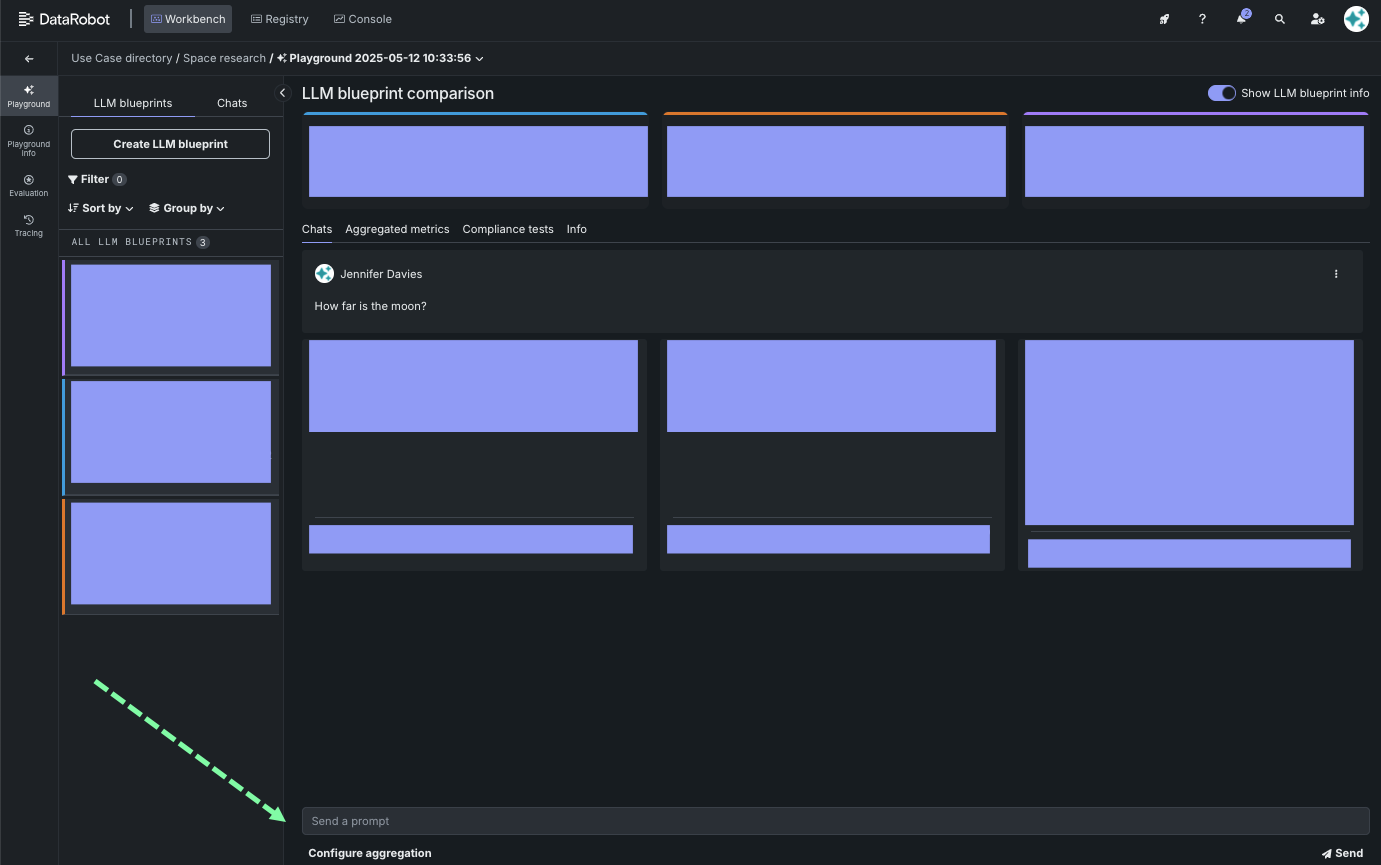

Then, add several LLM blueprints to the comparison tool to test them against the same prompt. This helps you pick the best LLM blueprint for deployment.
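Conceptually, comparison means sending one prompt to several blueprints and lining up the answers. A minimal sketch, assuming each blueprint is modeled as a plain function from prompt to response; the `compare` and `format_comparison` helpers and the stand-in blueprints are hypothetical.

```python
from typing import Callable

# Hypothetical: each blueprint is modeled as a function from prompt to response.
Blueprint = Callable[[str], str]


def compare(prompt: str, blueprints: dict[str, Blueprint]) -> dict[str, str]:
    """Send the same prompt to every blueprint and collect the responses by name."""
    return {name: blueprint(prompt) for name, blueprint in blueprints.items()}


def format_comparison(prompt: str, results: dict[str, str]) -> str:
    """Render the responses as aligned rows for side-by-side review."""
    width = max((len(name) for name in results), default=0)
    rows = [f"Prompt: {prompt}"]
    rows += [f"{name.ljust(width)} | {response}" for name, response in results.items()]
    return "\n".join(rows)


if __name__ == "__main__":
    stand_ins = {
        "terse": lambda p: "Yes.",
        "verbose": lambda p: f"Regarding '{p}': yes, with some caveats.",
    }
    print(format_comparison("Is RAG useful?", compare("Is RAG useful?", stand_ins)))
```

Holding the prompt fixed is the point of the exercise: any difference in the output can then be attributed to the blueprint configuration rather than to the input.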

See the best practices for prompt engineering when chatting and doing comparisons.

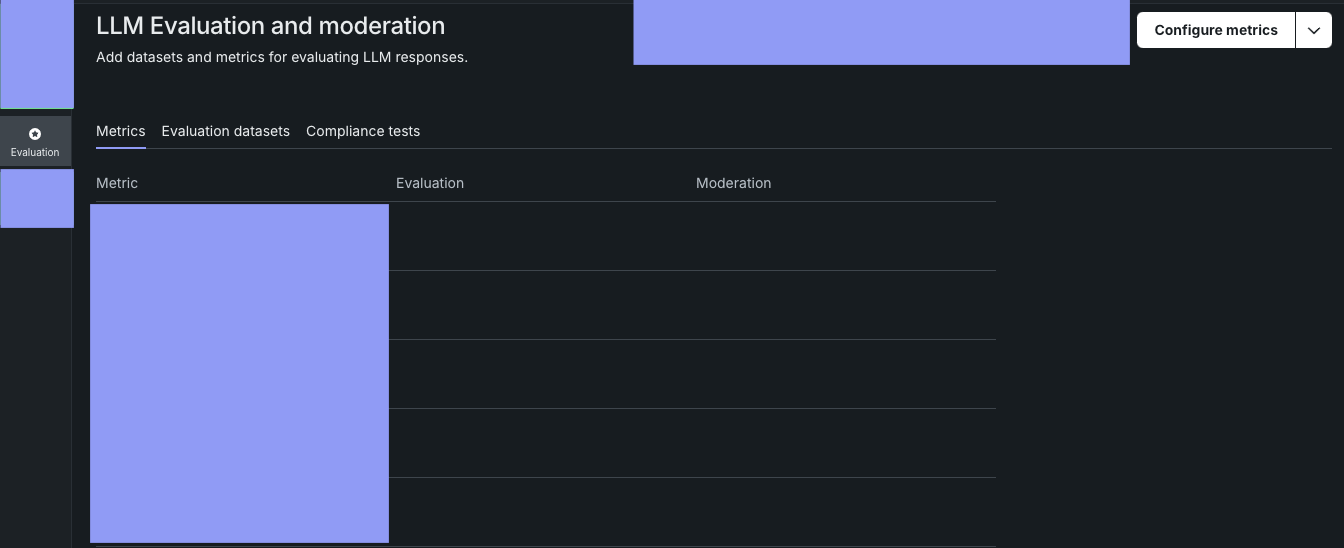

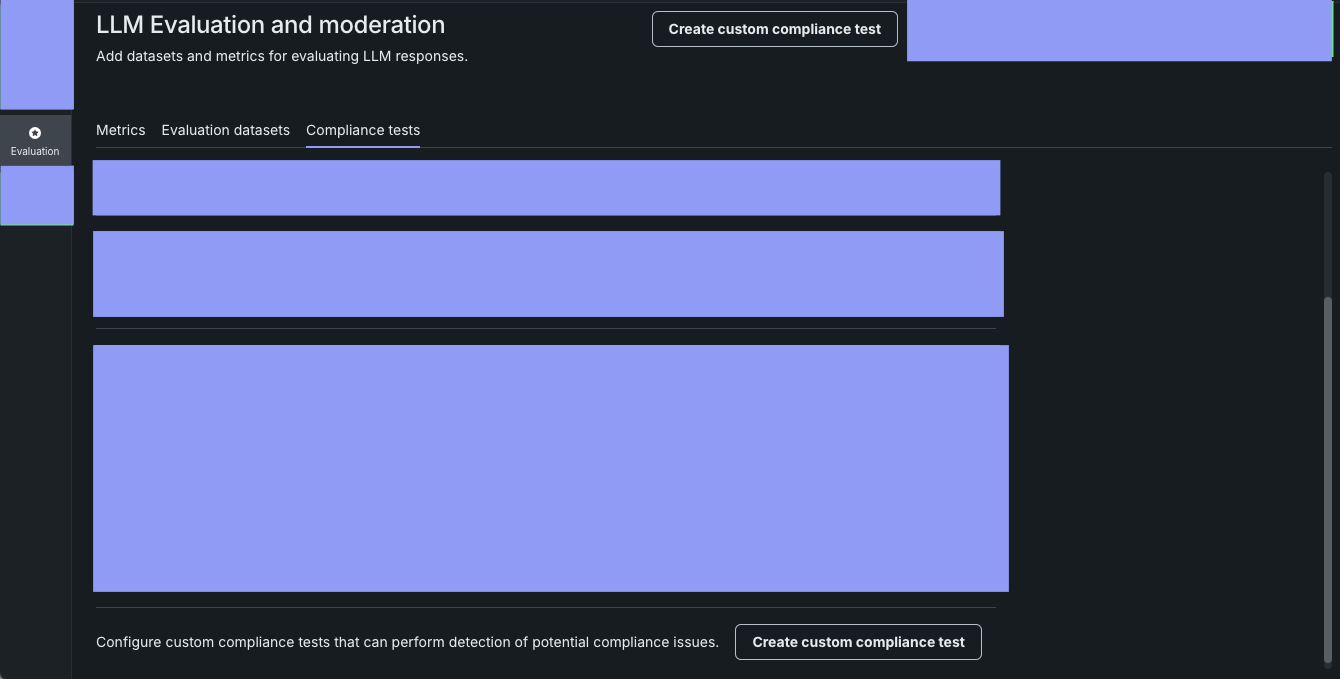

Use LLM evaluation tools

Using metrics and compliance tests, DataRobot monitors how models are used in production and intervenes to block bad outputs.
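Blocking can be pictured as a guard that runs each response through one or more checks and substitutes a fallback message when any check fails. The banned-term check and the `guarded_response` helper below are hypothetical stand-ins, not DataRobot's guard configuration; they only illustrate the control flow.

```python
from typing import Callable

# Hypothetical check type: returns True when the output is acceptable.
Check = Callable[[str], bool]


def no_banned_terms(banned: set[str]) -> Check:
    """Build a check that fails if any banned word appears in the output."""
    def check(text: str) -> bool:
        return set(text.lower().split()).isdisjoint(banned)
    return check


def guarded_response(response: str, checks: list[Check],
                     fallback: str = "This response was blocked by a guard.") -> str:
    """Return the response only if every check passes; otherwise return the fallback."""
    return response if all(check(response) for check in checks) else fallback


if __name__ == "__main__":
    checks = [no_banned_terms({"password"})]
    print(guarded_response("Here is the summary you asked for.", checks))
    print(guarded_response("The password is 1234", checks))
```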

Add metrics before or after configuring LLM blueprints. You can also:

- Add evaluation datasets, or generate a synthetic dataset from within DataRobot, to systematically assess how well the model performs its intended tasks.

- Combine evaluation metrics and an evaluation dataset to automate the detection of compliance issues through test prompt scenarios. Use DataRobot-supplied evaluations or create your own.
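The combination of metrics and an evaluation dataset can be sketched as follows: the dataset pairs prompts with expected answers, a metric scores each response, and the compliance test passes only if the mean score clears a threshold. The token-F1 metric, the 0.8 threshold, and the `run_compliance_test` helper are assumptions chosen for illustration; DataRobot's own evaluations and thresholds may differ.

```python
from collections import Counter
from typing import Callable


def token_f1(prediction: str, reference: str) -> float:
    """Token-level F1 between a response and a reference answer (bag-of-words overlap)."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def run_compliance_test(blueprint: Callable[[str], str],
                        dataset: list[tuple[str, str]],
                        threshold: float = 0.8) -> tuple[bool, float]:
    """Score every (prompt, expected) pair; pass if the mean score meets the threshold."""
    scores = [token_f1(blueprint(prompt), expected) for prompt, expected in dataset]
    mean_score = sum(scores) / len(scores) if scores else 0.0
    return mean_score >= threshold, mean_score


if __name__ == "__main__":
    dataset = [("Capital of France?", "Paris"), ("2 + 2?", "4")]
    answers = dict(dataset)
    print(run_compliance_test(lambda p: answers[p], dataset))
    print(run_compliance_test(lambda p: "I do not know", dataset))
```

Returning the mean score alongside the pass/fail flag makes it easier to see how close a failing blueprint came to the threshold.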

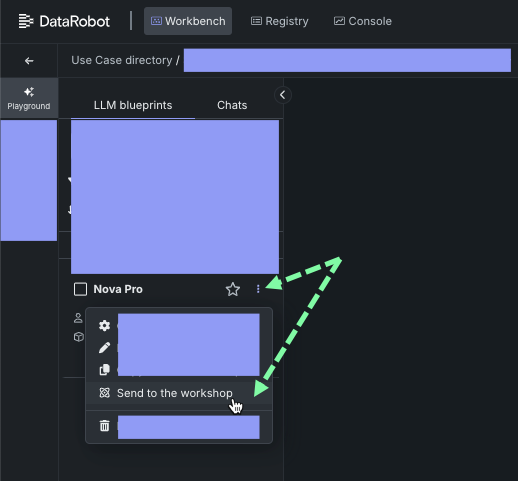

Deploy an LLM

Once you are satisfied with the LLM blueprint, you can send it to the Registry's workshop from the playground.

The Registry workshop is where you test the LLM custom model and ultimately deploy it to Console, a centralized hub for monitoring and model management.