How-to: Apply app templates to DataRobot deployments

Talk to my Data Agent lets you upload raw data from your preferred data source and ask a question, after which the agent recommends business analyses. Instead of using an external LLM, DataRobot allows you to build and deploy a RAG model backed by a dataset containing industry- and company-specific definitions of terms and jargon. Doing so creates an AI agent that has the background knowledge of another member of your team and applies that context to the responses it infers from your questions and data.

In this walkthrough, you will:

- Create a Use Case and add a .zip file of industry terms.

- Create a vector database and set up a RAG playground.

- Register and deploy the RAG LLM model.

- Launch the Talk to my Data Agent template in a codespace.

- Customize the application template using the RAG model's deployment ID.

- Build the Pulumi stack.

Assets for download

To follow this walkthrough, download the .zip file linked below.

Download industry jargon dataset

The .zip file above contains a list of common finance terms and definitions, generated using AI.

Prerequisites

For this walkthrough, you need:

- Access to GenAI and MLOps functionality in DataRobot

- DataRobot API token

- DataRobot Endpoint

- .zip file of industry-specific terms and definitions

- A serverless prediction environment

1. Create a Use Case and upload data

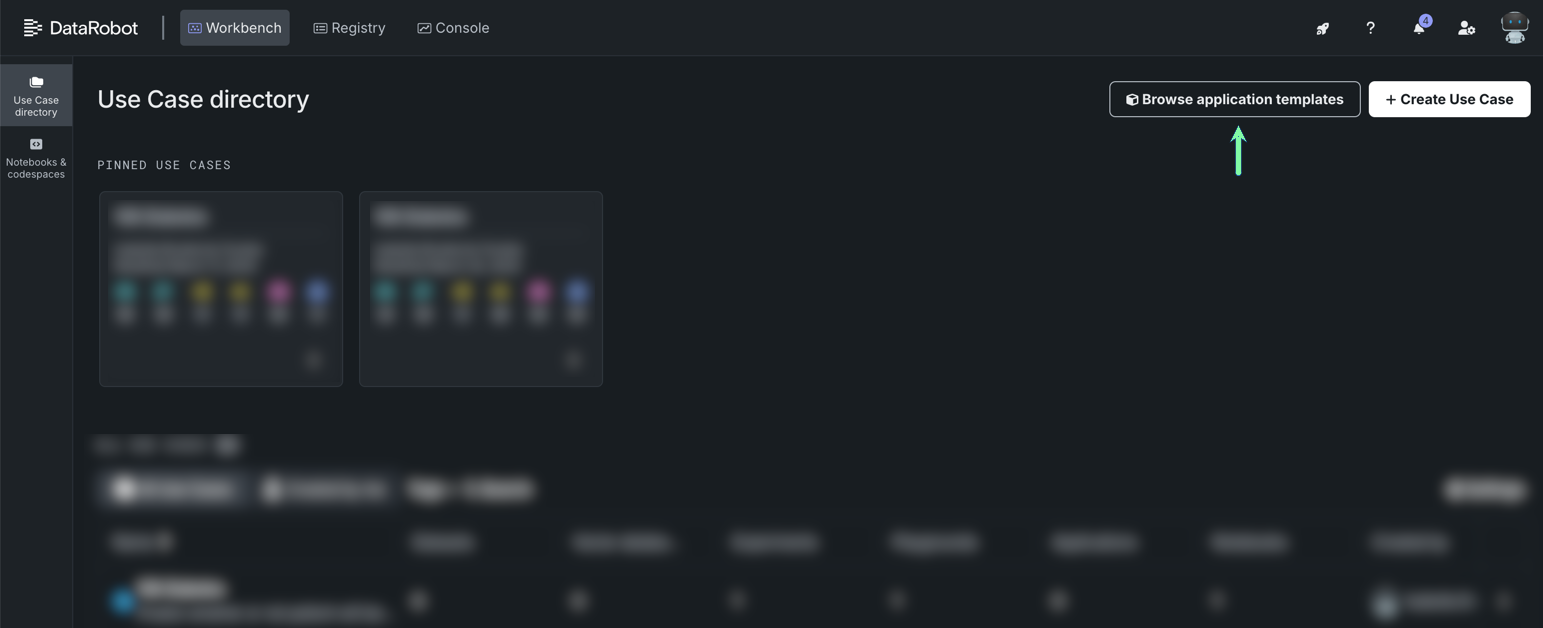

From Workbench, in the Use Case directory, click + Create Use Case. Before proceeding, you can give your Use Case a more descriptive name.

In the left-hand navigation, click the Data assets tile and click Upload file. Then, select the .zip file saved to your computer.
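Before uploading, it can help to confirm that the archive is intact and contains the files you expect. The following is a minimal sketch using only Python's standard library. It is not part of the DataRobot workflow, and the local path in the usage section is an assumption about where you saved the download.

```python
import zipfile
from pathlib import Path


def summarize_archive(zip_path):
    """Return (name, size_in_bytes) for every file in the archive, skipping directories."""
    with zipfile.ZipFile(zip_path) as archive:
        # testzip() returns the name of the first corrupt member, or None if all are fine.
        bad_member = archive.testzip()
        if bad_member is not None:
            raise ValueError(f"Corrupt member in archive: {bad_member}")
        return [
            (info.filename, info.file_size)
            for info in archive.infolist()
            if not info.is_dir()
        ]


if __name__ == "__main__":
    # Hypothetical local path; point this at wherever you saved the download.
    path = Path("finance_knowledge_md.md.zip")
    if path.exists():
        for name, size in summarize_archive(path):
            print(f"{size:>8}  {name}")
```

An empty listing or a raised error suggests the download should be repeated before you upload the file.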

2. Create the vector database

Once the dataset is finished registering, in the left-hand navigation, click the Vector databases tile and then Create vector database.

In the resulting window, populate the fields as follows:

| | Field | Selection |
|---|---|---|
| 1 | Vector database provider | DataRobot |
| 2 | Name | A descriptive name (in this example, financial_jargon). |
| 3 | Data source | finance_knowledge_md.md.zip (the .zip registered in the previous step). |

For the rest of the fields (blurred in the above image), use the default settings. Then, click Create vector database. Note that building the vector database may take a minute or two.

For more information, see Vector databases.

3. Create the RAG playground

Once the vector database is finished building, open the Actions menu and select Create playground from latest version.

In the playground, select the Vector database tab in the right-hand pane, open the Vector database version dropdown, and select the database with the Latest badge.

On the Prompting tab, enter the following in the System prompt field: You are an expert financial analyst who is providing guidance on how to navigate data for analysis.

On the LLM tab, open the LLM dropdown and select Azure OpenAI GPT-4o.

Click Save configuration, and then add a descriptive name—in this case, ttmd-finance-terms.

In the playground, click Send to the workshop. Then, on the Vector database tab, choose a prediction environment and click Send to the workshop.

For more information, see Playground overview.

4. Register and deploy the model from the workshop

To register the RAG model, open Registry and select the Workshop tile. Select the model you sent over from the playground (ttmd-finance-terms).

Without changing the default settings, click Register a model. In the resulting window, click Register a model again. Once registration is complete, click Deploy model.

Without changing the default settings, click Deploy model. A window appears—click Return to deployments to open Console and monitor the progress of the deployment.

Open the deployment (ttmd-finance-terms), and on the Overview tab, copy the Deployment ID. This ID will be used in the application template.
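Copying an ID by hand can pick up stray whitespace or a truncated value. As a small guard, the sketch below normalizes the copied string and checks its shape. It assumes deployment IDs are 24-character hexadecimal strings; treat that as an assumption and adjust the pattern if your IDs look different. The function name is hypothetical.

```python
import re

# Assumption: DataRobot IDs are 24-character hexadecimal strings.
ID_PATTERN = re.compile(r"[0-9a-fA-F]{24}")


def clean_deployment_id(raw):
    """Strip surrounding whitespace and confirm the value looks like a deployment ID."""
    candidate = raw.strip()
    if not ID_PATTERN.fullmatch(candidate):
        raise ValueError(f"Unexpected deployment ID format: {candidate!r}")
    return candidate
```

Running the copied value through a check like this before pasting it into the template configuration catches the most common copy-and-paste mistakes early.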

5. Launch Talk to my Data Agent template

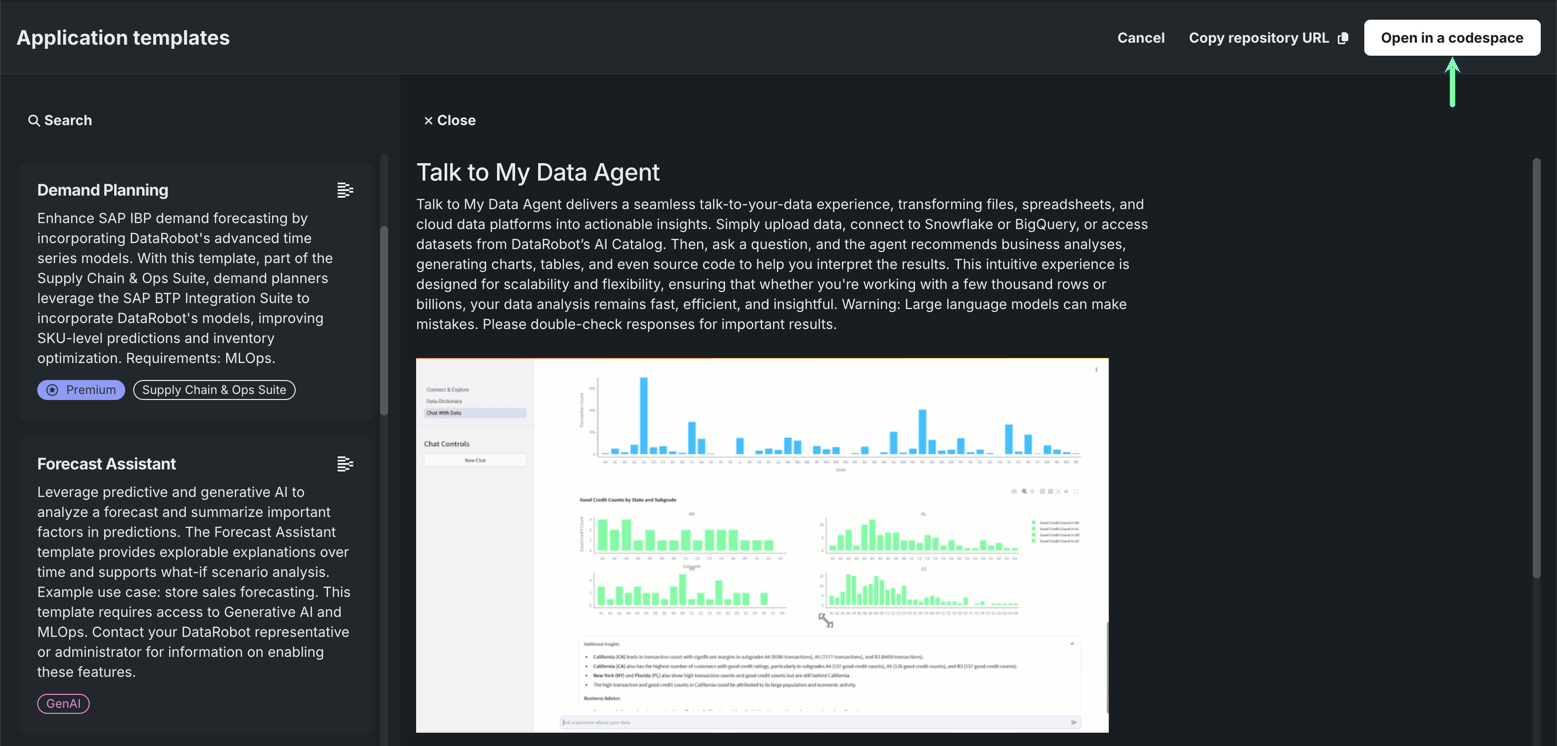

From Workbench, in the Use Case directory, click Browse application templates.

Select Talk to my Data Agent and click Open in a codespace in the upper-right corner.

This walkthrough focuses on working with application templates in a codespace; however, you can click Copy repository URL and paste the URL in your browser to open the template in GitHub.

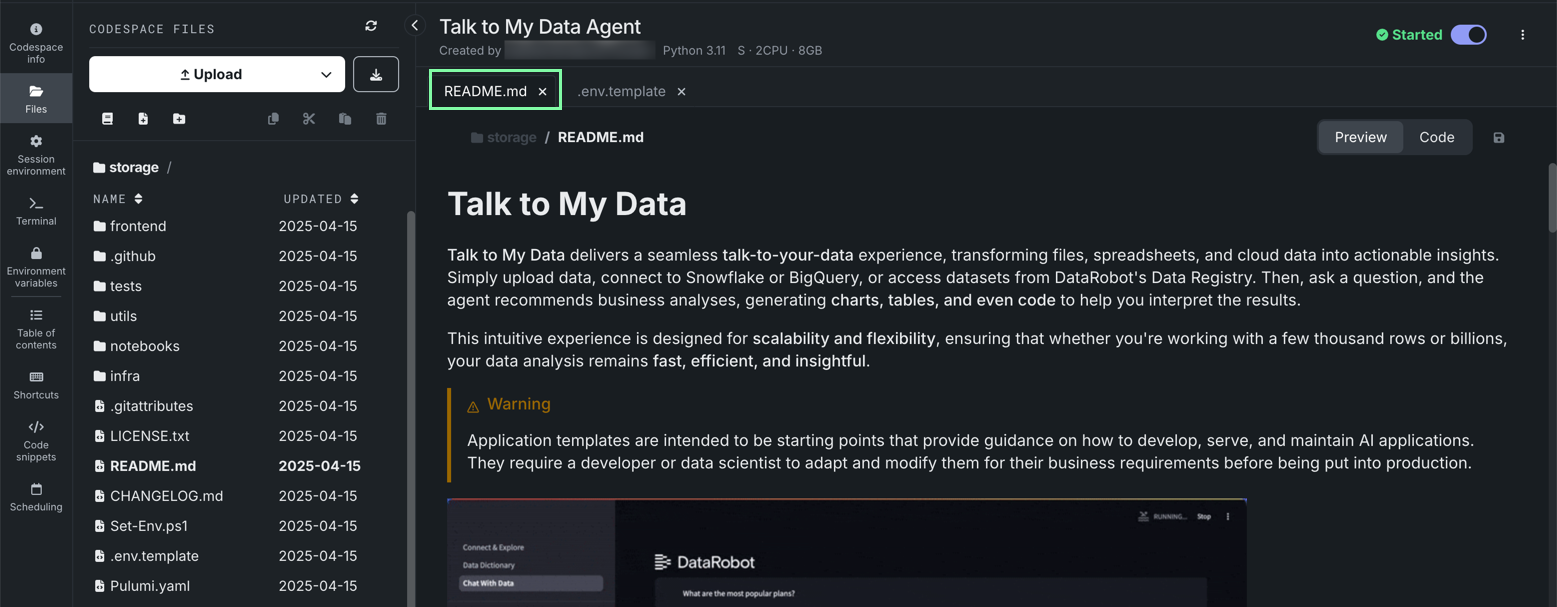

DataRobot opens and begins initializing a codespace. Once the session starts, the template files appear on the left and the README opens in the center. To learn more about the codespace interface, see Codespace sessions.

Tip

DataRobot automatically creates a Use Case, so you can access this codespace (and any resulting assets) from the Use Case directory in the future.

6. Configure application template and add the LLM deployment

Follow the instructions included in the README file.

In the .env file, accessed from the file browser on the left, the following fields are required:

- DATAROBOT_API_TOKEN: Retrieved from User settings > API keys and tools in DataRobot.

- DATAROBOT_ENDPOINT: Retrieved from User settings > API keys and tools in DataRobot.

- PULUMI_CONFIG_PASSPHRASE: A self-selected alphanumeric passphrase.

- USE_DATAROBOT_LLM_GATEWAY: Set to true.

- TEXTGEN_DEPLOYMENT_ID: The deployment ID previously copied.

Comment out all other parameters by adding # to the beginning of the line.
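To double-check the file before building the stack, you could run a quick script like the one below. It is a minimal sketch, not part of the template: the parser handles only simple KEY=VALUE lines (no `export` prefixes or multi-line values), and the helper names are hypothetical. The required keys are the five fields listed in this step.

```python
from pathlib import Path

REQUIRED_KEYS = (
    "DATAROBOT_API_TOKEN",
    "DATAROBOT_ENDPOINT",
    "PULUMI_CONFIG_PASSPHRASE",
    "USE_DATAROBOT_LLM_GATEWAY",
    "TEXTGEN_DEPLOYMENT_ID",
)


def parse_env(text):
    """Parse KEY=VALUE lines, ignoring blanks and lines commented out with #."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(values):
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        missing = missing_keys(parse_env(env_file.read_text()))
        print("Missing or empty:", ", ".join(missing) if missing else "none")
```

Run it from the directory that contains the .env file. Any key it reports should be filled in before you continue.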

7. Execute the Pulumi stack and open the application

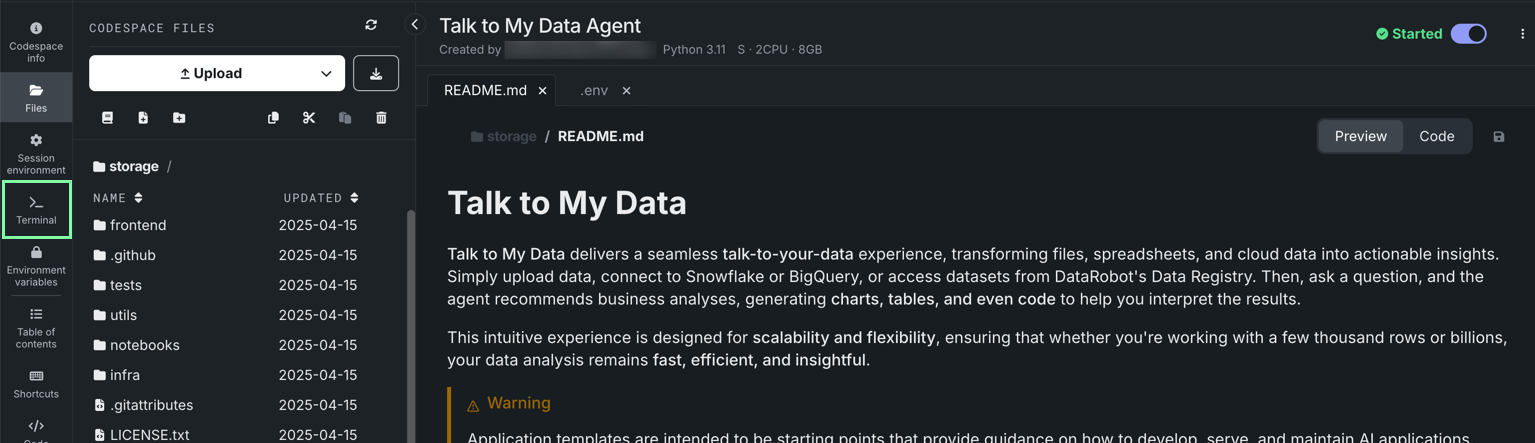

Click the Terminal tile in the left panel.

In the resulting terminal pane, run python quickstart.py YOUR_PROJECT_NAME, replacing YOUR_PROJECT_NAME with a unique name. Then, press Enter.

Executing the Pulumi stack can take several minutes. Once complete, DataRobot provides a URL at the bottom of the results in the terminal. To view the deployed application, copy and paste the URL into your browser.
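If you save the terminal output to a file or capture it in a script, the application URL can be pulled out programmatically. The sketch below is only an illustration: the exact output format is not specified here, so it assumes the application URL is the last http(s) URL printed. The function name is hypothetical.

```python
import re

# Matches http(s) URLs up to the next whitespace, quote, or angle bracket.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def last_url(output):
    """Return the last http(s) URL found in the text, or None if there is none."""
    matches = URL_PATTERN.findall(output)
    # Trim trailing punctuation that often follows a URL in log lines.
    return matches[-1].rstrip(".,)") if matches else None
```

If the stack prints other URLs after the application link, this heuristic returns the wrong one, so confirm the result against the terminal output.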

Tip

DataRobot also creates an application in Registry. To access this application again, you can navigate to Registry > Applications.