DataRobot AI Platform overview¶

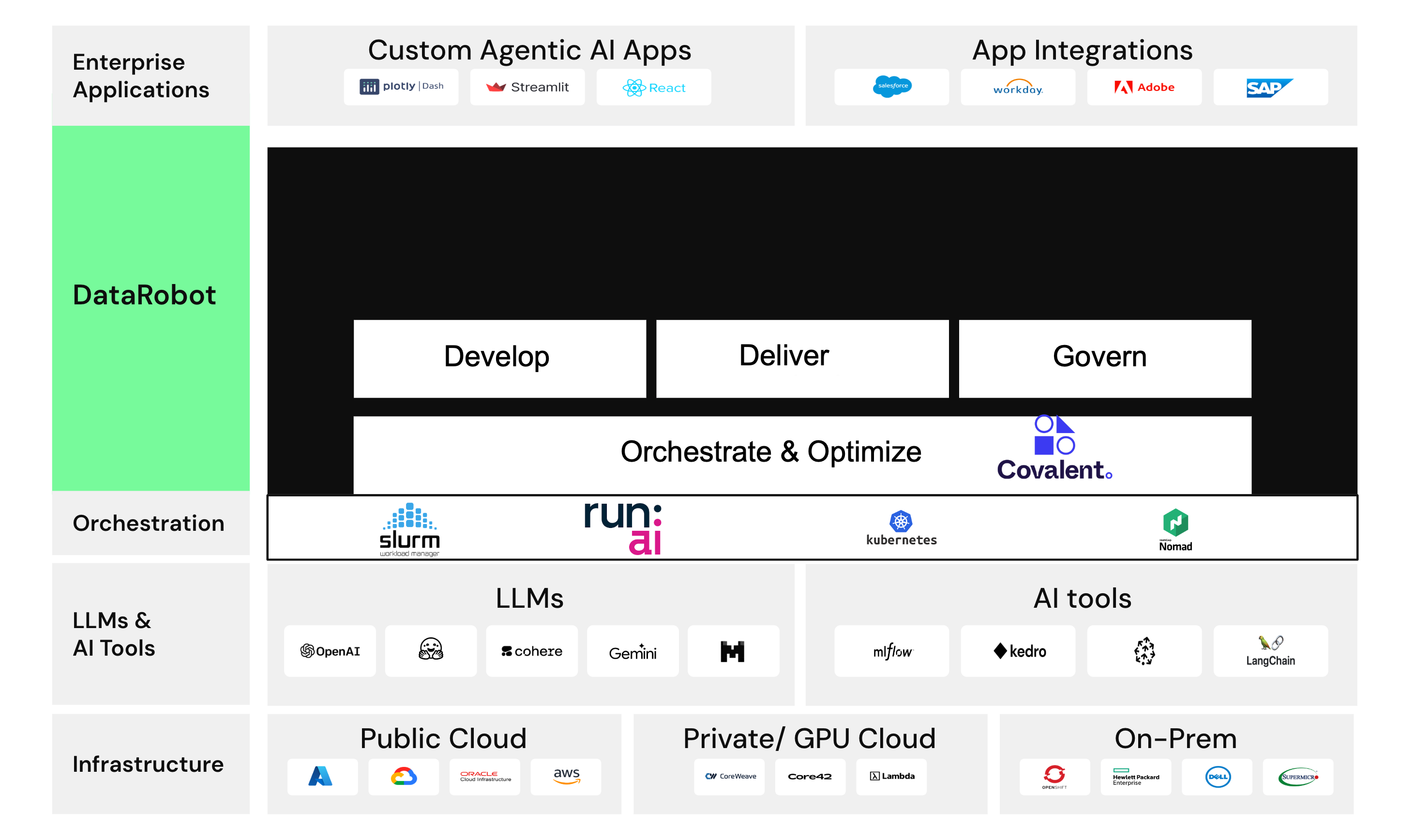

The DataRobot AI Platform, organized by stages in the AI lifecycle, provides a unified experience for developing, delivering, and governing enterprise AI solutions.

It is available on managed SaaS, virtual private cloud, or self-managed infrastructure.

Develop¶

Building great AI solutions, whether generative models, predictive models, or agentic AI, requires a lot of experimentation. Use Workbench to experiment quickly, compare results across experiments, and organize all your experiment assets in an intuitive Use Case container.

Deliver¶

Use Registry to create deployment-ready model packages and generate compliance documentation for enterprise governance.

Registry ensures that all your AI assets are documented and under version control. With test results and metadata stored alongside each AI asset, you can deploy models to production with confidence, regardless of model origin—whether DataRobot models, user-defined models, or external models.

Govern¶

Use Console to view the operating status of each deployed model.

As your organization becomes more AI-driven, you’ll have tens or even hundreds of task-specific models. Console provides a centralized hub for observing the performance of these models, allowing you to configure numerous automated intervention and notification options to keep things running smoothly.

One-click deployments¶

But where do deployments fit into the structure above? The deployment process is your model's seamless transition from Registry to Console. Once the model is registered and tested in Registry, you’ll have the option to deploy it with a single click.

Once you click to deploy, DataRobot’s automation creates an API endpoint for your model in your selected prediction environment and configures all observability and monitoring.

All four of these deployment options are supported:

- A DataRobot model to a DataRobot serverless or dedicated prediction server.

- A DataRobot model to an external prediction server.

- A custom model to a DataRobot serverless or dedicated prediction server.

- A custom model to an external prediction server.
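The four options above are every pairing of two model origins with two prediction server types. As a minimal sketch (plain Python, not a DataRobot API; the variable and function names are hypothetical and used only for illustration), the combinations can be enumerated like this:

```python
from itertools import product

# Labels are taken from the list above; the names below are illustrative only
# and do not correspond to any DataRobot API.
MODEL_ORIGINS = ("DataRobot model", "custom model")
PREDICTION_SERVERS = (
    "DataRobot serverless or dedicated prediction server",
    "external prediction server",
)


def deployment_options():
    """Return every (model origin, prediction server) pairing."""
    return list(product(MODEL_ORIGINS, PREDICTION_SERVERS))


# Print one line per supported combination.
for origin, server in deployment_options():
    print(f"{origin} -> {server}")
```

Thinking of the options as a two-by-two grid makes it easier to see that the choice of model origin is independent of the choice of prediction environment.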

Generative AI¶

DataRobot's generative AI offering builds on DataRobot's predictive AI experience to enable you to bring your favorite libraries, choose your LLMs, and integrate third-party tools. You can embed or deploy AI wherever it will drive value for your business, and leverage built-in governance for each asset in the pipeline.

Inside DataRobot there is very little distinction between generative and predictive AI. However, you will find that many tutorials and examples are organized along these lines.

- Predictive AI includes time series, classification, regression, and unsupervised machine learning such as anomaly detection and clustering.

- Generative AI (GenAI) includes text and image generation using foundation models.

Applications¶

DataRobot offers various approaches for building applications that allow you to share machine learning projects: custom applications, application templates, and no-code applications.

- Custom applications are a simple method for building and running custom code.

- Customizable application templates assist you by programmatically generating DataRobot resources that support your use case.

- No-code applications let you use core DataRobot services without having to build and evaluate models.

Experiment with the Talk to my Data Agent application template

Which experience should you choose?¶

When working in DataRobot, you have two interface choices:

- Log into the platform via web browser and work with the graphical user interface (UI).

- Access the platform programmatically with the REST API or Python client packages.

If you're leaning towards using DataRobot programmatically, it's recommended that you explore the workflows in the UI first. DataRobot is committed to full accessibility in both interfaces, so you are not locked into a single choice. Know that you can:

- Flexibly switch between code and UI at any time.

- Seamlessly collaborate with other users who are working with a different option.

- Use any development environment of your choice when working in code.

NextGen¶

An intuitive UI-based product composed of Workbench for experiment-based iterative workflows, Registry for model evolution tracking and centralized management of versioned models, and Console for monitoring and managing deployed models. NextGen provides a complete AI lifecycle platform with broad interoperability and end-to-end capabilities for ML experimentation and production. It is also the gateway for creating GenAI experiments, Notebooks, and applications.

Try a basic predictive walkthrough

Code¶

A programmatic alternative for accessing DataRobot using the REST API or Python client packages.
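To give a feel for the programmatic route, here is a minimal sketch that builds an authenticated REST request using only the Python standard library. The endpoint URL, the `projects` route, the bearer-token header, and the `DATAROBOT_API_TOKEN` variable name are assumptions made for illustration; check the API reference for your installation before relying on them. The helper names `build_request` and `fetch_json` are hypothetical.

```python
import json
import os
import urllib.request

# Assumption: API root for managed SaaS; self-managed or VPC installs
# would use their own host name instead.
DEFAULT_ENDPOINT = "https://app.datarobot.com/api/v2"


def build_request(path, token, endpoint=DEFAULT_ENDPOINT):
    """Build (but do not send) an authenticated GET request for an API route."""
    url = f"{endpoint.rstrip('/')}/{path.strip('/')}/"
    return urllib.request.Request(
        url,
        headers={
            # Assumption: the API accepts a bearer token in this header.
            "Authorization": f"Bearer {token}",
            "Accept": "application/json",
        },
        method="GET",
    )


def fetch_json(request):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Read the token from the environment rather than hard-coding it.
# The variable name is an assumption; a placeholder is used if it is unset.
token = os.environ.get("DATAROBOT_API_TOKEN", "YOUR_API_TOKEN")
request = build_request("projects", token)
print(request.get_method(), request.full_url)
# To actually call the API, pass the request to fetch_json(request).
```

The sketch separates building the request from sending it, so the URL and headers can be inspected without network access. In practice, the Python client packages wrap these details for you.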

Where to get help¶

Help is everywhere you look:

- Onboarding resources provided in Get started are a small subset of all the available content. Try exploring the introductory tutorials and labs in this section before moving into the general reference sections.

- Email DataRobot support to ask a question or report a problem. Existing customers can also visit the Customer Support Portal.

- Your designated Customer Success Manager and/or Applied AI Expert are available to offer consultative advice, share best practices, and get you on the path to AI value with DataRobot.

Next steps¶

So what's next? The best way to learn DataRobot is hands-on. We recommend taking an hour to complete these two suggested exercises.

Or, for more overview, watch this rapid tour video. It demonstrates:

- Five major data types: numeric, categorical, text, geospatial, and images.

- Nine major problem types—classification, regression, clustering, multilabel, anomaly detection, forecasting, time series clustering, time series anomaly detection, and generative AI.

- More than 40 modeling techniques that are specific to each problem type.

Each quick experiment demo was built with DataRobot's automation and results in a fully deployable machine learning pipeline.