NVIDIA AI Enterprise integration¶

Premium

The use of NVIDIA Inference Microservices (NIM) in DataRobot requires access to premium features for GenAI experimentation and GPU inference. Contact your DataRobot representative or administrator for information on enabling the required features.

NVIDIA AI Enterprise and DataRobot provide a pre-built AI stack solution, designed to integrate with your organization's existing DataRobot infrastructure, providing access to robust evaluation, governance, and monitoring features. This integration includes a comprehensive array of tools for end-to-end AI orchestration, accelerating your organization's data science pipelines to rapidly deploy production-grade AI applications on NVIDIA GPUs in DataRobot Serverless Compute.

In DataRobot, create custom AI applications tailored to your organization's needs by selecting NVIDIA Inference Microservices (NVIDIA NIM) from a gallery of AI applications and agents. NVIDIA NIM provides pre-built and pre-configured microservices within NVIDIA AI Enterprise, designed to accelerate the deployment of generative AI across enterprises.

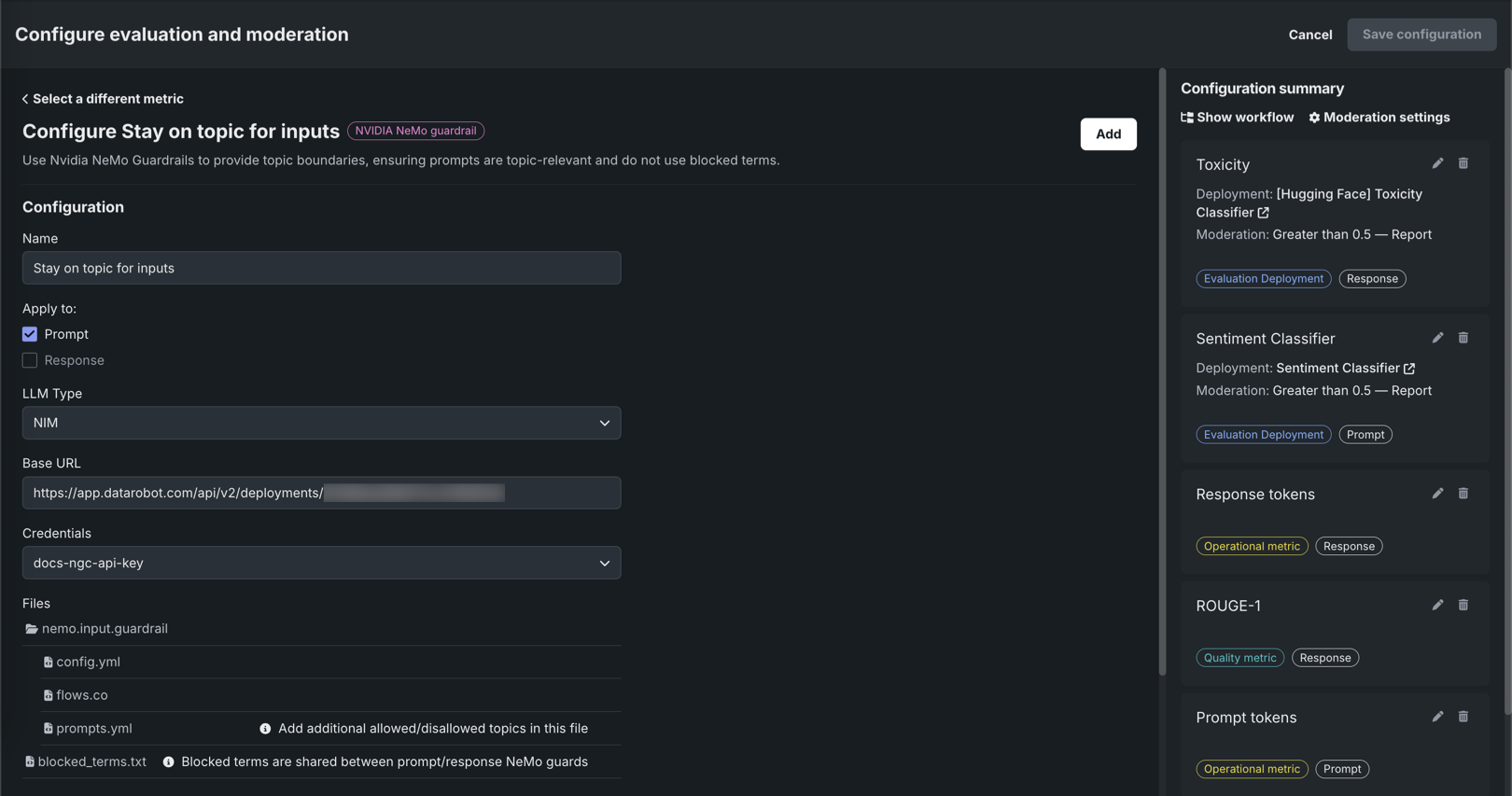

The DataRobot moderation framework provides out-of-the-box guards, allowing you to customize your applications with simple rules, code, or models to ensure GenAI applications perform to your organization's standards. NVIDIA NeMo Guardrails are tightly integrated into DataRobot, providing an easy way to build state-of-the-art guardrails into your application.

For more information on the capabilities provided by NVIDIA AI Enterprise and DataRobot, review the documentation listed below, or read the workflow summary on this page.

| Task | Description |

|---|---|

| Create an inference endpoint for NVIDIA NIM | Register and deploy with NVIDIA NIM to create inference endpoints accessible through code or the DataRobot UI. |

| Evaluate a text generation NVIDIA NIM in the playground | Add a deployed text generation NVIDIA NIM to a blueprint in the playground to access an array of comparison and evaluation tools. |

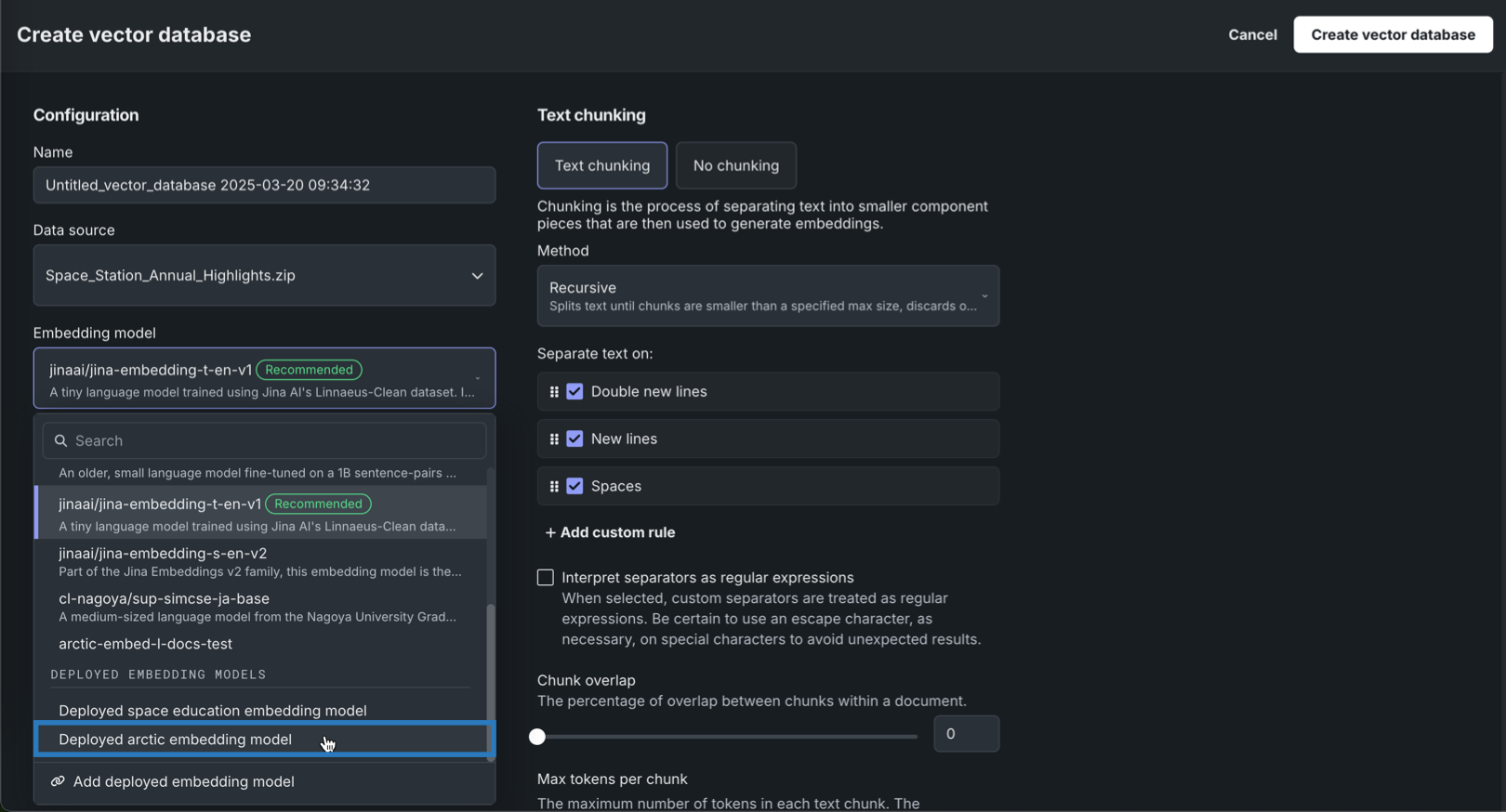

| Use an embedding NVIDIA NIM to create a vector database | Add a registered or deployed embedding NVIDIA NIM to a Use Case with a vector database to enrich prompts in the playground with relevant context before they are sent to the LLM. |

| Use NVIDIA NeMo Guardrails in a moderation framework to secure your application | Connect NVIDIA NeMo Guardrails to deployed text generation models to guard against off-topic discussions, unsafe content, and jailbreaking attempts. |

| Use a text generation NVIDIA NIM in an application template | Customize application templates from DataRobot to use a registered or deployed NVIDIA NIM text generation model. |

Create an inference endpoint for NVIDIA NIM¶

The NVIDIA AI Enterprise integration with DataRobot starts in Registry, where you can import NIM containers from the NVIDIA AI Enterprise catalog. The resulting registered model is optimized for deployment to Console and is compatible with the DataRobot monitoring and governance framework.

NVIDIA NIM provides optimized foundational models you can add to a playground in Workbench for evaluation and inclusion in agentic blueprints, embedding models used to create vector databases, and NVIDIA NeMo Guardrails used in the DataRobot moderation framework to secure your agentic application.

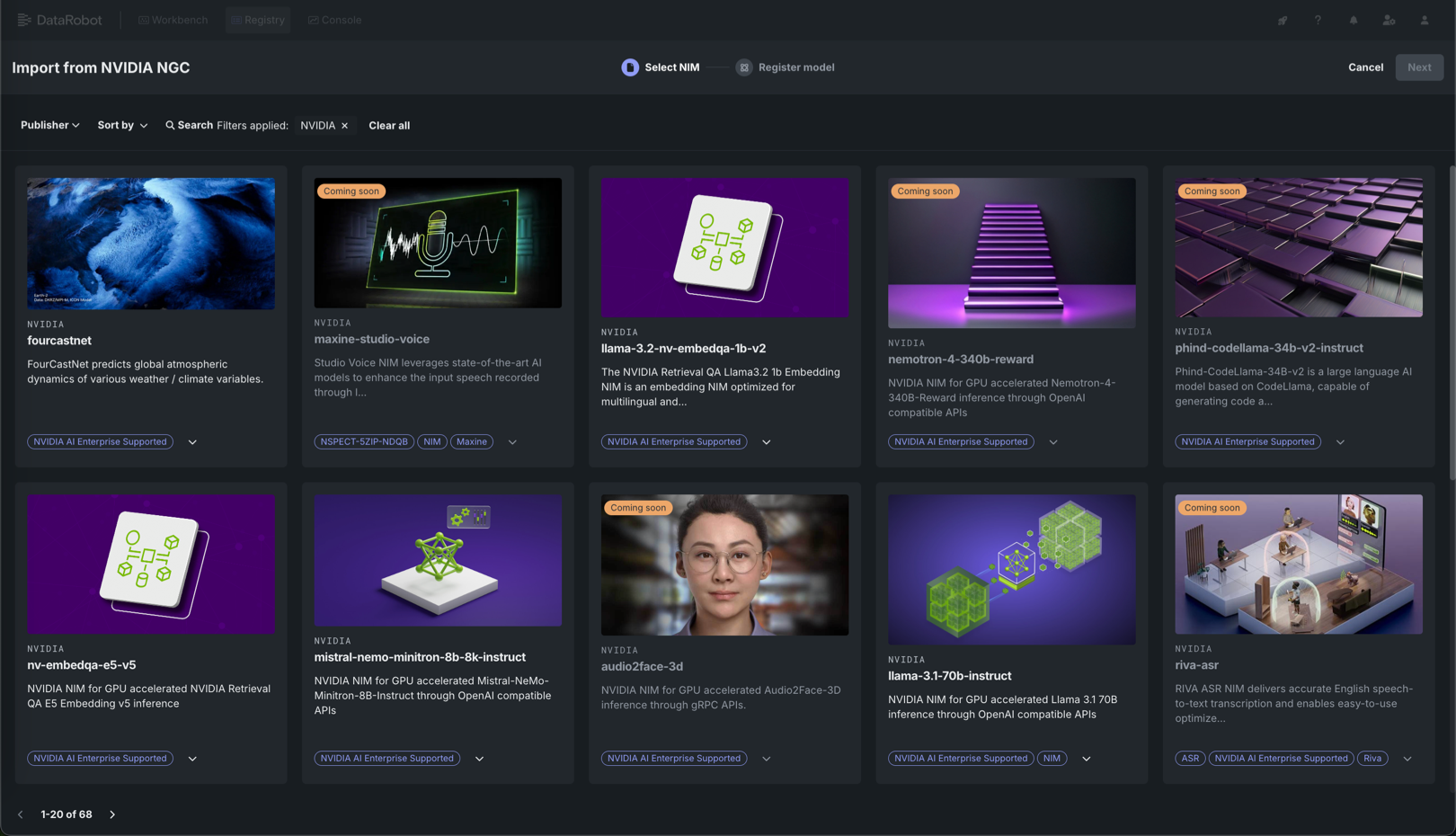

On the Models tab in Registry, register NVIDIA NIM models from the NVIDIA GPU Cloud (NGC) gallery, selecting the model name and performance profile and reviewing the information provided on the model card.

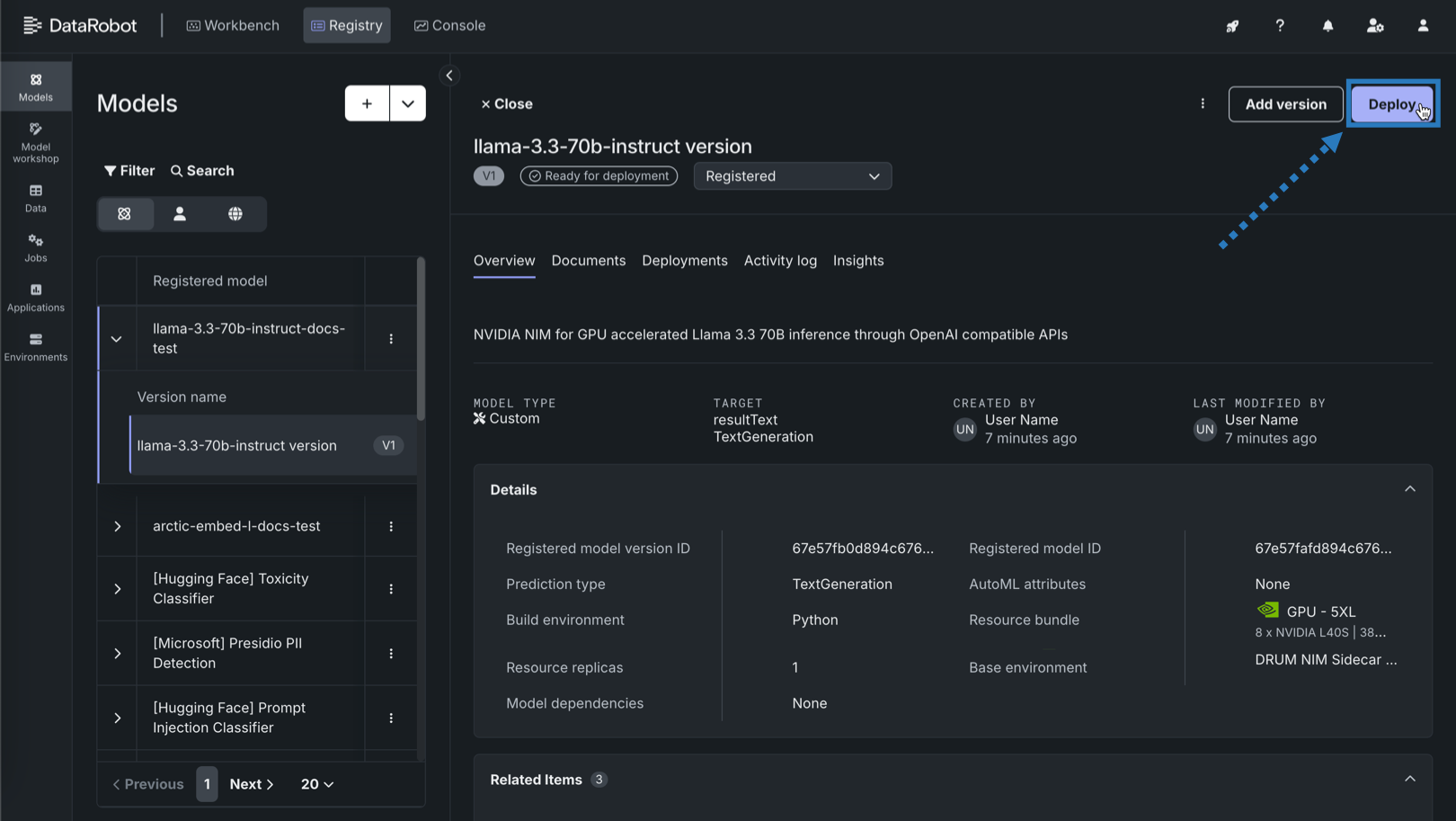

After the model is registered, deploy it to a DataRobot Serverless prediction environment. To deploy a registered model to a DataRobot Serverless environment, on the Models tab, locate and click the registered NIM, and then click the version to deploy. Then in the registered model version, you can review the version information and click Deploy.

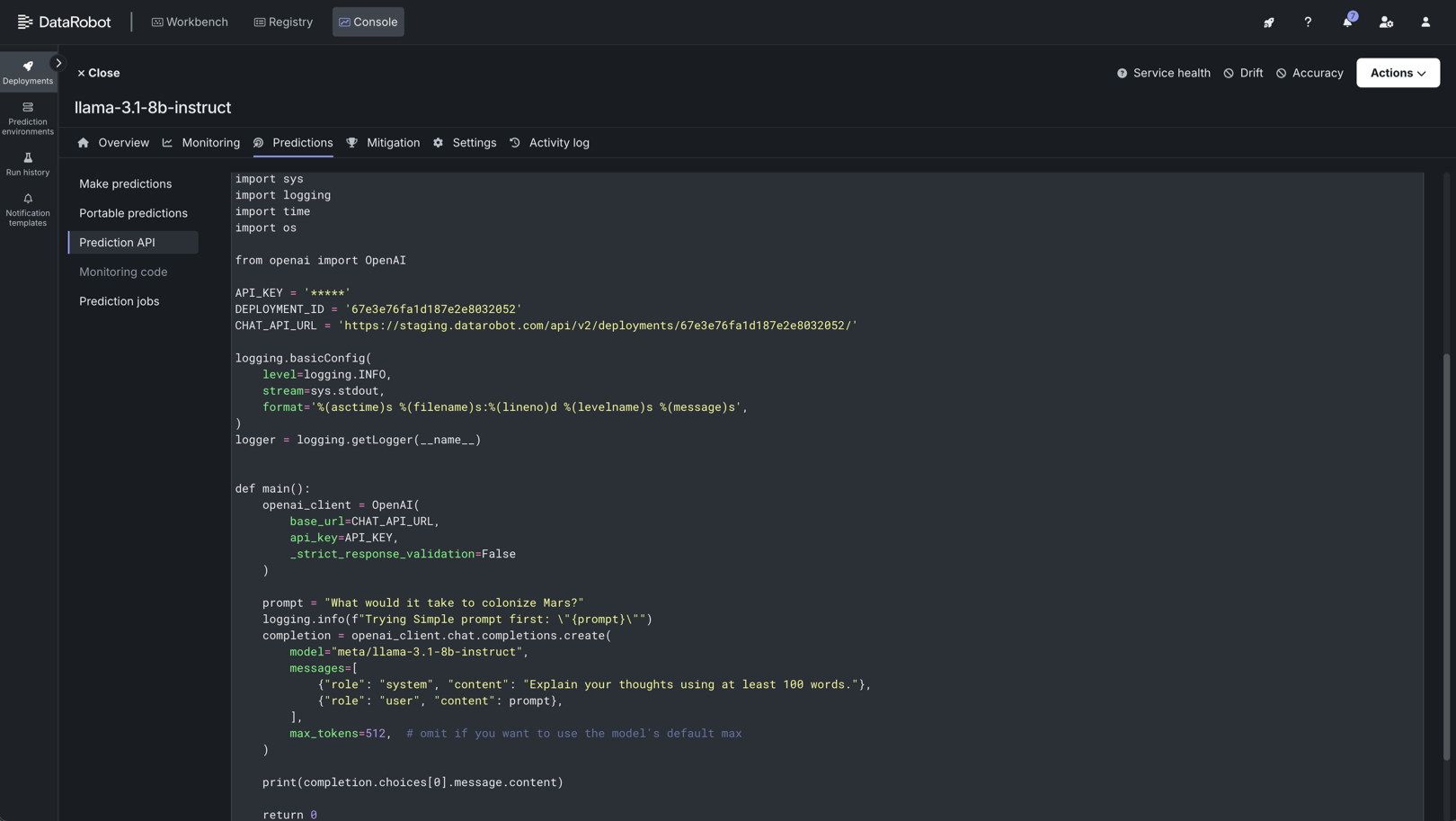

After the model is deployed to a DataRobot Serverless prediction environment, you can access real-time prediction snippets from the deployment's Predictions tab. The requirements for running the prediction snippet depends on the model type: text generation or unstructured. When you add a NIM to Registry in DataRobot, LLMs are imported as text generation models, allowing you to use the Bolt-on Governance API to communicate with the deployed NIM. Other types of models are imported as unstructured models, where endpoints provided by the NIM containers are exposed to communicate with the deployed NIM. This provides the flexibility required to deploy any NIM on GPU infrastructure using DataRobot Serverless Compute.

For more information, see the documentation.

Evaluate a text generation NVIDIA NIM in the playground¶

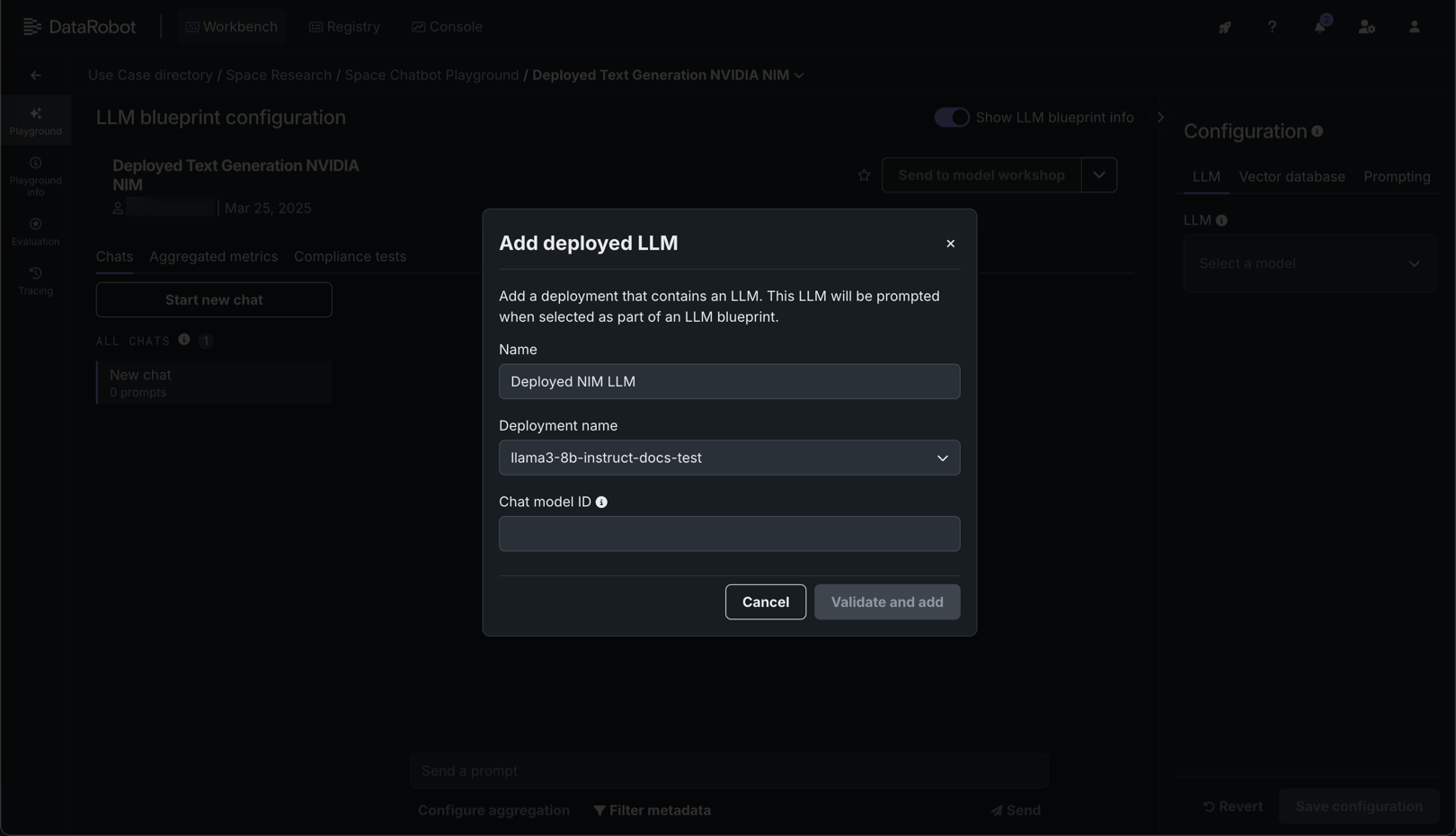

Optimized foundational models are available in Registry through NVIDIA NIM, imported with a text generation target type. In Workbench, you can add these optimized foundational models to a playground, where you can create, interact with, and compare LLM blueprints.

For more information, see the documentation.

Use an embedding NVIDIA NIM to create a vector database¶

Embedding models are available in Registry through NVIDIA NIM. In Workbench, you can add a deployed embedding model to a Use Case as a vector database. Vector databases can optionally be used to ground the LLM responses to specific information and can be assigned to an LLM blueprint to leverage during a Retrieval-Augmented Generation (RAG) operation.

For more information, see the documentation.

Use NVIDIA NeMo Guardrails in a moderation framework to secure your application¶

Connect NVIDIA NeMo Guardrails to deployed text generation models to guard against off-topic discussions, unsafe content, and jailbreaking attempts. To use a deployed NVIDIA NIM with the moderation framework provided by DataRobot, first, register and deploy a NeMo model, then, when you create a custom model with the text generation target type, configure evaluation and moderation.

For more information, see the documentation.

Use a text generation NVIDIA NIM in an application template¶

Applications templates can integrate capabilities provided by NVIDIA AI Enterprise. To use this integration, you can customize a DataRobot application template to programmatically generate a GenAI Use Case built on NVIDIA NIM. With minimal edits, the following application templates can be updated to use NVIDIA NIM, selecting a registered or deployed text generation NIM:

| Application template | Description |

|---|---|

| Guarded RAG Assistant | Build a RAG-powered chatbot using any knowledge base as its source. The Guarded RAG Assistant template logic contains prompt injection guardrails, sidecar models to evaluate responses, and a customizable interface that is easy to host and share. Example use cases: product documentation, HR policy documentation. |

| Predictive Content Generator | Generates prediction content using prediction explanations from a classification model. The Predictive Content Generator template returns natural language-based personalized outreach. Example use cases: next-best-offer, loan approvals, and fraud detection. |

| Talk to My Data Agent | Provides a talk-to-your-data experience. Upload a .csv, ask a question, and the agent recommends business analyses. It then produces charts and tables to answer your question (including the source code). This experience is paired with MLOps to host, monitor, and govern the components. |

| Forecast Assistant | Leverage predictive and generative AI to analyze a forecast and summarize important factors in predictions. The Forecast Assistant template provides explorable explanations over time and supports "what-if" scenario analysis. Example use case: store sales forecasting. |

To use an existing text generation model or deployment with these application templates, select one of the templates above from the Application Gallery. Then, you can make minimal modifications to the template files, locally or in a DataRobot codespace, to customize the template to use a registered or deployed NVIDIA NIM. With the template customized, you can proceed with the standard workflow outlined in the template's README.md.

For more information, see the documentation.