# Use NVIDIA NeMo Guardrails with DataRobot moderation

Premium

The use of NVIDIA Inference Microservices (NIM) in DataRobot requires access to premium features for GenAI experimentation and GPU inference. NVIDIA NeMo Guardrails are a premium feature. Contact your DataRobot representative or administrator for information on enabling this feature.

Additional feature flags: Enable Moderation Guardrails (Premium), Enable Global Models in the Model Registry (Premium), Enable Additional Custom Model Output in Prediction Responses

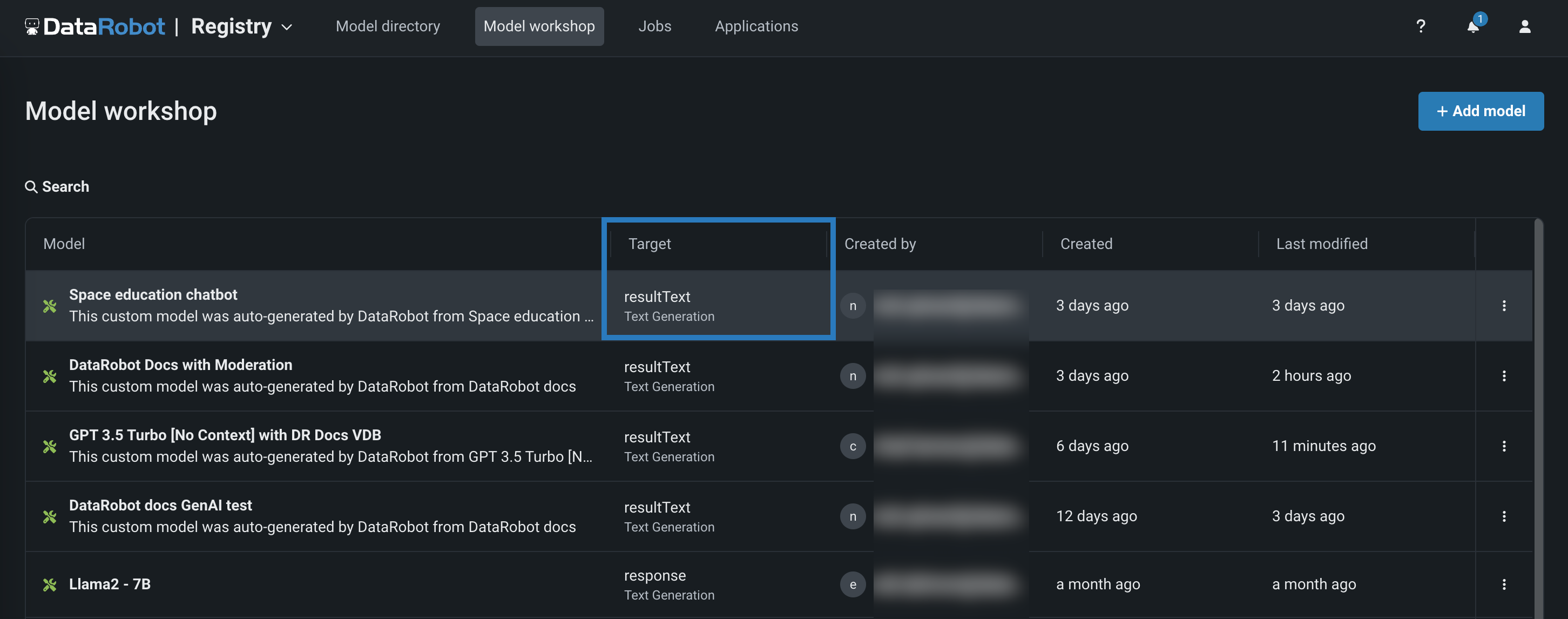

DataRobot provides out-of-the-box guardrails and lets you customize your applications with simple rules, code, or models. Use NVIDIA Inference Microservices (NIM) to connect NVIDIA NeMo Guardrails to text generation models in DataRobot, allowing you to guard against off-topic discussions, unsafe content, and jailbreaking attempts. The following NVIDIA NeMo Guardrails are available and can be implemented using the associated evaluation metric type:

| Model name | Evaluation metric type |
|---|---|
| llama-3.1-nemoguard-8b-topic-control | Stay on topic for input / Stay on topic for output |
| llama-3.1-nemoguard-8b-content-safety | Content safety |
| nemoguard-jailbreak-detect | Jailbreak |
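The Content safety guardrail classifies prompts and responses as safe or unsafe and reports any unsafe categories it detects. DataRobot handles this internally. As a rough illustration of what such a verdict contains, the Python sketch below parses a JSON result. The key names (`User Safety`, `Response Safety`, `Safety Categories`) are modeled on the output format NVIDIA describes for llama-3.1-nemoguard-8b-content-safety and should be treated as an assumption. `ContentSafetyResult` and `parse_content_safety` are hypothetical helpers, not part of any DataRobot API.

```python
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ContentSafetyResult:
    """Hypothetical container for one content safety verdict."""
    prompt_safe: bool
    response_safe: Optional[bool]  # None when only a prompt was classified
    unsafe_categories: list = field(default_factory=list)


def parse_content_safety(raw: str) -> ContentSafetyResult:
    """Parse a JSON verdict; the key names are an assumption, not a DataRobot API."""
    data = json.loads(raw)
    response = data.get("Response Safety")
    # Categories are assumed to arrive as one comma-separated string.
    categories = data.get("Safety Categories", "")
    return ContentSafetyResult(
        prompt_safe=data["User Safety"].strip().lower() == "safe",
        response_safe=None if response is None else response.strip().lower() == "safe",
        unsafe_categories=[c.strip() for c in categories.split(",") if c.strip()],
    )


if __name__ == "__main__":
    verdict = parse_content_safety(
        '{"User Safety": "unsafe", "Response Safety": "safe", '
        '"Safety Categories": "Violence, Criminal Planning/Confessions"}'
    )
    print(verdict.prompt_safe, verdict.response_safe, verdict.unsafe_categories)
```

A missing `Response Safety` key maps to `None` so that a prompt-only check is not mistaken for a safe response.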

## Use a deployed NIM with NVIDIA NeMo Guardrails

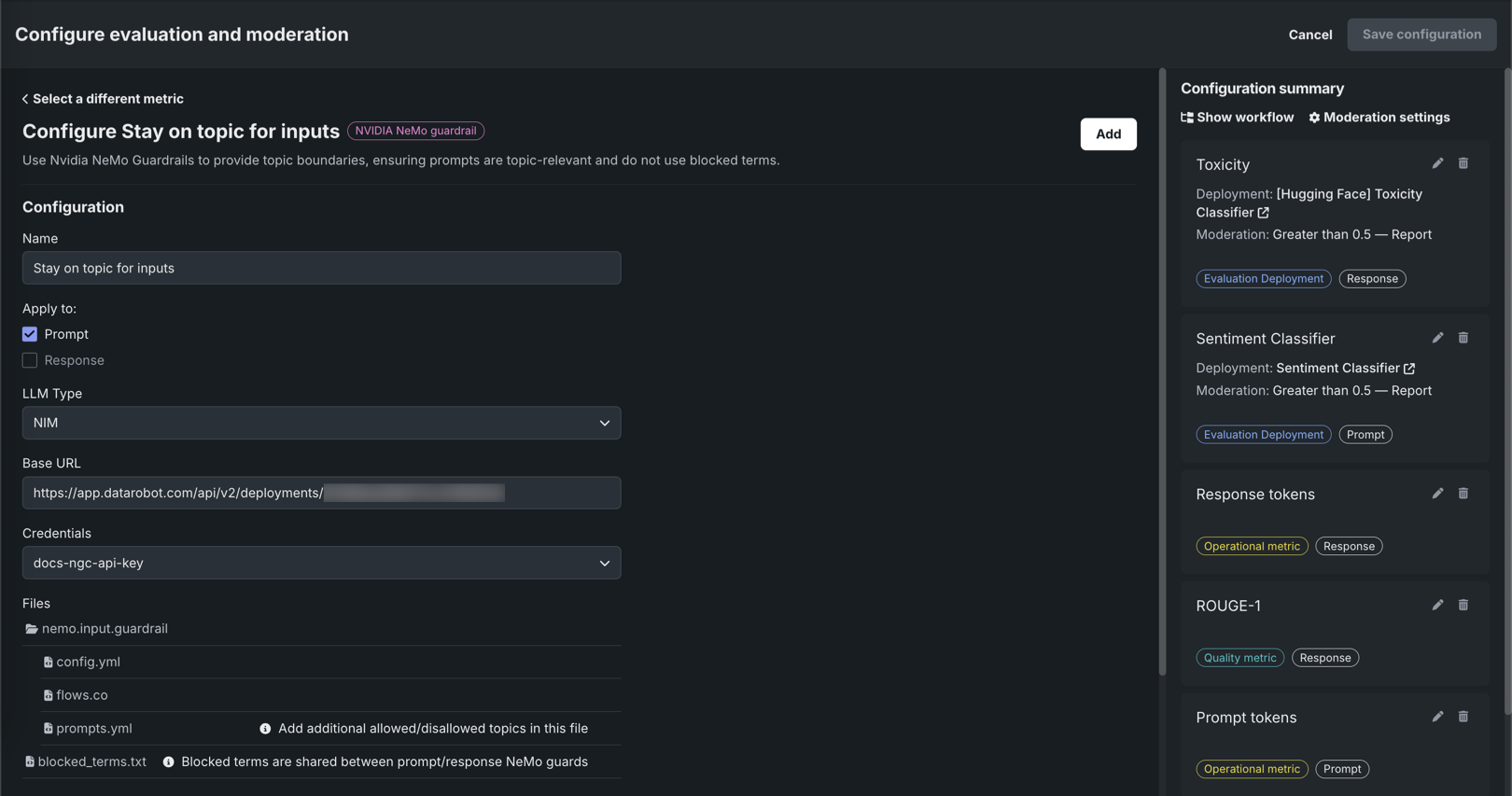

To use a deployed llama-3.1-nemoguard-8b-topic-control NVIDIA NIM with the Stay on topic evaluation metrics, first register and deploy the NVIDIA NeMo Guardrail NIM. Then, in a custom model with the Text Generation target type, configure the Stay on topic evaluation metrics.

To select and configure NVIDIA NeMo Guardrails (topic control, content safety, and jailbreak detection):

-

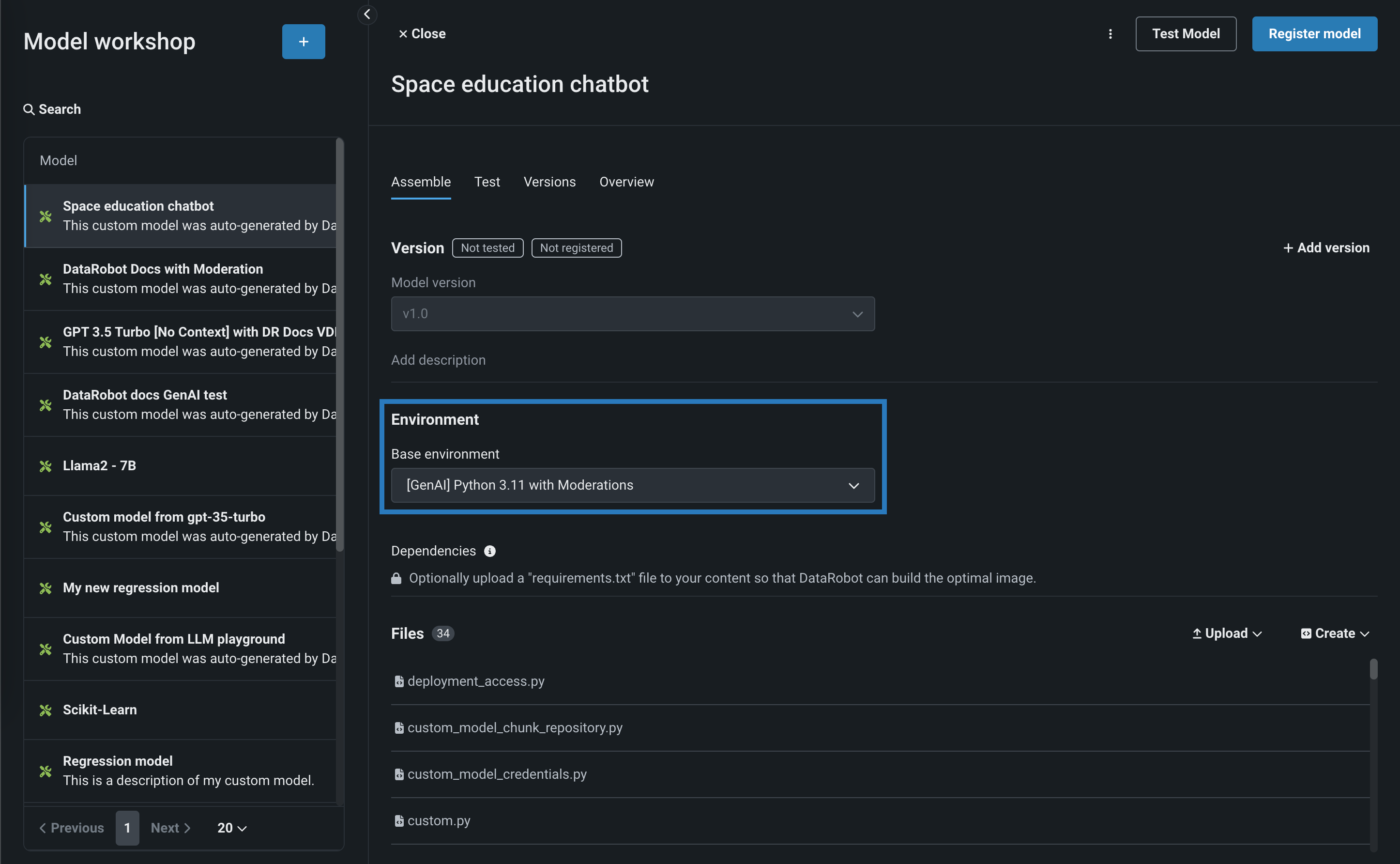

In the Workshop, open the Assemble tab of a custom model with the Text Generation target type and assemble a model, either manually from a custom model you created outside DataRobot or automatically from a model built in a Use Case's LLM playground.

  When you assemble a text generation model with moderations, make sure to configure any required runtime parameters (for example, credentials) and resource settings (for example, public network access). Then, set the Base environment to a moderation-compatible environment, such as [GenAI] Python 3.12 with Moderations.

  Resource settings

  DataRobot recommends creating the LLM custom model with larger resource bundles that provide more memory and CPU resources.

-

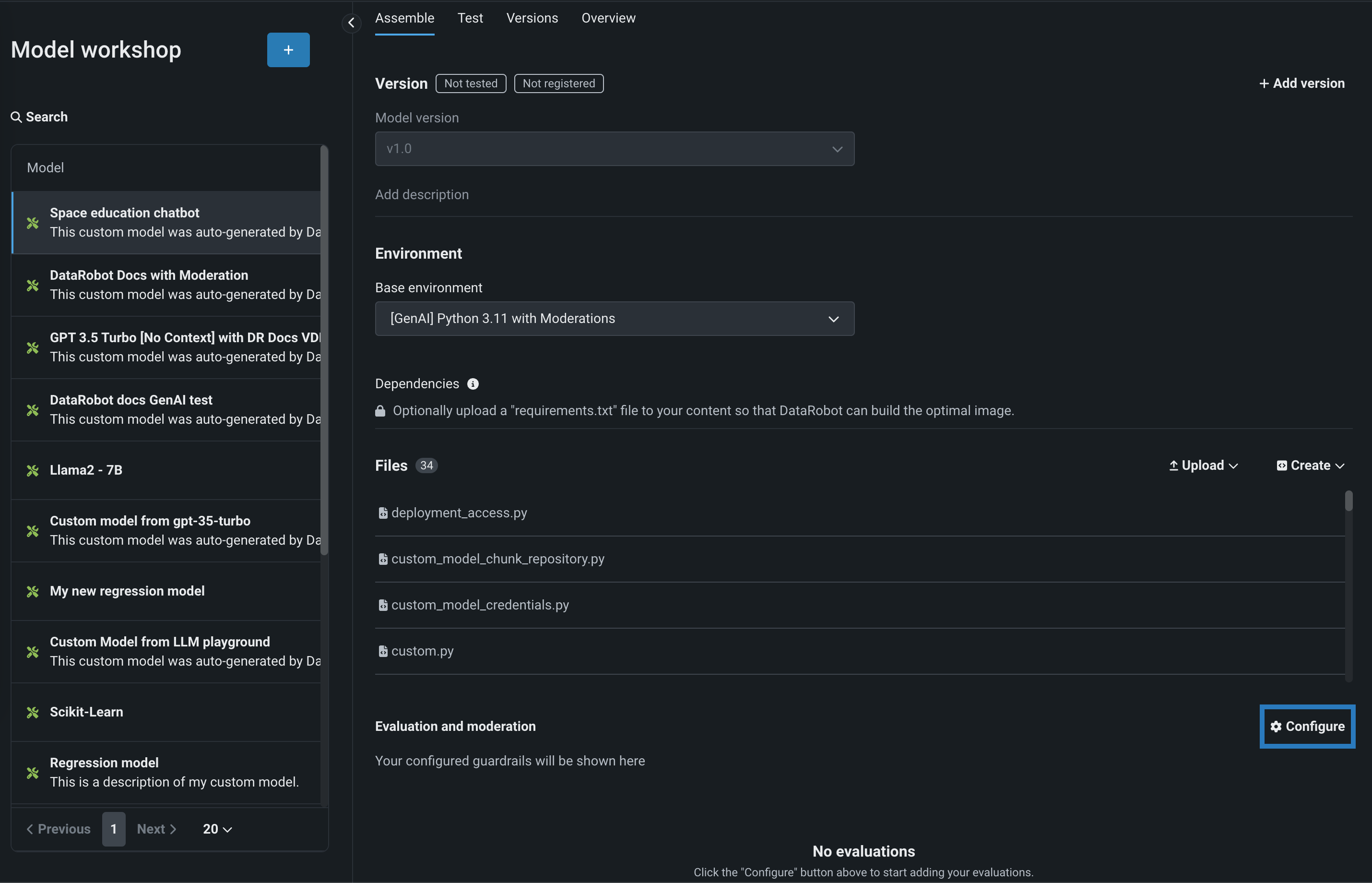

After you've configured the custom model's required settings, navigate to the Evaluation and moderation section and click Configure:

-

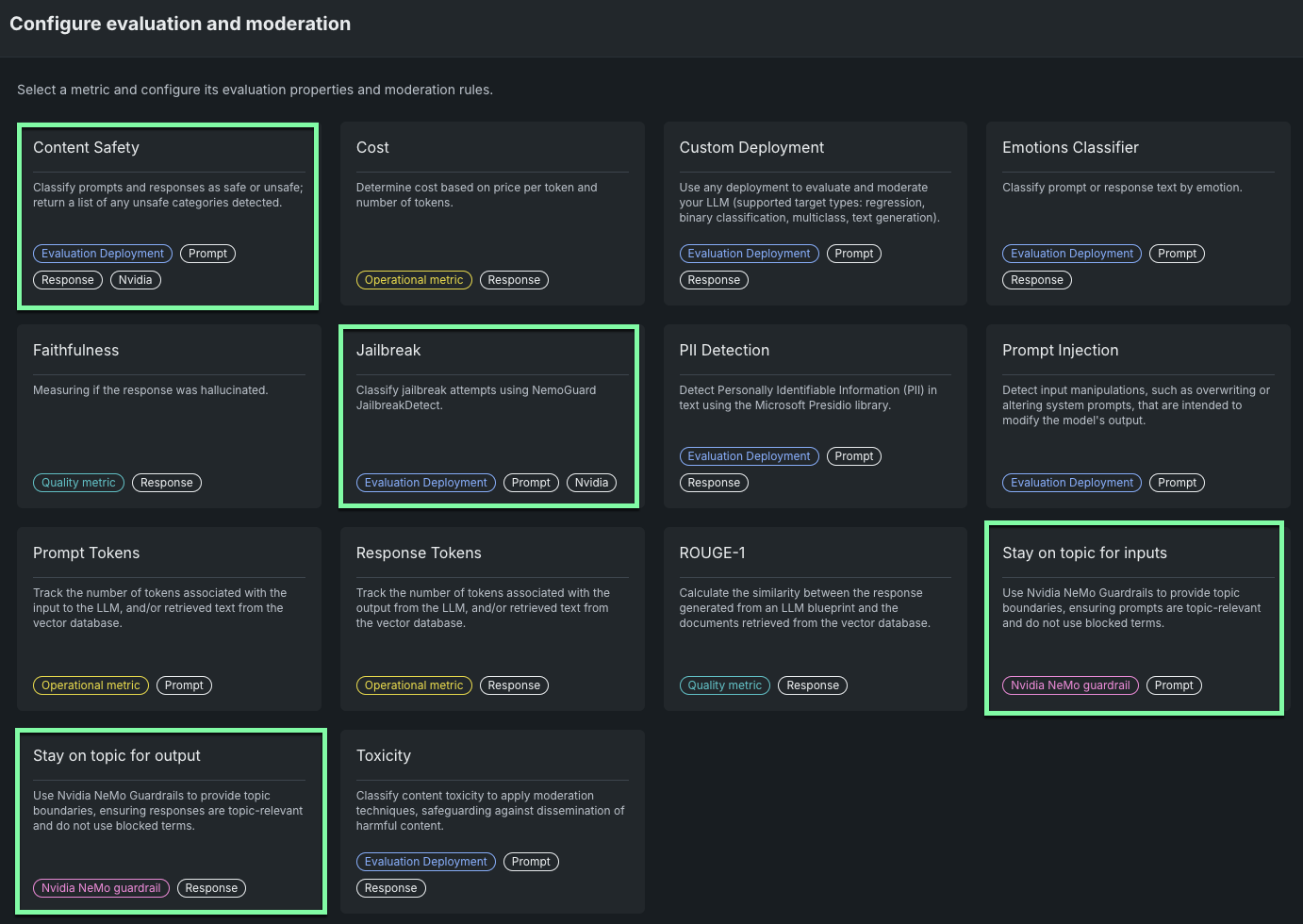

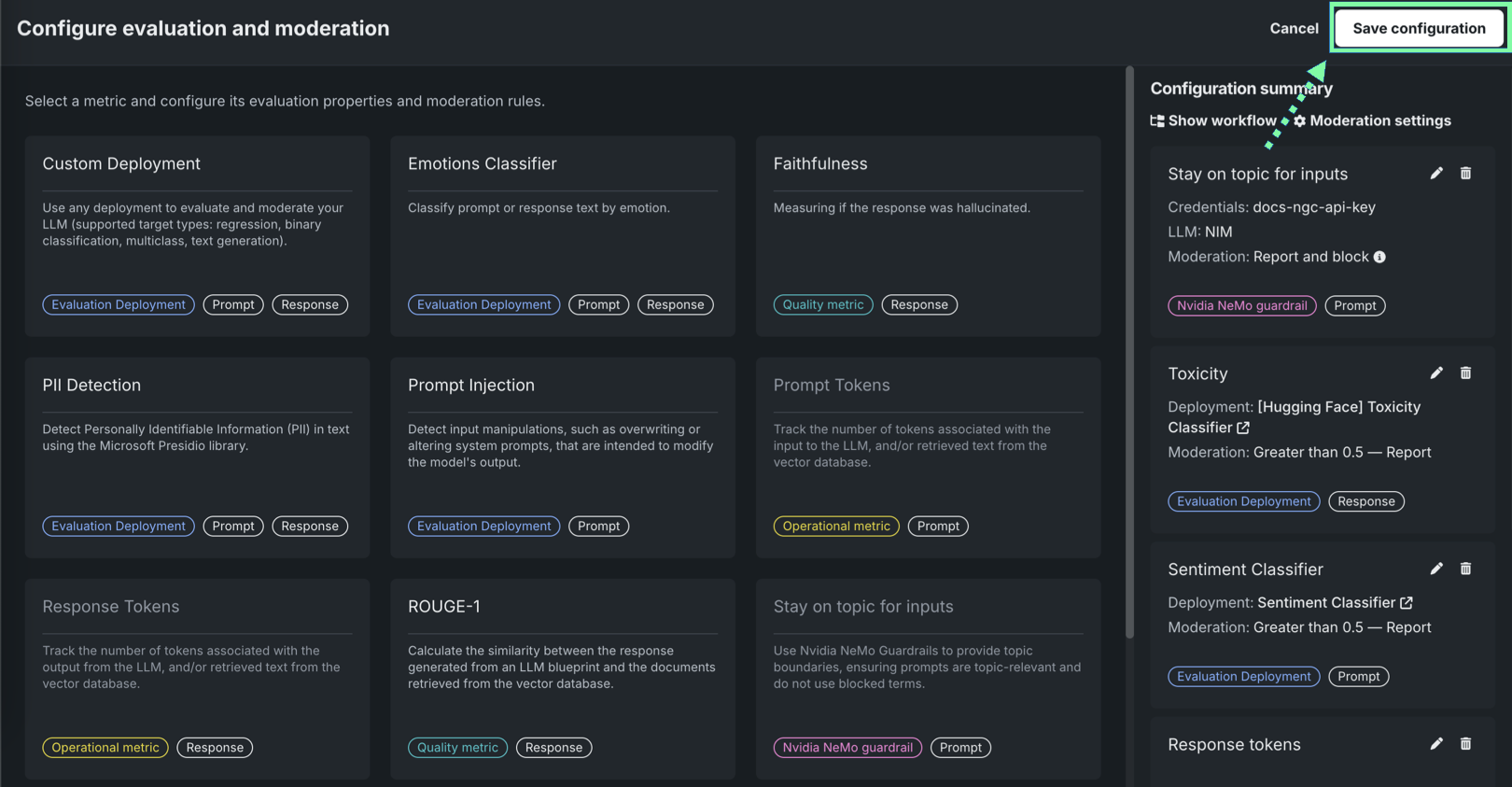

In the Configure evaluation and moderation panel, locate the metrics tagged with NVIDIA NeMo guardrail or NVIDIA and select the metric you want to use.

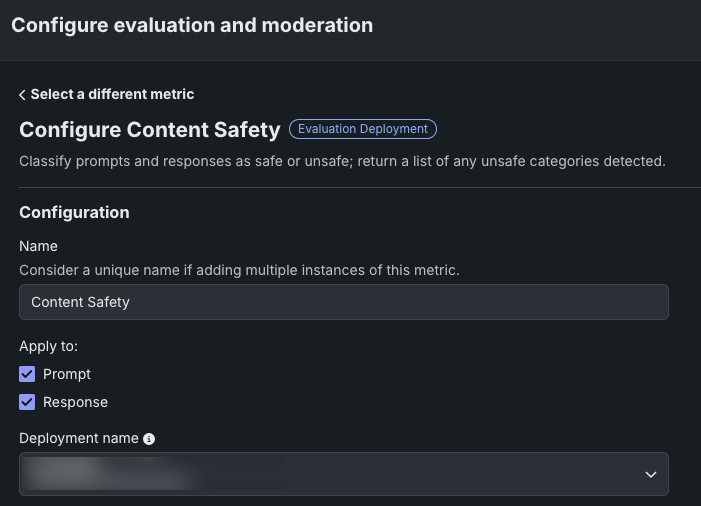

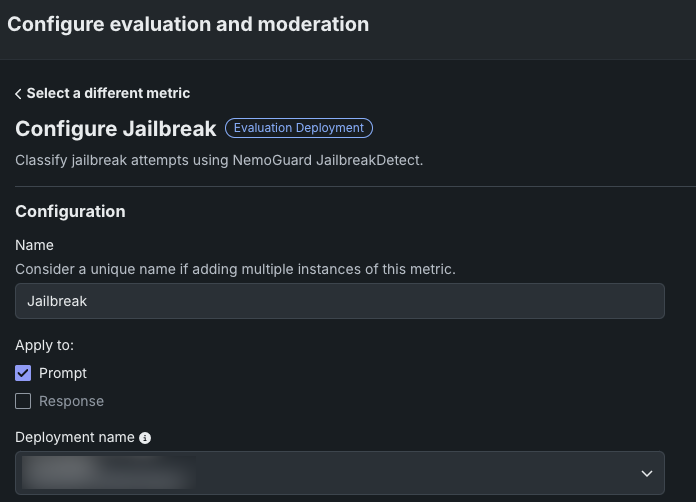

  | Evaluation metric | Requires | Description |
  |---|---|---|
  | Stay on topic for input | NVIDIA NeMo Guardrails configuration | Use NVIDIA NeMo Guardrails to provide topic boundaries, ensuring prompts are topic-relevant and do not use blocked terms. |
  | Stay on topic for output | NVIDIA NeMo Guardrails configuration | Use NVIDIA NeMo Guardrails to provide topic boundaries, ensuring responses are topic-relevant and do not use blocked terms. |
  | Content safety | A deployed NIM model, llama-3.1-nemoguard-8b-content-safety, imported from NGC | Classify prompts and responses as safe or unsafe, and return a list of any unsafe categories detected. |
  | Jailbreak | A deployed NIM model, nemoguard-jailbreak-detect, imported from NGC | Classify jailbreak attempts using NemoGuard JailbreakDetect. |

- On the Configure evaluation and moderation page, set the following fields based on the selected metric.

  Stay on topic for input or output:

  | Field | Description |
  |---|---|
  | Name | Enter a descriptive name for the metric you're configuring. |
  | Apply for | Stay on topic for input is applied to the prompt. Stay on topic for output is applied to the response. |
  | LLM type | Set the LLM type to NIM. |
  | NIM Deployment | Select an NVIDIA NIM deployment. For more information, see Import and deploy with NVIDIA NIM. |
  | Credentials | Select a DataRobot API key from the list. Credentials are defined on the Credentials management page. |
  | Files | (Optional) Configure the NeMo files. Click the icon next to a file to modify the NeMo Guardrails configuration files. In particular, update `prompts.yml` with allowed and blocked topics and `blocked_terms.txt` with the blocked terms, providing rules for NeMo Guardrails to enforce. The `blocked_terms.txt` file is shared between the input and output Stay on topic metrics, so modifying `blocked_terms.txt` in the input metric also modifies it for the output metric, and vice versa. |

  Only two NeMo Stay on topic metrics can exist in a custom model: one for input and one for output.

  Content safety:

  | Field | Description |
  |---|---|
  | Name | Enter a descriptive name for the metric you're configuring. |
  | Apply for | Apply content safety to both the prompt and the response. |
  | Deployment name | In the list, locate the llama-3.1-nemoguard-8b-content-safety model registered and deployed in DataRobot and click the deployment name. |

  Jailbreak:

  | Field | Description |
  |---|---|
  | Name | Enter a descriptive name for the metric you're configuring. |
  | Apply to | Apply jailbreak detection to the prompt. |
  | Deployment name | In the list, locate the nemoguard-jailbreak-detect model registered and deployed in DataRobot and click the deployment name. |

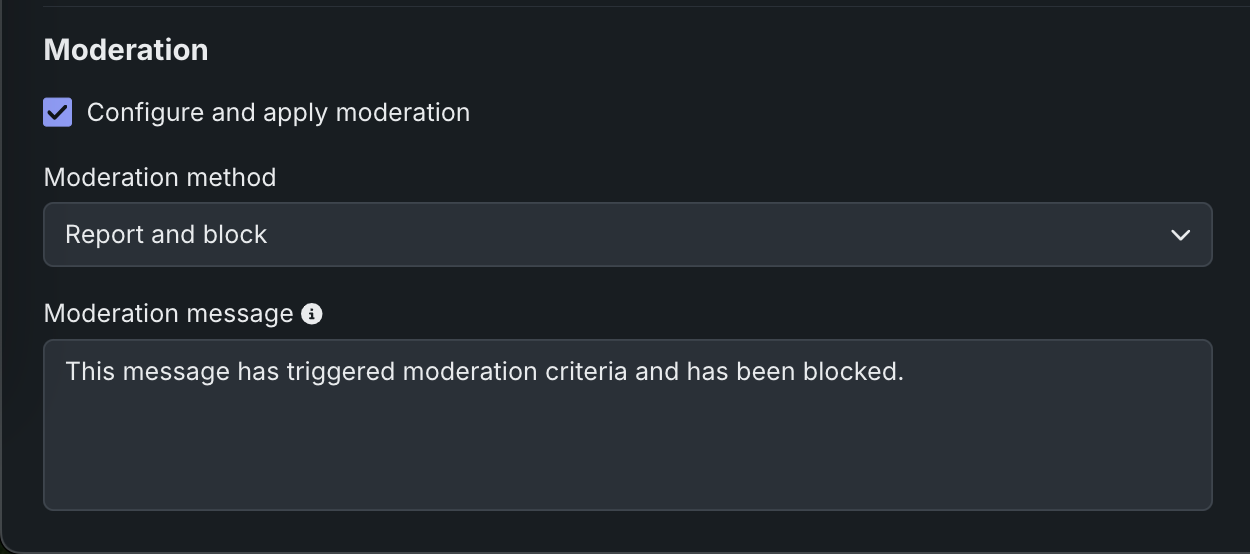

In the Moderation section, with Configure and apply moderation enabled, for each evaluation metric, set the following:

Field Description Moderation method Select Report or Report and block. Moderation message If you select Report and block, you can optionally modify the default message. -

- After configuring the required fields, click Add to save the evaluation and return to the evaluation selection page. Then, select and configure another metric, or click Save configuration.
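Because a custom model allows at most one Stay on topic for input metric and one Stay on topic for output metric, and each NVIDIA metric depends on a specific deployed NIM, it can help to check a planned configuration before adding metrics in the panel. The Python sketch below is a hypothetical pre-flight check: `MetricConfig`, `validate`, and `REQUIRED_MODEL` are illustrative names, not a DataRobot API, and the metric-to-model mapping is taken from the tables on this page.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List

# NIM model behind each NVIDIA metric, as listed on this page.
REQUIRED_MODEL = {
    "Stay on topic for input": "llama-3.1-nemoguard-8b-topic-control",
    "Stay on topic for output": "llama-3.1-nemoguard-8b-topic-control",
    "Content safety": "llama-3.1-nemoguard-8b-content-safety",
    "Jailbreak": "nemoguard-jailbreak-detect",
}


@dataclass
class MetricConfig:
    """Hypothetical local record of one planned evaluation metric."""
    name: str
    metric_type: str     # expected to be one of the REQUIRED_MODEL keys
    deployed_model: str  # model served by the selected NIM deployment


def validate(configs: List[MetricConfig]) -> List[str]:
    """Return a list of problems; an empty list means the plan looks consistent."""
    problems = []
    counts = Counter(c.metric_type for c in configs)
    # Only one Stay on topic metric per direction is allowed.
    for metric_type in ("Stay on topic for input", "Stay on topic for output"):
        if counts[metric_type] > 1:
            problems.append(f"Only one '{metric_type}' metric is allowed per custom model.")
    # Each metric must point at a deployment of the model it requires.
    for c in configs:
        expected = REQUIRED_MODEL.get(c.metric_type)
        if expected is None:
            problems.append(f"{c.name}: unknown metric type '{c.metric_type}'.")
        elif c.deployed_model != expected:
            problems.append(f"{c.name}: expected a {expected} deployment, got {c.deployed_model}.")
    return problems


if __name__ == "__main__":
    plan = [
        MetricConfig("Topic guard (prompt)", "Stay on topic for input",
                     "llama-3.1-nemoguard-8b-topic-control"),
        MetricConfig("Topic guard (response)", "Stay on topic for output",
                     "llama-3.1-nemoguard-8b-topic-control"),
        MetricConfig("Jailbreak guard", "Jailbreak",
                     "llama-3.1-nemoguard-8b-content-safety"),
    ]
    for problem in validate(plan):
        print(problem)
```

In this example plan, only the jailbreak entry is reported, because it points at the content safety model instead of nemoguard-jailbreak-detect.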

The guardrails you selected appear in the Evaluation and moderation section of the Assemble tab.
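The Stay on topic guardrails check that prompts and responses do not use the terms listed in `blocked_terms.txt`, and that single file is shared by the input and output metrics. NeMo Guardrails performs the actual enforcement. The Python sketch below only illustrates the idea of one blocked-terms list applied on both sides. It assumes one term per line, `#` comment lines, and case-insensitive whole-word matching, none of which is confirmed by this page. The helper names and example terms are hypothetical.

```python
import re
from typing import List


def load_blocked_terms(text: str) -> List[str]:
    """Parse blocked_terms.txt content (assumed format: one term per line)."""
    terms = []
    for line in text.splitlines():
        term = line.strip()
        # Skip blank lines and '#' comments (an assumption about the file format).
        if term and not term.startswith("#"):
            terms.append(term.lower())
    return terms


def find_blocked_terms(message: str, terms: List[str]) -> List[str]:
    """Return the terms that occur in message as whole words, ignoring case."""
    lowered = message.lower()
    return [t for t in terms if re.search(r"\b" + re.escape(t) + r"\b", lowered)]


if __name__ == "__main__":
    # Hypothetical terms; the same list is applied to prompts and responses.
    shared = load_blocked_terms("competitor pricing\n\ninternal roadmap\n")
    prompt = "What is the Internal Roadmap for next year?"
    response = "I can only help with questions about our public products."
    print(find_blocked_terms(prompt, shared))    # ['internal roadmap']
    print(find_blocked_terms(response, shared))  # []
```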

After you add guardrails to a text generation custom model, you can test, register, and deploy the model to make predictions in production. After making predictions, you can view the evaluation metrics on the Custom metrics tab and prompts, responses, and feedback (if configured) on the Data exploration tab.
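Each guardrail's Moderation method determines what happens when it triggers. Report records the result, and Report and block additionally returns the moderation message in place of the content. The Python sketch below models that difference with hypothetical types (`ModerationMethod`, `GuardOutcome`, `apply_moderation`). It is an illustration, not DataRobot's implementation, and `DEFAULT_MESSAGE` is a placeholder rather than DataRobot's actual default text.

```python
from dataclasses import dataclass
from enum import Enum


class ModerationMethod(Enum):
    REPORT = "Report"
    REPORT_AND_BLOCK = "Report and block"


@dataclass
class GuardOutcome:
    text: str      # what the caller receives
    flagged: bool  # the guard triggered; reported under either method
    blocked: bool  # the original text was replaced by the moderation message


# Placeholder; not DataRobot's actual default moderation message.
DEFAULT_MESSAGE = "This content was blocked by a moderation guardrail."


def apply_moderation(
    text: str,
    triggered: bool,
    method: ModerationMethod,
    message: str = DEFAULT_MESSAGE,
) -> GuardOutcome:
    """Report always records the result; Report and block also withholds the text."""
    if triggered and method is ModerationMethod.REPORT_AND_BLOCK:
        return GuardOutcome(text=message, flagged=True, blocked=True)
    return GuardOutcome(text=text, flagged=triggered, blocked=False)


if __name__ == "__main__":
    reply = "Sample model response."
    print(apply_moderation(reply, True, ModerationMethod.REPORT).blocked)  # False
    print(apply_moderation(reply, True, ModerationMethod.REPORT_AND_BLOCK).text)
```

Keeping `flagged` separate from `blocked` mirrors the idea that both methods report a triggered guard, and only Report and block changes what the caller sees.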