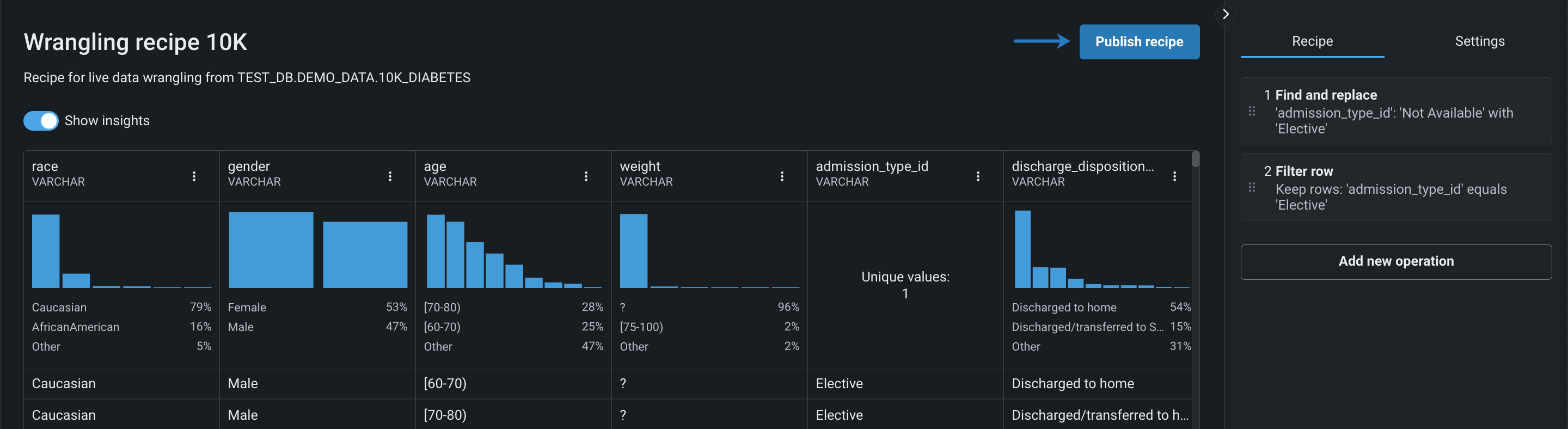

Publish a recipe

Once the recipe is built and the live sample looks ready for modeling, you can publish the recipe. Publishing pushes the recipe down to the data source as a query, where it is executed against the entire dataset to materialize a new output dataset. The output is then sent back to DataRobot and added to the Use Case.

See the associated considerations for important additional information.

Publishing large datasets

When publishing a wrangling recipe for input datasets larger than 20 GB, you can push the data transformations and analysis down to a DataRobot compute engine for scalable, secure processing of CSV and Parquet files stored in S3. This is only available for AWS SaaS and VPC installations.

Feature flag OFF by default: Enable Distributed Spark Support for Data Engine
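The conditions above can be summarized as a single check. This is a minimal sketch, not part of any DataRobot API: `PublishContext`, `can_use_distributed_engine`, and the deployment labels are hypothetical names, and reading "20 GB" as 20 * 10**9 bytes is an assumption.

```python
from dataclasses import dataclass

# Threshold from this page ("larger than 20GB"); 1 GB = 10**9 bytes is an assumption.
SIZE_THRESHOLD_BYTES = 20 * 10**9
SUPPORTED_FORMATS = {"csv", "parquet"}
# Hypothetical labels for "AWS SaaS and VPC installations" (assumed reading).
SUPPORTED_DEPLOYMENTS = {"aws_saas", "aws_vpc"}


@dataclass
class PublishContext:
    # All field names are hypothetical, for illustration only.
    input_size_bytes: int
    file_format: str          # e.g. "csv" or "parquet"
    storage: str              # e.g. "s3"
    deployment: str           # e.g. "aws_saas"
    spark_flag_enabled: bool  # "Enable Distributed Spark Support for Data Engine"


def can_use_distributed_engine(ctx: PublishContext) -> bool:
    """Return True only if every condition listed on this page is met."""
    return (
        ctx.spark_flag_enabled
        and ctx.input_size_bytes > SIZE_THRESHOLD_BYTES
        and ctx.file_format.lower() in SUPPORTED_FORMATS
        and ctx.storage.lower() == "s3"
        and ctx.deployment.lower() in SUPPORTED_DEPLOYMENTS
    )


# Example: a 25 GB Parquet file in S3 on AWS SaaS with the flag enabled.
print(can_use_distributed_engine(PublishContext(25 * 10**9, "parquet", "s3", "aws_saas", True)))  # True
```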

To publish a recipe:

-

After you're done wrangling a dataset, open the Recipe actions dropdown and select Publish.

-

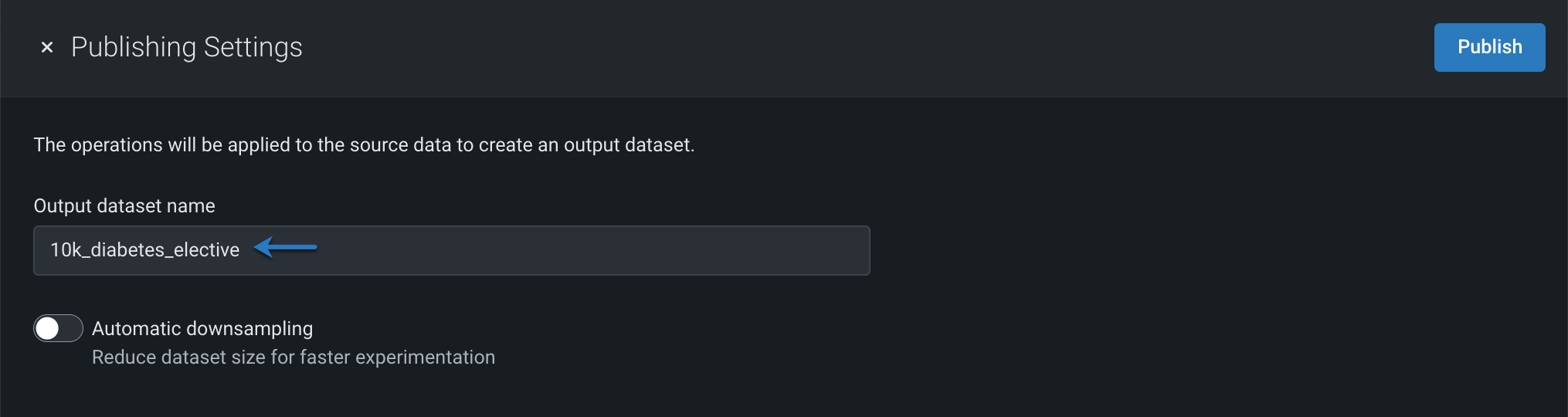

Enter a name for the output dataset. DataRobot will use this name to register the dataset in the AI Catalog and Data Registry.

-

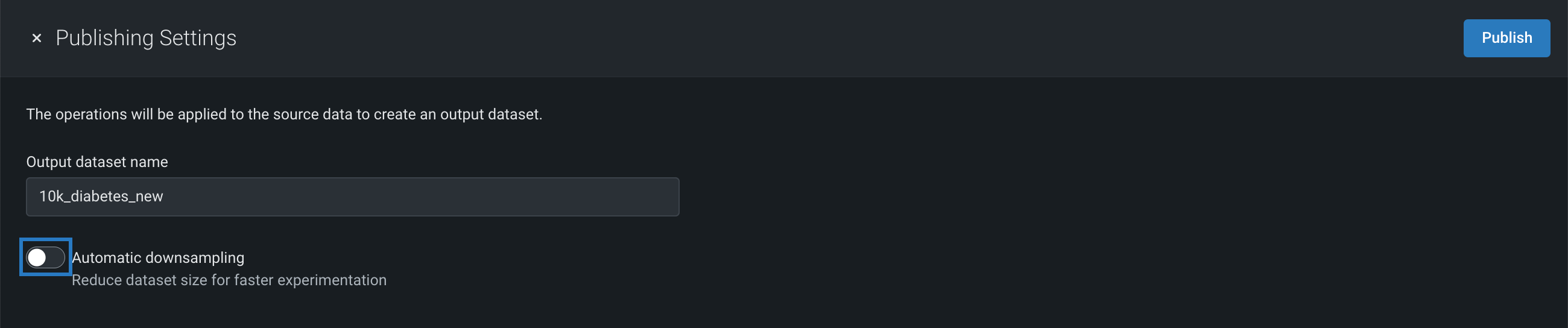

(Optional) Configure Automatic downsampling.

-

Click Publish.

DataRobot sends the published recipe to Snowflake, where it is applied to the source data to create a new output dataset. In DataRobot, the output dataset is registered in the Data Registry and added to your Use Case.

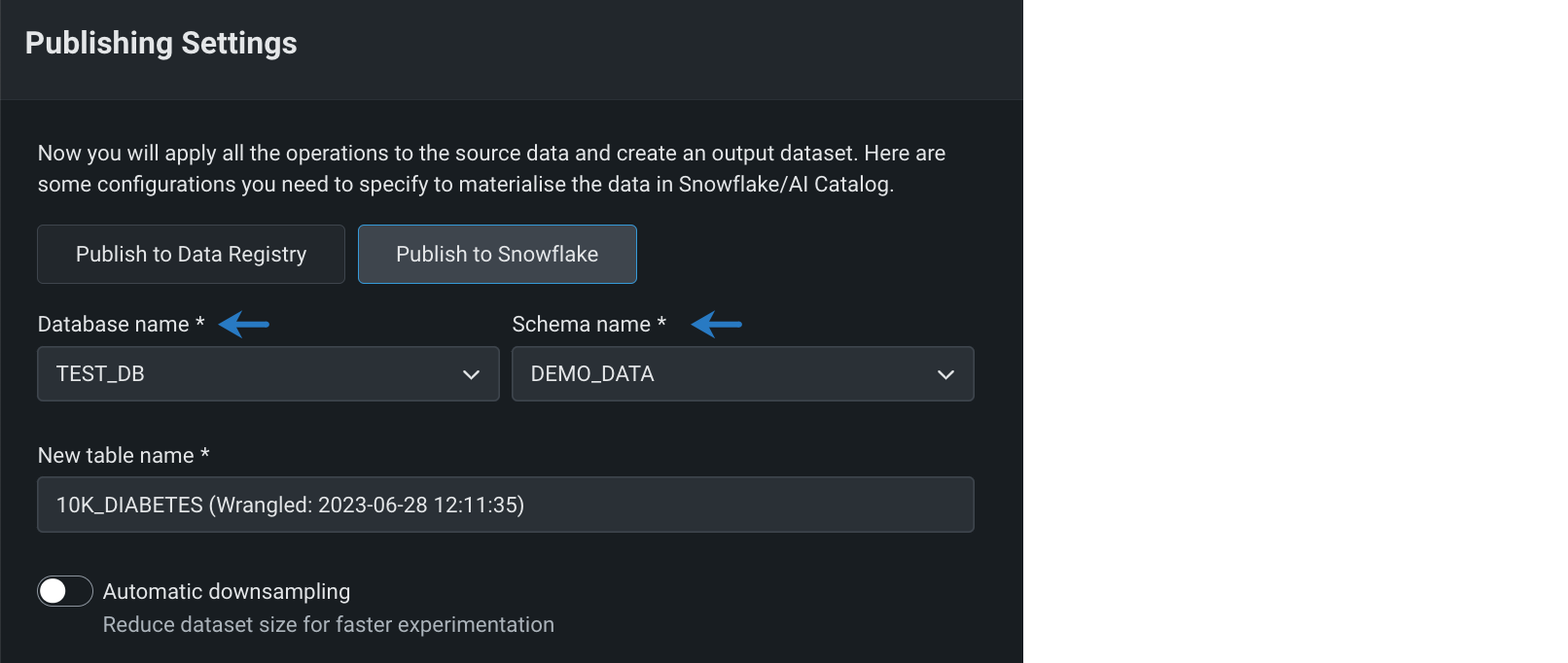

Publish to your data source

When you publish a wrangling recipe, its operations and settings are pushed down into your virtual warehouse, allowing you to leverage the security, compliance, and financial controls specified within that environment. Selecting the option to publish to your data source materializes the output as a dynamic dataset in DataRobot's Data Registry as well as in your data source.
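Pushdown means the transformation runs where the data lives: the recipe becomes a query that the warehouse executes, materializing the result as a new table there. The sketch below illustrates the idea with Python's built-in `sqlite3` standing in for a virtual warehouse such as Snowflake. The recipe format and `compile_recipe` are hypothetical; DataRobot generates its own queries for your data source.

```python
import sqlite3

# Hypothetical recipe format (not DataRobot's): a filter plus a derived column.
# In this sketch, filters may reference source columns only.
RECIPE = [
    {"op": "filter", "condition": "amount > 0"},
    {"op": "compute", "name": "amount_x2", "expression": "amount * 2"},
]


def compile_recipe(source: str, output: str, recipe: list) -> str:
    """Compile a recipe into a single CREATE TABLE ... AS SELECT statement.

    Identifiers and expressions are treated as trusted input here; a real
    system would validate and quote them.
    """
    columns = ["*"]
    conditions = []
    for step in recipe:
        if step["op"] == "filter":
            conditions.append(f"({step['condition']})")
        elif step["op"] == "compute":
            columns.append(f"{step['expression']} AS {step['name']}")
        else:
            raise ValueError(f"unsupported operation: {step['op']}")
    where = " WHERE " + " AND ".join(conditions) if conditions else ""
    return f"CREATE TABLE {output} AS SELECT {', '.join(columns)} FROM {source}{where}"


# An in-memory SQLite database plays the role of the warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO source VALUES (?, ?)", [(1, 10.0), (2, -5.0), (3, 2.5)])

# The whole transformation executes inside the database as one statement.
conn.execute(compile_recipe("source", "wrangled_output", RECIPE))
rows = conn.execute(
    "SELECT id, amount, amount_x2 FROM wrangled_output ORDER BY id"
).fetchall()
print(rows)  # [(1, 10.0, 20.0), (3, 2.5, 5.0)]
```

Compiling the whole recipe into one statement keeps every step inside the warehouse, which is what lets its own security and cost controls apply.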

Required permissions

You must have write access to the selected schema and database.

To enable in-source materialization (for Snowflake in this example):

- In the Publishing Settings modal, click Publish to Snowflake.

- Select the appropriate Snowflake Database and Schema using the dropdowns.

- From here, you can:

  - Click Publish to finish publishing your recipe.
  - Configure downsampling.

Configure downsampling

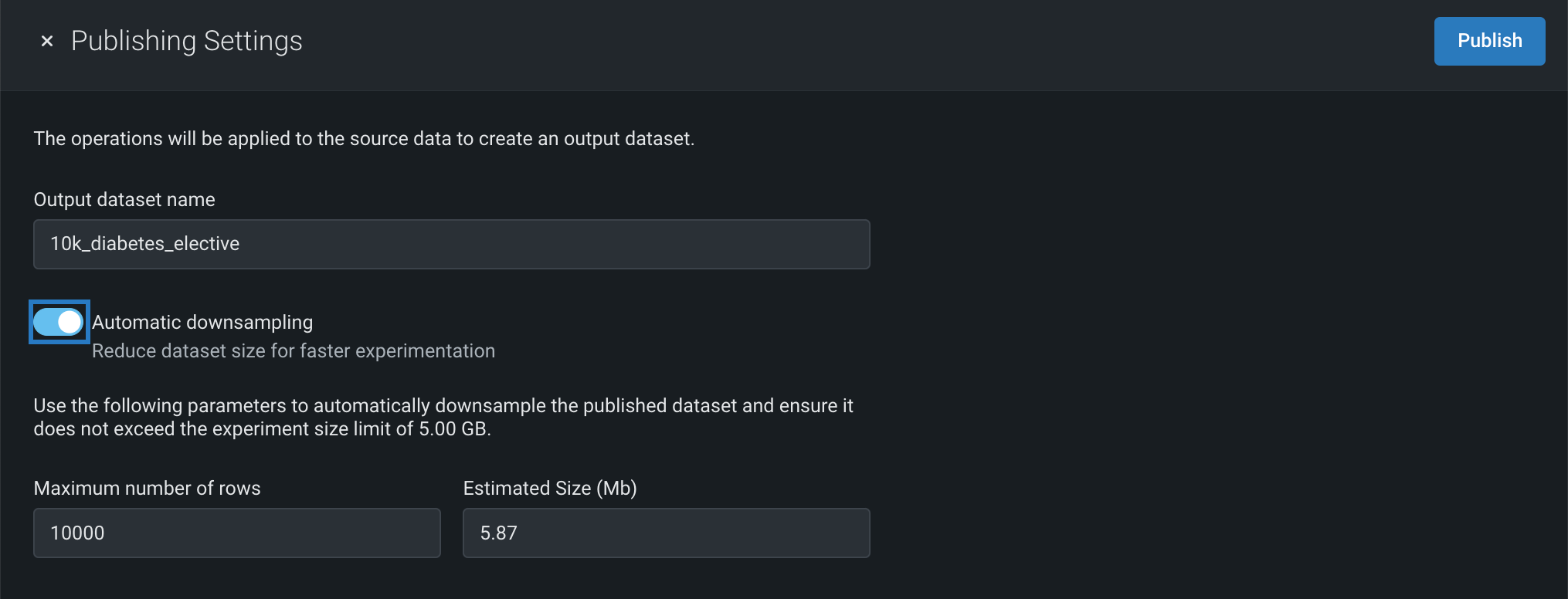

Automatic downsampling is a technique that reduces the size of a dataset by reducing the size of the majority class using random sampling. Consider enabling automatic downsampling if your source data exceeds DataRobot's file size limits.

To configure downsampling:

-

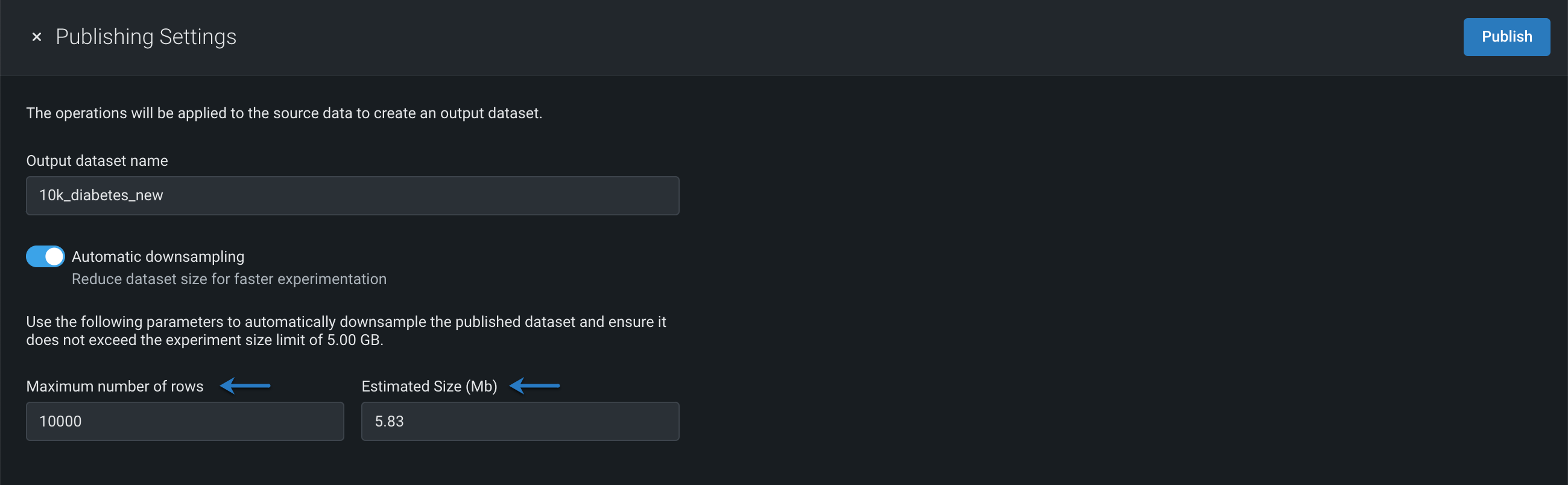

Enable the Automatic downsampling toggle in the Publishing Settings modal.

-

Specify the Maximum number of rows and Estimated size in megabytes.
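As an illustration of capping a dataset at a maximum number of rows, here is a minimal random downsampling sketch. It assumes rows are sampled uniformly without replacement when no target is involved; `random_downsample` is a hypothetical helper, not DataRobot's implementation.

```python
import random


def random_downsample(rows, max_rows, seed=None):
    """Hypothetical sketch: return at most max_rows rows chosen uniformly at random.

    Sampling is without replacement, and the kept rows stay in their source order.
    """
    if max_rows < 0:
        raise ValueError("max_rows must be non-negative")
    if len(rows) <= max_rows:
        return list(rows)  # already within the cap; nothing to drop
    rng = random.Random(seed)  # seeded generator for reproducible samples
    # random.sample picks distinct positions; sorting them preserves source order.
    keep = sorted(rng.sample(range(len(rows)), max_rows))
    return [rows[i] for i in keep]


data = list(range(1000))
sample = random_downsample(data, 100, seed=7)
print(len(sample))  # 100
```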

Configure smart downsampling

You can use smart downsampling to reduce the size of your output dataset when publishing a wrangling recipe. Smart downsampling is a data science technique that reduces the time it takes to fit a model without sacrificing accuracy, and it is particularly useful for imbalanced data. It accounts for class imbalance by stratifying the sample by class: in most cases, the entire minority class is preserved and sampling applies only to the majority class. Because accuracy is typically more important on the minority class, this greatly reduces the size of the training dataset (and with it modeling time and cost) while preserving model accuracy.
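The stratified approach described above can be sketched as follows for a binary target. This is an illustration under stated assumptions, not DataRobot's algorithm: `smart_downsample` is hypothetical, zero-inflated targets are not handled, the sketch raises an error in the uncommon case where the minority class alone fills the row budget, and the weights (1.0 for minority rows, original count divided by sampled count for majority rows) are an assumed inverse-sampling-rate scheme so that weighted class totals match the input. Rows with a null target are excluded, in line with the note on this page.

```python
import random
from collections import Counter


def smart_downsample(rows, target, max_rows, weights_feature="weight", seed=None):
    """Hypothetical sketch of stratified (smart) downsampling for a binary target.

    rows: list of dicts; target: name of the binary target column.
    Keeps the whole minority class, randomly samples the majority class, and
    adds `weights_feature` so weighted class totals match the input.
    """
    # Drop rows whose target is null (None); they belong to neither class.
    labeled = [r for r in rows if r.get(target) is not None]
    counts = Counter(r[target] for r in labeled)
    if len(counts) != 2:
        raise ValueError("this sketch requires exactly two target classes")
    (majority, n_major), (minority, n_minor) = counts.most_common()
    if n_minor >= max_rows:
        raise ValueError("max_rows is too small to keep the whole minority class")

    # Keep the minority class in full; the remaining budget goes to the majority class.
    n_major_keep = min(n_major, max_rows - n_minor)
    rng = random.Random(seed)
    major_rows = [r for r in labeled if r[target] == majority]
    minor_rows = [r for r in labeled if r[target] == minority]
    sampled_major = rng.sample(major_rows, n_major_keep)

    # Assumed weighting: inverse of each class's sampling rate.
    major_weight = n_major / n_major_keep
    out = [{**r, weights_feature: 1.0} for r in minor_rows]
    out += [{**r, weights_feature: major_weight} for r in sampled_major]
    return out


data = [{"y": 0} for _ in range(900)] + [{"y": 1} for _ in range(100)] + [{"y": None}] * 5
sample = smart_downsample(data, "y", max_rows=300, seed=42)
# 300 rows: all 100 minority rows (weight 1.0) and 200 majority rows (weight 4.5)
```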

To configure smart downsampling:

-

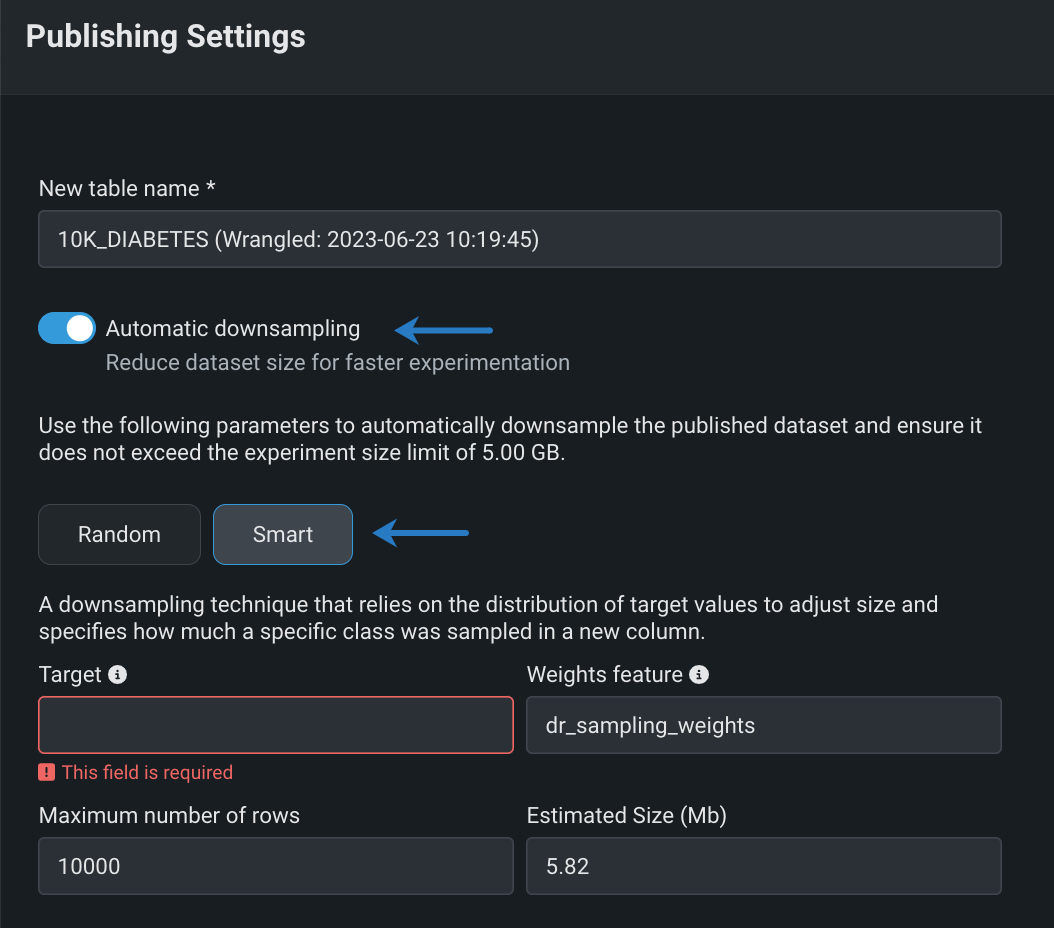

Enable the Automatic downsampling toggle and click Smart.

-

Populate the following fields:

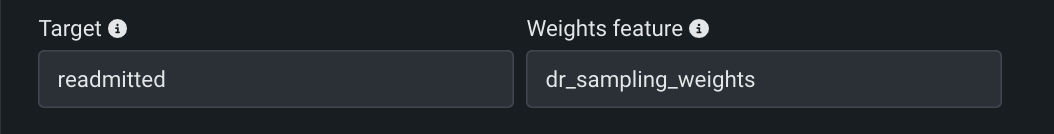

- Target: Select the target feature—a binary classification or zero-inflated feature. If the dataset does not contain either feature type, the option to apply smart downsampling is unavailable.

- Weights feature: (Optional) Enter a name for the Weights feature. This column, which contains downsampling weights, is computed and added to your output dataset as a result of smart downsampling.

- Maximum number of rows or Estimated Size (MB): These values are linked, so if you change the value in one field, the other field updates automatically. See DataRobot's dataset requirements to ensure the output dataset is below the file size limit.
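Because the two fields are linked, each can be derived from the other given an average row size. The sketch below assumes size grows linearly with row count and that 1 MB is 1,000,000 bytes; DataRobot's actual estimate may differ, and all function names are hypothetical.

```python
import math

# Assumption (not DataRobot's formula): 1 MB = 1,000,000 bytes.
BYTES_PER_MB = 1_000_000


def avg_row_bytes(sample_bytes: int, sample_rows: int) -> float:
    """Average row size measured on a sample (hypothetical helper)."""
    if sample_rows <= 0:
        raise ValueError("sample_rows must be positive")
    return sample_bytes / sample_rows


def rows_to_mb(max_rows: int, row_bytes: float) -> float:
    """Estimated size in MB for a given row cap, assuming linear scaling."""
    return max_rows * row_bytes / BYTES_PER_MB


def mb_to_rows(size_mb: float, row_bytes: float) -> int:
    """Row cap for a given size; rounds down so the cap never exceeds the size."""
    return math.floor(size_mb * BYTES_PER_MB / row_bytes)


row_bytes = avg_row_bytes(5_000_000, 10_000)  # 500.0 bytes per row
print(rows_to_mb(200_000, row_bytes))         # 100.0
print(mb_to_rows(100.0, row_bytes))           # 200000
```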

Note

Any rows with a null value in the target column are filtered out after smart downsampling.

Publishing re-wrangled datasets

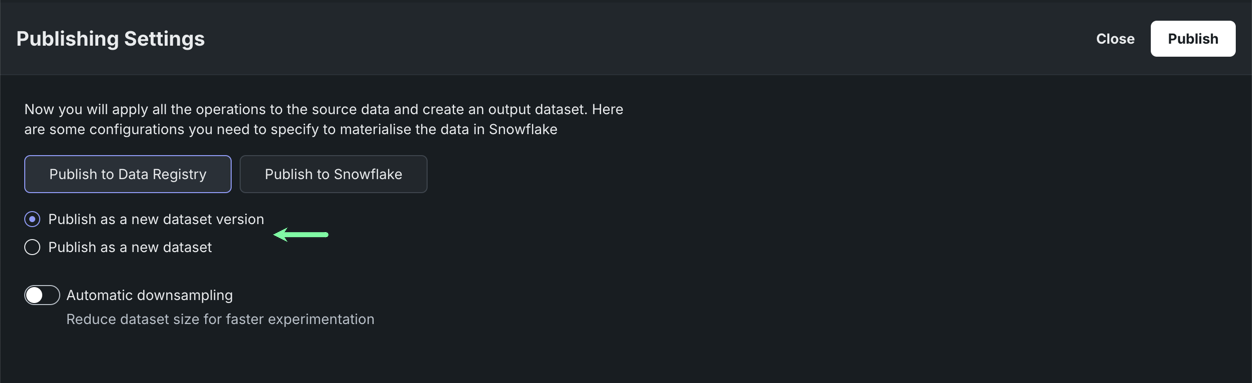

If you're publishing a recipe for a dataset that you've previously wrangled and published, there are two additional settings:

| Setting | Description |
|---|---|
| Publish as a new dataset version | When published, the output dataset is registered as a new version of the wrangled dataset. |
| Publish as a new dataset | When published, the output dataset is registered as a separate dataset. |

Read more

To learn more about the topics discussed on this page, see: