Model Leaderboard¶

| Tile | Description |
|---|---|
| | Opens a list of all built models and overview information for each, with access to the model's available insights. |

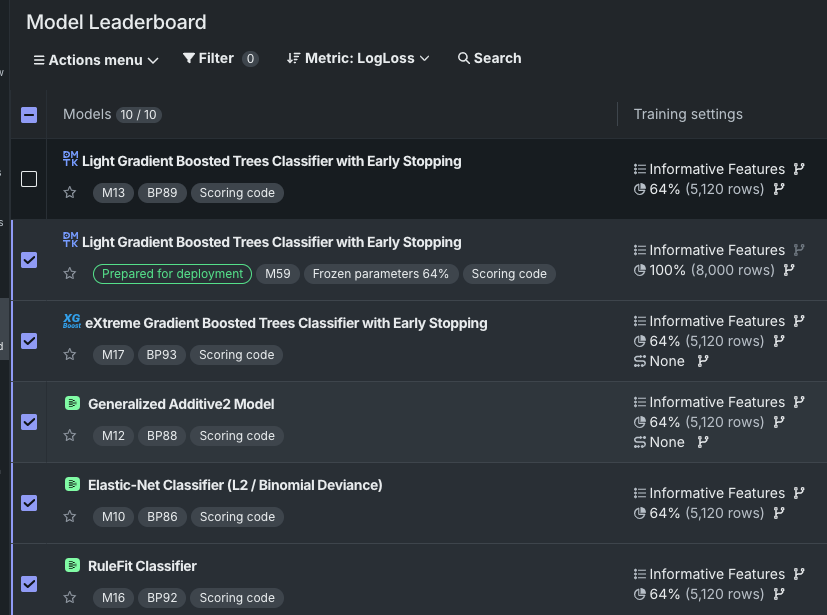

Once you start modeling, Workbench begins to construct a performance-ranked model Leaderboard to help with quick model evaluation. The Leaderboard provides a summary of information, including scoring information, for each model built in an experiment. From the Leaderboard, you can click a model to access visualizations for further exploration. Using these tools can help to assess what to do in your next experiment.

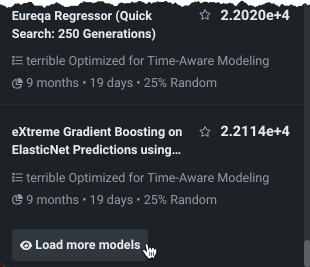

DataRobot populates the Leaderboard as it builds, initially displaying up to 50 models. Click Load more models to load 50 more models with each click.

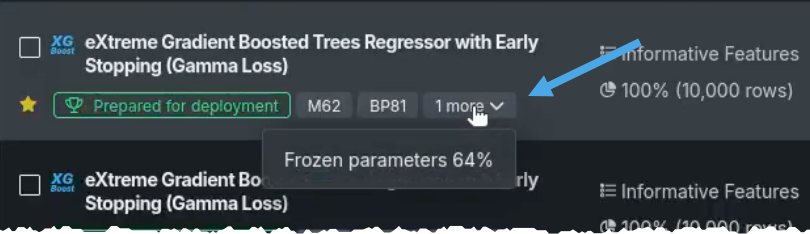

If you ran Quick mode, after Workbench completes the 64% sample size phase, the most accurate model is selected and trained on 100% of the data. That model is marked with the Prepared for Deployment badge.

Why isn't the prepared for deployment model at the top of the Leaderboard?

When Workbench prepares a model for deployment, it trains the model on 100% of the data. Although the most accurate model was selected for preparation, that selection was based on the 64% sample size. As part of preparing the model for deployment, Workbench unlocks Holdout, so the prepared model is trained on different data from the original. If you do not change the Leaderboard to sort by Holdout, the validation score in the left bar can make it appear as if the prepared model is not the most accurate.

Two elements make up the Leaderboard:

- The Leaderboard itself, a manageable listing of all built models in the experiment.

- A model overview page that provides summary information and access to model insights.

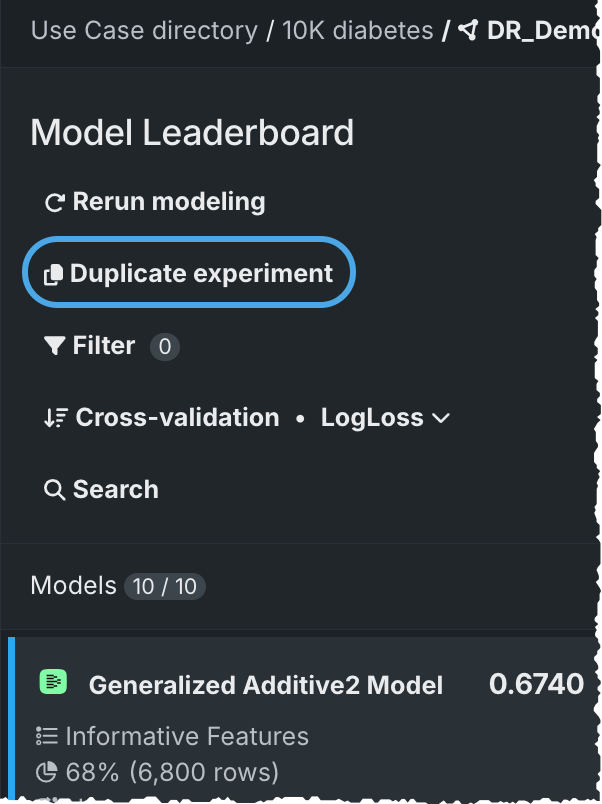

Model list¶

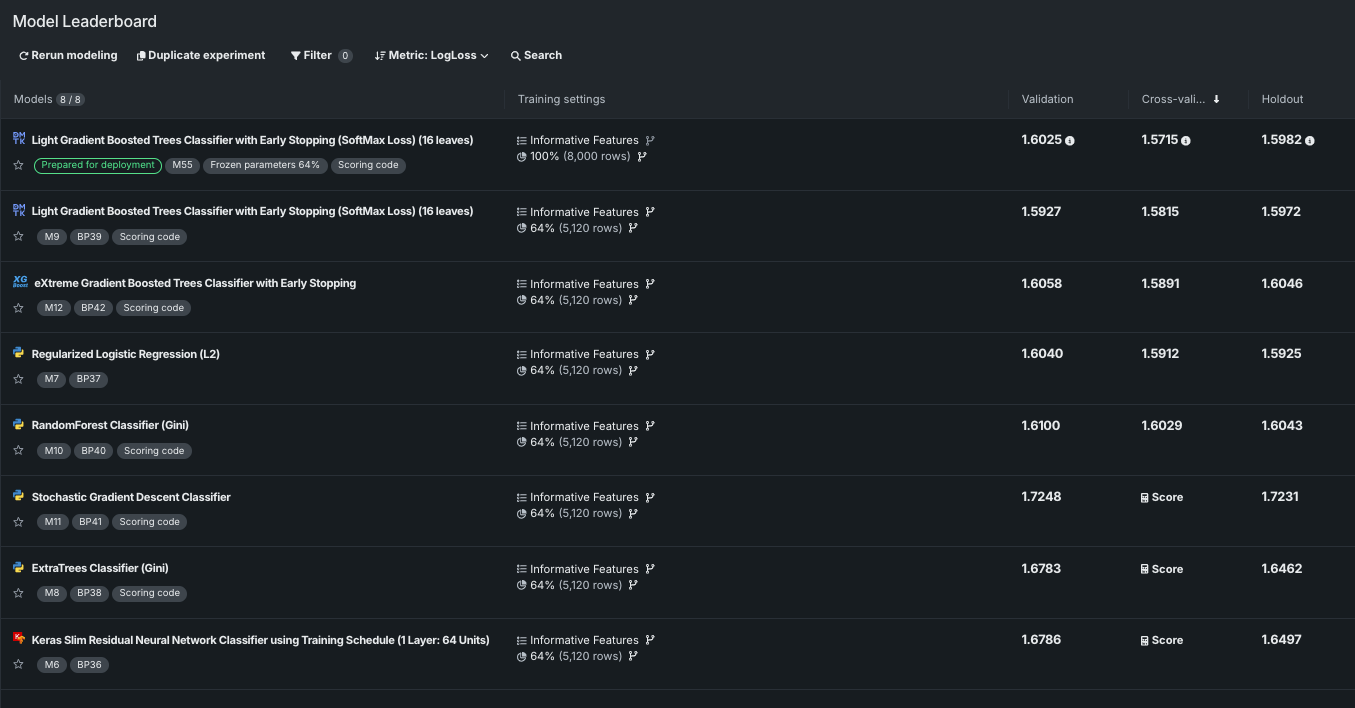

By default, the Leaderboard opens in an expanded, full-width view that shows all models in the experiment with a summary of their training settings and the validation, cross-validation, and holdout scores. Badges provide quick model identifying and scoring information while icons in front of the model name indicate model type.

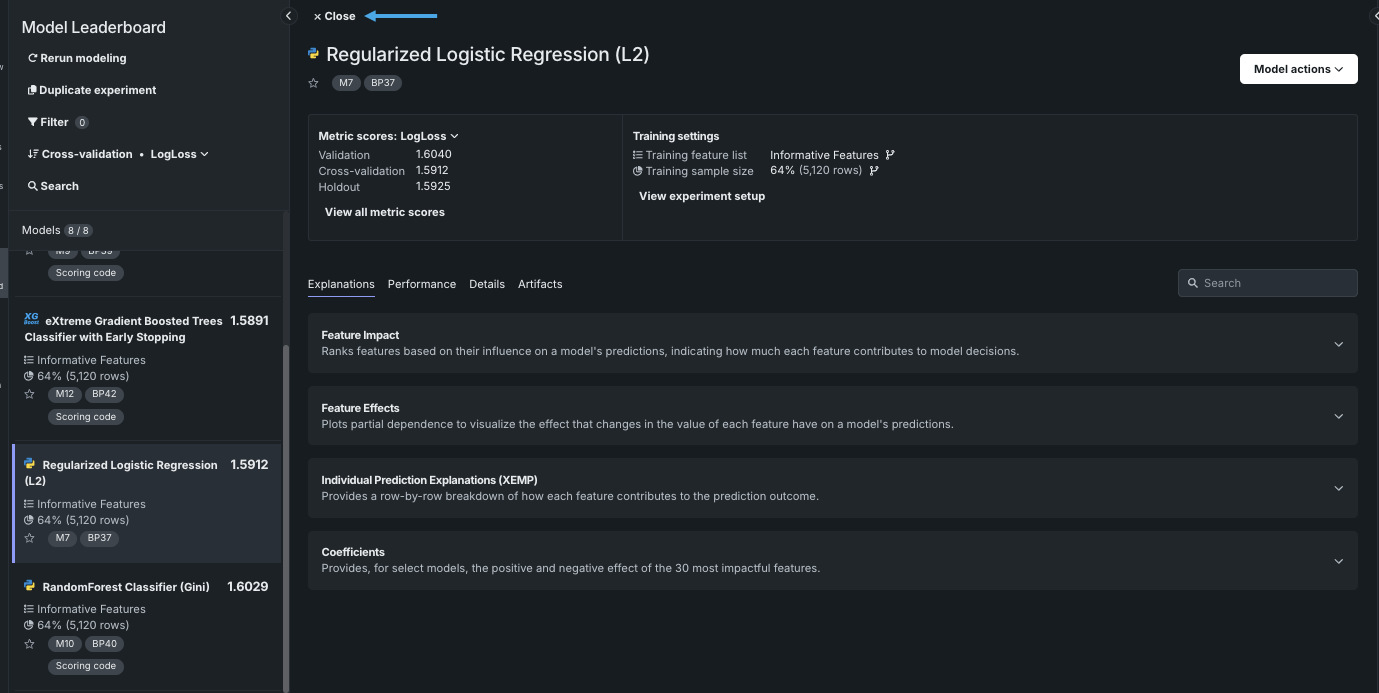

Click any model to show additional scoring information and to access the model insights. Click Close to return to the full-width view.

If a model has more badges than the Leaderboard can display, use the dropdown:

Model list display¶

The Leaderboard offers several ways to narrow and reorder the model list so that relevant models are easier to find. In addition to using the search function, you can filter, sort, and "favorite" models.

Search¶

Combine any of the filters with search filtering. First, search for a model type or blueprint number, for example, and then select Filters to find only those models of that type meeting the additional criteria.

Model filtering¶

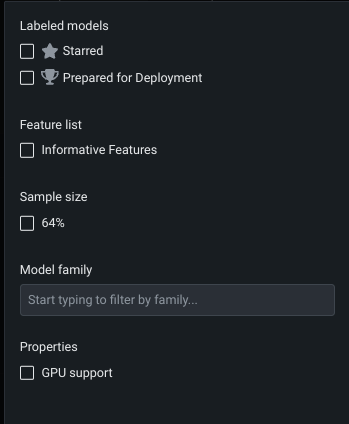

Filtering makes viewing and focusing on relevant models easier. Click Filter to set the criteria for the models that Workbench displays on the Leaderboard. The choices available for each filter depend on the experiment and/or model type: only values used in at least one Leaderboard model are listed, so the choices can change as models are added to the experiment. For example:

| Filter | Displays models that |
|---|---|
| Labeled models | Have been assigned the listed tag, either starred models or models recommended for deployment. |
| Feature list | Were built with the selected feature list. |
| Sample size (random or stratified partitioning) | Were trained on the selected sample size. |
| Training period (date/time partitioning) | Were trained on backtests defined by the selected duration mechanism. |
| Model family | Are part of the selected model family. |
| Properties | Were built using GPUs. |

Available fields, and the settings for each field, depend on the project and/or model type. For example, non-date/time models offer sample size filtering while time-aware models offer training period filtering.

Note

Filters are inclusive. That is, results show models that match any of the filters, not all filters. Also, options available for selection only include those in which at least one model matching the criteria is on the Leaderboard.
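The inclusive ("match any") behavior described in the note can be illustrated with a minimal Python sketch. This is not DataRobot code: the `Model` class, its fields, and the sample values are hypothetical and exist only to show OR-style filter logic.

```python
from dataclasses import dataclass


@dataclass
class Model:
    # Hypothetical stand-in for a Leaderboard entry.
    name: str
    feature_list: str
    sample_pct: int
    starred: bool = False


def matches_any(model, filters):
    """Return True if the model satisfies at least one active filter (OR logic)."""
    if not filters:
        return True  # no filters selected: show every model
    return any(check(model) for check in filters)


models = [
    Model("Model A", "Informative Features", 64, starred=True),
    Model("Model B", "Informative Features", 32),
    Model("Model C", "Raw Features", 16),
]

# Two active filters: "starred" and "sample size is 32%".
filters = [
    lambda m: m.starred,
    lambda m: m.sample_pct == 32,
]

shown = [m.name for m in models if matches_any(m, filters)]
print(shown)  # ['Model A', 'Model B']
```

Model A appears because it is starred and Model B because of its sample size, even though neither satisfies both filters.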

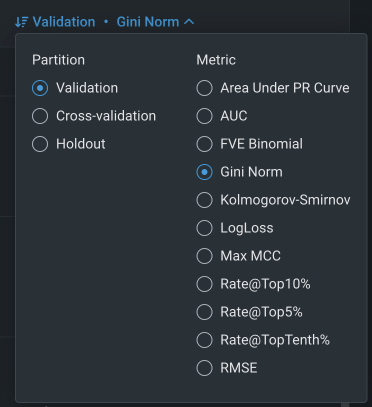

Model sorting¶

By default, the Leaderboard sorts models based on the score of the validation partition, using the selected optimization metric. You can, however, use the Sort models by control to change the basis of the ordering when evaluating models.

Note that although Workbench built the project using the most appropriate metric for your data, it computes many applicable metrics on each of the models. After the build completes, you can redisplay the Leaderboard listing based on a different metric. Doing so does not change any values within the models; it simply reorders the model listing based on performance on the alternate metric.
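As a rough illustration of reordering without changing values, the following Python sketch sorts the same set of scores by two different metrics. The model names, scores, and the `HIGHER_IS_BETTER` table are made up for the example and are not taken from DataRobot.

```python
# Hypothetical validation scores for three models.
leaderboard = [
    {"model": "Model A", "LogLoss": 0.41, "AUC": 0.88},
    {"model": "Model B", "LogLoss": 0.39, "AUC": 0.86},
    {"model": "Model C", "LogLoss": 0.45, "AUC": 0.90},
]

# Direction of each metric: LogLoss is an error (lower is better),
# AUC is a score (higher is better).
HIGHER_IS_BETTER = {"LogLoss": False, "AUC": True}


def sort_by(rows, metric):
    """Return a new list ordered best-first by the given metric."""
    return sorted(rows, key=lambda r: r[metric], reverse=HIGHER_IS_BETTER[metric])


by_logloss = [r["model"] for r in sort_by(leaderboard, "LogLoss")]
by_auc = [r["model"] for r in sort_by(leaderboard, "AUC")]

print(by_logloss)  # ['Model B', 'Model A', 'Model C']
print(by_auc)      # ['Model C', 'Model A', 'Model B']
```

Because `sorted` returns a new list, the underlying scores are untouched; only the display order differs between metrics.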

See the page on optimization metrics for detailed information on each.

Filter by favorites¶

Tag or "star" one or more models on the Leaderboard, making it easier to refer back to them when navigating through the application. Click to star a model, and then use Filter to show only starred models.

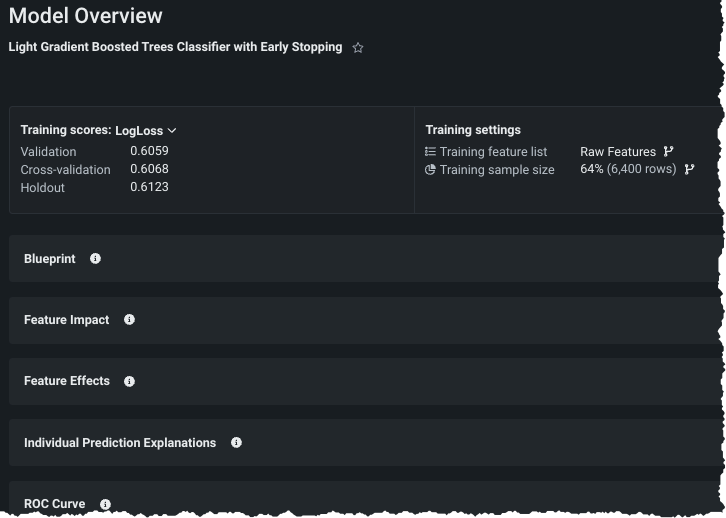

Model Overview¶

When you select a model from the Leaderboard listing, it opens to the Model Overview where you can:

- See specific details about metric scores and settings.

- Retrain models on new feature lists or sample sizes. Note that you cannot change the feature list on the model prepared for deployment as it is "frozen".

- Access model insights.

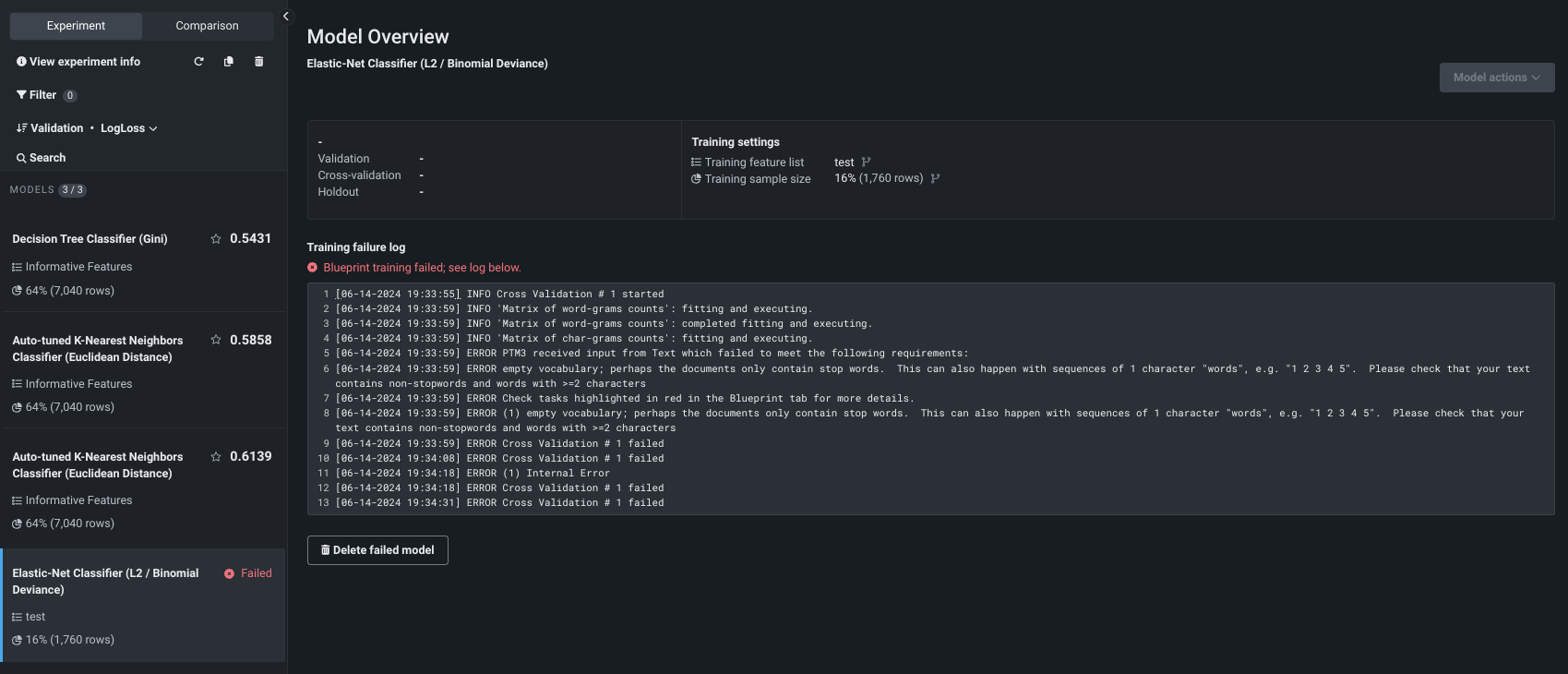

Model build failure¶

If a model fails to build, the failure is shown in the job queue as Autopilot runs. Once Autopilot completes, the failed model(s) are still listed on the Leaderboard, but the entry indicates the failure. Click the model to display a log of the issues that resulted in the failure.

Use the Delete failed model button to remove the model from the Leaderboard.

Experiment tools¶

In addition to the model access available from the Leaderboard, you can also:

- Create blender (ensemble) models to increase accuracy by combining model predictions.

- Duplicate the experiment.

- Use Rerun modeling to rerun Autopilot with a different feature list, a different modeling mode, or additional automation settings.

Blend models¶

A blender model can increase accuracy by combining the predictions of two to eight models.

Optimizing for response time

To improve response times for blender models, DataRobot stores predictions for all models trained at the highest sample size used by Autopilot (typically 64%) and creates blenders from those results. Storing only the largest sample size (and therefore predictions from the best-performing models) limits the disk space required.

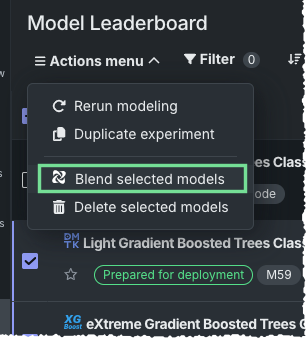

To create a blender model:

-

From the Leaderboard, select at least two, and up to eight, models to blend.

-

From the Actions menu, select Blend selected models. The option will not be available if:

- The experiment is an excluded model or experiment type.

- You have selected too many models to blend.

- Selected models have different forecast distances (time-aware experiments).

- Model actions are not supported for your role.

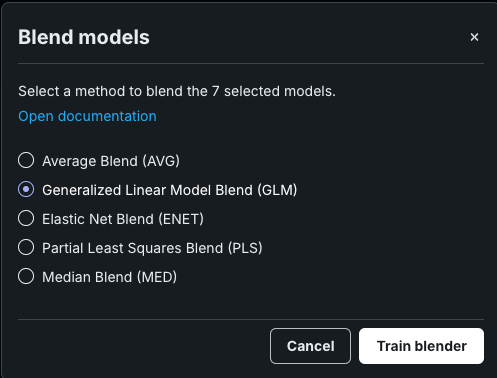

-

The Blend models modal opens, providing a list of methods that are supported for the selected models. Select a method to create a new blender model from each selected Leaderboard model, then click to train. See the blender method reference for information on the methods available.

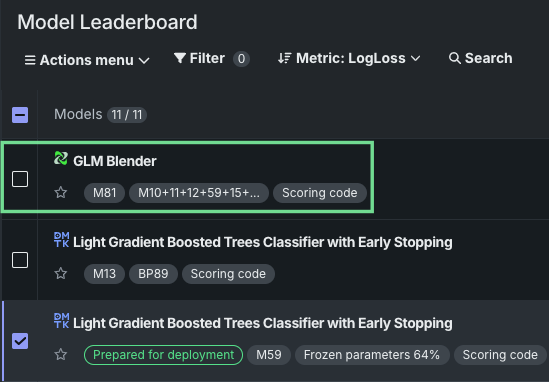

When training is complete, the new blended model displays in the list on the Leaderboard.
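The selection rules from the steps above (two to eight models, and matching forecast distances in time-aware experiments) can be sketched as a small check. The `can_blend` function, the dictionary layout, and the `forecast_distances` key are hypothetical names chosen for illustration, not part of any DataRobot API.

```python
MIN_MODELS, MAX_MODELS = 2, 8  # limits stated in the steps above


def can_blend(selected, time_aware=False):
    """Return (ok, reason) for a list of hypothetical model dicts."""
    if len(selected) < MIN_MODELS:
        return False, "select at least two models"
    if len(selected) > MAX_MODELS:
        return False, "too many models selected"
    if time_aware:
        # Every selected model must cover the same forecast distances.
        distances = {tuple(m["forecast_distances"]) for m in selected}
        if len(distances) > 1:
            return False, "models have different forecast distances"
    return True, "ok"


same = [{"forecast_distances": [1, 2, 3]}, {"forecast_distances": [1, 2, 3]}]
mixed = [{"forecast_distances": [1, 2, 3]}, {"forecast_distances": [1, 2]}]

print(can_blend(same, time_aware=True))   # (True, 'ok')
print(can_blend(mixed, time_aware=True))  # (False, 'models have different forecast distances')
print(can_blend(same[:1]))                # (False, 'select at least two models')
```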

Blend methods¶

The table below lists blend methods with a short description and their experiment type availability.

Available blender methods

| Method | Description |
|---|---|
| Average Blend (AVG) | Combine predictions from multiple base models by taking the simple arithmetic mean of their outputs (for regression) or the average of predicted probabilities (for classification). |
| ElasticNet Blend (ENET) | Learn optimal weights for combining, rather than averaging, predictions from multiple base models. ElasticNet's L1 and L2 regularization help select the most valuable models while preventing overfitting. |
| Generalized Linear Model Blend (GLM) | Combine predictions from multiple base models, where the base model predictions serve as input features and the GLM learns linear weights. |
| Maximum Blend (MAX) | Combine predictions from multiple base models by taking the maximum value of their outputs (for regression) or the maximum of predicted probabilities (for classification). No weights are learned. |
| Mean Absolute Error (MAE) | Learn optimal weights for combining base model predictions by minimizing the Mean Absolute Error loss function during blending. This differs from typical squared-error-based blenders by being more robust to outliers. |
| Mean Absolute Error 1 (MAE1) | Learn optimal weights for combining base model predictions by minimizing the Mean Absolute Error loss function during blending and apply L1 regularization. This differs from typical squared-error-based blenders by being more robust to outliers. |
| Minimum Blend (MIN) | Combine predictions from multiple base models by taking the minimum value of their outputs (for regression) or the minimum of predicted probabilities (for classification). No weights are learned. |
| Median Blend (MED) | Combine predictions from multiple base models by taking the median value of their outputs (for regression) or the median of predicted probabilities (for classification). MED Blend is more robust to outliers than mean-based blending because the median is less sensitive to extreme predictions. |
| Partial Least Squares Blend (PLS) | Use PLS regression to combine predictions from multiple base models by finding linear combinations of the base model predictions that maximize covariance with the target variable. Unlike simple linear blending that treats base model predictions as independent features, PLS specifically looks for latent components that capture the shared predictive information across models while being maximally correlated with the target. |
| Average Blend by Forecast Distance | Time-aware. Create a model that blends, for each forecast distance, the average of each model's predictions. |
| ENET Blend by Forecast Distance | Time-aware. Create a model that fits, for each forecast distance, an ElasticNet model to the predictions of the selected models. |

Note

DataRobot has special logic in place for natural language processing (NLP) and image fine-tuner models. For example, fine-tuners do not support stacked predictions. As a result, when blending a combination of stacked and non-stack-enabled models, the available blender methods are: AVG, MED, MIN, or MAX. DataRobot does not support other methods in this case because they may introduce target leakage.
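The four methods named in the note need no training step, which is why they cannot leak the target. The sketch below shows what such row-wise aggregation might look like, assuming MIN and MAX take the per-row minimum and maximum of the base model predictions. The `blend` function and the sample predictions are illustrative only and do not reflect DataRobot's implementation.

```python
from statistics import mean, median


def blend(predictions, method):
    """Combine per-model prediction lists row by row.

    predictions: one list per base model, all of equal length.
    method: one of "AVG", "MED", "MIN", "MAX".
    """
    combine = {"AVG": mean, "MED": median, "MIN": min, "MAX": max}[method]
    # zip(*predictions) groups the predictions that each model made for the same row.
    return [combine(row) for row in zip(*predictions)]


# Hypothetical predictions from three base models on three rows.
preds = [
    [0.2, 0.9, 0.4],
    [0.4, 0.7, 0.4],
    [0.9, 0.8, 0.1],
]

for method in ("AVG", "MED", "MIN", "MAX"):
    print(method, blend(preds, method))
```

None of these aggregations looks at the target, in contrast to weighted methods such as ENET or GLM, which fit coefficients against it.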

Feature considerations¶

The following model types or circumstances prevent a model from inclusion in a blender:

- Model features not supported in NextGen (for example, rating tables and custom tasks).

- Extended multiclass with more than 10 classes and multilabel experiments.

- Unsupervised predictive or time-aware clustering experiments.

- Blender models (those models that resulted from a previous blender action).

- Custom models, created in Registry's workshop.

- Failed models.

- Deprecated and disabled models.
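The model-level exclusions in the list above amount to a simple eligibility test. A minimal sketch follows, assuming each model carries boolean flags. The `Candidate` class and its field names are hypothetical, and the experiment-level exclusions (such as multilabel or clustering experiments) are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical flags mirroring the model-level exclusions listed above.
    name: str
    is_blender: bool = False
    is_custom: bool = False
    failed: bool = False
    deprecated: bool = False


def eligible_for_blending(model):
    """A model qualifies only if none of the exclusion flags is set."""
    return not (model.is_blender or model.is_custom or model.failed or model.deprecated)


candidates = [
    Candidate("Model A"),
    Candidate("AVG Blender", is_blender=True),
    Candidate("Model B", failed=True),
    Candidate("Model C"),
]

eligible = [c.name for c in candidates if eligible_for_blending(c)]
print(eligible)  # ['Model A', 'Model C']
```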

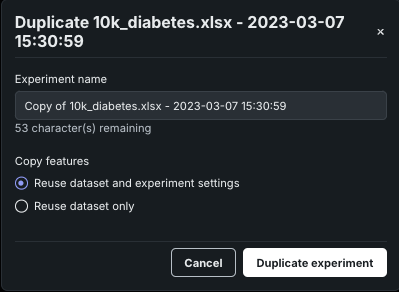

Duplicate experiments¶

Use the link at the top of the Leaderboard to duplicate the current experiment. This creates a new experiment and can be a faster method to work with your data than re-uploading it.

When you click to duplicate, a modal opens with an option to provide a new experiment name. Then, select whether to copy only the dataset or to copy the dataset and experiment settings. If you choose to include settings, DataRobot clones the target as well as any advanced settings and custom feature lists associated with the original project.

When complete, DataRobot opens to the new experiment setup page where you can begin the model building process.