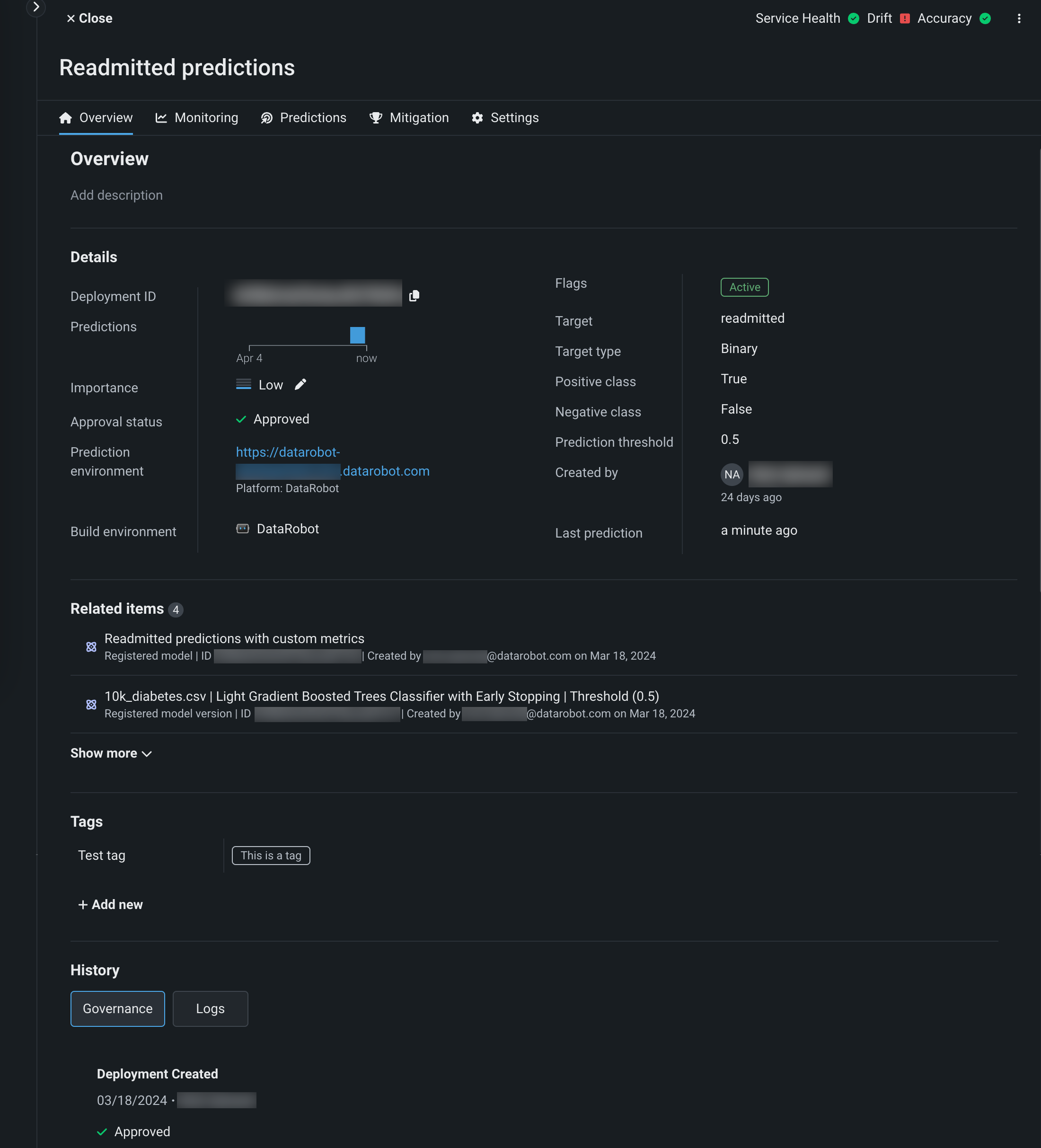

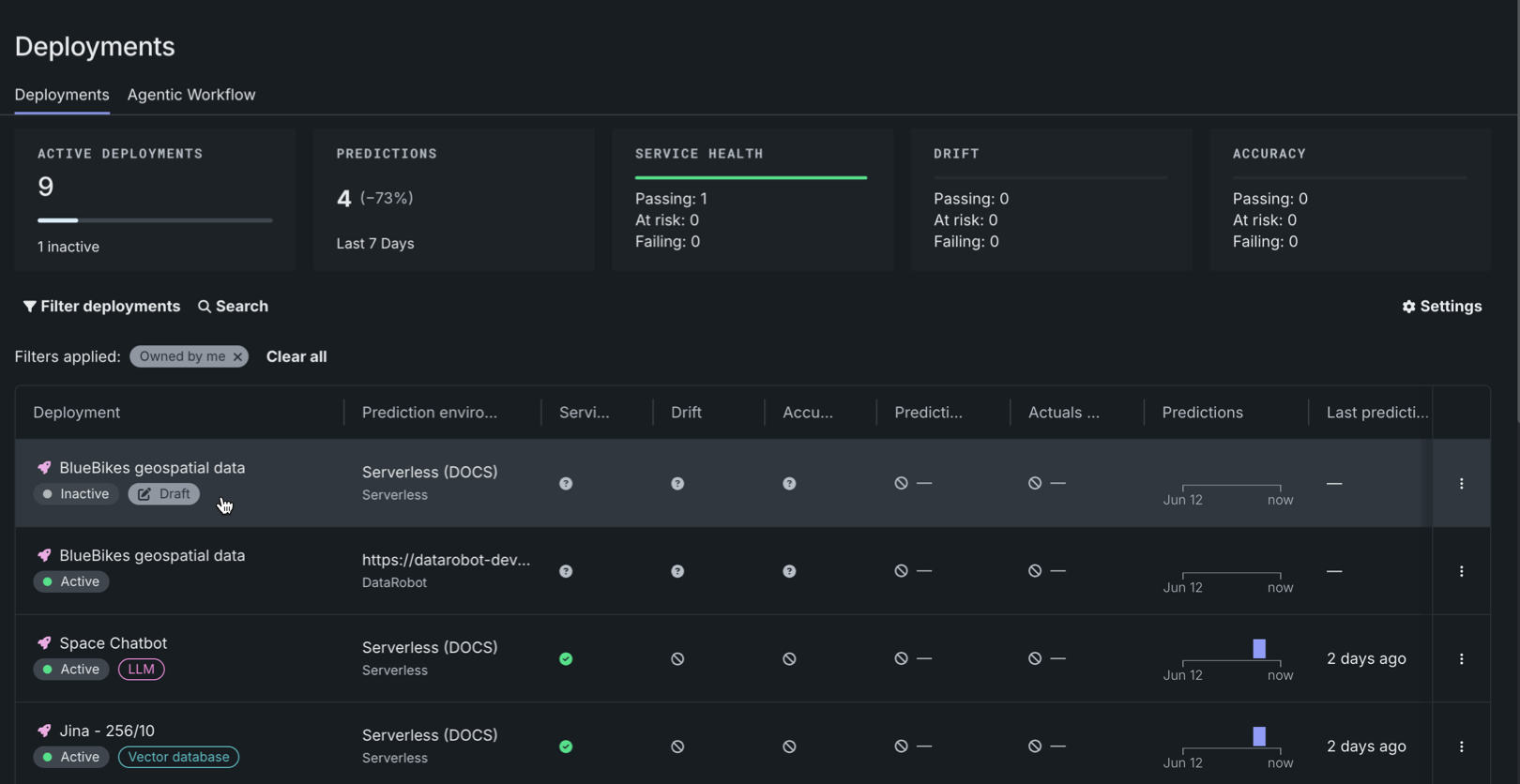

Deployment overview

When you select a deployment from the Deployments dashboard, DataRobot opens the Overview page for that deployment. The Overview page provides a model- and environment-specific summary that describes the deployment, including the information you supplied when creating the deployment and any model replacement activity.

Details

The Details section of the Overview tab lists information about the deployment, including details specific to the deployment's model and environment. At the top of the Overview page, you can view the deployment name and description; click the edit icon to update this information.

Note

The information included in this list differs for deployments using custom models and external environments. It can also vary by target type.

| Field | Description |
|---|---|
| Deployment ID | The ID of the current deployment. Click the copy icon to copy it to your clipboard. |
| Predictions | A visual representation of the relative prediction frequency, per day, over the past week. |
| Importance | The importance level assigned during deployment creation. Click the edit icon to update the deployment importance. |
| Approval status | The deployment's approval policy status for governance purposes. |
| Prediction environment | The environment on which the deployed model makes predictions. |
| Build environment | The build environment used by the deployment's current model (e.g., DataRobot, Python, R, or Java). |
| Flags | Indicators of deployment metadata, including deployment status (Active, Inactive, Errored, Warning, or Launching) and deployment type (Batch or LLM). |
| Target type | The type of prediction the model makes. For binary classification deployments, you can also see the Positive Class, Negative Class, and Prediction Threshold. |
| Target | The feature name of the target used by the deployment's current model. |
| Modeling features | The features included in the model's feature list. Click View details to review the list of features sorted by importance. |
| Created by | The name of the user who created the model. |
| Last prediction | The number of days since the last prediction. Hover over the field to see the full date and time. |
| Custom model information | |
| Custom model | The name and version of the custom model registered and deployed from the workshop. |
| Custom environment | The name and version of the custom model environment on which the registered custom model runs. |
| Resource bundle | Preview feature. The CPU or GPU bundle selected for the custom model in the resource settings. |
| Resource replicas | Preview feature. The number of replicas defined for the custom model in the resource settings. |
| External model information | |
| Deployment Console URL | The URL of the deployment in the NextGen Console. |
| External Predictions URL | The URL of the external prediction environment for the external model. |
| Generative model information | |
| Target | The feature name of the target column used by the deployment's current generative model. This column contains the generative model's response to a prompt, for example, resultText, answer, or completion. |
| Prompt column name | The feature name of the prompt column used by the deployment's current generative model. This column contains the prompt the generative model responds to, for example, promptText, question, or prompt. |
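For binary classification deployments, the Prediction Threshold shown under Target type determines which class a predicted probability is labeled with. The following minimal sketch illustrates the idea, assuming that a probability at or above the threshold maps to the Positive Class (the tie-breaking rule is an assumption); the function name `assign_label` and the class labels are hypothetical and not part of a DataRobot API.

```python
def assign_label(probability: float, threshold: float,
                 positive_class: str, negative_class: str) -> str:
    """Map a positive-class probability to a class label (hypothetical helper)."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Assumption: a probability exactly at the threshold maps to the positive class.
    return positive_class if probability >= threshold else negative_class


# Example: Positive Class "yes", Negative Class "no", Prediction Threshold 0.5.
for p in (0.2, 0.5, 0.9):
    print(p, assign_label(p, threshold=0.5, positive_class="yes", negative_class="no"))
```

Raising the threshold labels fewer predictions with the positive class; lowering it labels more.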

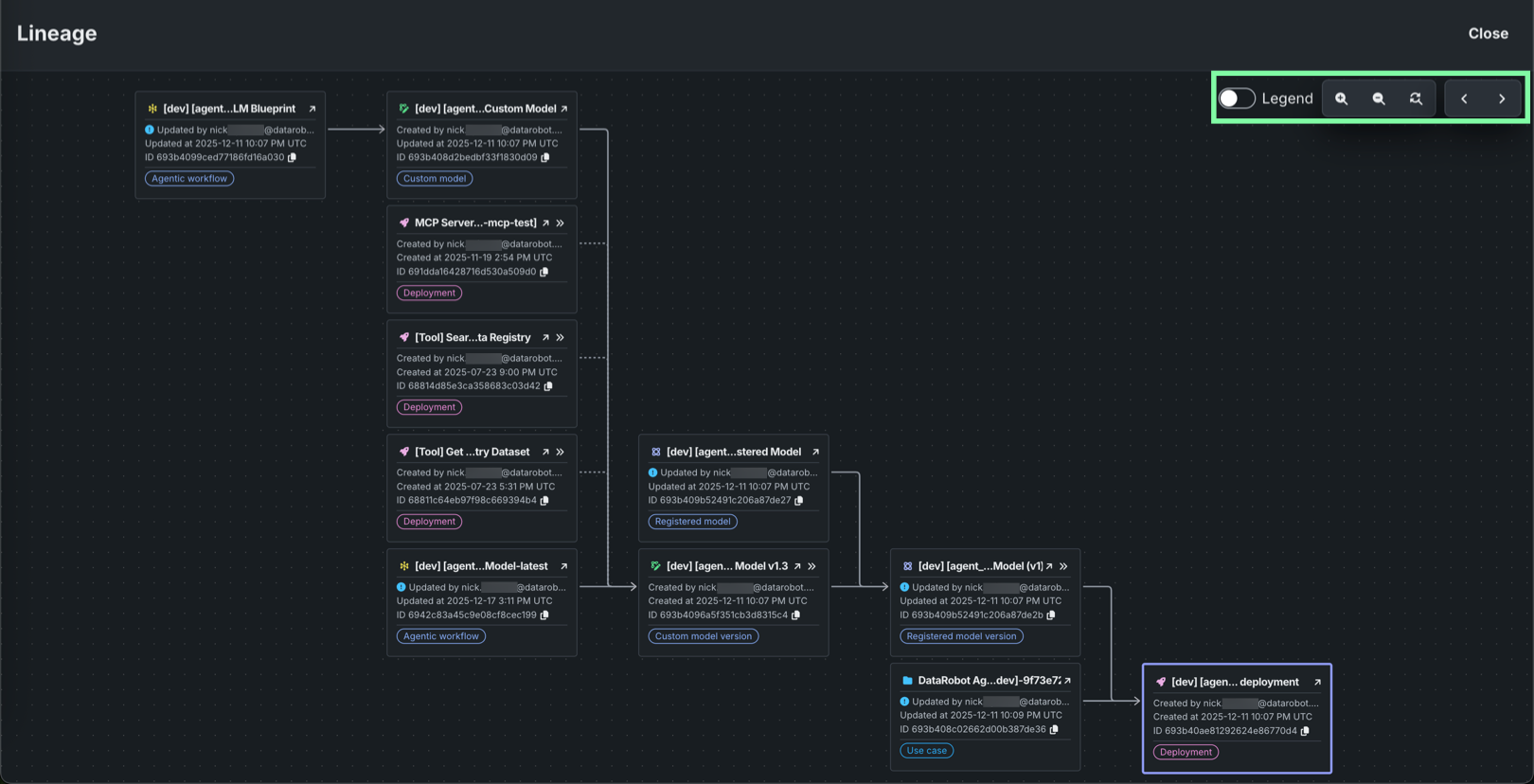

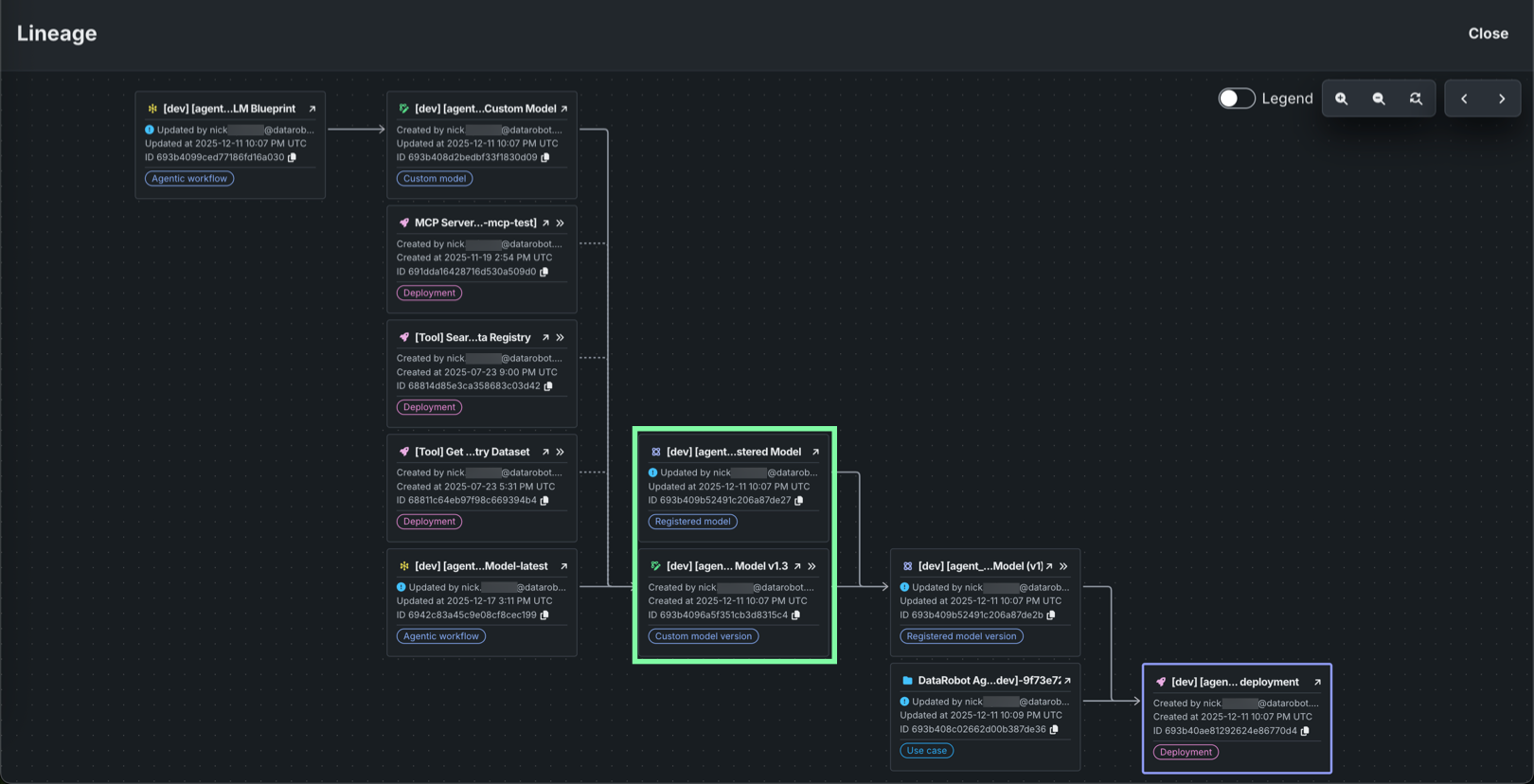

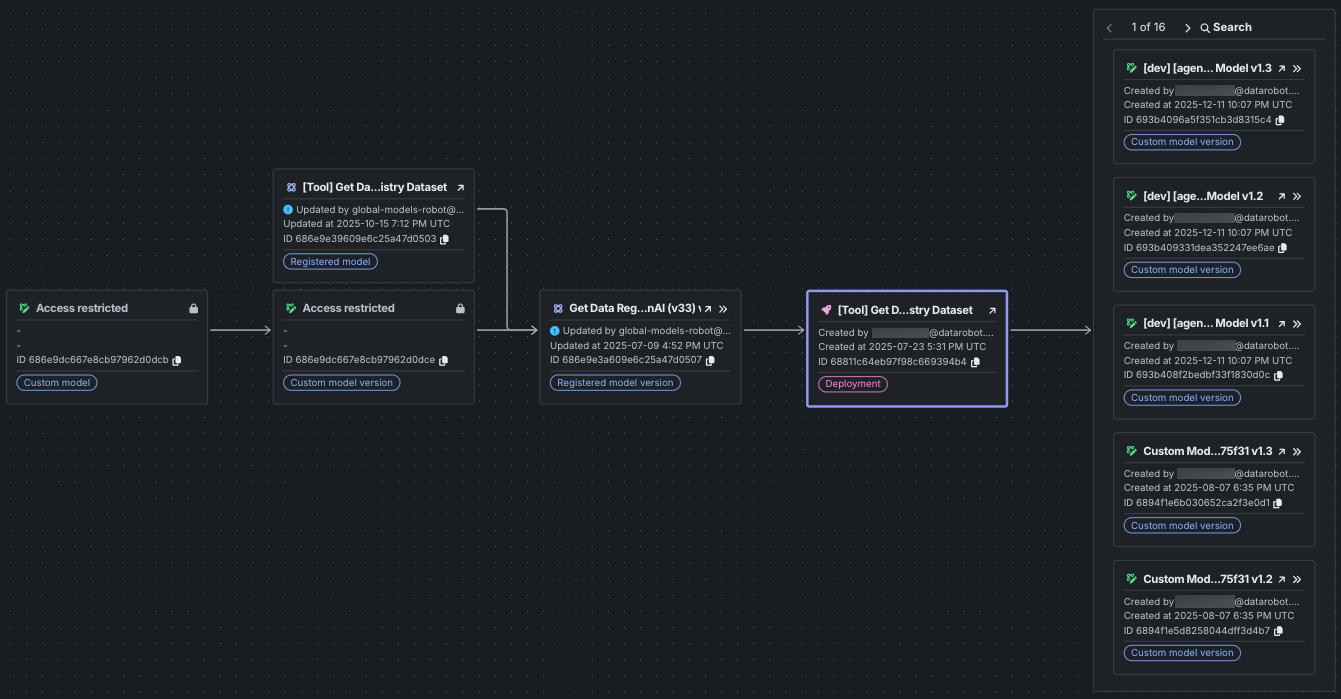

Lineage

The Lineage section provides visibility into the assets and relationships associated with a deployment. It helps you understand the complete context of a deployment, including the models, datasets, experiments, and other MLOps assets connected to it.

The Lineage section contains two tabs:

- Graph: An interactive, end-to-end visualization of the relationships and dependencies between MLOps assets. This directed acyclic graph (DAG) view helps you audit complex workflows, track asset lifecycles, and manage the components of agentic and generative AI systems. The graph displays nodes (assets) and edges (relationships), so you can explore connections and navigate the asset ecosystem.
- List: A list of the assets associated with a deployment, including registered models, model versions, experiments, datasets, and other related items. Each item displays its name, ID, creator, and creation date. Click View to open any related item, or use the list to quickly identify and access connected assets.

The Graph view serves as a central hub for reviewing your systems, presenting lineage as a DAG of nodes (assets) and edges (relationships).

When reviewing nodes, note that the asset you are currently viewing is distinguished by a purple outline. Nodes display key information such as ID, name (or version number), creator, and last modification details (user and date).

When reviewing edges, note that solid lines represent concrete, persistent relationships within the platform, such as a registered model used to create a deployment. Dashed lines indicate relationships inferred from runtime parameters; these are considered less reliable because they can change if a user modifies the underlying code or parameters. Arrows generally flow from the ancestor (or container) to the descendant (or content), e.g., from a registered model version to a deployment.
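To make the node and edge semantics concrete, the following sketch models a lineage graph as a list of directed edges and walks them backward to find every ancestor of an asset. It is a hypothetical data model, not DataRobot's implementation: the `Edge` and `LineageGraph` classes and the asset IDs are illustrative, and the `inferred` flag stands in for a dashed edge derived from runtime parameters.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Edge:
    ancestor: str            # container or upstream asset
    descendant: str          # content or downstream asset
    inferred: bool = False   # True for a dashed edge inferred from runtime parameters


@dataclass
class LineageGraph:
    edges: List[Edge] = field(default_factory=list)

    def ancestors(self, asset_id: str, include_inferred: bool = True) -> Set[str]:
        """Return every asset upstream of asset_id by following edges backward."""
        found: Set[str] = set()
        stack = [asset_id]
        while stack:
            current = stack.pop()
            for edge in self.edges:
                if edge.descendant != current:
                    continue
                if edge.inferred and not include_inferred:
                    continue  # skip the less reliable, runtime-parameter edges
                if edge.ancestor not in found:
                    found.add(edge.ancestor)
                    stack.append(edge.ancestor)
        return found


# Hypothetical asset IDs for a small lineage graph.
graph = LineageGraph([
    Edge("training-dataset", "experiment"),
    Edge("experiment", "registered-model-version"),
    Edge("registered-model-version", "deployment"),
    Edge("referenced-deployment", "deployment", inferred=True),
])
print(sorted(graph.ancestors("deployment")))
print(sorted(graph.ancestors("deployment", include_inferred=False)))
```

Excluding inferred edges gives a conservative view of lineage that relies only on the solid, persistent relationships.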

Inaccessible assets

If an asset exists but you do not have permission to view it, the node only displays the asset ID and is marked with an Asset restricted notice.

The graph is interactive, so you can explore your asset ecosystem in depth. To interact with the graph area, use the following controls:

Graph area navigation

To navigate the graph, click and drag the graph area. To control the zoom level, scroll up and down.

To interact with the related item nodes, use the following controls when they appear:

| Control | Description |
|---|---|
| New tab icon | Navigate to the asset in a new tab. |
| Fullscreen icon | Open a fullscreen view of the related items lineage graph centered on the selected asset node. |
| Copy icon | Copy the asset's associated ID. |

One-to-many list view

If an asset is used by many other assets (e.g., one dataset version used by many projects), the graph in the fullscreen view shows a preview of the 5 most recent items, and additional assets are viewable in a paginated, searchable list. If you don't have permission to view the ancestor of a paginated group, you can only view the 5 most recent items and can't change pages or search.
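The one-to-many behavior can be sketched as a preview plus a paginated, searchable list. In this minimal illustration, the `preview` and `list_page` helpers, the 10-item page size, zero-indexed pages, and case-insensitive substring search on names are all assumptions; only the five-item preview and the restriction for inaccessible ancestors come from the description above.

```python
from datetime import datetime
from typing import Dict, List

PREVIEW_SIZE = 5  # the graph previews the 5 most recent items


def newest_first(items: List[Dict]) -> List[Dict]:
    """Sort items by creation time, most recent first."""
    return sorted(items, key=lambda item: item["created"], reverse=True)


def preview(items: List[Dict]) -> List[Dict]:
    """Return the PREVIEW_SIZE most recent items."""
    return newest_first(items)[:PREVIEW_SIZE]


def list_page(items: List[Dict], page: int = 0, page_size: int = 10,
              search: str = "", ancestor_accessible: bool = True) -> List[Dict]:
    """Return one zero-indexed page of items whose names contain `search`.

    Without access to the ancestor, only the preview is returned and
    paging and search are ignored.
    """
    if not ancestor_accessible:
        return preview(items)
    matches = [item for item in items if search.lower() in item["name"].lower()]
    start = page * page_size
    return newest_first(matches)[start:start + page_size]


# Hypothetical: one dataset version used by 12 projects.
projects = [{"name": f"Project {n}", "created": datetime(2024, 1, n)} for n in range(1, 13)]
print([p["name"] for p in preview(projects)])
print([p["name"] for p in list_page(projects, page=1)])
print([p["name"] for p in list_page(projects, search="project 1")])
```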

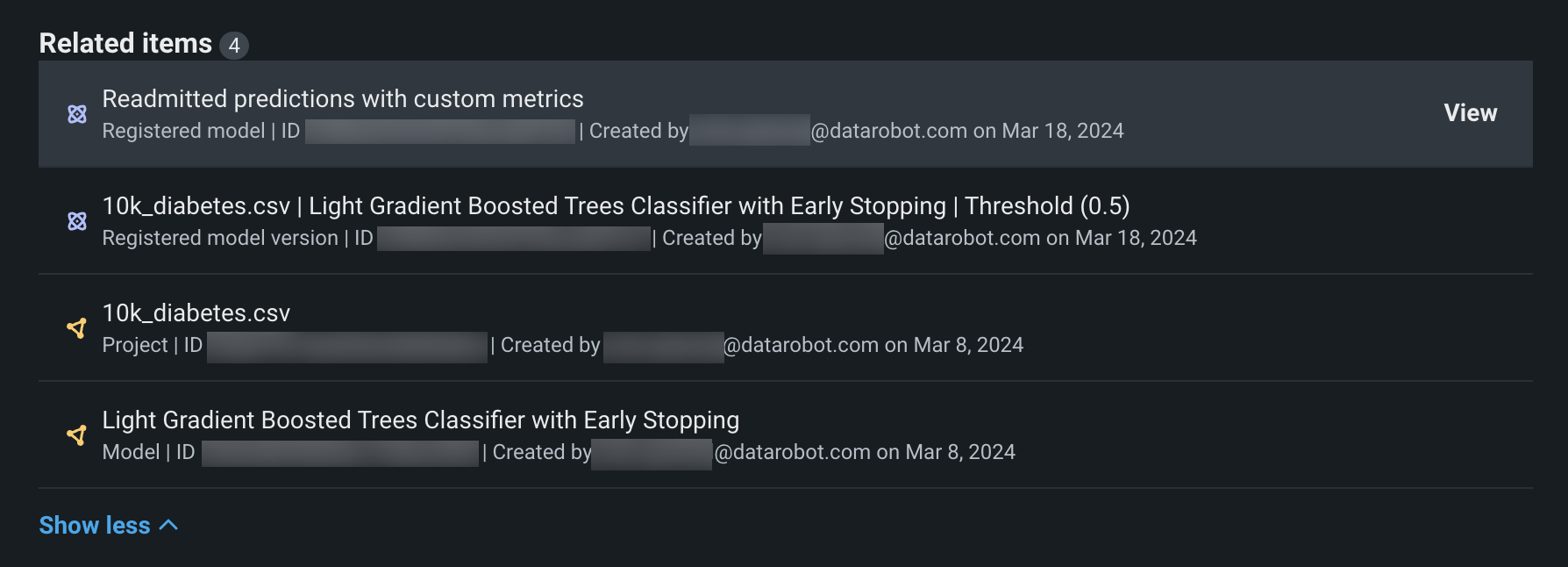

In the List view, click Show more to reveal all related items. The related items you can see depend on the type of model currently deployed (DataRobot NextGen, DataRobot Classic, custom, or external):

| Field | Description |
|---|---|
| Registered model | The name and ID of the registered model associated with the deployment. Click to open the registered model in Registry. |
| Registered model version | The name and ID of the registered model version associated with the deployment. Click to open the registered model version in Registry. |
| DataRobot NextGen model information | |
| Use Case | The name and ID of the Use Case in which the deployment's current model was created. Click to open the Use Case in Workbench. |
| Experiment | The name and ID of the experiment in which the deployment's current model was created. Click to open the experiment in Workbench. |
| Model | The name and ID of the deployment's current model. Click to open the model overview in a Workbench experiment. You can view the model ID of any models deployed in the past from the deployment logs (History > Logs). |
| Training dataset | The filename and ID of the training dataset used to create the currently deployed model. |
| DataRobot Classic model information | |
| Project | The name and ID of the project in which the deployment's current model was created. Click to open the project. |
| Model | The name and ID of the deployment's current model. Click to open the model blueprint. You can view the model ID of any models deployed in the past from the deployment logs (History > Logs). |
| Training dataset | The filename and ID of the training dataset used to create the currently deployed model. |
| Custom model information | |
| Custom model | The name, version, and ID of the custom model associated with the deployment. Click to open the custom model's Assemble tab in the workshop. |
| Custom model version | The version and ID of the custom model version associated with the deployment. Click to open the custom model's Versions tab in the workshop. |
| Training dataset | The filename and ID of the training dataset used to create the currently deployed custom model. |
| External model information | |
| Training dataset | The filename and ID of the training dataset used to create the currently deployed external model. |
| Holdout dataset | The filename and ID of the holdout dataset used for the currently deployed external model. |

Inaccessible related items

If you don't have access to a related item, a lock icon appears at the end of the item's row.

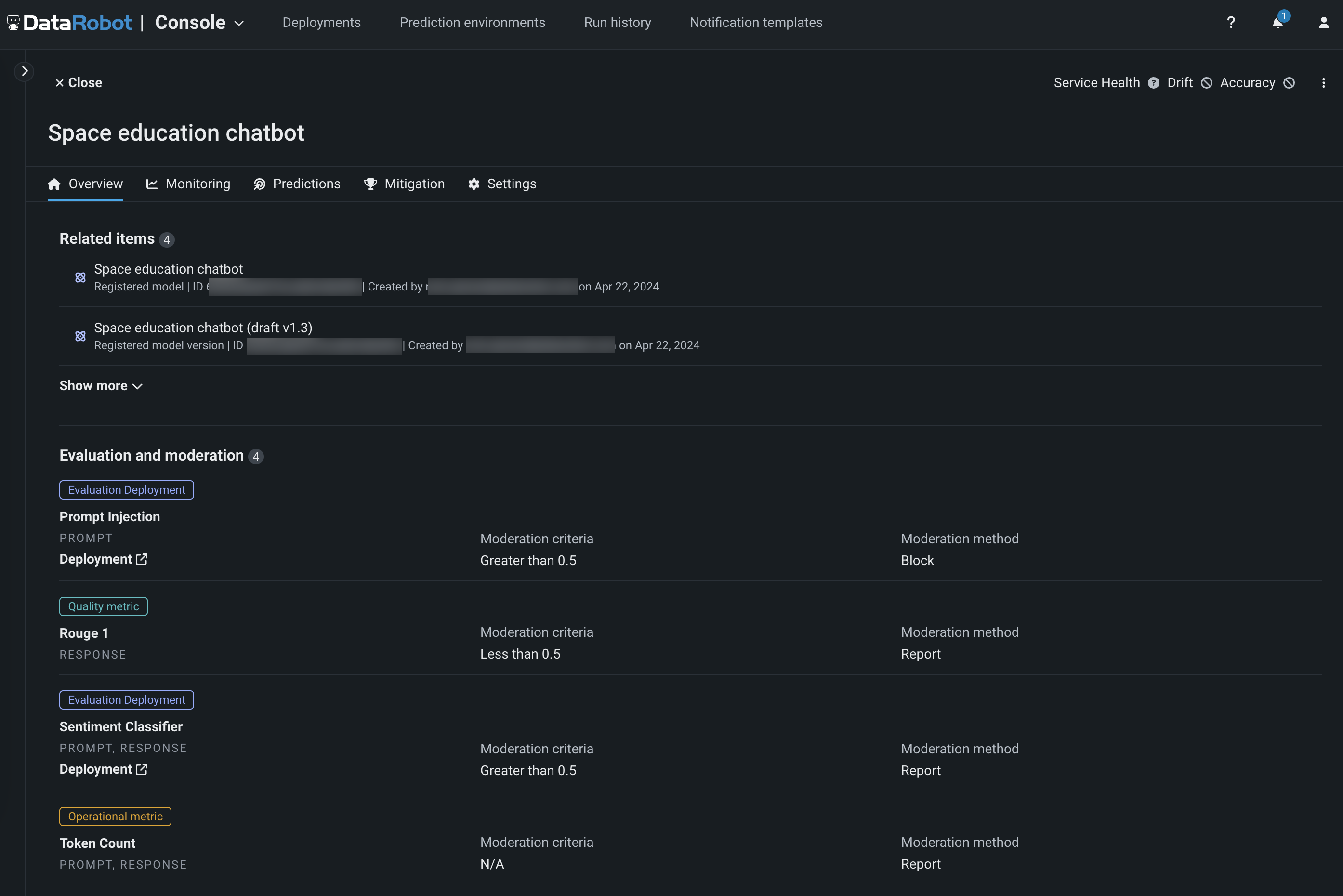

Evaluation and moderation

Availability information

Evaluation and moderation guardrails are a premium feature. Contact your DataRobot representative or administrator for information on enabling this feature.

Feature flags: Enable Moderation Guardrails (Premium), Enable Global Models in the Model Registry (Premium), Enable Additional Custom Model Output in Prediction Responses

When a text generation model with guardrails is registered and deployed, you can view the Evaluation and moderation section on the deployment's Overview tab:

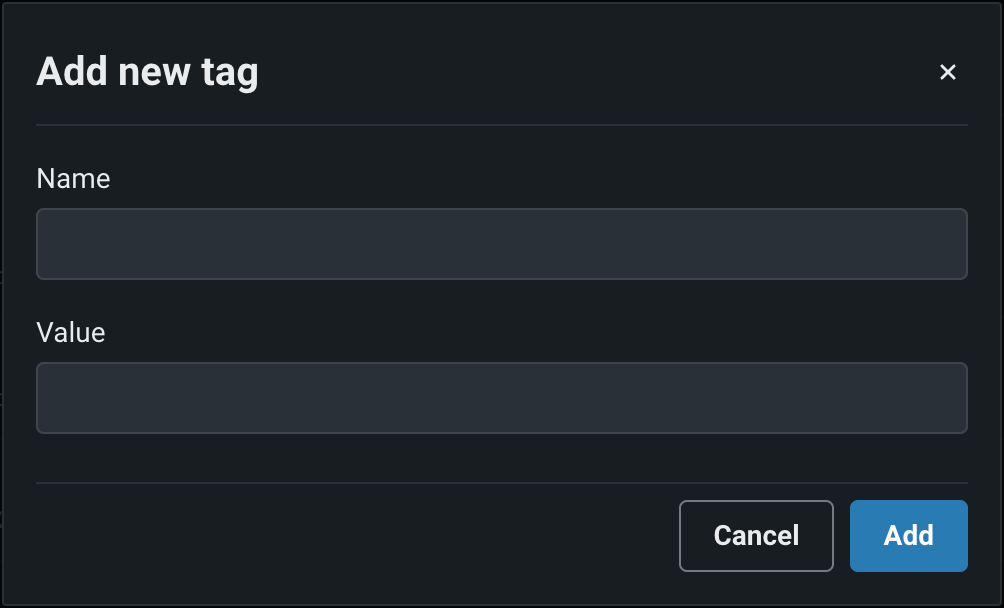

Tags

In the Tags section, click + Add new and enter a Name and a Value for each key-value pair you want to tag the deployment with. Deployment tags can help you categorize and search for deployments in the dashboard.
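Because tags are plain key-value pairs, filtering deployments by tag amounts to matching each requested Name and Value. The sketch below is hypothetical: the deployment records and the `filter_by_tags` helper are illustrative, and it assumes a deployment must match every requested pair, which may differ from how the dashboard search behaves.

```python
from typing import Dict, List


def filter_by_tags(deployments: List[Dict], required: Dict[str, str]) -> List[str]:
    """Return names of deployments tagged with every required Name/Value pair."""
    return [
        d["name"]
        for d in deployments
        if all(d["tags"].get(name) == value for name, value in required.items())
    ]


# Hypothetical deployments and tags.
deployments = [
    {"name": "churn-prod", "tags": {"team": "retention", "stage": "production"}},
    {"name": "churn-test", "tags": {"team": "retention", "stage": "staging"}},
    {"name": "fraud-prod", "tags": {"team": "risk", "stage": "production"}},
]
print(filter_by_tags(deployments, {"stage": "production"}))
print(filter_by_tags(deployments, {"team": "retention", "stage": "production"}))
```

Consistent tag names (for example, always `stage` rather than a mix of `stage` and `env`) keep this kind of filtering reliable.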

Runtime parameters

Preview

The ability to edit custom model runtime parameters on a deployment is on by default.

Feature flag: Enable Editing Custom Model Runtime-Parameters on Deployments

On a custom model deployment's Overview tab, you can access the Runtime parameters section. If the deployed custom model defines runtime parameters through runtimeParameterDefinitions in the model-metadata.yaml file, you can manage those parameters in this section. To do this, first make sure the deployment is inactive, then click Edit:

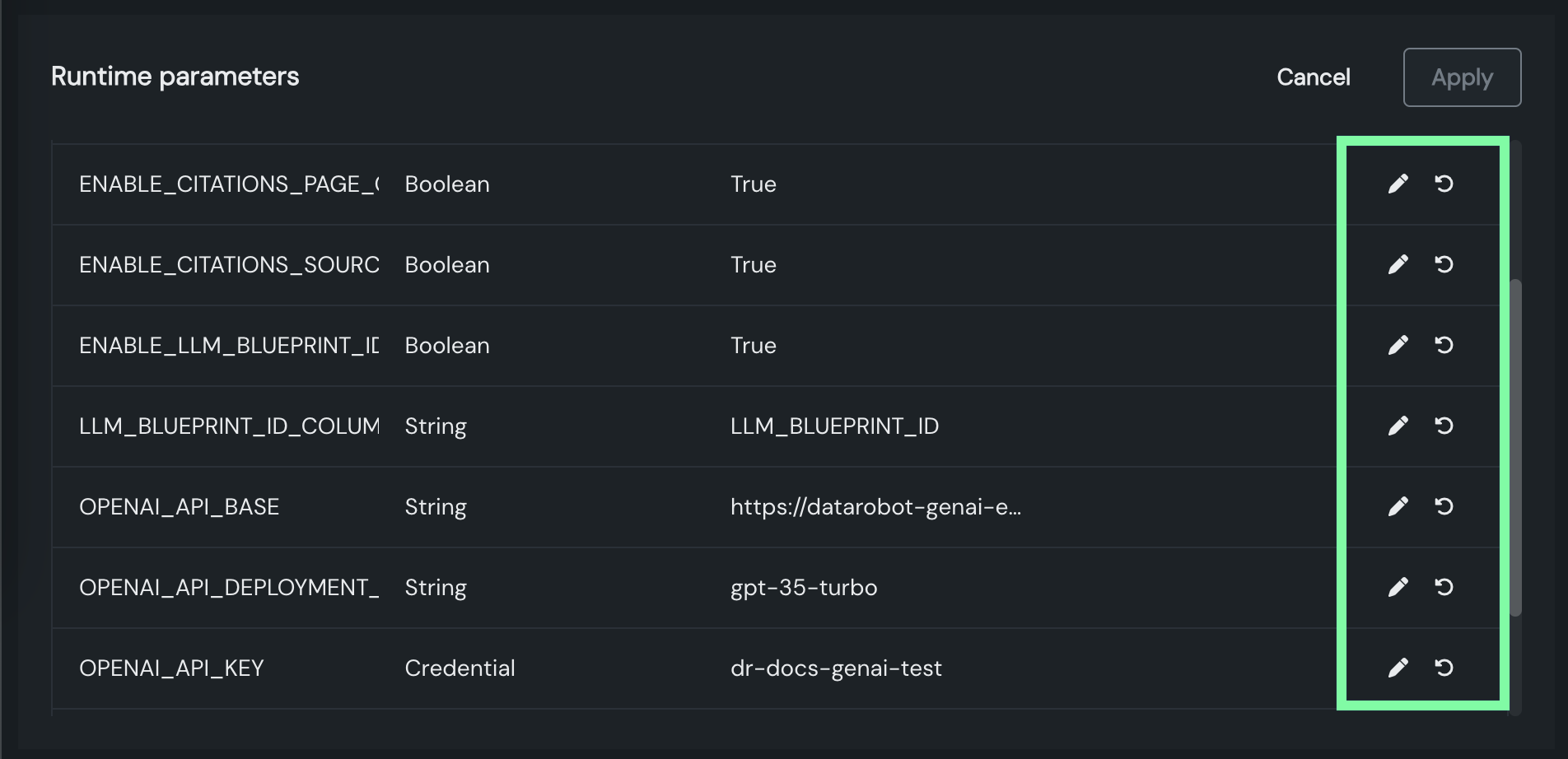

Each runtime parameter's row includes the following controls:

| Setting | Description |
|---|---|
| Edit | Open the Edit a Key dialog box to edit the runtime parameter's Value. |
| Reset to default | Reset the runtime parameter's Value to the defaultValue set in the model-metadata.yaml file (defined in the source custom model). |

After editing any runtime parameters, click Apply to save your changes.

For more information on how to define runtime parameters and use them in custom model code, see the Define custom model runtime parameters documentation.
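The Edit, Reset to default, and Apply controls can be sketched as a small editor that stages changes until they are applied. This is a hypothetical model, not DataRobot's implementation: `RuntimeParameterEditor`, the parameter names `TEMPERATURE` and `MAX_TOKENS`, and the string-only values are assumptions. The `defaults` mapping stands in for the defaultValue entries under runtimeParameterDefinitions in model-metadata.yaml, and editing is blocked while the deployment is active, as this section describes.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class RuntimeParameterEditor:
    """Hypothetical model of the Edit / Reset to default / Apply workflow."""
    defaults: Dict[str, str]                                # defaultValue per parameter
    applied: Dict[str, str] = field(default_factory=dict)   # values saved on the deployment
    pending: Dict[str, str] = field(default_factory=dict)   # edits not yet applied
    deployment_active: bool = False

    def __post_init__(self) -> None:
        # Parameters without a saved value use their default.
        self.applied = {**self.defaults, **self.applied}

    def edit(self, name: str, value: str) -> None:
        if self.deployment_active:
            raise RuntimeError("Make the deployment inactive before editing parameters.")
        if name not in self.defaults:
            raise KeyError(f"{name} is not defined in runtimeParameterDefinitions")
        self.pending[name] = value

    def reset_to_default(self, name: str) -> None:
        # Stage the parameter's defaultValue as a pending edit.
        self.edit(name, self.defaults[name])

    def apply(self) -> Dict[str, str]:
        """Save pending edits (the Apply button) and return the resulting values."""
        self.applied.update(self.pending)
        self.pending.clear()
        return dict(self.applied)


editor = RuntimeParameterEditor(
    defaults={"TEMPERATURE": "0.7", "MAX_TOKENS": "256"},
    applied={"MAX_TOKENS": "1024"},  # a previously edited value
)
editor.edit("TEMPERATURE", "0.2")
editor.reset_to_default("MAX_TOKENS")
print(editor.apply())
```

Staging edits separately from applied values mirrors the requirement to click Apply before any change takes effect.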

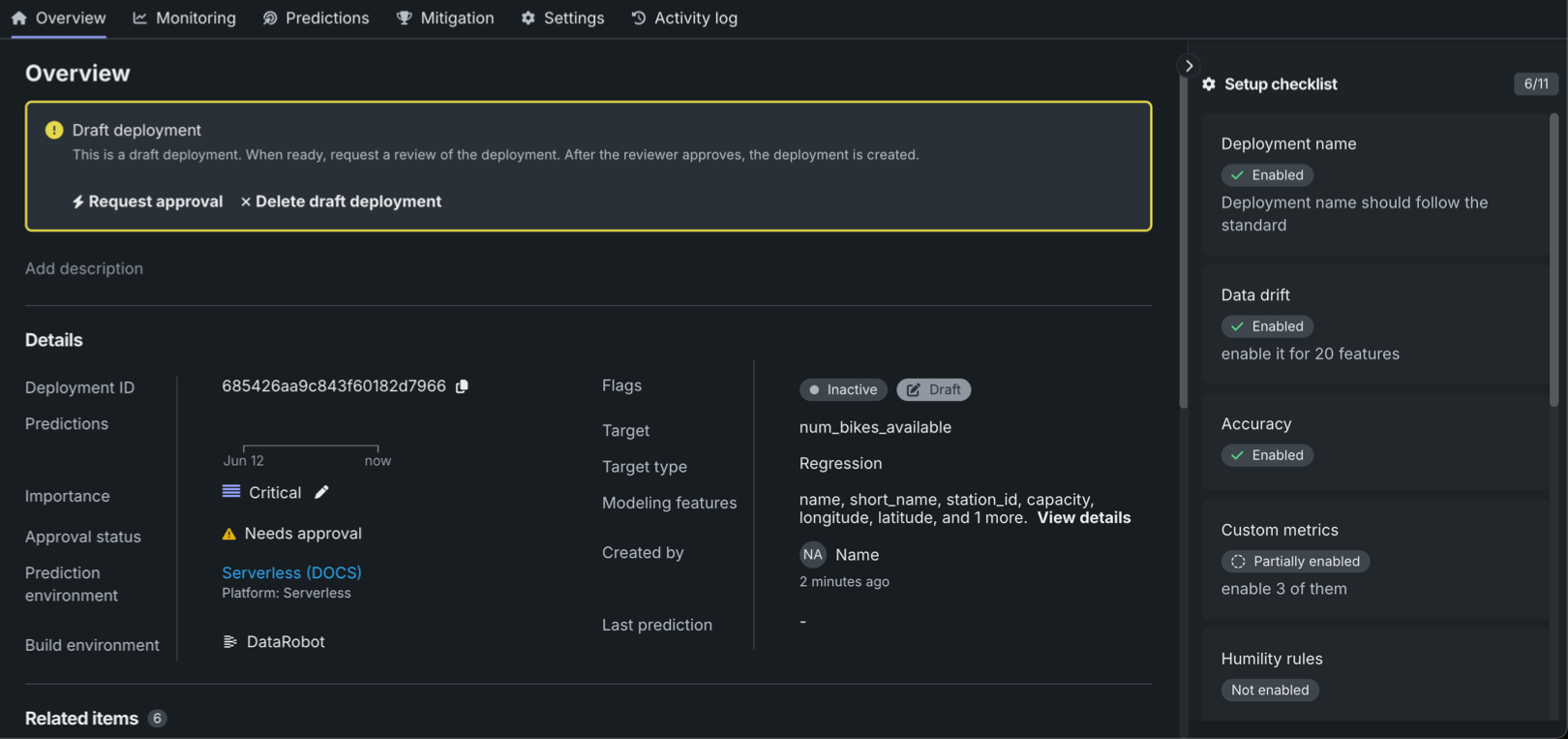

Setup checklist and approval

When governance management functionality is enabled for your organization, the Setup checklist panel appears on the deployment Overview. This checklist includes the settings required by your administrator, any additional guidance they provided when configuring the checklist, and the status of each checklist setting: Not enabled, Partially enabled, or Enabled. Complete this checklist before requesting deployment approval from an administrator. Click a tile in the checklist to open the relevant deployment settings page.

Default setup checklist

By default, the Setup checklist displays all available setting groups for the current deployment; however, the organization-level administrator can customize this default list.
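The three checklist statuses can be read as a summary of how many settings in a group are enabled. The sketch below assumes that Partially enabled means some, but not all, of a group's settings are on; that rule, the `group_status` helper, and the group and setting names are hypothetical.

```python
from enum import Enum
from typing import Dict


class ChecklistStatus(Enum):
    NOT_ENABLED = "Not enabled"
    PARTIALLY_ENABLED = "Partially enabled"
    ENABLED = "Enabled"


def group_status(settings: Dict[str, bool]) -> ChecklistStatus:
    """Derive a checklist tile's status from which of its settings are on."""
    enabled = sum(settings.values())  # each True counts as 1
    if enabled == 0:
        return ChecklistStatus.NOT_ENABLED
    if enabled < len(settings):
        return ChecklistStatus.PARTIALLY_ENABLED
    return ChecklistStatus.ENABLED


# Hypothetical setting groups and settings.
checklist = {
    "Data drift": {"feature_drift": True, "target_drift": True},
    "Accuracy": {"association_id": True, "actuals_uploaded": False},
    "Challengers": {"challenger_models": False},
}
for group, settings in checklist.items():
    print(f"{group}: {group_status(settings).value}")
```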

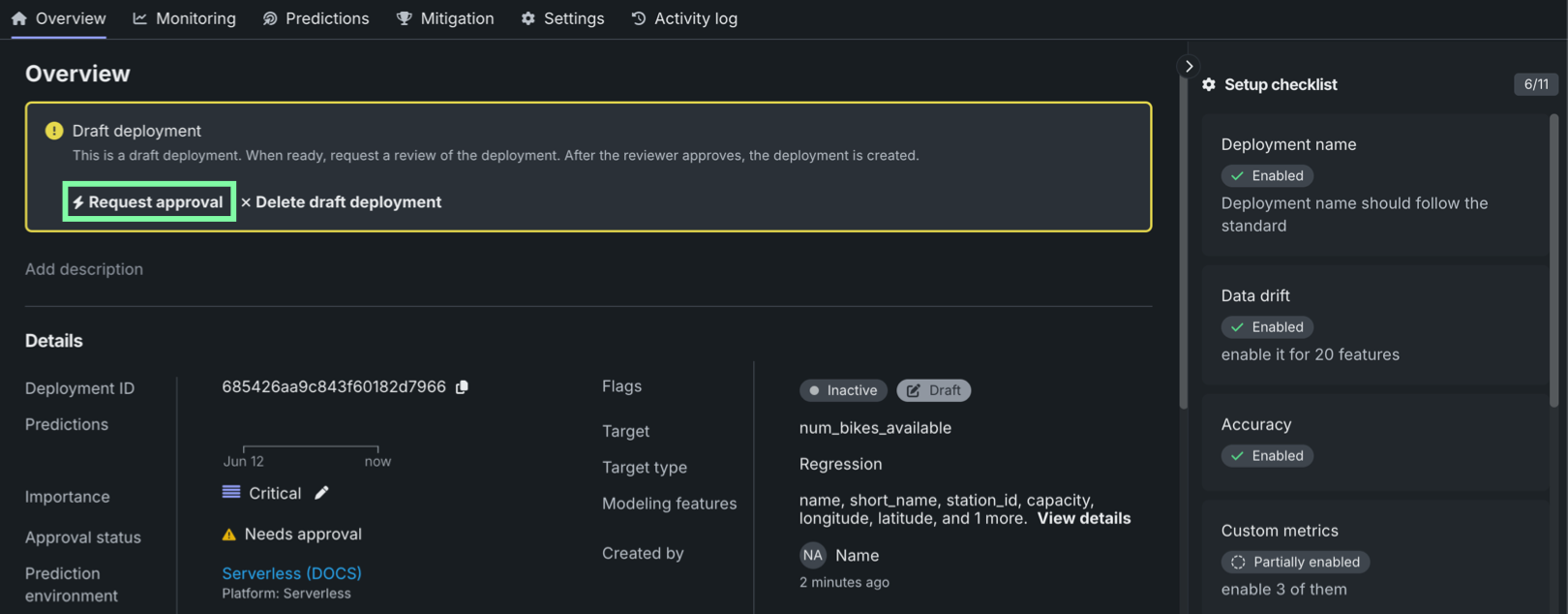

If a deployment is subject to a configured approval policy, the deployment is created in a Draft state, with an Approval status of Needs approval, as shown above. After you complete the approval checklist, you can click Request approval in the Draft deployment notice on the deployment Overview page.

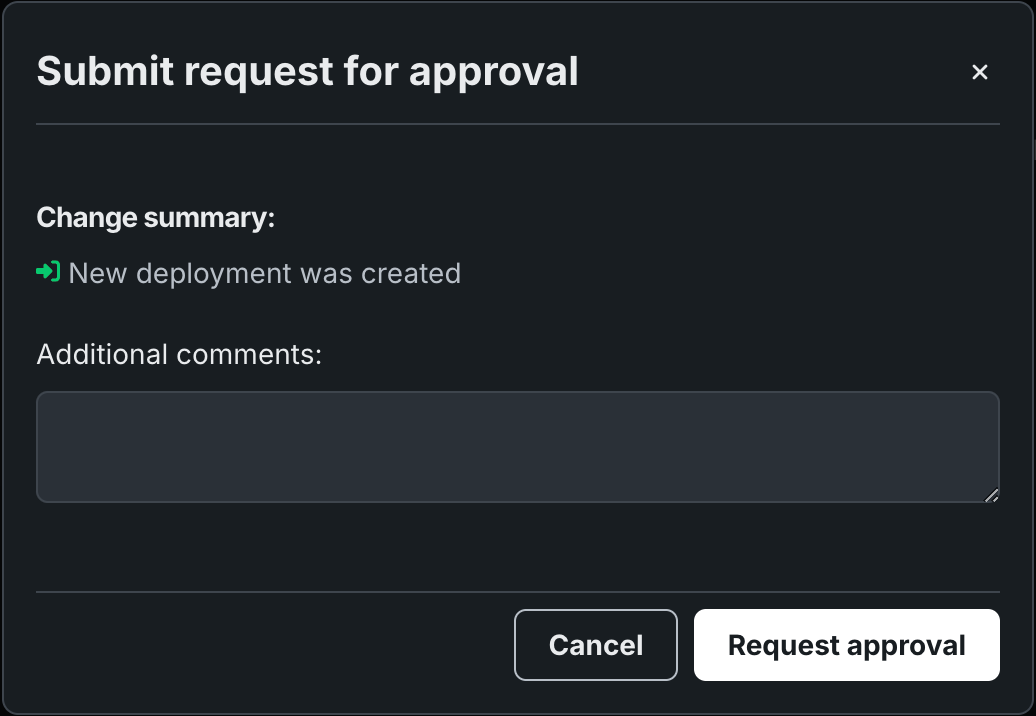

When you click Request approval, the Submit request for approval dialog box appears, where you can enter Additional comments for the approver. Then, click Request approval to complete your request. After approval, the deployment is automatically moved out of the draft state and activated.

On the Deployments tab, draft deployments awaiting approval are shown with a Draft tag and an Inactive tag.

Draft deployment limitations

With a draft deployment, you can't make predictions, upload actuals or custom metric data, or create scheduled jobs.
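The approval flow and draft limitations described in this section can be summarized as a small state machine. In this hypothetical sketch, the `Deployment` class, its attributes, and the action names in `BLOCKED_IN_DRAFT` are illustrative labels for the behavior described above, not DataRobot API identifiers.

```python
from enum import Enum
from typing import List


class State(Enum):
    DRAFT = "Draft"      # shown with Draft and Inactive tags
    ACTIVE = "Active"


# Actions the documentation lists as unavailable for draft deployments.
BLOCKED_IN_DRAFT = {
    "make_predictions",
    "upload_actuals",
    "upload_custom_metric_data",
    "create_scheduled_job",
}


class Deployment:
    """Hypothetical model of a deployment created under an approval policy."""

    def __init__(self, under_approval_policy: bool) -> None:
        self.state = State.DRAFT if under_approval_policy else State.ACTIVE
        self.needs_approval = under_approval_policy
        self.approval_requested = False
        self.comments: List[str] = []

    def request_approval(self, additional_comments: str = "") -> None:
        if not self.needs_approval:
            raise RuntimeError("This deployment is not awaiting approval.")
        self.approval_requested = True
        if additional_comments:
            self.comments.append(additional_comments)

    def approve(self) -> None:
        if not self.approval_requested:
            raise RuntimeError("Approval has not been requested.")
        # After approval, the deployment leaves the draft state and is activated.
        self.needs_approval = False
        self.state = State.ACTIVE

    def can(self, action: str) -> bool:
        return not (self.state is State.DRAFT and action in BLOCKED_IN_DRAFT)


deployment = Deployment(under_approval_policy=True)
print(deployment.state, deployment.can("make_predictions"))
deployment.request_approval("Setup checklist complete.")
deployment.approve()
print(deployment.state, deployment.can("make_predictions"))
```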