Notebook reference

FAQ

Are DataRobot Notebooks available in Classic and Workbench?

Yes, DataRobot Notebooks are available in both Workbench and DataRobot Classic.

Why should I use DataRobot Notebooks?

DataRobot Notebooks let you develop and run code in the language of your choice, using both your preferred open-source ML libraries and the DataRobot API to streamline and automate your DataRobot workflows, all within the DataRobot platform. Because the platform for creating and executing notebooks is fully managed and hosted, with auto-scaling capabilities, you can focus on data science work rather than infrastructure management. You can organize, collaborate on, and share notebooks and related assets across your teams in one unified environment, with centralized governance via DataRobot Use Cases.

What’s different about DataRobot Notebooks compared to Jupyter?

Working locally with open-source Jupyter Notebooks can be difficult to scale across an enterprise. DataRobot Notebooks help make data science a team sport by serving as a central repository for notebooks and data science assets, so your team can collaborate and make progress on complex problems. Because DataRobot Notebooks is a fully managed solution, you can work with scalable resources without managing the infrastructure yourself. DataRobot Notebooks also provide features beyond the classic Jupyter offering, such as built-in revision history, credential management, built-in visualizations, and more. You get the full flexibility of a code-first environment while also leveraging DataRobot’s suite of other ML offerings, including automated machine learning.

Are DataRobot Notebooks compatible with Jupyter?

Yes, notebooks are Jupyter compatible and utilize Jupyter kernels. DataRobot supports import from and export to the .ipynb standard file format for notebook files, so you can easily bring existing workloads into the platform without concern for vendor lock-in of your IP. The user interface is also closely aligned with Jupyter (e.g., modal editor, keyboard shortcuts), so you can easily onboard without a steep learning curve.
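Because the interchange format is plain .ipynb JSON, a notebook can be generated or inspected outside the platform before it is imported. The following is a minimal sketch using only the Python standard library. The structure follows the public nbformat v4 schema rather than anything DataRobot-specific, and the cell contents and file name are placeholders.

```python
import json


def build_notebook(code_cells):
    """Return a minimal nbformat v4 notebook dict with one code cell per string."""
    return {
        "nbformat": 4,
        "nbformat_minor": 4,
        "metadata": {},
        "cells": [
            {
                "cell_type": "code",
                "execution_count": None,
                "metadata": {},
                "outputs": [],
                "source": source,
            }
            for source in code_cells
        ],
    }


notebook = build_notebook(["import datarobot as dr", "print('hello')"])

# Serialize to the text that an .ipynb file contains.
text = json.dumps(notebook, indent=1)

# To save it for import (placeholder file name):
# with open("example.ipynb", "w", encoding="utf-8") as handle:
#     handle.write(text)
```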

Can I install any packages I need within the notebook environment?

Yes, you can install any additional packages you need into your environment at runtime during your notebook session. To do so, run a shell command such as !pip install <package-name> from a notebook cell. Packages installed at runtime do not persist when your session shuts down, so you must reinstall them the next time you start a session. Package installations do persist, however, if you use a codespace instead.
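Since runtime installs are lost when a session ends, one option is to keep a small setup cell at the top of the notebook that reinstalls only what is missing. The helper below is an illustrative sketch, not part of DataRobot: `ensure_package` is a hypothetical name, and it assumes pip is available to the kernel's Python interpreter.

```python
import importlib
import importlib.util
import subprocess
import sys


def ensure_package(import_name, pip_name=None):
    """Install a package with pip only if it cannot already be imported.

    Returns True if an install was run, False if the package was already present.
    """
    if importlib.util.find_spec(import_name) is not None:
        return False
    # Use the kernel's own interpreter so the package lands in the same environment.
    subprocess.check_call(
        [sys.executable, "-m", "pip", "install", pip_name or import_name]
    )
    # Make the freshly installed package visible to the import system.
    importlib.invalidate_caches()
    return True


# "json" ships with Python, so no install is triggered here.
installed = ensure_package("json")
```

Pass `pip_name` when the distribution name differs from the import name (for example, import name `sklearn`, distribution name `scikit-learn`).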

Can I share notebooks?

Although you cannot share notebooks directly with other users, in Workbench, you can share Use Cases that contain notebooks. Therefore, to share a notebook with another user, you must share the entire Use Case so that they have access to all associated assets.

How can I access datasets that I have not yet loaded into my notebook?

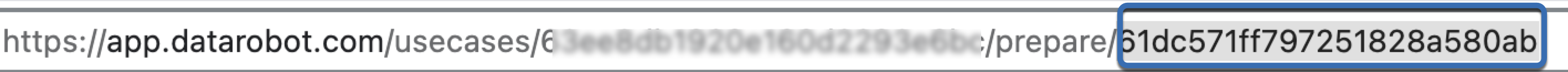

Access the dataset you want to include in the notebook from the Use Case dashboard. The ID is included in the dataset URL (after /prepare/); it is the same ID stored for the dataset in the AI Catalog.
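If you copy the whole URL instead of just the ID, a small helper can pull the ID out. This sketch assumes that dataset IDs are 24-character hexadecimal strings, as in the example ID used on this page. `dataset_id_after` is a hypothetical helper name, and the marker you pass depends on which URL you copied.

```python
import re


def dataset_id_after(url, marker):
    """Return the 24-character hex ID that directly follows a path marker.

    Returns None if the marker is absent or is not followed by such an ID.
    """
    pattern = re.escape(marker) + r"([0-9a-f]{24})(?![0-9a-f])"
    match = re.search(pattern, url)
    return match.group(1) if match else None


catalog_url = "https://app.datarobot.com/ai-catalog/641e1ee2cc9ba01fc1dab737"
my_ds_id = dataset_id_after(catalog_url, "/ai-catalog/")
```

For a URL copied from a Use Case, pass "/prepare/" as the marker instead.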

To import a dataset from the AI Catalog into a notebook:

```python
from datarobot.models.dataset import Dataset

# The dataset ID is the last segment of the dataset's AI Catalog URL, for example:
# https://app.datarobot.com/ai-catalog/641e1ee2cc9ba01fc1dab737
my_ds_id = ''  # Enter the ID of the dataset to import; for example, '641e1ee2cc9ba01fc1dab737'

# Retrieve the dataset and load it into a pandas DataFrame
dataset = Dataset.get(my_ds_id)
ds_as_df = dataset.get_as_dataframe(low_memory=True)

# Preview the first rows
ds_as_df.head()
```

Once you've imported the Dataset class, you can list all of the datasets using the following code:

```python
Dataset.list()
```
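When the list is long, it can help to filter it by name to find the ID you need. The sketch below assumes each returned dataset object exposes `id` and `name` attributes. `find_datasets_by_name` is a hypothetical helper, and the stand-in objects (including the all-zero placeholder ID) exist only so that the example runs without a DataRobot connection.

```python
from types import SimpleNamespace


def find_datasets_by_name(datasets, fragment):
    """Return (id, name) pairs whose name contains the fragment, ignoring case."""
    needle = fragment.lower()
    return [
        (ds.id, ds.name)
        for ds in datasets
        if needle in (ds.name or "").lower()
    ]


# Stand-in objects for illustration; in a notebook session, pass Dataset.list() instead.
sample = [
    SimpleNamespace(id="641e1ee2cc9ba01fc1dab737", name="Loans 2023.csv"),
    SimpleNamespace(id="000000000000000000000000", name="churn_training"),
]
matches = find_datasets_by_name(sample, "loans")
```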

Can I write a file to my local machine from a notebook?

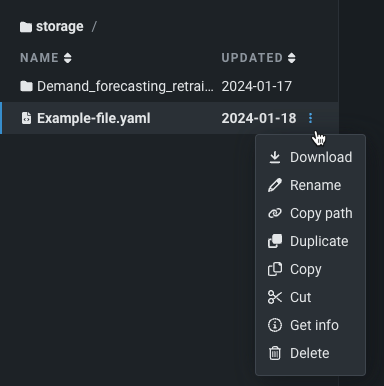

To write a file to your local machine, you must use Codespaces. After creating a codespace, you are able to download any files within that codespace (notebooks included) by selecting Download from the file's actions menu.
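Before a file can be downloaded, it has to exist in the codespace's file system. The following is a minimal sketch of writing results to a CSV file with the standard library. A temporary directory keeps the example self-contained, and the path you would use inside a codespace is an assumption left to you.

```python
import csv
import tempfile
from pathlib import Path


def write_rows_to_csv(rows, header, path):
    """Write a header and rows to a CSV file and return the resolved path."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(header)
        writer.writerows(rows)
    return path.resolve()


# In a codespace, replace out_dir with a directory inside the codespace
# (for example, a hypothetical "outputs" folder) so the file appears in the file list.
out_dir = Path(tempfile.mkdtemp())
saved = write_rows_to_csv(
    [(1, 0.91), (2, 0.17)], ["row_id", "score"], out_dir / "scores.csv"
)
```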

Feature considerations

Review the tables below to learn more about the limitations for DataRobot Notebooks.

CPU and memory limits

Review the limits below based on the machine size you are using.

| Machine size | CPU limit | Memory limit |
|---|---|---|
| XS | 1 CPU | 4 GB |
| S | 2 CPU | 8 GB |
| M* | 4 CPU | 16 GB |
| L* | 8 CPU | 32 GB |

\* M and L machine sizes are available depending on your pricing tier (up to M for Enterprise tier customers and up to L for Business Critical tier customers).
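As a rough planning aid, the limits above can be encoded as a lookup to pick the smallest machine size that covers an estimated workload. This is an illustrative sketch only. `smallest_machine_for` is a hypothetical helper, the estimate you pass in is your own, and M and L remain subject to the tier restrictions noted above.

```python
# Limits copied from the table above: size -> (CPU limit, memory limit in GB).
# Listed from smallest to largest so the first match is the smallest fit.
MACHINE_LIMITS = {
    "XS": (1, 4),
    "S": (2, 8),
    "M": (4, 16),
    "L": (8, 32),
}


def smallest_machine_for(required_gb, required_cpus=1):
    """Return the smallest machine size meeting both requirements, or None."""
    for size, (cpus, memory_gb) in MACHINE_LIMITS.items():
        if cpus >= required_cpus and memory_gb >= required_gb:
            return size
    return None


choice = smallest_machine_for(10)  # needs more than S's 8 GB, so this selects "M"
```

Leave headroom in the memory estimate, since the limit applies to the whole session rather than to a single dataset.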

User limits

Each user can have a maximum of four concurrent active notebook or codespace sessions in DataRobot. There is also a larger limit on the number of concurrent notebook or codespace sessions that can run across all users in an organization. If a limit has been reached, shut down an existing active session in order to launch another notebook or codespace session. Contact your DataRobot representative for more information on the total number of concurrent sessions that can run in parallel in your organization.

Cell limits

The table below outlines the limits for cell execution time, cell count, cell source size, and cell output size.

| Limit | Value |
|---|---|
| Max cell execution time | 24 hours |
| Max cell output size | 10 MB |
| Max notebook cell count | 1000 cells |
| Max cell source code size | 2 MB |
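Before importing an existing .ipynb file, you can check it against the cell count and source size limits locally. The sketch below works on a notebook that is already loaded as a dict (for example, with `json.load`). It assumes that "MB" means 1024 × 1024 bytes and that size is measured on UTF-8 encoded source text; neither is stated above. `check_notebook_limits` is a hypothetical helper.

```python
# Values from the table above; the byte interpretation of "MB" is an assumption.
MAX_CELLS = 1000
MAX_SOURCE_BYTES = 2 * 1024 * 1024


def check_notebook_limits(notebook):
    """Return a list of human-readable limit violations for a notebook dict."""
    problems = []
    cells = notebook.get("cells", [])
    if len(cells) > MAX_CELLS:
        problems.append(f"{len(cells)} cells exceeds the limit of {MAX_CELLS}")
    for index, cell in enumerate(cells):
        source = cell.get("source", "")
        # The .ipynb format allows source to be a string or a list of line strings.
        if isinstance(source, list):
            source = "".join(source)
        size = len(source.encode("utf-8"))
        if size > MAX_SOURCE_BYTES:
            problems.append(
                f"cell {index} source is {size} bytes, over {MAX_SOURCE_BYTES}"
            )
    return problems


small = {"cells": [{"cell_type": "code", "source": ["print('ok')\n"]}]}
oversized = {"cells": [{"cell_type": "code", "source": "x" * (MAX_SOURCE_BYTES + 1)}]}

small_problems = check_notebook_limits(small)
oversized_problems = check_notebook_limits(oversized)
```

The execution time and output size limits apply at runtime, so a static check of this kind cannot cover them.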

Read more

DataRobot offers a library of AI accelerators that outline data science and machine learning workflows to solve problems. You can view a selection of notebooks below that use v3.0 of DataRobot's Python client.