Data connections

In Workbench, you can easily configure and reuse secure connections to predefined data sources, allowing you to interactively browse, preview, and profile your data before using DataRobot's integrated data preparation capabilities.

See also:

- A full list of supported data stores.

- Associated considerations for important additional information.

- Dataset requirements in DataRobot.

Data store vs. data connection

In DataRobot, data store and data connection are used interchangeably, and both refer to where your data is stored and managed as well as the dynamic link to that data. You will see both terms in the UI.

Source IP addresses to allow

Before setting up a data connection, make sure the source IPs have been allowed.

Data connector limitation for vector databases

Data ingested via data connectors (such as Google Drive or SharePoint) is stored in the File Registry and cannot be used as VDB metadata. All metadata files must be uploaded to Datasets storage in the Data Registry, as assets contained in Files storage are not validated by the EDA process. If a vector database requires metadata for filtering, access control, or downstream retrieval logic, ensure that the metadata is provided as a Dataset in the Data Registry rather than sourced from a data connector.

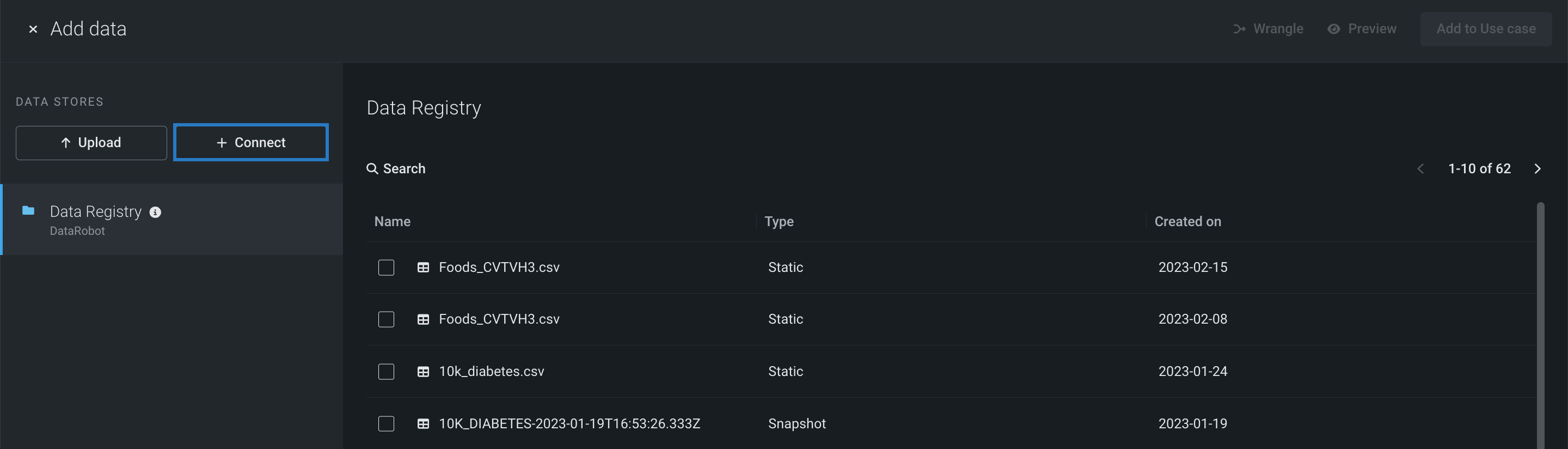

Connect to a data source

Creating a data connection lets you explore external source data—from both connectors and JDBC drivers—and then add it to your Use Case. The Browse data modal only lists connections that support structured data.

To create a data connection:

-

From the Data assets tile, click Add data > Browse data in the upper-right corner, opening the Browse data modal.

-

Click + Add connection.

-

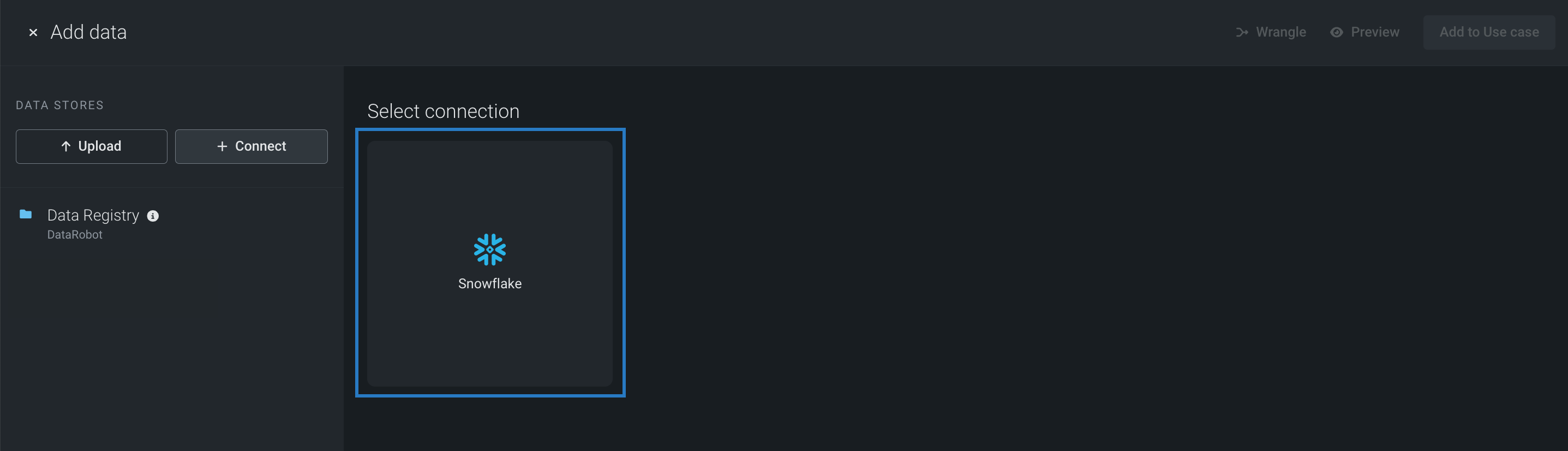

Choose either Structured for connections that support adding structured data, or Unstructured for connections that support unstructured data (only available during VDB creation). Then, select a data store.

Now, you can configure the data connection.

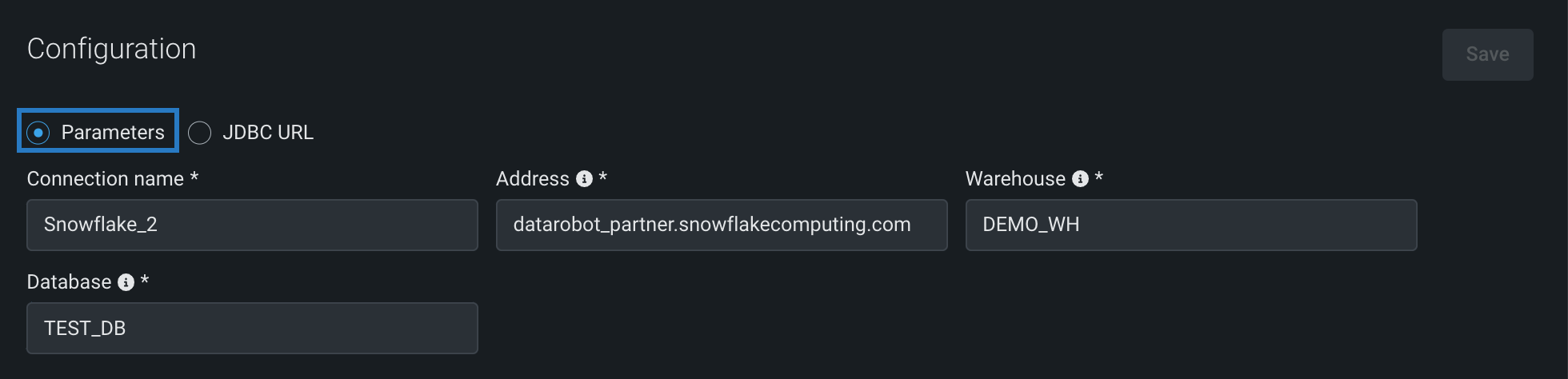

Configure the connection

Note

When configuring your data connection, configuration types, authentication options, and required parameters are based on the selected data source. The example below shows how to configure Snowflake with OAuth using new credentials.

To configure the data connection:

-

With the Connection Configuration tab selected in the Edit Connection modal, choose a configuration method—either Parameters or JDBC URL.

-

Enter the required parameters for the selected configuration method.

-

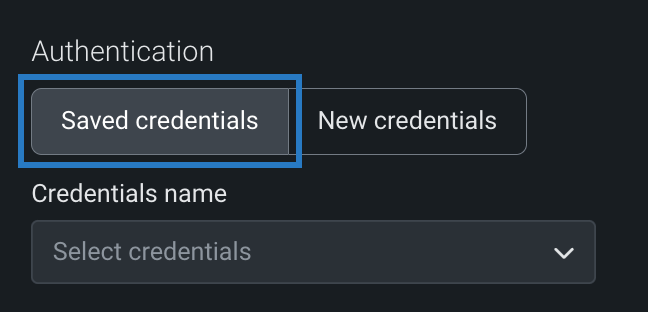

Click New Credentials and select an authentication method—the available authentication methods are based on the selected connection.

Saved credentials

If you previously saved credentials for the selected data source, click Saved credentials and select the appropriate credentials from the dropdown.

-

Click Save in the upper right corner. If your browser window is small, you may need to scroll up.

If you selected OAuth as your authentication method, you will be prompted to sign in before you can select a dataset. See the list of supported data stores for more information about supported authentication methods and required parameters.
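To illustrate how the two configuration methods relate, the sketch below assembles a Snowflake-style JDBC URL from individual parameters. It assumes the commonly documented Snowflake JDBC URL layout. The helper name `build_snowflake_jdbc_url` and the account, database, and warehouse values are hypothetical placeholders, not part of DataRobot. The parameters DataRobot actually requires for each data store are listed in the supported data stores documentation.

```python
from urllib.parse import urlencode


def build_snowflake_jdbc_url(account, db=None, warehouse=None, schema=None):
    """Assemble a Snowflake-style JDBC URL from individual parameters.

    Hypothetical helper for illustration only; not a DataRobot API.
    """
    if not account:
        raise ValueError("account is required")
    # Keep only the optional parameters that were actually provided.
    optional = {"db": db, "warehouse": warehouse, "schema": schema}
    query = urlencode({key: value for key, value in optional.items() if value})
    base = f"jdbc:snowflake://{account}.snowflakecomputing.com/"
    return f"{base}?{query}" if query else base


# Example values are placeholders, not real account details.
url = build_snowflake_jdbc_url("myorg-myaccount", db="SALES", warehouse="COMPUTE_WH")
print(url)
```

Entering the same values under Parameters or pasting the assembled string under JDBC URL should describe the same target. Which method is more convenient depends on how your connection details are already stored.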

Select a dataset

Once you've set up a data connection, you can add datasets by browsing the database schemas and tables you have access to.

To select a dataset:

-

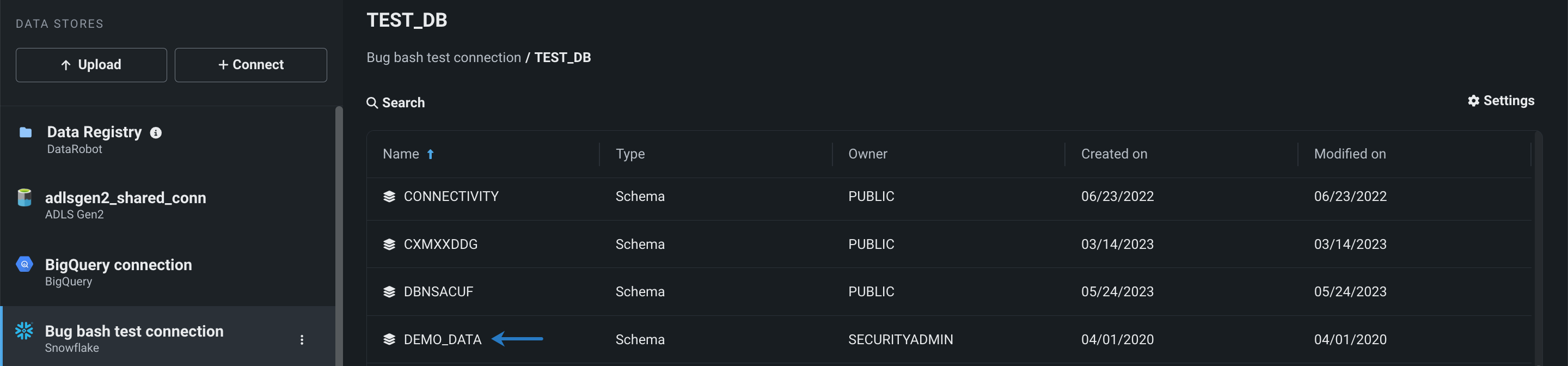

Select the schema associated with the table you want to add.

-

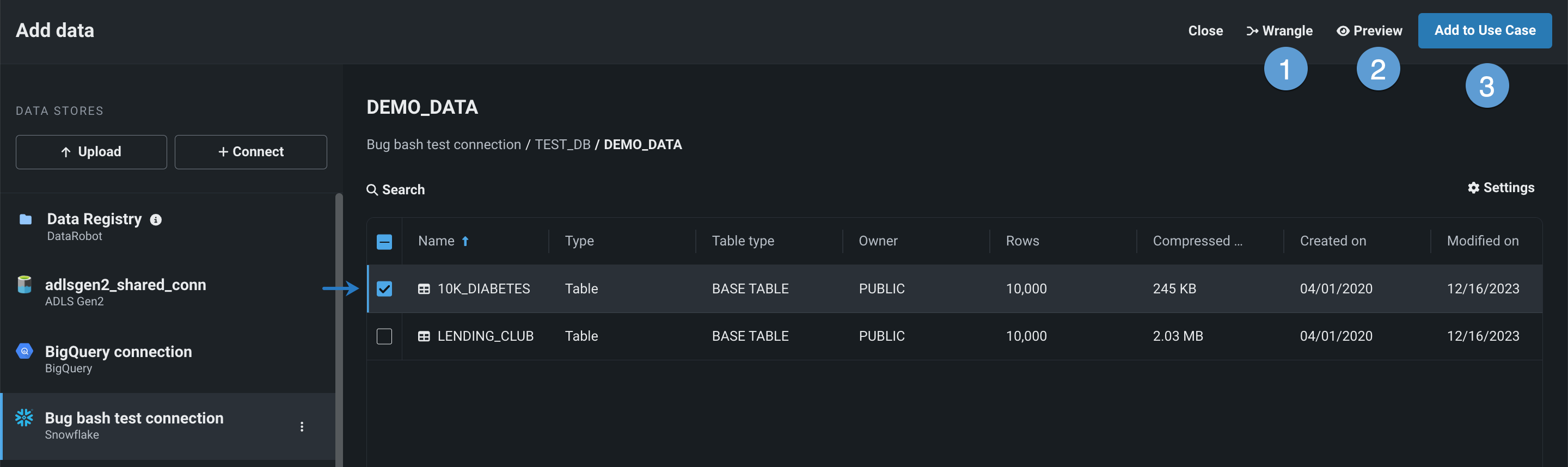

Select the box to the left of the appropriate table.

With a dataset selected, you can:

| | Element | Description |
|---|---|---|
| 1 | Add to Use Case | Adds the data asset to your Use Case, making it available to you and other team members. |
| 2 | Add from SQL query | Allows you to use SQL queries to add data. |
| 3 | Settings | Allows you to show, hide, and/or pin columns. |
| 4 | Actions menu | Provides access to the Preview, Open in Wrangler, and Open in SQL Editor actions. |

The Actions menu includes:

- Preview: Open a snapshot preview to help determine if the dataset is relevant to your Use Case and/or if it needs to be modified in either Wrangler or the SQL Editor.

- Open in Wrangler: Perform data preparation before adding the asset to your Use Case.

- Open in SQL Editor: Create a recipe composed of SQL queries that enrich, transform, shape, and blend datasets together.

Large datasets

If you want to decrease the size of the dataset before adding it to your Use Case, click Wrangle. When you publish a recipe, you can configure automatic downsampling to control the number of rows when Snowflake materializes the output dataset.

-

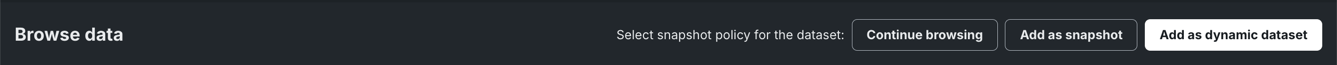

Click Add to Use Case, and then choose a snapshot policy by adding either dynamic data (Add as dynamic dataset) or a snapshot of the dataset (Add as snapshot). To go back without adding data, click Continue browsing.

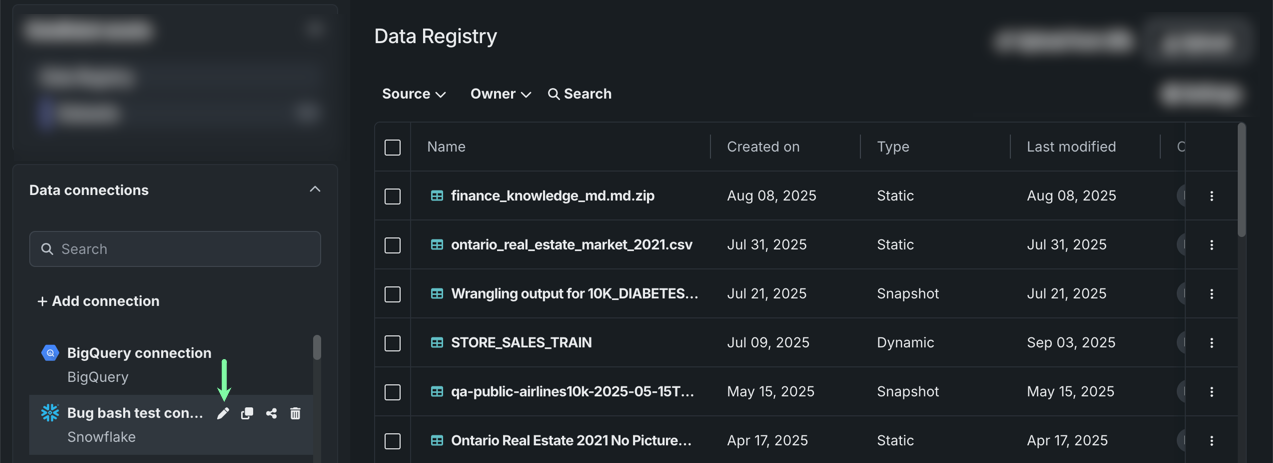

Edit a connection

To modify an existing data connection from the Browse data modal, hover over the connection and click the edit icon. For more information, see Edit a connection. From this modal, you can also delete a connection.

Connection support for Wrangling and SQL Editor

You can connect to and add data from all connectors and JDBC drivers that are currently supported in DataRobot. For a full list of supported data stores, see Supported data stores.

Note that Snowflake, BigQuery, and Databricks connections use pushdown wrangling—all other connections use Spark wrangling.

The table below highlights the capabilities supported by each wrangling method:

| Wrangling method | Snapshot datasets | Dynamic datasets | Live preview | Wrangling | In-source materialization |
|---|---|---|---|---|---|
| Pushdown wrangling: Snowflake, BigQuery, Databricks | ✔ | ✔ | ✔ | ✔ | |
| Spark wrangling: snapshots uploaded from local files, public URLs, all supported connections | ✔ | ✔ | | | |

JDBC driver capabilities

You can only add snapshot datasets from a JDBC driver connection.
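The rule for which wrangling method a connection uses can be sketched as a small lookup. This is only an illustration of the rule stated in this section, not DataRobot code: the function name `wrangling_method` and the lowercase connection-type strings are assumptions made for the example.

```python
# Connection types that this section says use pushdown wrangling.
PUSHDOWN_SOURCES = {"snowflake", "bigquery", "databricks"}


def wrangling_method(connection_type: str) -> str:
    """Return "pushdown" or "spark" for a connection type name.

    Hypothetical helper; the type names are illustrative labels only.
    """
    normalized = connection_type.strip().lower()
    # Every connection not listed as pushdown falls back to Spark wrangling.
    return "pushdown" if normalized in PUSHDOWN_SOURCES else "spark"


for name in ("Snowflake", "BigQuery", "PostgreSQL"):
    print(name, "->", wrangling_method(name))
```

Treating Spark as the default mirrors the wording above: only the three named sources use pushdown, and everything else uses Spark.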