Data preparation reference

FAQ

Add data

Can one dataset be added to multiple Use Cases?

Yes. Adding a dataset to a Use Case via the Data Registry establishes a link between the dataset and the Use Case rather than a copy, so the same dataset can be linked to several Use Cases.

How do I delete a dataset?

To remove a dataset from a Use Case, click Actions menu > Remove from Use Case. This removes only the link between the data source and the Use Case: team members in that Use Case will no longer see the dataset, but if they have access to the same dataset in a different Use Case, they can still access it there. To control access to the source data, use the AI Catalog in DataRobot Classic.

How can I browse and manage data connections not currently supported in Workbench?

You must use DataRobot Classic to manage any data connections not listed above. Additional connections will be added to Workbench in future releases.

How can I delete a data connection in Workbench?

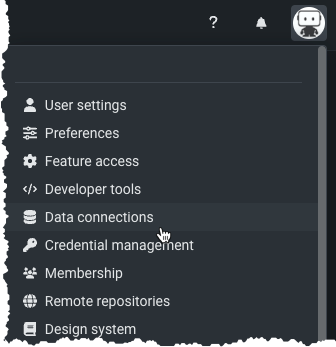

You cannot delete data connections from within Workbench; to remove existing data connections, go to User Settings > Data Connections in DataRobot Classic.

How can I manage saved credentials?

You can manage saved credentials for your data connections in DataRobot Classic.

Wrangle data

What permissions are required to be able to push down operations to an external data source?

You must have read access to the selected database.

Are there situations where data is moved from source?

Yes, data is moved from the source:

- During an interactive wrangling session: 10,000 randomly sampled rows from the original table or view in the data source are brought into DataRobot for preview and profiling purposes.

- After publishing a wrangling recipe: When you publish a recipe, the transformations are pushed down and applied to the entire input table or view in the data source. The resulting output is materialized in DataRobot as a snapshot dataset.
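During an interactive session, DataRobot works on 10,000 randomly sampled rows. As a conceptual illustration only, the sketch below uses reservoir sampling (Algorithm R), one standard way to draw a fixed-size uniform random sample from a table of unknown length in a single pass. This technique is an assumption chosen for illustration, not a description of how DataRobot samples, and `reservoir_sample` is a hypothetical name.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(rows: Iterable[T], k: int = 10_000, seed: Optional[int] = None) -> List[T]:
    """Return up to k rows chosen uniformly at random from a stream of rows.

    Illustrative sketch (Algorithm R); not DataRobot's sampling implementation.
    """
    rng = random.Random(seed)
    sample: List[T] = []
    for i, row in enumerate(rows):
        if i < k:
            # Fill the reservoir with the first k rows.
            sample.append(row)
        else:
            # Keep row i with probability k / (i + 1), replacing a random slot.
            j = rng.randint(0, i)
            if j < k:
                sample[j] = row
    return sample


preview = reservoir_sample(range(1_000_000), k=10_000, seed=42)
print(len(preview))
```

If the source has fewer than `k` rows, every row is returned, which mirrors a preview of a small table.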

How do the wrangling insights differ from the exploratory data insights generated when registering a dataset in DataRobot?

The insights generated during data wrangling are based on the same live random sample of the raw dataset that is retrieved from your data source for the interactive wrangling session. Whenever you adjust the row count or add operations, DataRobot updates the sample and reruns exploratory data analysis.

Why do I need to downsample my data?

If the raw data in Snowflake exceeds DataRobot's file size requirements, you can configure automatic downsampling to reduce the size of the output dataset.
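Downsampling reduces the output by keeping only a subset of rows. A minimal sketch of the arithmetic, assuming rows are roughly equal in size so that output size scales linearly with row count: the hypothetical helper `rows_to_keep` estimates how many rows fit under a size limit. The limit is just a parameter here; DataRobot's actual file size requirements and downsampling settings are not modeled.

```python
GB = 1024 ** 3


def rows_to_keep(row_count: int, raw_size_bytes: int, max_size_bytes: int) -> int:
    """Estimate how many rows fit under max_size_bytes.

    Hypothetical helper; assumes uniform row size so size is proportional to rows.
    """
    if raw_size_bytes <= 0 or max_size_bytes <= 0:
        raise ValueError("sizes must be positive")
    if raw_size_bytes <= max_size_bytes:
        return row_count  # already within the limit, no downsampling needed
    # Integer arithmetic rounds down, so the estimate never exceeds the limit.
    return row_count * max_size_bytes // raw_size_bytes


print(rows_to_keep(50_000_000, 50 * GB, 10 * GB))
```

For example, a 50 GB table of 50,000,000 rows with an assumed 10 GB limit keeps 10,000,000 rows. Using integer division avoids floating-point rounding in the row count.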

Feature considerations

Add data

Consider the following when adding data:

- There is currently no image support in previews.

Wrangle data

Consider the following when wrangling data:

- Profile cannot be customized and is limited to sample-based profiles.

- Wrangling does not support query-type datasets (i.e., datasets built from a query).

- Self-managed: You can wrangle Data Registry datasets of up to 20GB.

- Managed SaaS (multi-tenant SaaS and AWS single-tenant SaaS deployments): You can wrangle Data Registry datasets of up to 100GB.
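The wrangling size limits listed above can be captured as a simple lookup. In this sketch the deployment keys `"self-managed"` and `"managed-saas"` and the function `can_wrangle` are hypothetical labels, not part of any DataRobot API, and "up to" is read as an inclusive limit.

```python
# Wrangling size limits for Data Registry datasets, per deployment type.
# Keys are hypothetical labels chosen for this sketch.
WRANGLE_LIMIT_GB = {
    "self-managed": 20,
    "managed-saas": 100,  # multi-tenant SaaS and AWS single-tenant SaaS
}


def can_wrangle(dataset_size_gb: float, deployment: str) -> bool:
    """Return True if a dataset of this size is within the wrangling limit."""
    try:
        limit = WRANGLE_LIMIT_GB[deployment]
    except KeyError:
        raise ValueError(f"unknown deployment type: {deployment!r}") from None
    return dataset_size_gb <= limit  # "up to" treated as inclusive


print(can_wrangle(25, "self-managed"), can_wrangle(25, "managed-saas"))
```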