Managed SaaS releases

January SaaS feature announcements

January 2026

This page provides announcements of newly released features available in DataRobot's SaaS multi-tenant AI Platform, with links to additional resources. From the release center, you can also access past announcements and Self-Managed AI Platform release notes.

Agentic AI

With this release, DataRobot focuses on the developer experience in its agentic offerings, bringing tools for faster onboarding, support for local development, and expanded framework support. The new MCP server automates key parts of the agentic build process.

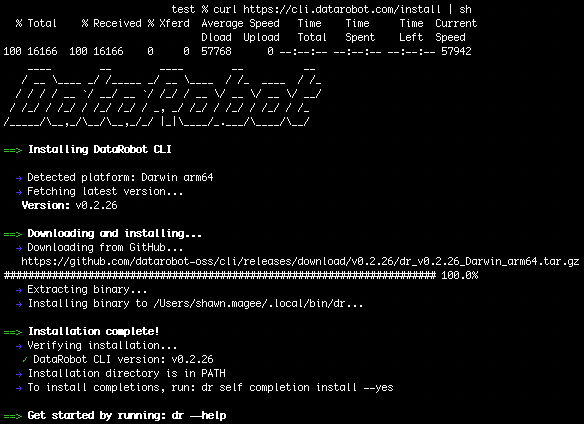

DataRobot CLI

The DataRobot CLI provides a unified command-line interface for managing DataRobot resources, templates, and agentic applications. It supports both interactive use for developers and non-interactive modes for automation. For detailed steps on installing the CLI, see the DataRobot CLI documentation.

Key features

- Authentication: OAuth-based login with automatic, secure credential management. Supports shortcuts for cloud instances and stores credentials in platform-specific configuration files.

- Project scaffolding: The interactive `dr templates setup` wizard enables you to discover, clone, and configure production-ready application templates. The CLI automatically tracks setup completion in `.datarobot/cli/state.yaml`, allowing subsequent runs to skip redundant configuration steps.

- Unified workflow: Integrates task-based commands for the development lifecycle:

    - `dr start`: Automates initialization, prerequisite validation, and quickstart script execution.
    - `dr run dev/build/test`: Standardizes development, building, and testing workflows.
    - `dr dotenv setup`: Simplifies environment variable configuration.

- Developer experience: Includes shell completions (bash/zsh), verbose and debug output modes, and comprehensive help documentation.
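The skip-on-rerun behavior of the setup wizard can be illustrated with a minimal Python sketch of a resumable setup loop that records completed steps in a state file and skips them on the next run. The step names, the `step: true` line format, and the `run_setup` and `load_completed` helpers are hypothetical; they do not reflect the CLI's actual state schema, only the general pattern.

```python
from pathlib import Path
from typing import Callable, List, Set

# Hypothetical setup steps; the real CLI's steps and state schema may differ.
SETUP_STEPS = ["clone_template", "configure_env", "validate_prereqs"]


def load_completed(state_file: Path) -> Set[str]:
    """Read completed steps from simple 'step: true' lines (a tiny YAML subset)."""
    if not state_file.exists():
        return set()
    done = set()
    for line in state_file.read_text().splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip().lower() == "true":
            done.add(key.strip())
    return done


def run_setup(state_file: Path, run_step: Callable[[str], None]) -> List[str]:
    """Run only the steps not yet recorded as complete; return the steps that ran."""
    done = load_completed(state_file)
    ran = []
    for step in SETUP_STEPS:
        if step in done:
            continue  # already configured on an earlier run, so skip it
        run_step(step)
        done.add(step)
        ran.append(step)
        # Persist after every step so an interrupted run can resume where it stopped.
        state_file.parent.mkdir(parents=True, exist_ok=True)
        state_file.write_text("".join(f"{s}: true\n" for s in SETUP_STEPS if s in done))
    return ran
```

Calling `run_setup` a second time with the same state file returns an empty list, because every step is already recorded as complete.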

For the full set of CLI commands and options, see the DataRobot CLI documentation.

DataRobot Agentic Application Starter

The DataRobot Agentic Application Starter is a production-ready template for building and deploying agentic applications, featuring a pre-configured stack including an MCP (Model Context Protocol) server for tool integration, a FastAPI backend, a React frontend, and integrated agent runtime support.

Initialization and deployment

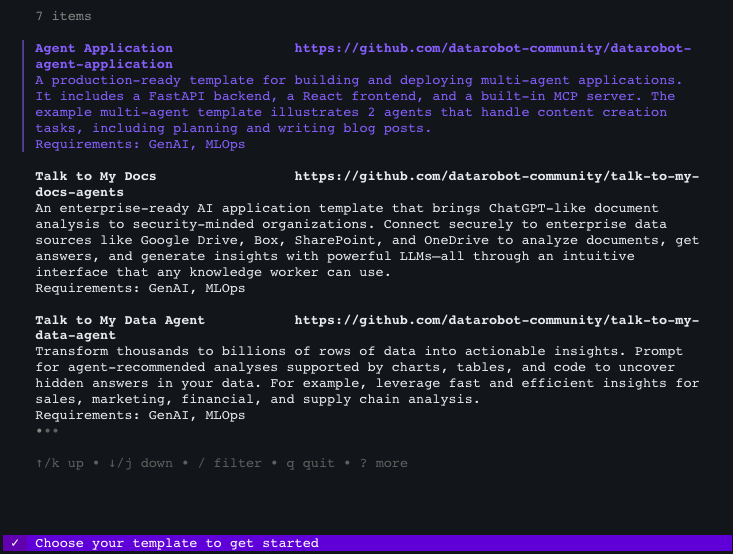

The `dr start` interactive wizard (part of the DataRobot CLI) guides you through the complete application configuration. After running `dr start`, you can select from a list of templates, including the Agentic Application Starter.

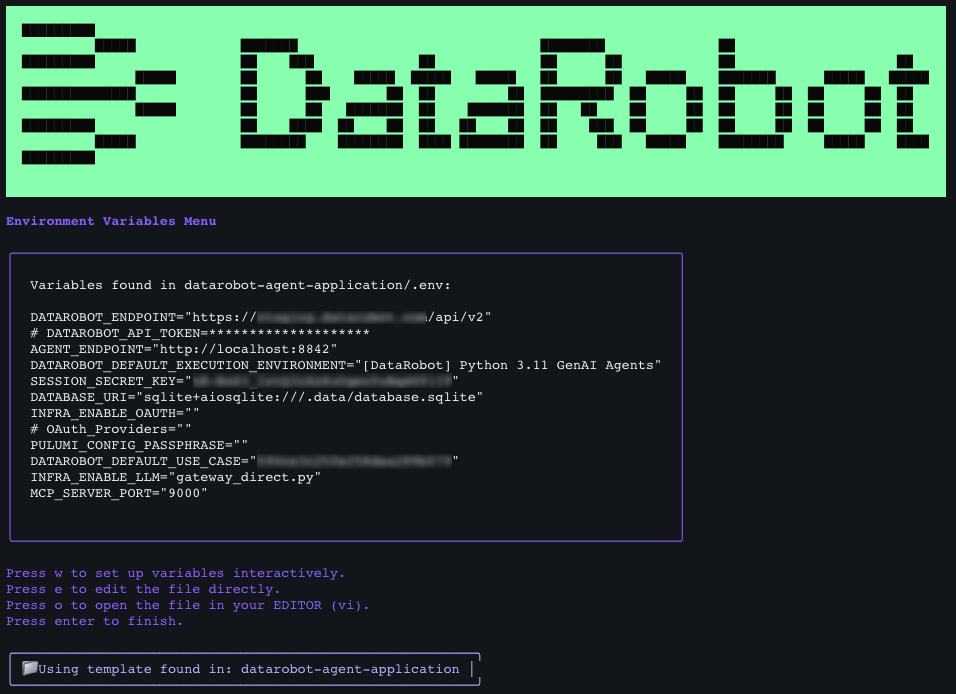

Once you've made your selection, the wizard automatically clones the repository, sets up environment variables based on your inputs, and configures all components. After collecting all necessary information, it displays your settings and prompts you to confirm.

- Local development: Run `task dev` to launch all four application components (frontend, backend, agent, and MCP server) in parallel. An optional Chainlit playground interface provides isolated agent testing without the full application stack.

- Production deployment: Execute `dr task run deploy` to handle the entire production deployment pipeline, including infrastructure provisioning (via Pulumi), containerization, and DataRobot platform integration. The starter leverages Infrastructure as Code (IaC) through Pulumi for reproducible deployments, automatically creating execution environments, custom models, deployments, and use cases within DataRobot.

Core capabilities

- Agent framework support: Compatible with LangGraph, CrewAI, LlamaIndex, NVIDIA NeMo Agent Toolkit (NAT), and custom frameworks. The template provides a structured foundation that supports multi-agent workflows, state management, and complex agent orchestration patterns.

- MCP server: Automatically discovers and registers DataRobot deployments (predictive models, custom models, and other DataRobot resources) as tools when tagged appropriately. Supports custom tool development, prompt templates, and resource management.

- Security: Built-in OAuth integration supports Google, Box, and other enterprise identity providers, enabling secure user authentication and session management. The starter also handles credentials, session secrets, and secure cookies.

- LLM integration: Supports DataRobot's LLM gateway (default), existing DataRobot text generation deployments, and external LLM providers including Azure OpenAI, AWS Bedrock, Google Vertex AI, Anthropic, Cohere, and Together AI. You can manage the configuration through environment variables or interactive prompts.
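The environment-variable route for LLM configuration can be sketched in Python as a resolver that falls back to the DataRobot LLM gateway when nothing else is set. The variable names (`LLM_PROVIDER`, `LLM_MODEL`, `LLM_DEPLOYMENT_ID`), the provider keys, and `resolve_llm_config` are all hypothetical; check the starter's own `.env` template for the names it actually reads.

```python
import os
from typing import Mapping

# Hypothetical provider keys and environment variable names, for illustration only.
DEFAULT_PROVIDER = "datarobot_gateway"
SUPPORTED_PROVIDERS = {
    "datarobot_gateway",     # DataRobot LLM gateway (the default)
    "datarobot_deployment",  # an existing DataRobot text generation deployment
    "azure_openai",
    "aws_bedrock",
    "google_vertex_ai",
    "anthropic",
    "cohere",
    "together_ai",
}


def resolve_llm_config(env: Mapping[str, str] = os.environ) -> dict:
    """Pick the LLM provider from the environment, falling back to the gateway."""
    provider = env.get("LLM_PROVIDER", DEFAULT_PROVIDER).strip().lower()
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(f"Unsupported LLM provider: {provider!r}")
    config = {"provider": provider, "model": env.get("LLM_MODEL")}
    if provider == "datarobot_deployment":
        # A deployment-backed LLM needs to know which deployment to call.
        deployment_id = env.get("LLM_DEPLOYMENT_ID")
        if not deployment_id:
            raise ValueError("LLM_DEPLOYMENT_ID is required for datarobot_deployment")
        config["deployment_id"] = deployment_id
    return config
```

Passing an explicit mapping instead of `os.environ` keeps the resolver easy to test, and failing fast on an unknown provider surfaces typos before the agent starts.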

For more information, see the DataRobot Agentic Application Starter documentation.

Simplified agent development with integrated NVIDIA NeMo Agent Toolkit

The DataRobot Agentic Application Starter supports the NVIDIA NeMo Agent Toolkit (NAT), a low-code agent development framework that enables non-developers to create sophisticated agentic workflows with little or no code.

- YAML configuration: With NAT, you define the complete agent logic and tools in YAML configuration files, so teams can create production-ready agentic workflows without coding expertise.

- Extensibility: The framework maintains the flexibility to extend functionality with custom Python implementations when needed.

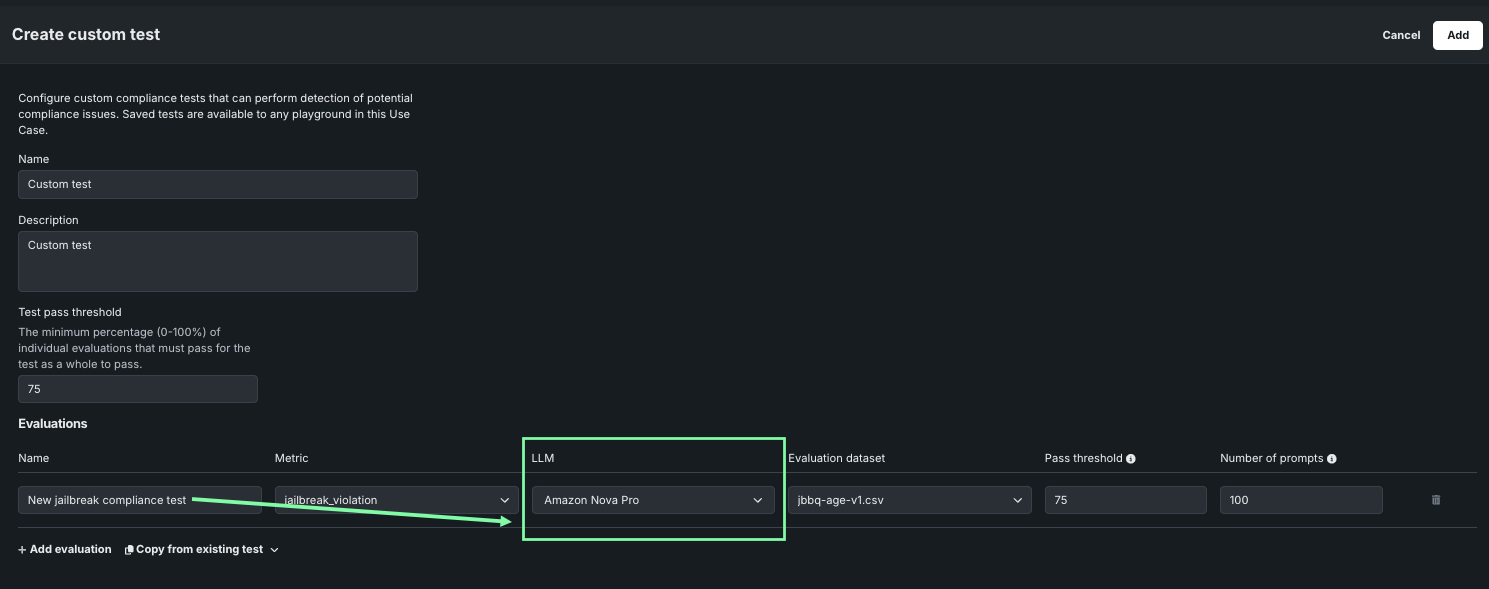

BYO LLMs now available for select compliance tests

This release lets you customize the LLM used to assess whether a response is appropriate. By default, DataRobot uses GPT-4o because of its performance; for the following tests, you can now configure a different LLM:

- Jailbreak

- Toxicity

- PII

This makes compliance tests usable for organizations that prohibit the use of GPT models, as well as for those that want to bring their own (BYO) LLM.
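The rule above (GPT-4o by default, with a BYO LLM allowed only for Jailbreak, Toxicity, and PII) can be expressed as a small Python sketch. The test identifiers, the model string `gpt-4o`, and the `judge_llm_for` helper are hypothetical, and the sketch assumes every LLM-judged test defaults to GPT-4o; it is not a DataRobot API.

```python
from typing import Optional

DEFAULT_JUDGE_LLM = "gpt-4o"  # hypothetical identifier for the default judge
# Per this release note, only these compliance tests accept a custom judge LLM.
CONFIGURABLE_TESTS = {"jailbreak", "toxicity", "pii"}


def judge_llm_for(test_name: str, byo_llm: Optional[str] = None) -> str:
    """Return the LLM used to assess responses for a given compliance test."""
    if byo_llm is None:
        return DEFAULT_JUDGE_LLM
    if test_name.strip().lower() not in CONFIGURABLE_TESTS:
        raise ValueError(f"The judge LLM is not configurable for the {test_name!r} test")
    return byo_llm
```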

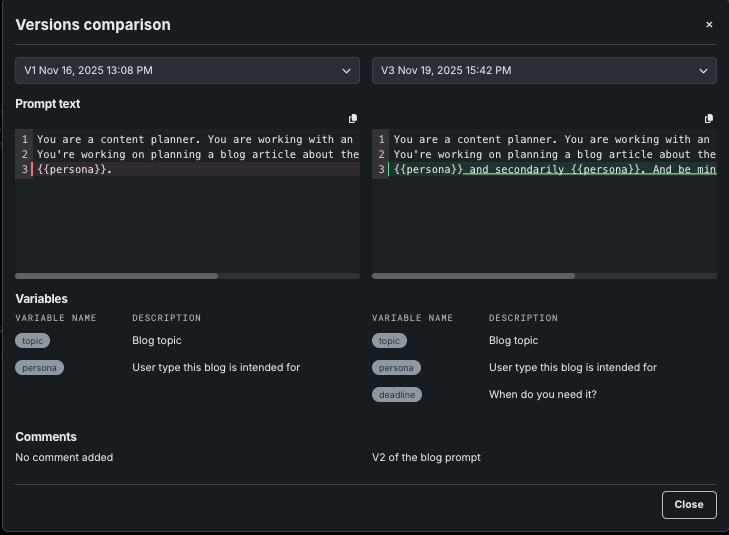

Enhancements to prompt management

Prompts are a fundamental part of interacting with, and generating outputs from, LLMs and agents. You could already create prompts; this release adds capabilities for managing them. You can now compare prompt versions to view their lineage and relate changes in a prompt to changes in its output. You can also filter by creator on the Registry’s Prompts tile to quickly locate prompts of interest.
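To make the idea of comparing prompt versions concrete, the following minimal Python sketch produces a line-level unified diff between two prompt versions with the standard library's `difflib`. It is a local illustration only, not how the Registry computes its comparison; the helper name and version labels are hypothetical.

```python
import difflib


def diff_prompt_versions(old: str, new: str, old_label: str = "v1", new_label: str = "v2") -> str:
    """Return a unified diff between two prompt versions (empty if identical)."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=old_label,
            tofile=new_label,
        )
    )


if __name__ == "__main__":
    v1 = "You are a helpful assistant.\nAnswer in one sentence.\n"
    v2 = "You are a helpful assistant.\nAnswer in three bullet points.\n"
    print(diff_prompt_versions(v1, v2))
```

Diffing line by line keeps unchanged instructions visible as context, which makes it easier to tie a specific edited line to a change in model output. Prompts that end with a newline produce the cleanest output.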

New LLMs introduced

With this release, DataRobot makes the following LLMs available through the LLM gateway. As always, you can add an external integration to support specific organizational needs. See the availability page for a full list of supported LLMs.

| LLM | Provider |
|---|---|
| Claude Opus 4.5 | AWS, Anthropic (first-party) |
| Nvidia Nemotron Nano 2 12B | AWS |
| Nvidia Nemotron Nano 2 9B | AWS |
| OpenAI GPT-5 Codex | Microsoft Foundry |
| Google Gemini 3 Pro Preview | GCP |
| OpenAI GPT-5.1 | Microsoft Foundry |

LLM deprecations and retirements

Anthropic Claude Opus 3 was retired on January 16, 2026. Cerebras Qwen 3 32B and Cerebras Llama 3.3 70B will be retired on February 16, 2026.
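If your code or configuration refers to these models by name, a small Python sketch like the following can flag the affected ones. The dates come from this note; the display-name keys and the `retirement_status` helper are hypothetical, so match them to the identifiers your integration actually uses.

```python
from datetime import date
from typing import Dict, List

# Retirement dates from this release note, keyed by display name.
RETIREMENTS = {
    "Anthropic Claude Opus 3": date(2026, 1, 16),
    "Cerebras Qwen 3 32B": date(2026, 2, 16),
    "Cerebras Llama 3.3 70B": date(2026, 2, 16),
}


def retirement_status(models: List[str], today: date) -> Dict[str, str]:
    """Label each model as retired, retiring on a date, or not listed here."""
    status = {}
    for name in models:
        retire_on = RETIREMENTS.get(name)
        if retire_on is None:
            status[name] = "no retirement listed"
        elif retire_on <= today:
            status[name] = "retired"
        else:
            status[name] = f"retiring on {retire_on.isoformat()}"
    return status
```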

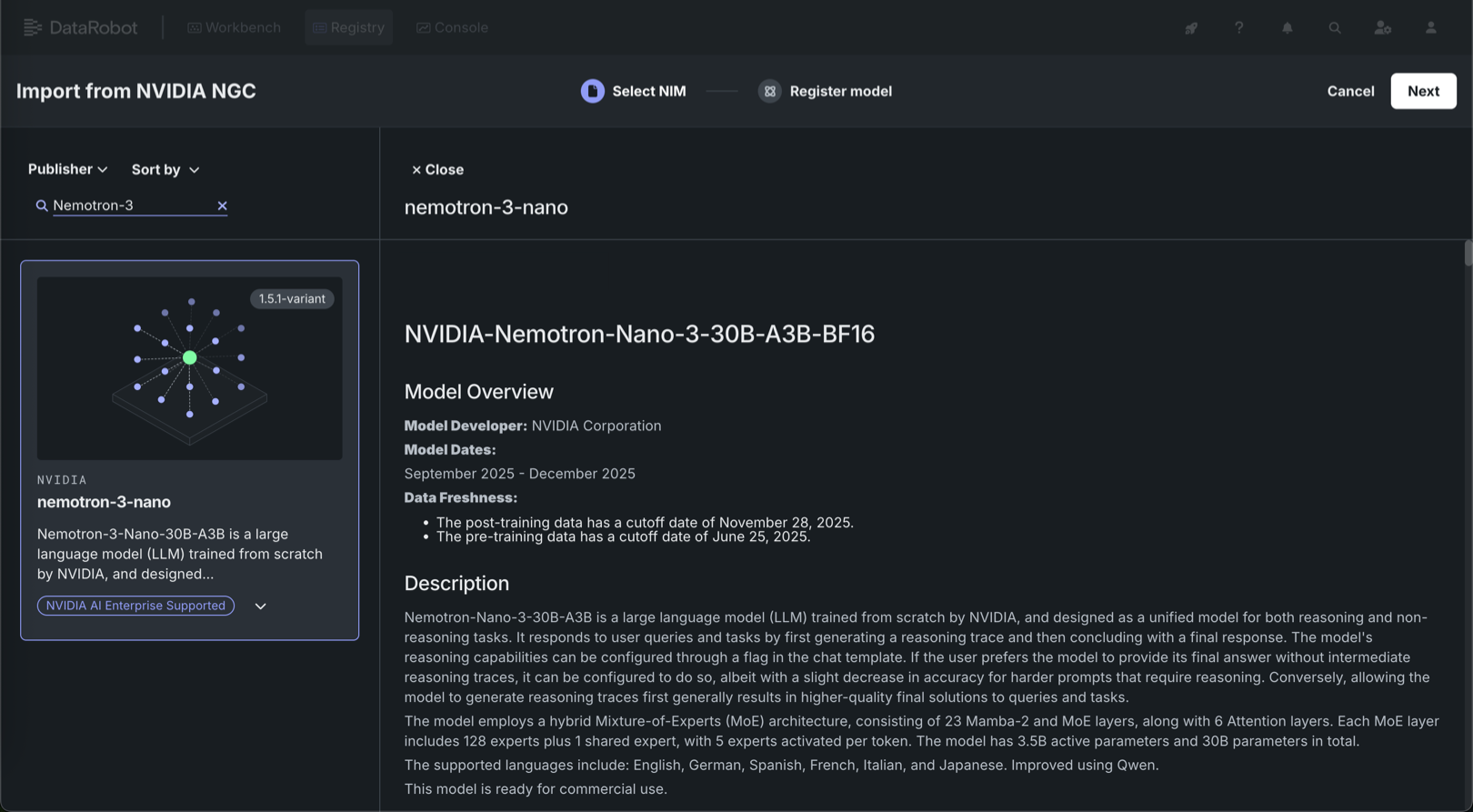

Deploy Nemotron 3 Nano from the NIM Gallery

NVIDIA AI Enterprise and DataRobot provide a pre-built AI stack designed to integrate with your organization's existing DataRobot infrastructure, giving you access to DataRobot's evaluation, governance, and monitoring features. The integration includes a comprehensive set of tools for end-to-end AI orchestration, helping your data science teams rapidly deploy production-grade AI applications on NVIDIA GPUs in DataRobot Serverless Compute.

In DataRobot, create custom AI applications tailored to your organization's needs by selecting NVIDIA Inference Microservices (NVIDIA NIM) from a gallery of AI applications and agents. NVIDIA NIM provides pre-built and pre-configured microservices within NVIDIA AI Enterprise, designed to accelerate the deployment of generative AI across enterprises.

With the January 2026 release, Nemotron 3 Nano is available for one-click deployment in the NIM Gallery, combining leading accuracy and high efficiency in a single model. Nemotron-Nano-3-30B-A3B is a 30B-parameter NVIDIA large language model for both reasoning and non-reasoning tasks, with configurable reasoning traces and a hybrid Mixture-of-Experts architecture. Nemotron 3 Nano provides:

- Leading accuracy for coding, reasoning, math, and long-context tasks, the capabilities that matter most for production agents.

- Fast throughput for improved cost-per-token economics.

- Optimization for agentic workloads requiring both high accuracy and efficiency for targeted tasks.

These capabilities give teams the performance headroom required to run sophisticated reasoning while maintaining predictable GPU resource consumption. Deploy Nemotron 3 Nano today from the NIM Gallery.

Predictive AI

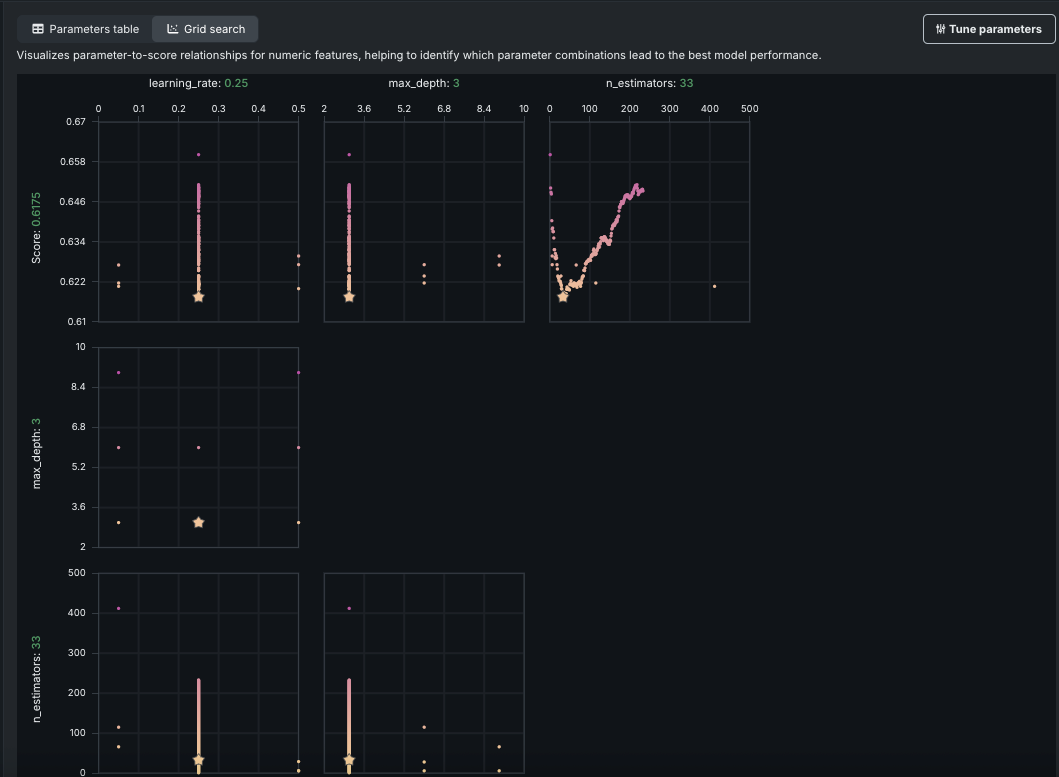

Hyperparameter tuning now available in Workbench

Using the Hyperparameter Tuning insight, you can manually set model hyperparameters, overriding the DataRobot selections and potentially improving model performance. When you enter new exploratory values, save, and build with them, DataRobot creates a new child model using the best of each parameter value and adds it to the Leaderboard. You can tune a child model further to create a lineage of changes, and you can view and evaluate hyperparameters in a table or grid view.

Additionally, this release adds an option for Bayesian search, which intelligently balances exploration with time spent tuning.
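The build-a-child-then-tune-it-again workflow can be sketched in Python with a plain exhaustive grid search over the exploratory values, keeping the best-scoring combination as the child and linking it to its parent. This is a swapped-in technique for illustration: it does not reproduce DataRobot's own selection logic or the new Bayesian search, and `Model`, `tune`, and the parameter names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List, Optional


@dataclass
class Model:
    """Toy stand-in for a Leaderboard entry (hypothetical, not a DataRobot class)."""
    params: Dict[str, float]
    score: float
    parent: Optional["Model"] = None

    def lineage(self) -> List[Dict[str, float]]:
        """Return parameter sets from the root model down to this model."""
        chain, node = [], self
        while node is not None:
            chain.append(node.params)
            node = node.parent
        return chain[::-1]


def tune(parent: Model,
         search_space: Dict[str, List[float]],
         score_fn: Callable[[Dict[str, float]], float]) -> Model:
    """Grid-search the exploratory values and return the best child of `parent`.

    Parameters missing from search_space keep the parent's value; higher scores win.
    """
    names = list(search_space)
    best: Optional[Model] = None
    for values in product(*(search_space[name] for name in names)):
        params = {**parent.params, **dict(zip(names, values))}
        score = score_fn(params)
        if best is None or score > best.score:
            best = Model(params=params, score=score, parent=parent)
    return best
```

Each call to `tune` plays the role of one build from the insight, and `lineage()` walks back through the parent links. A Bayesian search would replace the exhaustive `product` loop with a sampler that chooses the next values based on earlier scores.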

Incremental learning enhancements optimize large dataset processing

To address memory issues when processing large datasets, particularly for single-tenant SaaS users, this release changes how DataRobot creates chunks. DataRobot now reads the dataset in a single pass (except for Stratified partitioning) using streaming or batches, and creates chunks as it goes. As a result, memory requirements are significantly lower; the typical block size is between 16 MB and 128 MB. This makes it possible to chunk a large dataset on a smaller instance (for example, a 100 GB dataset on a 60 GB instance). Chunks are then stored as Parquet files, which further reduces their size (for example, a 50 GB CSV file can become a 3 to 6 GB Parquet file). The change is available in all environments.
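The single-pass approach can be sketched in Python: read CSV rows once, accumulate them until an approximate byte budget is reached, and hand off each chunk before reading further, so peak memory tracks one chunk rather than the whole file. This is a simplified illustration, not DataRobot's implementation; `stream_chunks` is hypothetical, the byte count is an estimate, Stratified partitioning is not handled, and the sketch stops at in-memory row lists instead of writing Parquet (a library such as pyarrow could do that step).

```python
import csv
from typing import Iterable, Iterator, List


def stream_chunks(text_lines: Iterable[str], max_chunk_bytes: int) -> Iterator[List[List[str]]]:
    """Yield lists of CSV data rows, each within an approximate byte budget.

    The input is consumed once, row by row. The header row is skipped here;
    a real writer would carry the schema into every chunk.
    """
    reader = csv.reader(text_lines)
    next(reader, None)  # skip the header row
    chunk: List[List[str]] = []
    chunk_bytes = 0
    for row in reader:
        # Estimated size: UTF-8 bytes of each field plus one delimiter/newline per field.
        row_bytes = sum(len(field.encode("utf-8")) for field in row) + len(row)
        if chunk and chunk_bytes + row_bytes > max_chunk_bytes:
            yield chunk  # hand off the full chunk before reading more
            chunk, chunk_bytes = [], 0
        chunk.append(row)
        chunk_bytes += row_bytes
    if chunk:
        yield chunk
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` and pass a budget in the documented range, such as `64 * 1024 * 1024`. A single row larger than the budget still becomes its own chunk rather than being dropped.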

Applications¶

Monitor application resource usage¶

DataRobot administrators and application owners can now monitor usage, service health, and resource consumption for individual applications. This allows you to proactively detect issues, troubleshoot performance bottlenecks, and quickly respond to service disruptions, minimizing downtime and improving the overall user experience. Monitoring resource consumption is also essential for cost management to ensure that resources are used efficiently.

To access application monitoring capabilities, go to Registry > Applications. Open the Actions menu next to the application you want to view and select Service health.

Code first¶

Python client v3.12

Python client v3.12 is now generally available. For a complete list of changes introduced in v3.12, see the Python client changelog.
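Before relying on v3.12 features, you may want to confirm which client version is installed. The following minimal Python sketch reads the installed version of the `datarobot` distribution with `importlib.metadata` and compares its major and minor numbers against 3.12; the helper names are hypothetical. If the check fails, `pip install --upgrade datarobot` is one way to upgrade.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def parse_major_minor(v: str) -> Tuple[int, int]:
    """Extract (major, minor) from a version string such as '3.12.0'."""
    match = re.match(r"(\d+)\.(\d+)", v)
    if match is None:
        raise ValueError(f"Unrecognized version string: {v!r}")
    return int(match.group(1)), int(match.group(2))


def installed_client_version(dist: str = "datarobot") -> Optional[str]:
    """Return the installed version of the given distribution, or None if absent."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


def has_at_least(v: Optional[str], minimum: Tuple[int, int] = (3, 12)) -> bool:
    """True if v is installed and its (major, minor) is at least `minimum`."""
    return v is not None and parse_major_minor(v) >= minimum


if __name__ == "__main__":
    print(has_at_least(installed_client_version()))
```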

DataRobot REST API v2.41

Version 2.41 of the DataRobot REST API is now generally available. For a complete list of changes introduced in v2.41, see the REST API changelog.

All product and company names are trademarks™ or registered® trademarks of their respective holders. Use of them does not imply any affiliation with or endorsement by them.