Migrate models from dedicated to serverless prediction environments

Serverless prediction environments provide a scalable, Kubernetes-based deployment architecture. Unlike dedicated prediction environments (DPEs), they offer configurable resource settings, so you can adjust the environment's resource allocation to suit the requirements of the deployed models.

With the Self-Managed AI Platform's 10.2 release, serverless prediction environments became the default deployment platform for all new DataRobot installations, replacing dynamic/static prediction endpoints. New Self-Managed organizations running a DataRobot 10.2+ installation have access to a pre-provisioned DataRobot Serverless prediction environment on the Prediction Environments page.

On the Managed AI Platform (SaaS), organizations created after November 2024 have access to a pre-provisioned DataRobot Serverless prediction environment on the Prediction Environments page.

Consider the guidelines in the table below when preparing to migrate model deployments to serverless prediction environments:

| Deployment category | Recommended action |
|---|---|
| New deployments | Use a serverless prediction environment for every new deployment you create. Unused dedicated prediction servers will be removed at your next renewal. |
| Existing deployments | On the Managed AI Platform (SaaS), the support or account team may ask you to migrate your models during your next renewal. Consider migrating your models to serverless environments as soon as possible, with the goal of completing the migration by the end of 2025. New deployments don't include historical observability data; if you want to retain this data, contact DataRobot support for assistance. |
| Deployments integrated with external applications | Consult with your application team to determine whether and when migration is possible. |

Contact us

If you find it challenging to complete a migration of your organization's models from dedicated prediction environments to serverless prediction environments before the end of 2025, contact the DataRobot account team.

Why serverless prediction environments?

Serverless prediction environments support:

- Deploying AutoML and time series models, custom models, GenAI blueprints, and vector databases.

- Real-time and batch prediction APIs.

- Scale-to-zero and CPU-based autoscaling for DataRobot models.

Serverless prediction environments enable scalable model deployments with:

- Distributed compute—through scoring on multiple compute nodes.

- Configurable resource settings—available on each deployment.

- Concurrent batch prediction jobs—with configurable maximum concurrency.

- Multi-threaded prediction gateways for custom models.

How do I use serverless prediction environments?

To migrate models, deploy the registered models from Registry to a serverless prediction environment. Review the following considerations before you begin.

Considerations

When migrating models to serverless prediction environments, consider the following:

- Serverless environments introduce a new signature for the real-time API; adjust your applications to use this new signature. DataRobot provides a Python library, `datarobot-predict`, with helper functions for communicating with serverless deployments from code.

- New deployments don't include historical observability data; if you want to retain this data, contact DataRobot support for assistance.
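One visible part of the new real-time API signature is how a request is addressed and authenticated. The Python sketch below contrasts the two shapes. It assumes that a dedicated prediction server is called at `https://{prediction_server}/predApi/v1.0/deployments/{deployment_id}/predictions` with a `DataRobot-Key` header, and that a serverless deployment is called through the main DataRobot API endpoint (for example, `https://app.datarobot.com/api/v2`) at `/deployments/{deployment_id}/predictions` with the API token only. These routes, headers, and the helper names are assumptions for illustration; confirm them against the prediction code DataRobot generates for your deployment. The sketch only builds the request and does not send it.

```python
from dataclasses import dataclass, field


@dataclass
class PredictionRequest:
    """URL and headers for a real-time prediction call (the request is not sent)."""
    url: str
    headers: dict = field(default_factory=dict)


def dedicated_request(prediction_server: str, deployment_id: str,
                      api_token: str, datarobot_key: str) -> PredictionRequest:
    # Assumed dedicated route: predApi on a separate prediction host,
    # authenticated with an API token plus a DataRobot-Key header.
    return PredictionRequest(
        url=(f"https://{prediction_server}/predApi/v1.0/"
             f"deployments/{deployment_id}/predictions"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "DataRobot-Key": datarobot_key,
            "Content-Type": "text/csv; charset=UTF-8",
        },
    )


def serverless_request(api_endpoint: str, deployment_id: str,
                       api_token: str) -> PredictionRequest:
    # Assumed serverless route: the main DataRobot API endpoint
    # (e.g. https://app.datarobot.com/api/v2), authenticated with the token only.
    return PredictionRequest(
        url=f"{api_endpoint.rstrip('/')}/deployments/{deployment_id}/predictions",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "text/csv; charset=UTF-8",
        },
    )
```

To actually score data, you could pass the URL, headers, and a CSV payload to an HTTP client, for example `requests.post(req.url, data=csv_bytes, headers=req.headers)`. In practice, the `datarobot-predict` library's helper functions are the supported alternative to hand-built requests.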

-

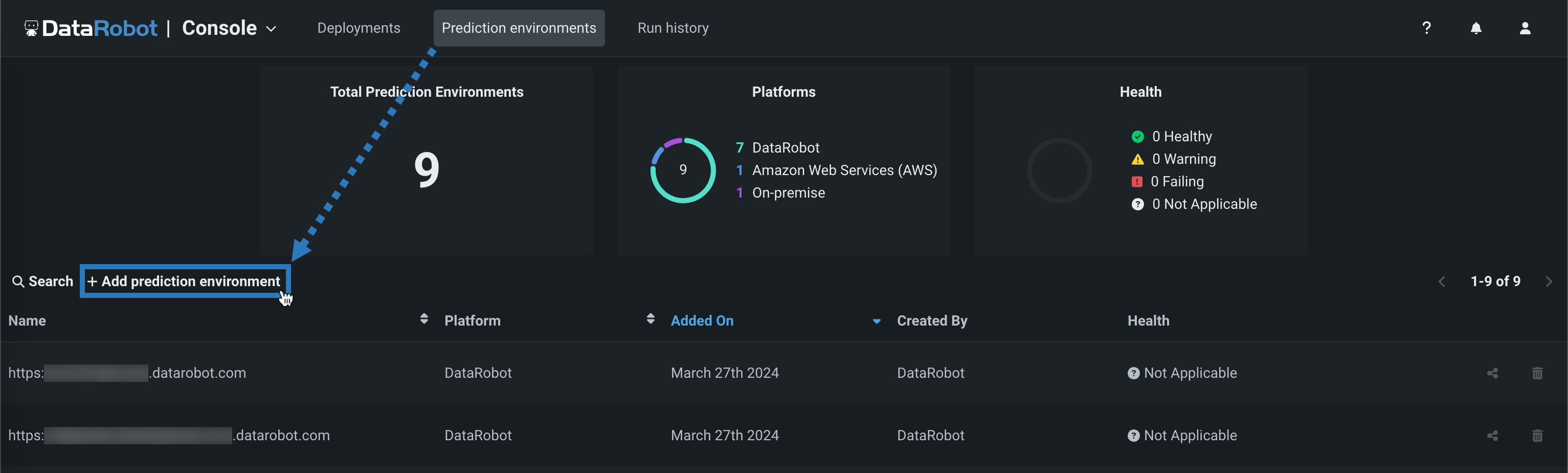

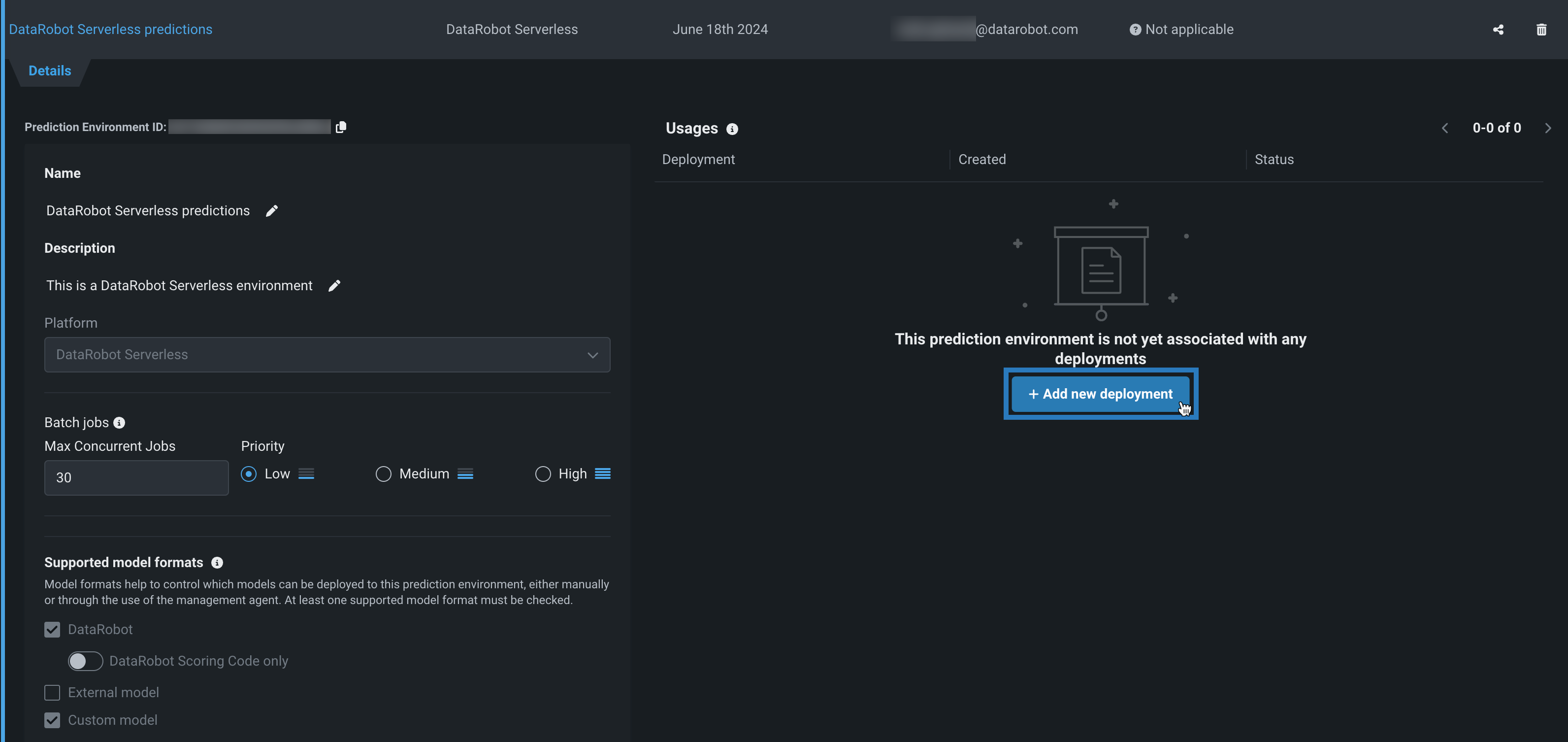

Create a serverless prediction environment in Console.

-

Deploy a model to the serverless environment.

-

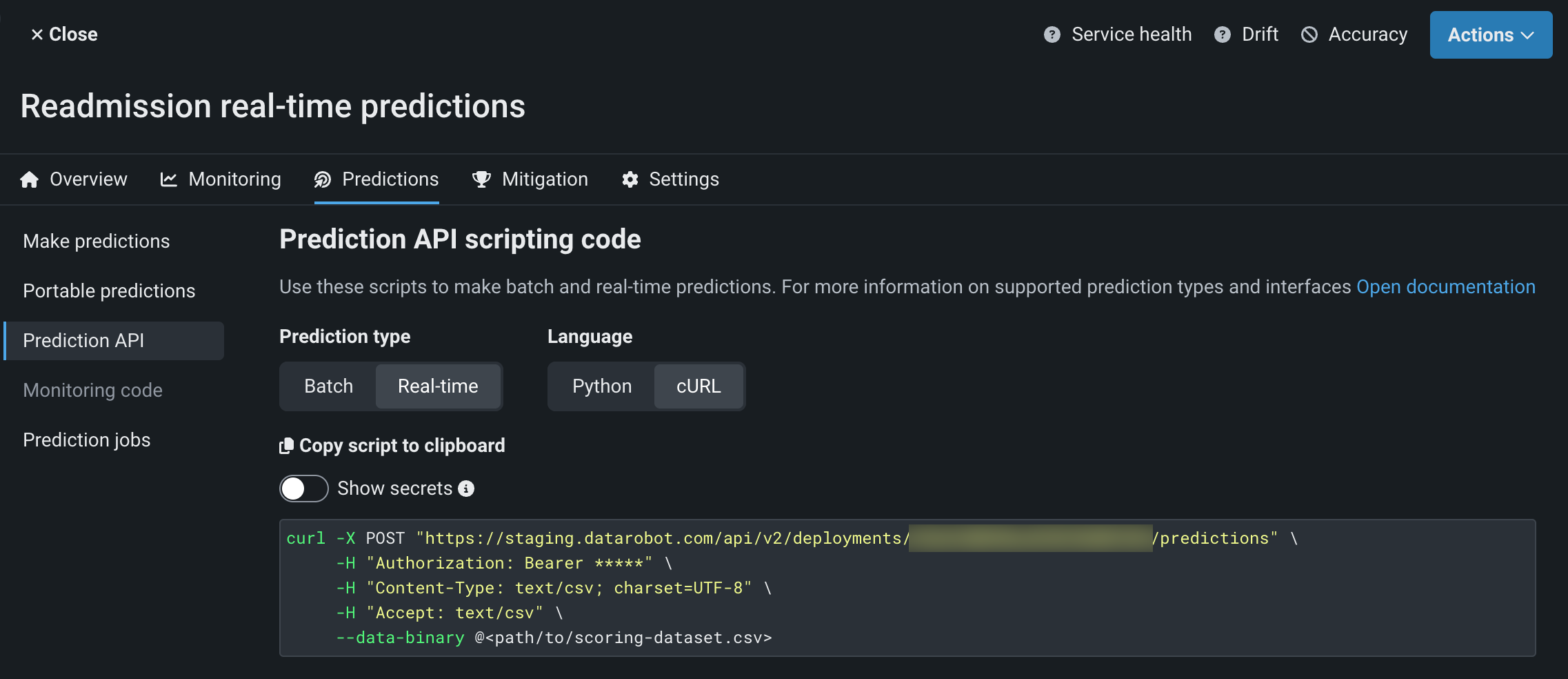

Make predictions using the real-time prediction API or via batch prediction jobs.

-

If the deployment is integrated with an external application, modify your existing applications and scripts to call the new prediction endpoint using the

datarobot-predictlibrary.Serverless environments also support the Bolt-on Governance API, enabling communication with deployed GenAI blueprints through the official Python library for the OpenAI API.
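Because the Bolt-on Governance API is reached through the official OpenAI Python library, client code largely amounts to pointing that library at the deployment. The sketch below assumes that the deployment's OpenAI-compatible base URL is `{api_endpoint}/deployments/{deployment_id}/` and that the placeholder model name `datarobot-deployed-llm` is accepted; both are assumptions, as are the helper names `openai_client_kwargs` and `ask_deployment`. The client is passed in as a parameter so the logic can be exercised without network access.

```python
from typing import Any


def openai_client_kwargs(api_endpoint: str, deployment_id: str, api_token: str) -> dict:
    """Keyword arguments for openai.OpenAI(...) targeting one deployment.

    Assumption: the Bolt-on Governance API is served under the deployment's
    route on the DataRobot API endpoint, e.g. https://app.datarobot.com/api/v2.
    """
    return {
        "base_url": f"{api_endpoint.rstrip('/')}/deployments/{deployment_id}/",
        "api_key": api_token,  # DataRobot API token, not an OpenAI key
    }


def ask_deployment(client: Any, prompt: str,
                   model: str = "datarobot-deployed-llm") -> str:
    """Send one user message through an OpenAI-style client and return the reply.

    `model` is an assumed placeholder name; check which value your
    deployment expects.
    """
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    # Standard OpenAI chat completion shape: text of the first choice.
    return completion.choices[0].message.content
```

With the `openai` package installed, usage would look like `client = OpenAI(**openai_client_kwargs("https://app.datarobot.com/api/v2", deployment_id, api_token))` followed by `ask_deployment(client, "Summarize this document.")`.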