Generative AI (V11.0)

April 14, 2025

The DataRobot V11.0.0 release includes many new and improved GenAI capabilities. See additional details of Release 11.0:

Features grouped by capability

| Feature | Workbench | Classic |
|---|---|---|
| GenAI: General | | |
| NVIDIA AI Enterprise integration* | ✔ | |
| New LLMs now available in the playground | ✔ | |
| New LLM deprecation-to-retirement process protects LLM assets | ✔ | |
| Trial users now have access to all location-appropriate LLMs | ✔ | |

Premium

DataRobot's Generative AI capabilities are a premium feature; contact your DataRobot representative for enablement information. Try this functionality for yourself in a limited capacity in the DataRobot trial experience.

GenAI general enhancements

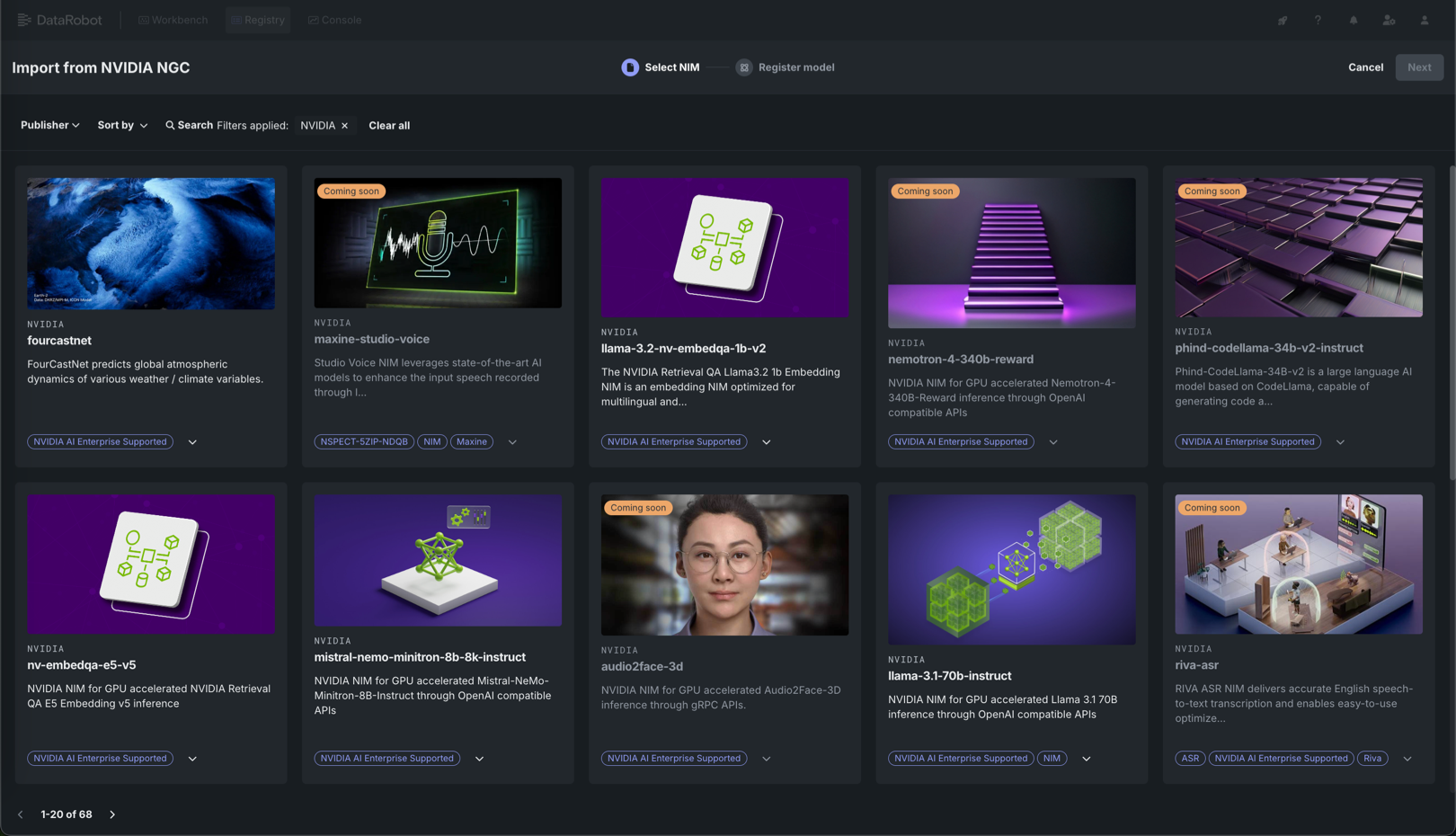

NVIDIA AI Enterprise integration

NVIDIA AI Enterprise and DataRobot provide a pre-built AI stack solution designed to integrate with your organization's existing DataRobot infrastructure, giving you access to robust evaluation, governance, and monitoring features. The integration includes a comprehensive set of tools for end-to-end AI orchestration that accelerate your organization's data science pipelines, so you can rapidly deploy production-grade AI applications on NVIDIA GPUs in DataRobot Serverless Compute.

In DataRobot, create custom AI applications tailored to your organization's needs by selecting NVIDIA Inference Microservices (NVIDIA NIM) from a gallery of AI applications and agents. NVIDIA NIM provides pre-built and pre-configured microservices within NVIDIA AI Enterprise, designed to accelerate the deployment of generative AI across enterprises.

For more information on the NVIDIA AI Enterprise and DataRobot integration, review the workflow summary documentation or the documentation listed below:

| Task | Description |
|---|---|
| Create an inference endpoint for NVIDIA NIM | Register and deploy with NVIDIA NIM to create inference endpoints accessible through code or the DataRobot UI. |
| Evaluate a text generation NVIDIA NIM in the playground | Add a deployed text generation NVIDIA NIM to a blueprint in the playground to access an array of comparison and evaluation tools. |
| Use an embedding NVIDIA NIM to create a vector database | Add a registered or deployed embedding NVIDIA NIM to a Use Case with a vector database to enrich prompts in the playground with relevant context before they are sent to the LLM. |
| Use NVIDIA NeMo Guardrails in a moderation framework to secure your application | Connect NVIDIA NeMo Guardrails to deployed text generation models to guard against off-topic discussions, unsafe content, and jailbreaking attempts. |
| Use a text generation NVIDIA NIM in an application template | Customize application templates from DataRobot to use a registered or deployed NVIDIA NIM text generation model. |

New LLMs now available in the playground

As part of its commitment to providing the latest best-in-class GenAI technology, DataRobot now offers a suite of new LLMs, generally available to all subscribed enterprise users and trial users. The following LLMs, which can be used to create LLM blueprints from the playground, have been added since v10.2:

| LLM | Description |
|---|---|
| Amazon Nova Lite | A low-cost multimodal model that quickly processes image, video, and text inputs. |
| Amazon Nova Micro | A text-only model that can reason over text, offering low latency and low cost. |
| Amazon Nova Pro | A multimodal understanding foundation model that can reason over text, images, and videos with the best combination of accuracy, speed, and cost. |
| Anthropic Claude 3.5 Sonnet v1 | The first version of Claude 3.5 Sonnet, which excels in complex reasoning, coding, and visual information. |
| Anthropic Claude 3.5 Sonnet v2 | The second version of Claude 3.5 Sonnet, which excels in complex reasoning, coding, and visual information, and can generate computer actions (e.g., keystrokes, mouse clicks). Model access is disabled for Cloud users on the EU platform due to regulations. |
| Azure OpenAI GPT-4o mini | Excels at text and image processing with low cost and low latency and, in appropriate use cases, can replace GPT-3.5 Turbo series models. |

See the full list of LLM availability in DataRobot, with links to creator documentation, for assistance in choosing the appropriate model.

New LLM deprecation-to-retirement process protects LLM assets

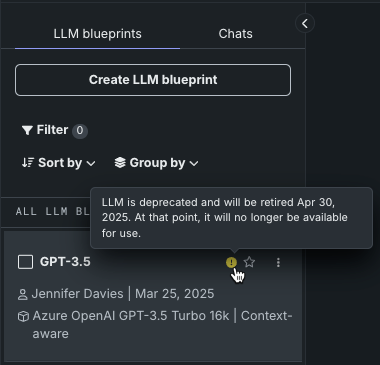

DataRobot now displays badges to alert you when an LLM is in the deprecation process, a mechanism that protects experiments and deployments from the unexpected removal of vendor support. A deprecation badge on an LLM indicates that the model will be retired in two months. When an LLM is deprecated, users are notified, but functionality is not curtailed. For example, you can still submit a chat or comparison prompt, generate metrics for the blueprint, or copy to a new blueprint. Once an LLM is retired, assets created from it are still viewable, but creating new assets is prevented. Hover over the notification icon in the LLM blueprint list to see the retirement date.

If a deployed LLM is retired, the deployment will fail to return predictions because DataRobot does not control the credentials used for the underlying LLM. If this happens, replace the deployed LLM with a new model.

The following LLMs are currently, or will soon be, deprecated:

| LLM | Retirement date |
|---|---|
| Gemini Pro 1.5 | May 24, 2025 |
| Gemini Flash 1.5 | May 24, 2025 |
| Google Bison | April 9, 2025 |
| GPT 3.5 Turbo 16k | April 30, 2025 |
| GPT-4 | June 6, 2025 |
| GPT-4 32k | June 6, 2025 |
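As a rough illustration of the deprecation-to-retirement timeline, the following Python sketch classifies an LLM as active, deprecated, or retired on a given date, using the retirement dates from the table above. It is not DataRobot's implementation or API. The function names and status labels are hypothetical, and the sketch assumes that the deprecation badge appears exactly two calendar months before the retirement date and that retirement takes effect on the listed date itself.

```python
import calendar
from datetime import date

# Retirement dates copied from the table above.
RETIREMENT_DATES = {
    "Gemini Pro 1.5": date(2025, 5, 24),
    "Gemini Flash 1.5": date(2025, 5, 24),
    "Google Bison": date(2025, 4, 9),
    "GPT 3.5 Turbo 16k": date(2025, 4, 30),
    "GPT-4": date(2025, 6, 6),
    "GPT-4 32k": date(2025, 6, 6),
}


def two_months_before(d: date) -> date:
    """Return the date two calendar months before d, clamping the day
    to the last day of the target month (e.g. April 30 -> February 28)."""
    month_index = d.year * 12 + (d.month - 1) - 2
    year, month0 = divmod(month_index, 12)
    month = month0 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def llm_status(name: str, today: date) -> str:
    """Hypothetical helper: classify an LLM as 'active', 'deprecated',
    or 'retired' on the given date.

    Assumptions (not confirmed by the source): the deprecation window
    opens two calendar months before retirement, and the LLM counts as
    retired on the retirement date itself.
    """
    retirement = RETIREMENT_DATES.get(name)
    if retirement is None:
        return "active"  # no retirement date listed in the table
    if today >= retirement:
        return "retired"  # existing assets viewable, no new assets
    if today >= two_months_before(retirement):
        return "deprecated"  # badge shown, functionality unchanged
    return "active"


if __name__ == "__main__":
    release_day = date(2025, 4, 14)  # date of this release
    for llm in RETIREMENT_DATES:
        print(f"{llm}: {llm_status(llm, release_day)}")
```

A check like this could be run against the LLMs used in existing blueprints or deployments to flag which ones need a replacement model before the retirement date.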

Trial users now have access to all location-appropriate LLMs

Previously, trial users had only a subset of LLMs available to them. Now, DataRobot offers trial users access to LLMs supported in their region. See the full list of LLM availability for region-specific information.
