Code-first (V10.2)¶

November 21, 2024

The DataRobot v10.2 release includes many new features and capabilities for code-first users, described below. See additional details of Release 10.2 in:

Code-first features


Applications¶

GA¶

Provision DataRobot assets with the application template gallery¶

Application templates provide a code-first, end-to-end pipeline for provisioning DataRobot resources. With customizable components, templates assist you by programmatically generating DataRobot resources that support predictive and generative use cases. The templates include the necessary metadata, automatically install dependencies and configuration settings, and integrate with existing DataRobot infrastructure to help you quickly deploy and configure solutions.

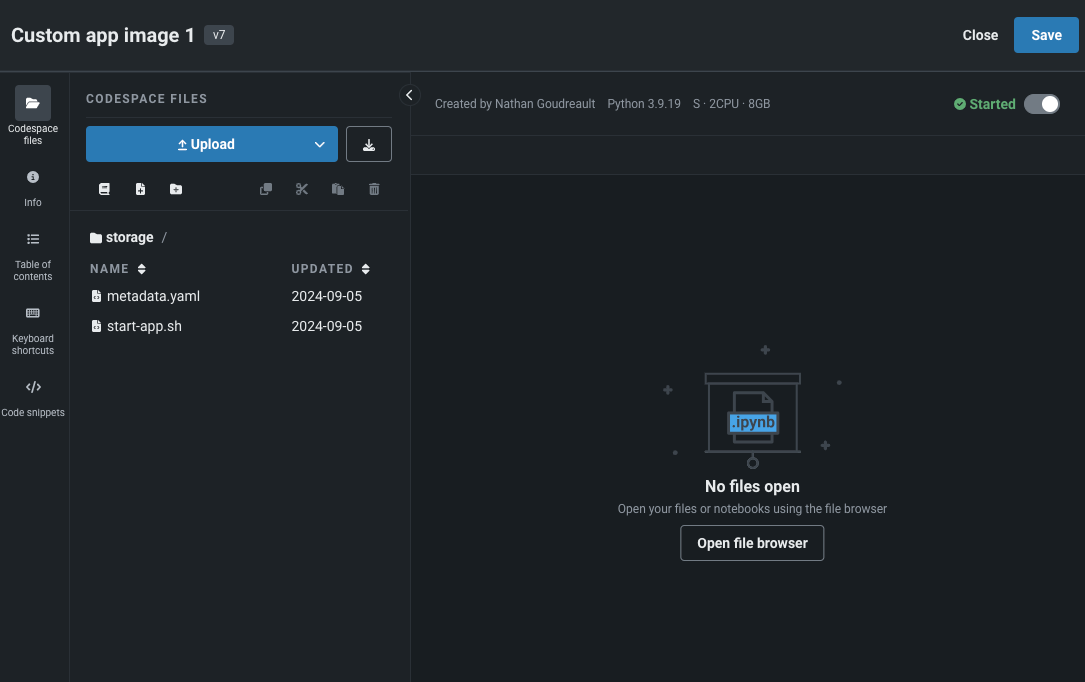

Manage custom applications in Registry¶

Now generally available, the Applications page in the NextGen Registry is home to all custom applications and application sources available to you. You can now create application sources, which contain the files, environment, and runtime parameters for custom applications you want to build, and build custom applications directly from these sources. You can also use the Applications page to manage applications by sharing or deleting them.

With general availability, you can open and manage application sources in a codespace, allowing you to directly edit a source's files, upload new files to it, and use all of the codespace's functionality.

Build custom applications from the template gallery¶

DataRobot provides templates from which you can build custom applications. The templates give you pre-built application front-ends out of the box, with extensive options for customizing them. A template can use a model that has already been deployed, so you can quickly start and access a Streamlit, Flask, or Slack application. Use a custom application template as a simple method for building and running custom code within DataRobot.

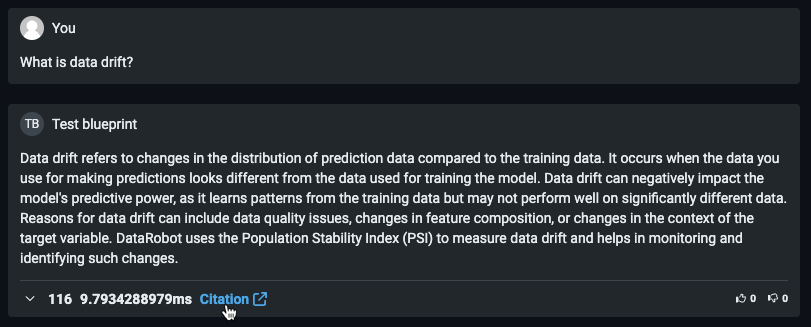

Chat generation Q&A application now GA¶

Now generally available, you can create a chat generation Q&A application with DataRobot to explore knowledge base Q&A use cases while leveraging generative AI to repeatedly make business decisions and showcase business value. The Q&A app offers an intuitive and responsive way to prototype, explore, and share the results of LLMs you've built, and it powers generative AI conversations backed by citations. Additionally, you can share the app with non-DataRobot users to expand its usability.

You can also use a code-first workflow to manage the chat generation Q&A application. To access the flow, navigate to DataRobot's GitHub repo. The repo contains a modifiable template for application components.

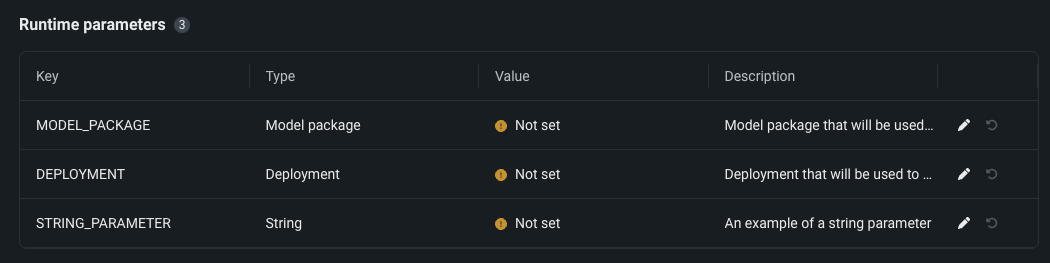

Custom application runtime parameters now GA¶

Now generally available, you can configure the resources and runtime parameters for application sources in the NextGen Registry. The resource bundle determines the maximum amount of memory and CPU that an application can consume to minimize potential environment errors in production. You can create and define runtime parameters used by the custom application by including them in the metadata.yaml file built from the application source.
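As a rough illustration of how application code might consume a runtime parameter at startup, the sketch below reads one from the environment. It assumes that each parameter is exposed as an environment variable named `MLOPS_RUNTIME_PARAM_<NAME>` whose value is a JSON object with a `payload` field. That convention, the `read_runtime_param` helper, and the `GREETING` parameter are assumptions for illustration and are not taken from this release note, so check the runtime parameters documentation for the exact contract.

```python
import json
import os

# Assumed prefix for runtime parameters injected into the app container.
PARAM_PREFIX = "MLOPS_RUNTIME_PARAM_"


def read_runtime_param(name, default=None):
    """Return the value of a runtime parameter, or `default` if it is unset."""
    raw = os.environ.get(PARAM_PREFIX + name)
    if raw is None:
        return default
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        # Not JSON: treat the raw string as the value itself.
        return raw
    # Assumed shape: {"type": "string", "payload": "..."}.
    if isinstance(parsed, dict) and "payload" in parsed:
        return parsed["payload"]
    return parsed


# GREETING is a hypothetical parameter declared in metadata.yaml.
greeting = read_runtime_param("GREETING", default="hello")
print(greeting)
```

Supplying a default keeps the same code usable during local development, where no runtime parameters are injected.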

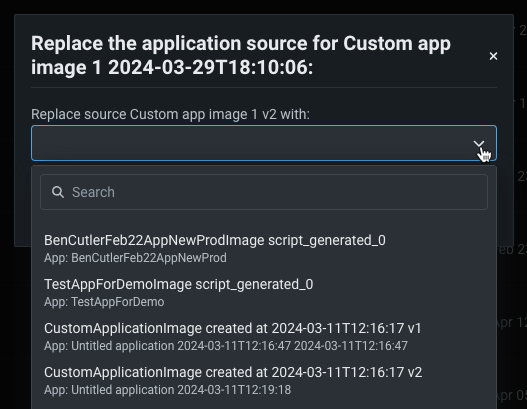

Replace custom application sources¶

Now generally available, you can replace the application source for custom applications. Doing so carries over a number of qualities from the application, including the application's code, the underlying execution environment, and the runtime parameters. When a source is replaced, all users with access to the application can still use it.

Simplified login process for applications¶

Applications now use an API key for authentication, so after creating an app in Workbench or DataRobot Classic, you no longer need to click through the OAuth authentication prompts. Note that when you create an app or access one that was shared with you, a new key (AiApp<app_id>) will appear in your list of API Keys.

Notebooks¶

GA¶

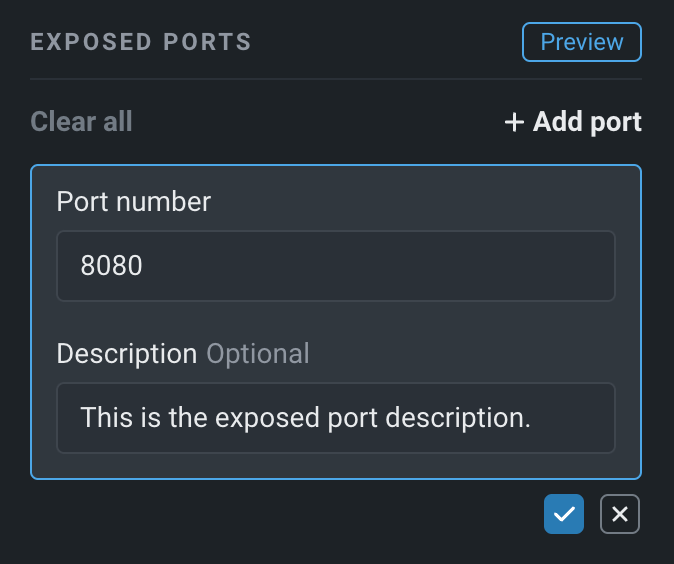

Notebook and codespace port forwarding now GA¶

Now generally available, you can enable port forwarding for notebooks and codespaces to access web applications launched by tools and libraries like MLflow and Streamlit. When developing locally, the web application is accessible at http://localhost:PORT; however, when developing in a hosted DataRobot environment, the port that the web application is running on (in the session container) must be forwarded to access the application. You can expose up to five ports in one notebook or codespace.
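To try port forwarding without extra dependencies, a session could run something as small as the standard-library server sketched below. The `start_demo_server` helper and port 8501 are illustrative choices, not values from the DataRobot documentation. Whichever port the server binds to is the one you would expose in the notebook or codespace settings.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a short plain-text body."""

    def do_GET(self):
        body = b"hello from the session container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Silence per-request logging to keep notebook output clean.
        pass


def start_demo_server(port=8501, host="0.0.0.0"):
    """Start the server in a daemon thread and return it (port=0 picks a free port)."""
    server = ThreadingHTTPServer((host, port), HelloHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

In a notebook cell, `server = start_demo_server()` leaves the server running in the background, and `server.shutdown()` followed by `server.server_close()` stops it and releases the port.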

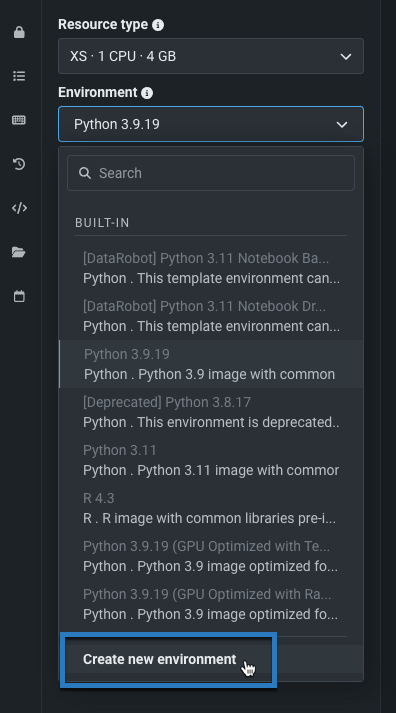

Create custom environments for notebooks¶

Now generally available, DataRobot Notebooks integrates with DataRobot custom environments, allowing you to define reusable custom Docker images for running notebook sessions. Custom environments provide full control over the environment configuration and the ability to leverage reproducible dependencies beyond those available in the built-in images. After creating a custom environment, you can share it with other users, or update its components to create a new version of the environment.

Convert standalone notebooks to codespaces¶

Now generally available, you can use DataRobot to convert a standalone notebook into a codespace to incorporate additional workflow capabilities, such as persistent file storage and Git compatibility, that require a codespace. When converting a notebook, DataRobot maintains a number of notebook assets, including the environment configuration, the notebook contents, scheduled job definitions, and more.

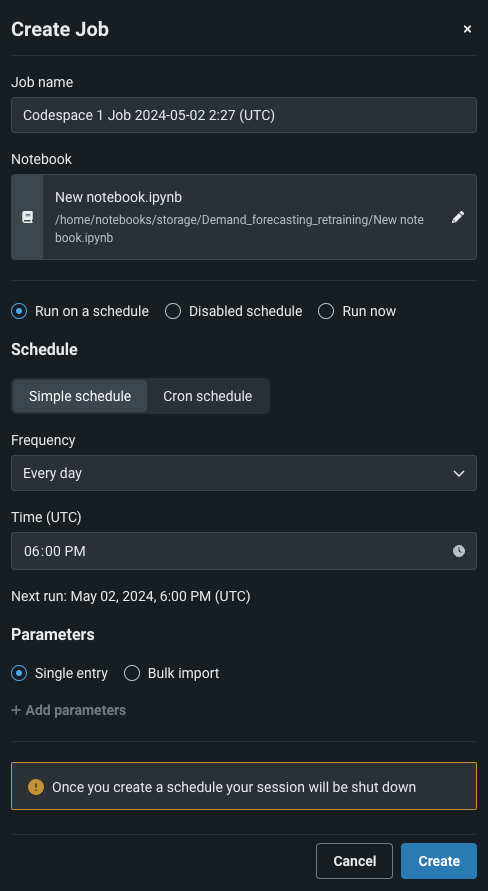

Create schedules for codespaces¶

Now generally available, you can automate your code-based workflows by scheduling notebooks in codespaces to run in non-interactive mode. Scheduling is managed by notebook jobs, and you can only create a new notebook job when your codespace is offline. You can also parameterize a notebook to enhance the automation that scheduling enables. By defining certain values in a codespace as parameters, you can provide inputs for those parameters when a notebook job runs instead of modifying the notebook itself to change the values for each run.
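A parameterized notebook might read its inputs as sketched below. This assumes job parameters reach the notebook as environment variables. The names `TRAINING_DATE` and `MAX_ROWS` and the `get_param` helper are hypothetical, so confirm how parameters are surfaced in the notebook scheduling documentation.

```python
import os


def get_param(name, default, cast=str):
    """Read a job parameter from the environment, falling back to a default."""
    raw = os.environ.get(name)
    if raw is None or raw == "":
        return default
    return cast(raw)


# Hypothetical parameters; interactive runs fall back to the defaults.
training_date = get_param("TRAINING_DATE", "2024-11-21")
max_rows = get_param("MAX_ROWS", 10_000, cast=int)

print(f"Running for {training_date} with at most {max_rows} rows")
```

Keeping the defaults in the notebook means the same cells work both interactively and in a scheduled, non-interactive run.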

GPU support for notebooks now GA¶

GPU resource types for notebook and codespace environment configuration are now generally available (GA Premium for managed AI Platform users).

When configuring the environment for your DataRobot notebook or codespace session, you can select a GPU machine from the list of resource types. DataRobot also provides GPU-optimized built-in environments that you can select for your session. These environment images contain the necessary GPU drivers as well as GPU-accelerated packages like TensorFlow, PyTorch, and RAPIDS.
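Once a session is running on a GPU resource type, a quick check like the following can confirm that a device is visible. It is a sketch that assumes the NVIDIA `nvidia-smi` utility is on the session's `PATH`, which this release note does not state. `gpu_visible` is a hypothetical helper, and on a CPU-only machine it simply returns `False`.

```python
import shutil
import subprocess


def gpu_visible():
    """Return True if `nvidia-smi -L` lists at least one GPU, else False."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # Utility not installed: assume no NVIDIA GPU is available.
        return False
    try:
        result = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    # Each detected device is listed on a line starting with "GPU <index>:".
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU visible:", gpu_visible())
```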

API enhancements¶

Python client v3.6¶

v3.6 of DataRobot's Python client is now generally available. For a complete list of changes introduced in v3.6, view the Python client changelog.

DataRobot REST API v2.35¶

v2.35 of DataRobot's REST API is now generally available. For a complete list of changes introduced in v2.35, view the REST API changelog.

Create vector databases with unstructured PDF documents¶

DataRobot now provides a service that runs OCR on a dataset so that you can extract and prepare unstructured data from PDFs, create vector databases from it, and start building RAG flows within DataRobot. The service outputs a dataset of PDF documents with the extracted text.

Use the declarative API to provision DataRobot assets¶

You can use the DataRobot declarative API as a code-first method for provisioning resources end-to-end in a way that is both repeatable and scalable. The declarative API supports both Terraform and Pulumi and lets you programmatically provision DataRobot entities such as models, deployments, applications, and more. The declarative API allows you to:

- Specify the desired end state of infrastructure, simplifying management and enhancing adaptability across cloud providers.

- Automate provisioning to ensure consistency across environments and remove concerns about execution order.

- Simplify version control.

- Use application templates to reduce workflow duplication and ensure consistency.

- Integrate with DevOps and CI/CD to ensure predictable, consistent infrastructure and reduce deployment risks.

The example below shows how you can use the declarative API to provision DataRobot resources using the Pulumi CLI:

import pulumi_datarobot as datarobot
import pulumi
import os

for var in [
    "OPENAI_API_KEY",
    "OPENAI_API_BASE",
    "OPENAI_API_DEPLOYMENT_ID",
    "OPENAI_API_VERSION",
]:
    assert var in os.environ

pe = datarobot.PredictionEnvironment(
    "pulumi_serverless_env", platform="datarobotServerless"
)

credential = datarobot.ApiTokenCredential(
    "pulumi_credential", api_token=os.environ["OPENAI_API_KEY"]
)

cm = datarobot.CustomModel(
    "pulumi_custom_model",
    base_environment_id="65f9b27eab986d30d4c64268",  # GenAI 3.11 w/ moderations
    folder_path="model/",
    runtime_parameter_values=[
        {"key": "OPENAI_API_KEY", "type": "credential", "value": credential.id},
        {
            "key": "OPENAI_API_BASE",
            "type": "string",
            "value": os.environ["OPENAI_API_BASE"],
        },
        {
            "key": "OPENAI_API_DEPLOYMENT_ID",
            "type": "string",
            "value": os.environ["OPENAI_API_DEPLOYMENT_ID"],
        },
        {
            "key": "OPENAI_API_VERSION",
            "type": "string",
            "value": os.environ["OPENAI_API_VERSION"],
        },
    ],
    target_name="resultText",
    target_type="TextGeneration",
)

rm = datarobot.RegisteredModel(
    resource_name="pulumi_registered_model",
    name=None,
    custom_model_version_id=cm.version_id,
)

d = datarobot.Deployment(
    "pulumi_deployment",
    label="pulumi_deployment",
    prediction_environment_id=pe.id,
    registered_model_version_id=rm.version_id,
)

pulumi.export("deployment_id", d.id)
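The program above never states an execution order; the order follows from references such as `pe.id` and `cm.version_id`. The sketch below illustrates that idea with Python's standard `graphlib` module. It is a conceptual illustration only, not how Pulumi or the DataRobot provider is implemented, and the dictionary of resource names is a hand-written stand-in for the graph a real engine would derive from those references.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it references (its prerequisites).
# Names mirror the variables in the Pulumi program; the mapping is hand-written.
dependencies = {
    "prediction_environment": set(),
    "credential": set(),
    "custom_model": {"credential"},
    "registered_model": {"custom_model"},
    "deployment": {"prediction_environment", "registered_model"},
}


def provisioning_order(graph):
    """Return one valid creation order in which prerequisites come first."""
    return list(TopologicalSorter(graph).static_order())


order = provisioning_order(dependencies)
print(order)
```

Any order the sorter returns creates the credential before the custom model and the deployment last, which is the guarantee a declarative tool provides without the author having to sequence the steps by hand.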

Accuracy over time data storage for Python 3 projects¶

DataRobot has changed the storage type for Accuracy over Time data from MongoDB to S3.

On the managed AI Platform (cloud), DataRobot uses blob storage by default. The feature flag BLOB_STORAGE_FOR_ACCURACY_OVER_TIME has been removed from the feature access settings.
