September 2023

September 27, 2023

With the latest deployment, DataRobot's AI Platform delivered the new GA and preview features listed below. From the release center you can also access:

In the spotlight

Compare models across experiments from a single view

Solving a business problem with machine learning is an iterative process that involves running many experiments to test ideas and confirm assumptions. To simplify iteration, Workbench introduces model comparison, a tool that lets you compare up to three models side by side from any number of experiments within a single Use Case. Instead of reviewing each experiment individually and recording metrics for later comparison, you can now compare models across experiments in a single view.

The comparison Leaderboard is accessible from any project in Workbench. You can filter it to locate and select models more easily, compare models across different insights, and view and compare metadata for the selected models. The Comparison tab is a preview feature, on by default.

The video below provides a quick overview of the comparison functionality.

Feature flag ON by default: Enable Use Case Leaderboard Compare

Preview documentation.

Video: Model comparison

New Google BigQuery connector added

The new BigQuery connector is now generally available in DataRobot. In addition to performance enhancements and Service Account authentication, this connector also enables support for BigQuery in Workbench, allowing you to:

- Create and configure data connections.

- Add BigQuery datasets to a Use Case.

- Wrangle BigQuery datasets, and then publish recipes to BigQuery to materialize the output in the Data Registry.

Video: Google BigQuery connector

September release

The following table lists each new feature:

Features grouped by capability

GA

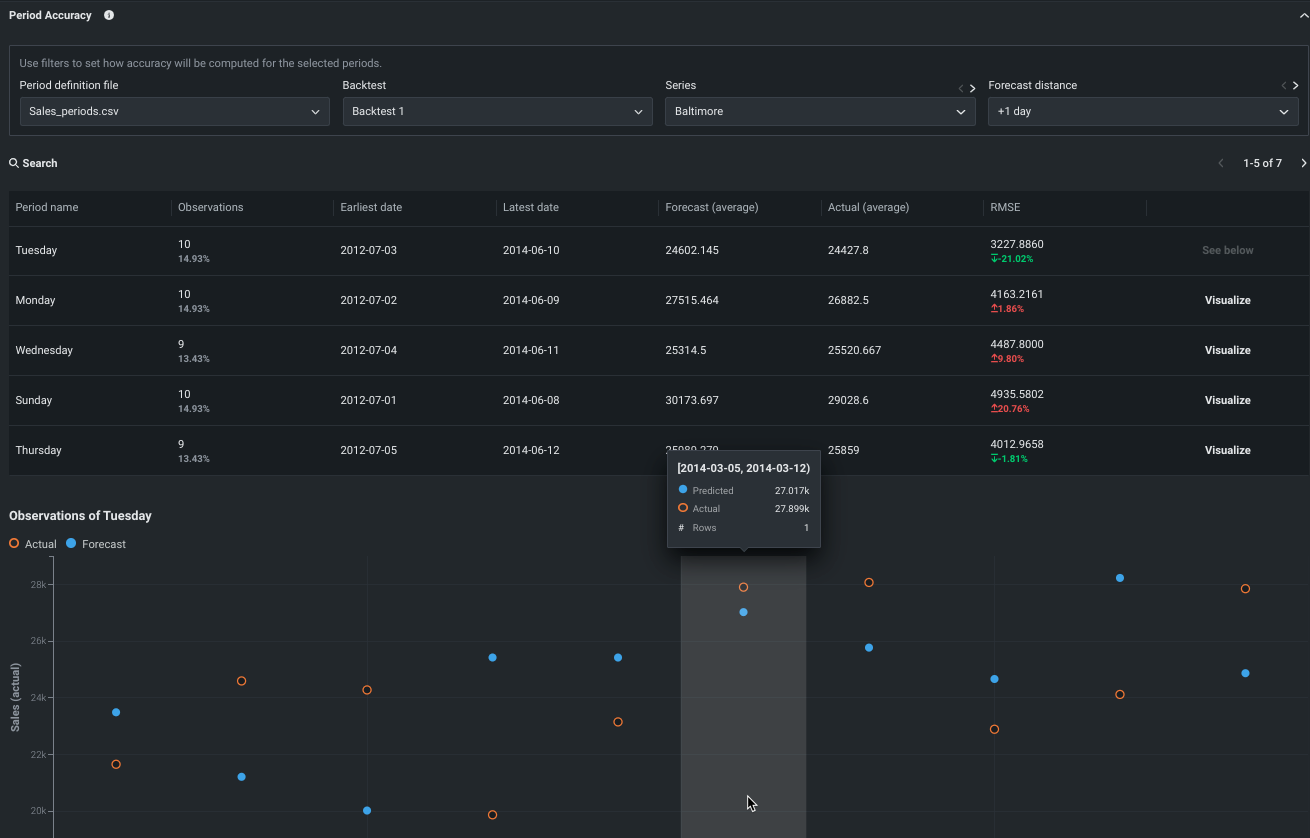

Period Accuracy now available in Workbench and DataRobot Classic

Period Accuracy is an insight that lets you define periods within your dataset and then compare their metric scores against the metric score of the model as a whole. It is now generally available for all time series projects. In DataRobot Classic, find it on the Evaluate > Period Accuracy tab; in Workbench, find it under Experiment information. The insight is also available for time-aware experiments.
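The idea behind Period Accuracy can be illustrated with a small sketch. This is not DataRobot's implementation or API; it assumes RMSE as the metric, rows given as `(date, actual, prediction)` tuples, and hypothetical helper names `rmse` and `period_accuracy`. Each user-defined period is scored on its own rows and then compared with the score over all rows.

```python
from datetime import date
from math import sqrt


def rmse(actuals, preds):
    """Root mean squared error over paired actual and predicted values."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actuals, preds)) / len(actuals))


def period_accuracy(rows, periods):
    """Score each period separately and compare it with the whole-dataset score.

    rows:    list of (date, actual, prediction) tuples (hypothetical format)
    periods: dict of period name -> inclusive (start_date, end_date)
    """
    overall = rmse([r[1] for r in rows], [r[2] for r in rows])
    report = {"overall": overall, "periods": {}}
    for name, (start, end) in periods.items():
        in_period = [r for r in rows if start <= r[0] <= end]
        if not in_period:
            continue  # skip empty periods instead of dividing by zero
        score = rmse([r[1] for r in in_period], [r[2] for r in in_period])
        # For RMSE (lower is better), a ratio above 1 means the model
        # performs worse in this period than on the dataset as a whole.
        ratio = score / overall if overall else None
        report["periods"][name] = {"rmse": score, "ratio_to_overall": ratio}
    return report


if __name__ == "__main__":
    rows = [
        (date(2023, 1, 1), 10.0, 11.0),
        (date(2023, 1, 2), 12.0, 12.0),
        (date(2023, 2, 1), 20.0, 17.0),
        (date(2023, 2, 2), 22.0, 25.0),
    ]
    periods = {
        "January": (date(2023, 1, 1), date(2023, 1, 31)),
        "February": (date(2023, 2, 1), date(2023, 2, 28)),
    }
    print(period_accuracy(rows, periods))
```

In this toy data, February's errors are larger than January's, so its ratio to the overall score is above 1, which is the kind of contrast the insight is meant to surface.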

Expanded prediction and monitoring job definition access

This release expands role-based access controls (RBAC) for prediction and monitoring jobs to align with deployment permissions. Previously, when deployments were shared between users, job definitions and batch jobs weren't shared along with the deployment. With this update, the User role gains read access, and the Owner role gains read and write access, to the prediction and monitoring job definitions associated with any deployments shared with them. For more information on the capabilities of deployment Users and Owners, review the Roles and permissions documentation. Shared job definitions appear alongside your own; however, if you don't have access to the credentials associated with a prediction Source or Destination in the AI Catalog, the connection details are shown as [redacted].

For more information, see the documentation for Shared prediction job definitions and Shared monitoring job definitions.
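The access rules described above can be summarized as a small permission table. The sketch below is a toy model for illustration only, not DataRobot's API; `Role`, `JOB_DEFINITION_ACTIONS`, `can_access_job_definition`, and `visible_connection_details` are hypothetical names.

```python
from enum import Enum


class Role(Enum):
    """Deployment roles named in the release note."""
    USER = "User"
    OWNER = "Owner"


# Hypothetical permission table: Users get read access and Owners get read and
# write access to job definitions tied to a deployment shared with them.
JOB_DEFINITION_ACTIONS = {
    Role.USER: frozenset({"read"}),
    Role.OWNER: frozenset({"read", "write"}),
}


def can_access_job_definition(role, action, deployment_shared):
    """Return True if `role` may perform `action` ("read" or "write") on the
    prediction or monitoring job definitions of a deployment.

    deployment_shared: whether the deployment has been shared with this user.
    """
    if not deployment_shared:
        return False
    return action in JOB_DEFINITION_ACTIONS.get(role, frozenset())


def visible_connection_details(details, has_credential_access):
    """Mask Source/Destination connection details when the viewer cannot
    access the associated AI Catalog credentials."""
    return details if has_credential_access else "[redacted]"
```

For example, a User on a shared deployment can read but not edit its job definitions, and sees `[redacted]` in place of connection details whose credentials they can't access.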

Preview

Materialize Workbench datasets in Google BigQuery

Now available for preview, Workbench can materialize wrangled datasets in BigQuery as well as in the Data Registry. To use this option, wrangle a BigQuery dataset in Workbench, click Publish, and select Publish to BigQuery in the Publishing Settings modal.

Note that you must establish a new connection to BigQuery to use this feature.

Preview documentation.

Feature flag(s) ON by default:

- Enable BigQuery In-Source Materialization in Workbench

- Enable Dynamic Datasets in Workbench

Azure Databricks support added to DataRobot

Support for Azure Databricks has been added to both DataRobot Classic and Workbench, allowing you to:

- Create and configure data connections.

- Add Azure Databricks datasets.

Preview documentation.

Feature flag OFF by default: Enable Databricks Driver

Workbench time-aware capabilities expanded to include time series modeling

With this deployment, DataRobot users can now use date/time partitioning to build time series experiments. Support for time series setup, modeling, and insights extends date/time partitioning, bringing forecasting capabilities to Workbench. A streamlined workflow, including a simple window settings modal with a graphical visualization, makes it easy for Workbench users to set up time series experiments.

After modeling, all time series insights are available, along with experiment summary data that provides a backtest summary and partitioning log. Additionally:

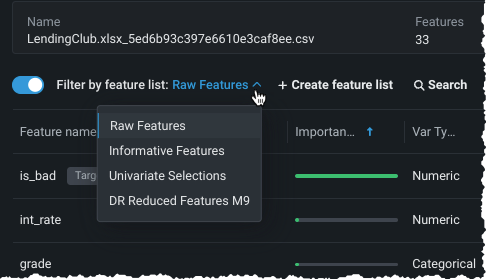

- With feature lists and dataset views, you can see the results of feature extraction and reduction.

- Because Quick mode trains only the most crucial blueprints, you can manually build more niche or long-running time series models from the blueprint repository.

Feature flags ON by default:

- Enable Date/Time Partitioning (OTV) in Workbench

- Enable Workbench for Time Series Projects

Leaderboard Data and Feature List tabs added to Workbench

This deployment adds two new tabs to the experiment information displayed on the Leaderboard:

- The Data tab provides summary analytics of the data used in the project.

- The Feature lists tab lists the feature lists built for the experiment and available for model training.

Feature flag ON by default: Enable New No-Code AI Apps Edit Mode

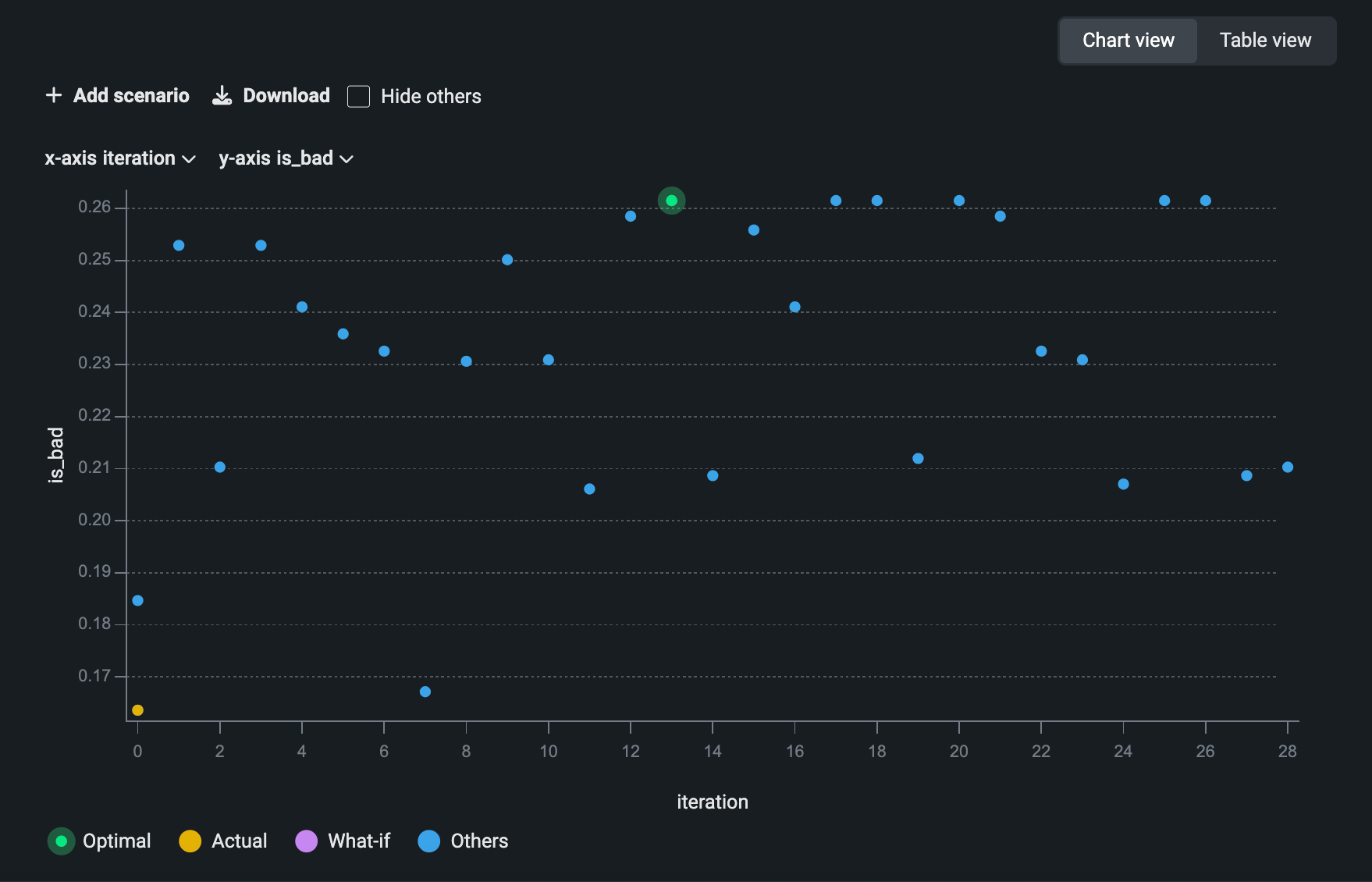

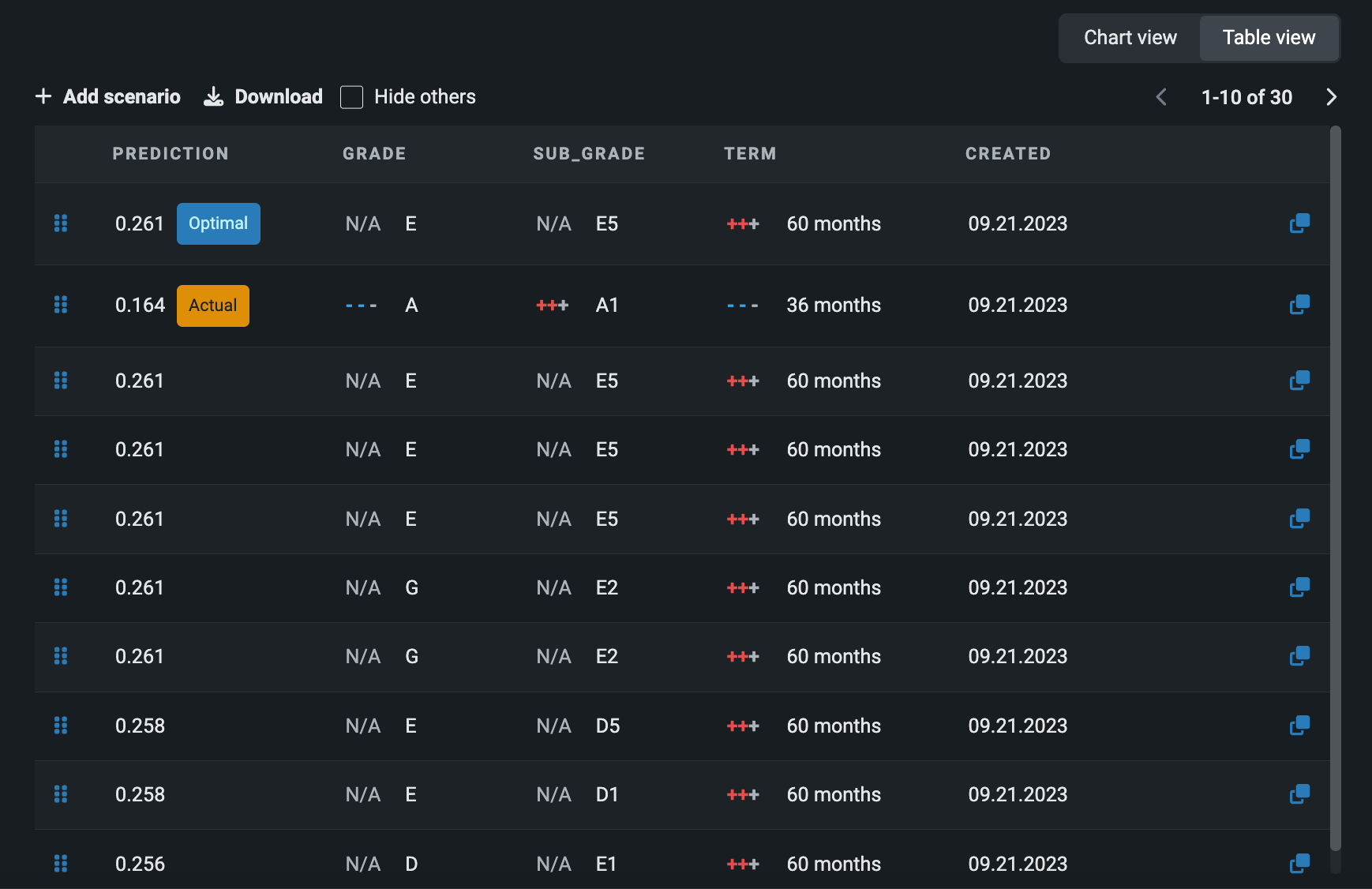

What-if and Optimizer available in the new app experience¶

In the new Workbench app experience, you can now interact with prediction results using the What-if and Optimizer widget, which provides both a scenario comparison tool and an optimizer tool.

Make sure the tool(s) you want to use are enabled in Edit mode. Then, click Present, select a prediction row in the All rows widget, and scroll down to What-if and Optimizer. From here, you can create new scenarios and view the optimized outcome.

Preview documentation.

Feature flag ON by default: Enable New No-Code AI Apps Edit Mode

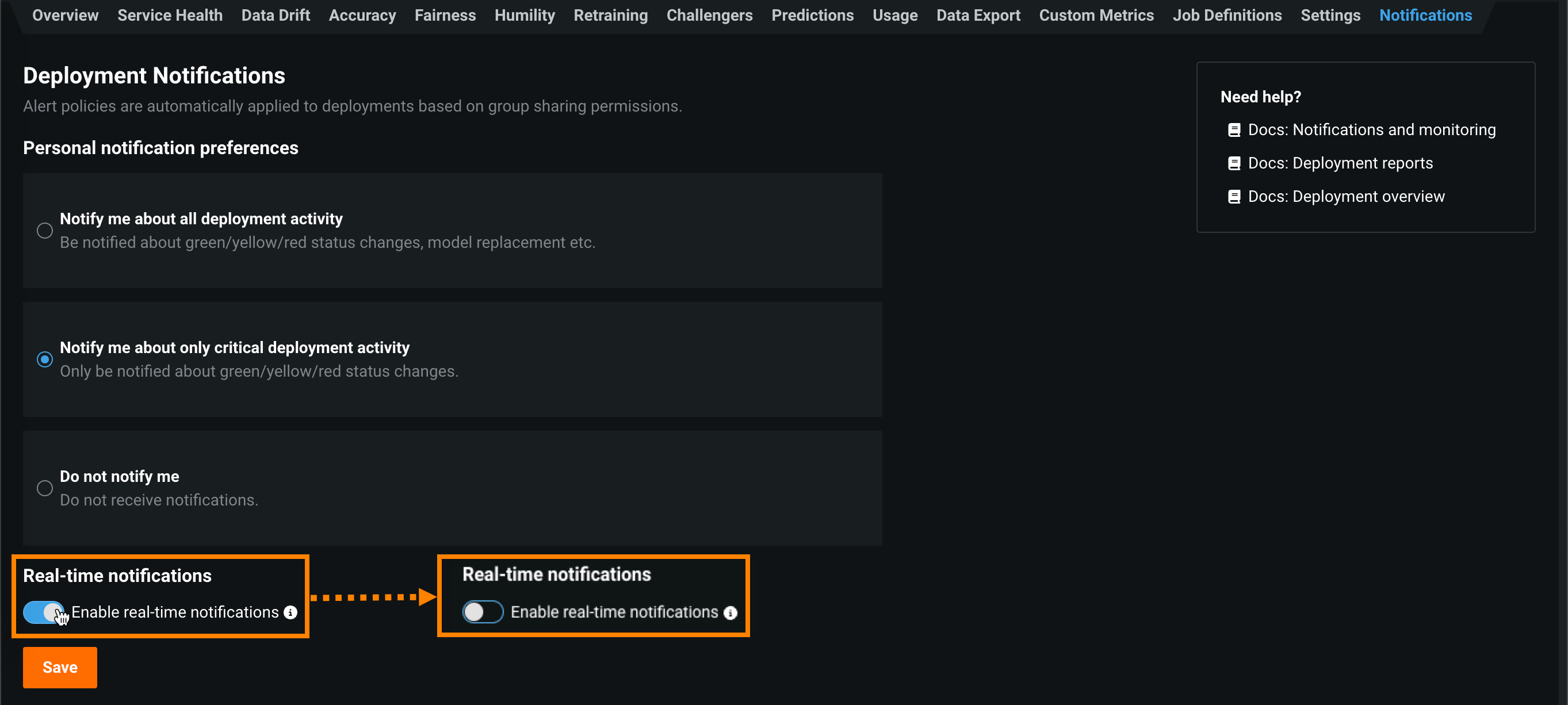

Real-time notifications for deployments¶

DataRobot provides automated monitoring with a notification system, allowing you to configure alerts that trigger when service health, data drift status, model accuracy, or fairness values deviate from your organization's accepted values. Now available for preview, real-time notifications for these status alerts let your organization respond quickly to changes in model health without waiting for scheduled health status notifications.

For more information, see the notifications documentation.

Feature flag OFF by default: Enable Real-time Notifications for Deployments
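Conceptually, a real-time notification evaluates each new status value against its accepted range and alerts immediately, instead of collecting results for a scheduled digest. The sketch below illustrates only that idea; it is not how DataRobot implements notifications, and `Threshold`, `check_statuses`, the `notify` callback, and the metric names used are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    """Accepted range for one monitored status (hypothetical structure)."""
    metric: str
    lower: float
    upper: float


def check_statuses(current_values, thresholds, notify):
    """Call `notify` immediately for every status outside its accepted range.

    current_values: dict of metric name -> latest observed value
    thresholds:     list of Threshold objects
    notify:         callback that receives an alert message string
    Returns the list of alert messages sent.
    """
    alerts = []
    for t in thresholds:
        value = current_values.get(t.metric)
        if value is None:
            continue  # nothing observed yet for this metric
        if not (t.lower <= value <= t.upper):
            message = f"{t.metric}={value} outside accepted range [{t.lower}, {t.upper}]"
            notify(message)  # sent right away rather than on a schedule
            alerts.append(message)
    return alerts
```

Calling a check like this whenever a status value updates is what distinguishes the real-time behavior from scheduled health status notifications.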

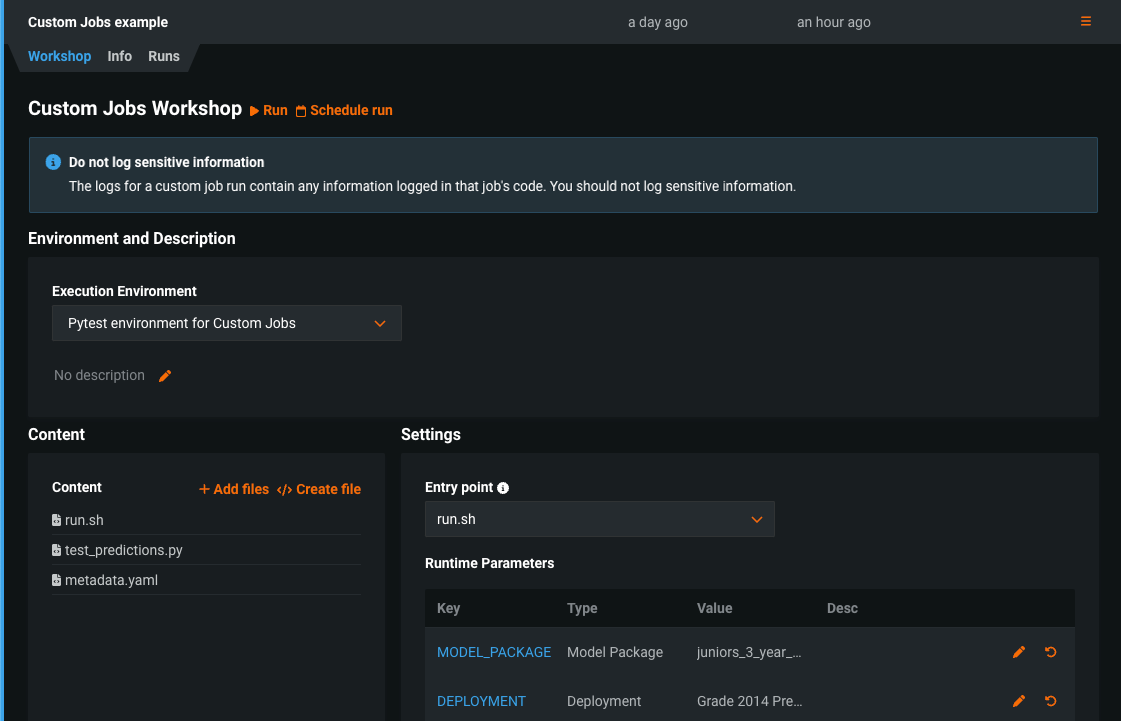

Custom jobs in the Model Registry¶

Now available as a preview feature, custom jobs in the Model Registry let you implement automation (for example, custom tests) for your models and deployments. Each job serves as an automated workload, and its exit code determines whether it passed or failed. You can run the custom jobs you create for one or more models or deployments. The automated workload you define when you assemble a custom job can make prediction requests, fetch inputs, and store outputs using DataRobot's Public API.

For more information, see the documentation.
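Because a custom job reports its result through its exit code, a custom test can be written as an ordinary function that returns 0 or 1. The following is a minimal sketch, assuming the usual convention that exit code 0 means pass and a nonzero code means fail; `run_custom_test` and the range check it performs are hypothetical, and a real job would fetch its predictions through DataRobot's Public API rather than receive them as a list.

```python
def run_custom_test(predictions, lower, upper):
    """Hypothetical custom test: pass only if every prediction lies in [lower, upper].

    Returns a process exit code: 0 for pass, 1 for fail (assumed convention).
    """
    out_of_range = [p for p in predictions if not (lower <= p <= upper)]
    if out_of_range:
        print(f"FAIL: {len(out_of_range)} prediction(s) outside [{lower}, {upper}]")
        return 1
    print("PASS: all predictions within range")
    return 0
```

In the job's entry script, you would end with something like `sys.exit(run_custom_test(predictions, 0.0, 1.0))` so that the process exit code carries the pass or fail result.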

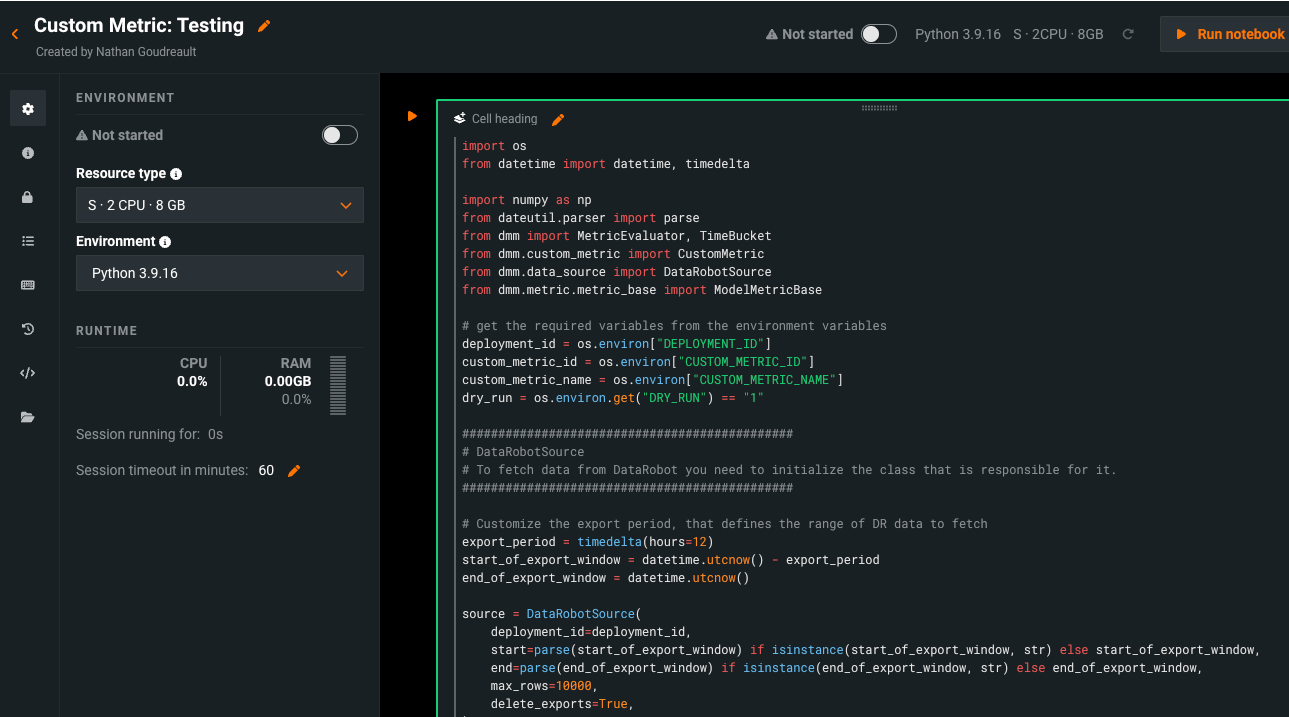

Hosted custom metrics¶

Now available as a preview feature, hosted custom metrics let you not only implement up to five of your organization's custom metrics in a deployment, but also upload and host code using DataRobot Notebooks to easily add custom metrics to other deployments. After you configure a custom metric, DataRobot loads a notebook that contains the code for the metric. The notebook contains one custom metric cell, a special type of notebook cell with Python code that defines how the metric is exported and calculated, along with code for scoring and code to populate the metric.

For more information, see the documentation.

All product and company names are trademarks™ or registered® trademarks of their respective holders. Use of them does not imply any affiliation with or endorsement by them.