Notifications tab

DataRobot provides automated monitoring with a configurable notification system that alerts you when service health, data drift status, model accuracy, or fairness values exceed the acceptable levels you define. Notifications can be delivered as in-app alerts, emails, and webhooks. They are off by default, but a deployment owner can enable them. Note that notifications only control whether emails are sent to subscribers; if notifications are disabled, DataRobot still monitors service health, data drift, accuracy, and fairness statistics. By default, notifications are sent in real time for your configured status alerts, so your organization can respond quickly to changes in model health without waiting for scheduled health status notifications. Alternatively, you can choose to send notifications on the monitoring schedule.
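
The split between monitoring and notification can be modeled in a few lines. The Python sketch below is a hypothetical illustration, not DataRobot's implementation or API: `DeploymentMonitor`, `DeliveryMode`, and the metric names are invented for this example, and it treats a value above its threshold as a breach (a simplification, since a metric such as accuracy degrades by falling). Statistics are always recorded; the notification setting only decides whether an alert is sent immediately, held for the monitoring schedule, or not sent at all.

```python
from dataclasses import dataclass, field
from enum import Enum


class DeliveryMode(Enum):
    # Hypothetical delivery options mirroring the text: off by default,
    # real time, or batched on the monitoring schedule.
    DISABLED = "disabled"
    REAL_TIME = "real_time"
    ON_SCHEDULE = "on_schedule"


@dataclass
class DeploymentMonitor:
    # Acceptable upper limit per monitored metric (hypothetical values).
    thresholds: dict[str, float]
    mode: DeliveryMode = DeliveryMode.DISABLED
    history: list[tuple[str, float]] = field(default_factory=list)
    sent: list[str] = field(default_factory=list)
    queued: list[str] = field(default_factory=list)

    def record(self, metric: str, value: float) -> None:
        # Monitoring always happens, whether or not notifications are enabled.
        self.history.append((metric, value))
        limit = self.thresholds.get(metric)
        if limit is None or value <= limit:
            return
        alert = f"{metric}={value} exceeds {limit}"
        if self.mode is DeliveryMode.REAL_TIME:
            self.sent.append(alert)
        elif self.mode is DeliveryMode.ON_SCHEDULE:
            self.queued.append(alert)
        # DISABLED: the statistic is still recorded, but no alert is produced.

    def run_scheduled_check(self) -> list[str]:
        # Deliver alerts that were held for the monitoring schedule.
        delivered, self.queued = self.queued, []
        self.sent.extend(delivered)
        return delivered


monitor = DeploymentMonitor(thresholds={"data_drift": 0.2},
                            mode=DeliveryMode.ON_SCHEDULE)
monitor.record("data_drift", 0.35)  # recorded and queued, not yet sent
monitor.run_scheduled_check()       # delivers the queued alert
```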

To choose which notifications you receive and how you receive them, open a deployment from the Deployments inventory and click the Notifications tab.

Deployment consumer notifications

A deployment consumer receives notifications only when a deployment is shared with them or when a previously shared deployment is deleted. Consumers are not notified about any other events.
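
As a sketch of this rule, the hypothetical filter below shows which events reach a deployment consumer. The role and event strings are placeholders assumed for illustration; DataRobot's actual event identifiers are not documented here.

```python
# Hypothetical event names; DataRobot's actual event identifiers may differ.
CONSUMER_EVENTS = frozenset({"deployment_shared", "deployment_deleted"})


def should_notify(role: str, event: str) -> bool:
    """Return True if a user with this deployment role is eligible for the event."""
    if role == "consumer":
        # Consumers hear only about sharing and deletion of shared deployments.
        return event in CONSUMER_EVENTS
    # Owners and users follow their configured notification settings,
    # which this sketch does not model, so treat them as eligible.
    return True
```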

Schedule deployment reports

You can also schedule deployment reports on the Notifications tab.

Configure monitoring definitions

Monitoring definitions are located on the deployment settings pages, and your control over those settings depends on your deployment role (owner or user). Both roles can configure personal notification settings; however, only deployment owners can set up the schedules and thresholds used to monitor service health, data drift, accuracy, and fairness.

Enable notification policies

To configure deployment notifications, you create notification policies, each of which combines a notification template and a notification channel. The template determines which events trigger a notification, and the channel determines which users are notified. When you create a notification policy for a deployment, you can use a policy template without changes, use a template as the basis of a new policy with modifications, or create an entirely new policy.
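
The division of labor in a policy (the trigger side selects events, the channel selects recipients) can be sketched as a small data model. All class names and event identifiers below are hypothetical and do not correspond to a DataRobot API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trigger:
    # Either a named event group or a single event (hypothetical identifiers).
    events: frozenset[str]


@dataclass(frozen=True)
class Channel:
    name: str
    recipients: tuple[str, ...]


@dataclass(frozen=True)
class NotificationPolicy:
    name: str
    trigger: Trigger
    channel: Channel

    def recipients_for(self, event: str) -> tuple[str, ...]:
        # The trigger decides whether to notify; the channel decides whom.
        return self.channel.recipients if event in self.trigger.events else ()


# An event-group trigger and a single-event trigger (hypothetical names).
DRIFT_GROUP = Trigger(frozenset({"data_drift_at_risk", "data_drift_failing"}))
SINGLE_EVENT = Trigger(frozenset({"deployment_deleted"}))
```

Keeping the trigger and channel as separate values is what lets one channel be reused across several policies.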

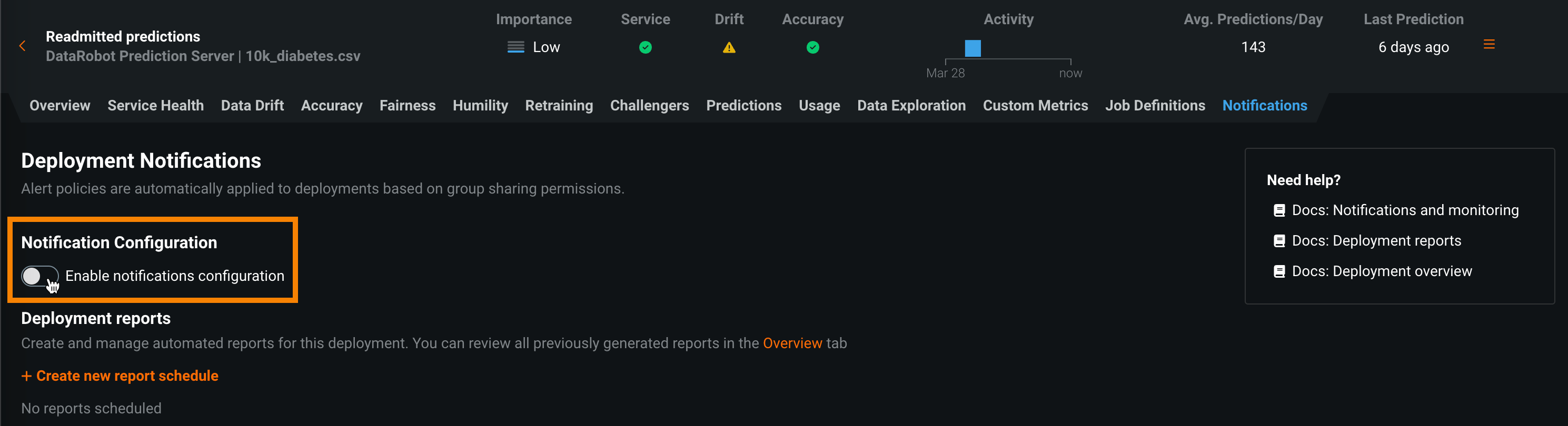

To use notification policies for a deployment, click Enable notifications configuration in the Notification configuration section.

Create notification policies

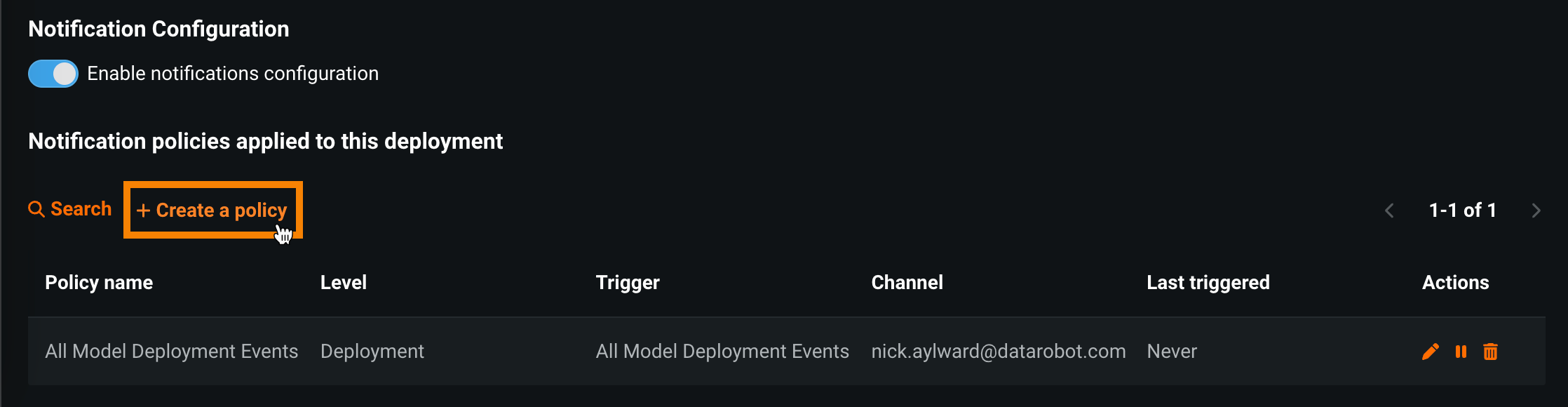

After you enable notification configuration, click + Create a policy to add a policy to the deployment:

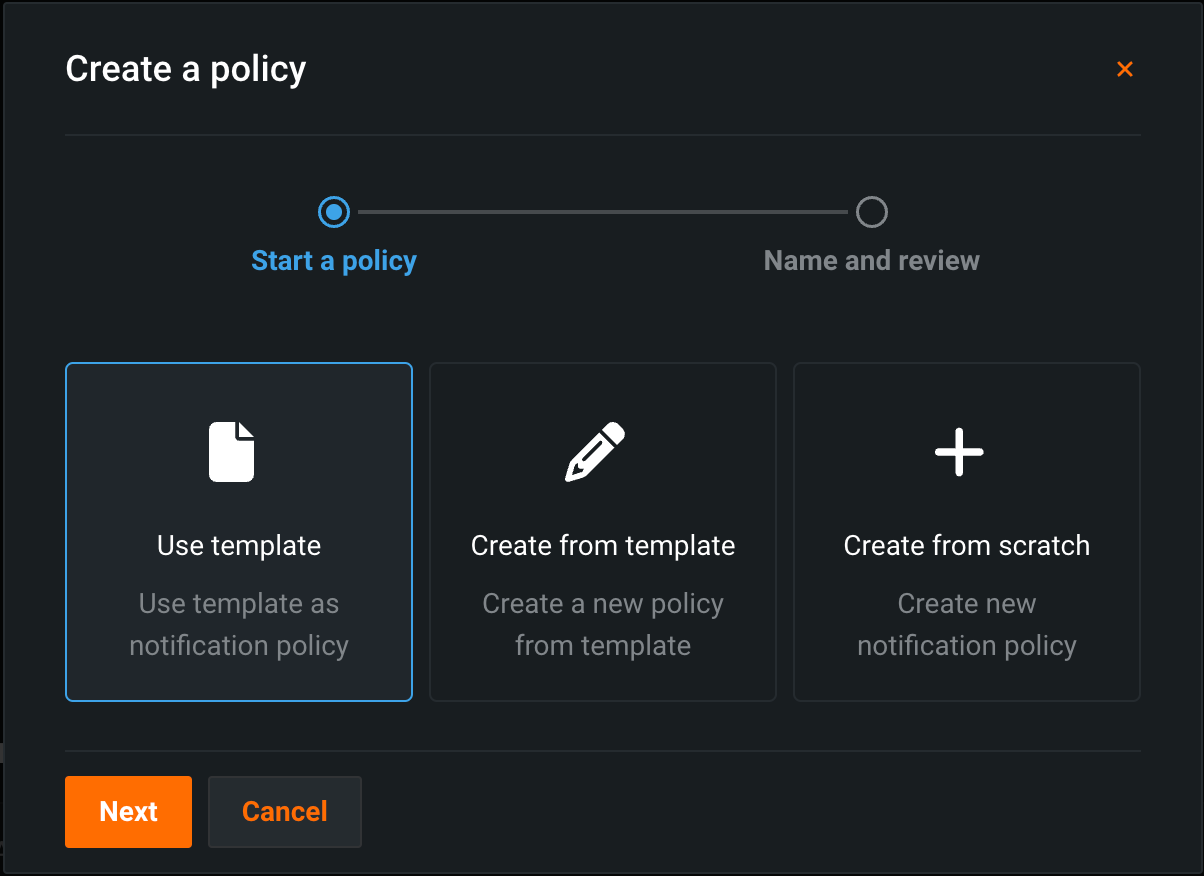

In the Create a policy dialog box, select one of the following, and then click Next:

| Option | Description |
|---|---|
| Use template | Create a policy from a template, without changes. |
| Create from template | Create a policy from a template, with changes. |
| Create from scratch | Create a new policy and optionally save it as a template. |
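
The three options differ only in how much of a template they reuse. The sketch below models them with frozen dataclasses and `dataclasses.replace`; the `Policy` and `PolicyTemplate` types, the helper functions, and the string identifiers are hypothetical stand-ins for what the dialog does, not a DataRobot API.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class PolicyTemplate:
    trigger: str  # hypothetical event or event-group identifier
    channel: str  # hypothetical channel name


@dataclass(frozen=True)
class Policy:
    name: str
    trigger: str
    channel: str


def use_template(t: PolicyTemplate, name: str) -> Policy:
    # "Use template": copy the template unchanged; only the name is new.
    return Policy(name=name, trigger=t.trigger, channel=t.channel)


def create_from_template(t: PolicyTemplate, name: str, **changes: str) -> Policy:
    # "Create from template": start from the template, then override fields.
    return replace(use_template(t, name), **changes)


def create_from_scratch(name: str, trigger: str, channel: str,
                        templates: Optional[list[PolicyTemplate]] = None) -> Policy:
    # "Create from scratch": build a new policy from explicit settings.
    policy = Policy(name=name, trigger=trigger, channel=channel)
    if templates is not None:
        # Optionally save the new settings as a reusable template.
        templates.append(PolicyTemplate(trigger=trigger, channel=channel))
    return policy
```

Because the types are frozen, deriving a policy never mutates the template it came from.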

Use template

In the Create a policy dialog box, select a policy template, and then click Next.

Enter a Policy name, then click Create policy.

Create from template

In the Create a policy dialog box, select a policy template, and then click Next:

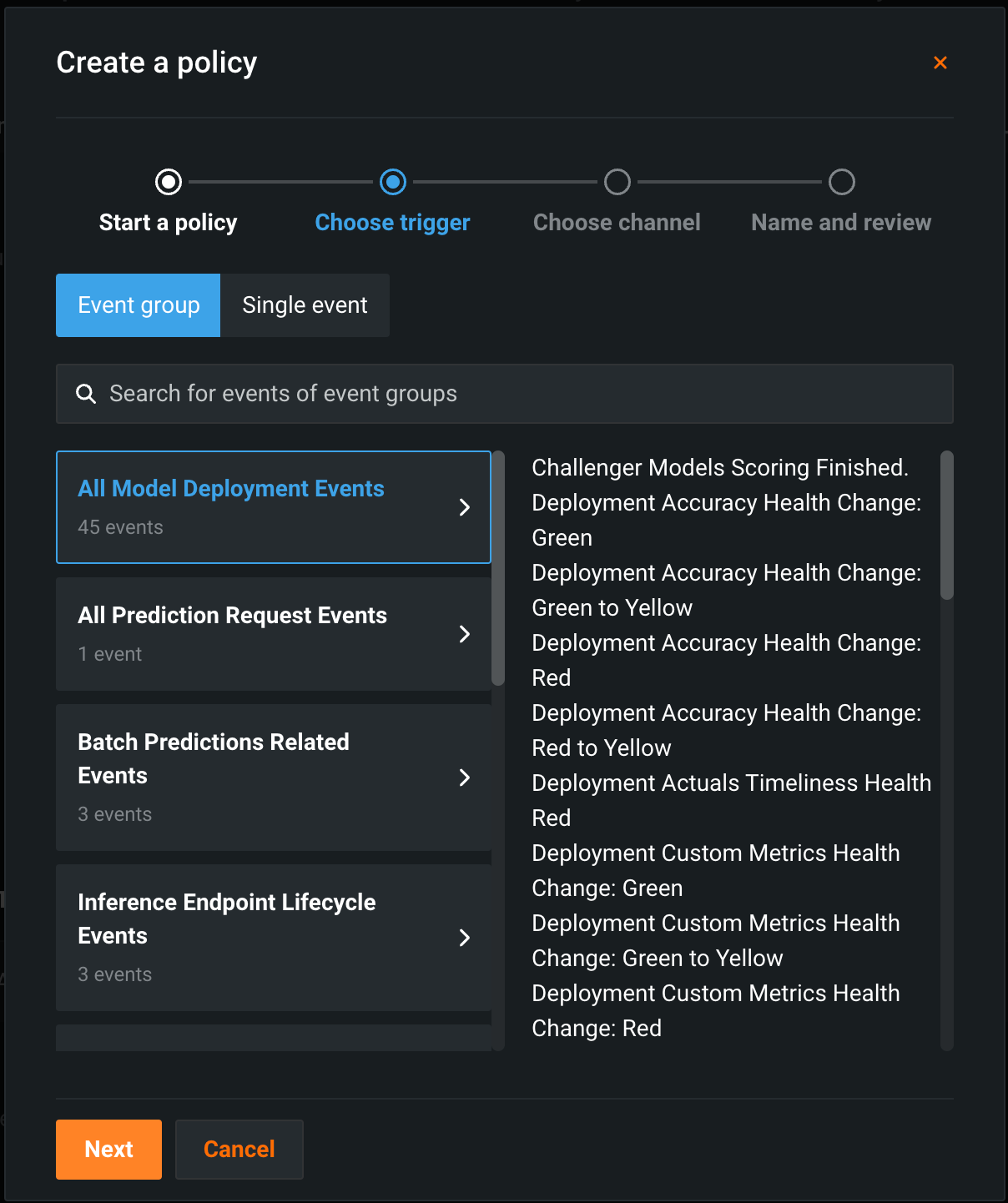

On the Choose trigger tab, select an Event group or a Single event, then click Next:

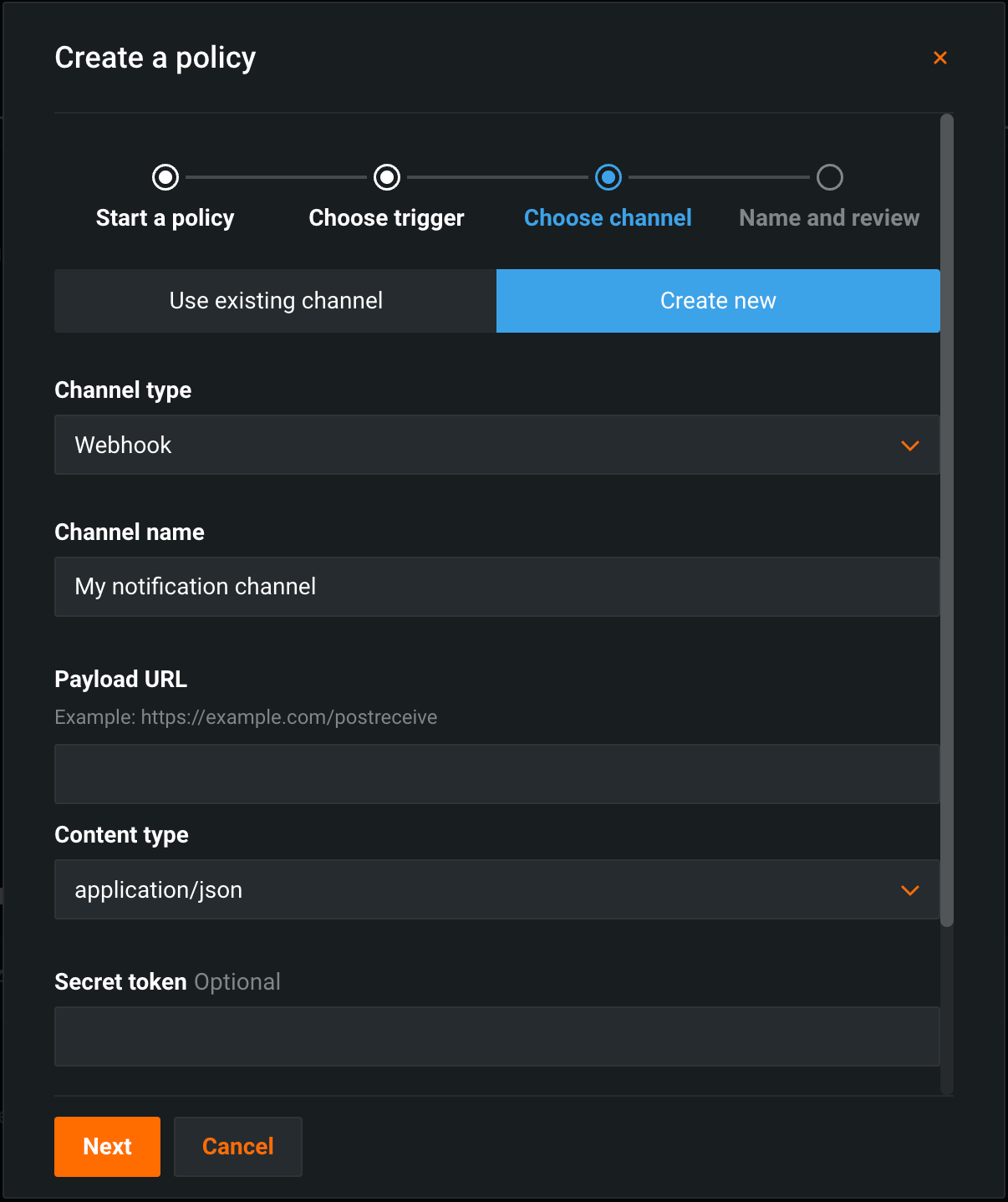

On the Choose channel tab, click Use existing channel and select a channel, or click Create new, enter a Channel name, and then configure the following fields:

| Channel type | Fields |
|---|---|
| External | |
| Webhook | |
| Slack | |
| Microsoft Teams | |
| DataRobot | |
| User | Enter one or more existing DataRobot usernames to add those users to the channel. To remove a user, in the Username list, click the remove icon. |
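
If you use a Webhook channel, you need an HTTP endpoint to receive the requests. The receiver below is a minimal standard-library sketch that assumes notifications arrive as JSON in a POST request; the payload fields, headers, and any authentication DataRobot sends are not described in this section, so the handler just decodes and prints whatever JSON object arrives. The port (8080) and the `parse_notification` and `serve` helpers are arbitrary, hypothetical choices, and a real endpoint would need to be reachable from DataRobot, typically over HTTPS.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def parse_notification(body: bytes, content_type: str) -> dict:
    """Decode a webhook body, assuming (not confirmed) a JSON object payload."""
    if not content_type.startswith("application/json"):
        raise ValueError(f"unsupported content type: {content_type!r}")
    payload = json.loads(body.decode("utf-8"))
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    return payload


class NotificationHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            payload = parse_notification(body, self.headers.get("Content-Type", ""))
        except ValueError as exc:  # also covers JSON and UTF-8 decode errors
            self.send_response(400)
            self.end_headers()
            self.wfile.write(str(exc).encode("utf-8"))
            return
        # Replace this print with your own routing (paging, ticketing, etc.).
        print("received notification:", payload)
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8080) -> None:
    # Blocks until interrupted; call it from your own entry point.
    HTTPServer(("0.0.0.0", port), NotificationHandler).serve_forever()
```

Validating the content type before decoding keeps malformed requests from reaching your routing logic; they get a 400 response instead.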

After you select or create a channel, click Next, confirm the Trigger, Channel, and Policy name, and then click Create policy or Finish and save as template.

Create from scratch

On the Choose trigger tab, select an Event group or a Single event, then click Next.

On the Choose channel tab, click Use existing channel and select a channel, or click Create new, enter a Channel name, and then configure the following fields:

| Channel type | Fields |
|---|---|
| External | |
| Webhook | |
| Slack | |
| Microsoft Teams | |
| DataRobot | |
| User | Enter one or more existing DataRobot usernames to add those users to the channel. To remove a user, in the Username list, click the remove icon. |
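
Assuming the Slack channel type is configured with a Slack incoming webhook URL, you can check that the URL works before saving the channel. Slack incoming webhooks accept a POST request whose JSON body has a `text` field and reply with `ok` on success. The sketch below builds that request with the standard library; the URL is a placeholder, the function names are hypothetical, and this is a manual check you run yourself, not how DataRobot sends its messages.

```python
import json
import urllib.request


def build_slack_test_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST request carrying a plain-text message for a Slack incoming webhook."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> str:
    # Slack replies with the body "ok" when the webhook accepts the message.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Placeholder URL: substitute the incoming webhook URL from your Slack app.
req = build_slack_test_request(
    "https://hooks.slack.com/services/XXX/YYY/ZZZ", "DataRobot channel test")
# send(req)  # uncomment to actually post the test message
```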

After you select or create a channel, click Next, confirm the Trigger, Channel, and Policy name, and then click Create policy or Finish and save as template.