Composable ML overview

Composable ML gives you full flexibility in model building, letting you apply your data science and subject matter expertise directly to the models you build. With Composable ML, you build blueprints that suit your needs using built-in tasks and custom Python or R code. You can then use a custom blueprint together with other DataRobot capabilities (MLOps, for example) to boost productivity.

Get started with Composable ML

The following resources provide more information.

View

Quickstart

- Quickstart walks you through testing and learning Composable ML.

Code examples

- Task templates

- Drop-in environments

- Compose blueprints programmatically in the Blueprint Workshop:

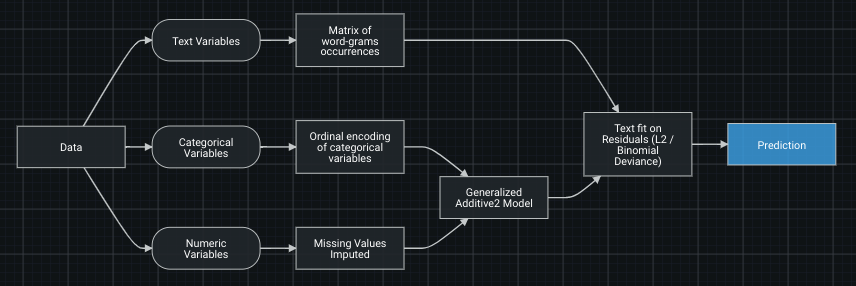

How it works

To compose a blueprint—an ML pipeline that includes both preprocessing and modeling tasks—you use some or all of these four key components:

- Task: An ML method, for example, XGBoost or one-hot encoding, that is used to define a blueprint. There are hundreds of built-in tasks available and you can also define your own using Python or R. There are two types of tasks, Estimator and Transform, which are described in detail in the blueprint editor documentation.

- Environment: A Docker container used to run a custom task.

- Model: A trained ML pipeline capable of scoring new data.

- DataRobot User Models (DRUM) CLI: A command line tool that helps to assemble, test, and run custom tasks. If you are using custom tasks, it is recommended that you install DRUM on your machine as a Python package so that you can quickly test tasks locally before uploading them into DataRobot.
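As a rough illustration of how these components relate, the sketch below models a blueprint in plain Python as a chain of transform tasks followed by one estimator task. It is a conceptual aid only: the class names (`MeanImputer`, `NearestNeighborRegressor`, `Blueprint`) and their methods are hypothetical and are not DataRobot or DRUM APIs. Real custom tasks follow the layout shown in the task templates.

```python
"""Conceptual sketch only: every class here is hypothetical, not a DataRobot API."""
from statistics import mean


class MeanImputer:
    """Transform task: learns column means, then fills missing values (None)."""

    def fit(self, X, y):
        columns = list(zip(*X))
        self.means_ = [mean(v for v in col if v is not None) for col in columns]
        return self

    def transform(self, X):
        return [
            [self.means_[j] if v is None else v for j, v in enumerate(row)]
            for row in X
        ]


class NearestNeighborRegressor:
    """Estimator task: predicts the target of the closest training row."""

    def fit(self, X, y):
        self.X_ = [list(row) for row in X]
        self.y_ = list(y)
        return self

    def predict(self, X):
        predictions = []
        for row in X:
            # Squared Euclidean distance to every training row.
            dists = [sum((a - b) ** 2 for a, b in zip(row, seen)) for seen in self.X_]
            predictions.append(self.y_[dists.index(min(dists))])
        return predictions


class Blueprint:
    """Pipeline of preprocessing (transform) tasks ending in one estimator task."""

    def __init__(self, transforms, estimator):
        self.transforms = list(transforms)
        self.estimator = estimator

    def fit(self, X, y):
        for task in self.transforms:
            X = task.fit(X, y).transform(X)
        self.estimator.fit(X, y)
        return self  # once fitted, the blueprint acts as a "model" that can score data

    def predict(self, X):
        for task in self.transforms:
            X = task.transform(X)
        return self.estimator.predict(X)


train_X = [[1.0, None], [2.0, 10.0], [3.0, 30.0]]
train_y = [5.0, 6.0, 9.0]

model = Blueprint([MeanImputer()], NearestNeighborRegressor()).fit(train_X, train_y)
predictions = model.predict([[2.9, None]])
print(predictions)
```

The split mirrors the two task types described above: a transform task changes the data passed downstream, while an estimator task produces predictions. The fitted pipeline as a whole corresponds to a model. The environment and DRUM components have no counterpart in this sketch, since they concern where and how a custom task runs rather than its logic.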

Why use Composable ML?

Some of the key benefits of bringing training code into DataRobot include:

Flexibility: Use any method or algorithm for modeling and preprocessing.

- Use Python and/or R to define modeling logic.

- Stitch Python and R tasks together in a single blueprint—DataRobot will handle the data conversion.

- Install any dependency and, if required, bring your own Docker container.

Productivity: Instant integration with built-in components helps to streamline your end-to-end flow. Once a blueprint is trained on DataRobot's infrastructure, you get instant access to the model Leaderboard, MLOps, compliance documentation, model insights, Feature Discovery, and more.

Collaboration: With blueprint and task re-use, organizations can experience true modeling collaboration:

- Experts can build custom tasks and blueprints; users across the organization can easily re-use those creations in a few clicks, without needing to read the code.

- Citizen data scientists can share models with data science experts, who can then further experiment and enhance them.

Use cases

Some things to try:

- Experiment with preprocessing and estimators to incorporate business and data science knowledge.

- Remove certain preprocessing steps to comply with regulatory/compliance requirements.

- Train and deploy models using domain-specific data: IP, chemical formulas, etc.

- Create a library of state-of-the-art algorithms for specific use cases to easily leverage it across the organization (data scientists build custom ML algorithms and share them with business analysts, who can then use them without coding).

- Compare your existing ML models to AutoML in DataRobot to find a better model or perhaps learn ways to improve your own model.