Data prep for time series¶

When you start a time series project, data quality detection evaluates whether the time step is irregular. Irregular time steps can result in significant gaps in some series and preclude the use of seasonal differencing and cross-series features that can improve accuracy. To avoid the inaccurate rolling statistics these gaps can cause, you can:

- Let DataRobot use row-based partitioning.

- Fix the gaps with the time series data prep tool by using duration-based partitioning.
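As a rough illustration of what an irregular time step looks like, the sketch below computes the median time delta between consecutive rows and lists the gaps that exceed it. It is not DataRobot's detection logic; the function name `time_step_report` and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median


def time_step_report(timestamps):
    """Return (median step, gaps longer than the step, irregular flag)."""
    ordered = sorted(timestamps)
    pairs = list(zip(ordered, ordered[1:]))
    deltas = [later - earlier for earlier, later in pairs]
    # Assumption: the median delta is taken as the representative time step.
    step = timedelta(seconds=median(d.total_seconds() for d in deltas))
    gaps = [(earlier, later) for earlier, later in pairs if later - earlier > step]
    irregular = any(delta != step for delta in deltas)
    return step, gaps, irregular


# Hypothetical daily series with January 4 and 5 missing.
stamps = [datetime(2024, 1, day) for day in (1, 2, 3, 6, 7)]
step, gaps, irregular = time_step_report(stamps)
print(step, irregular)
for earlier, later in gaps:
    print("gap between", earlier.date(), "and", later.date())
```

A series like this one would be a candidate for either row-based partitioning or gap fixing with the data prep tool.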

Generally speaking, the data prep tool first aggregates the dataset to the selected time step, and, if there are still missing rows, imputes the target value. It allows you to choose aggregation methods for numeric, categorical, and text values. You can also use it to explore modeling at different time scales. The resulting dataset is then published to the AI Catalog.
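The aggregate-then-impute flow can be sketched in plain Python. This is a simplified illustration, not the Spark SQL that the tool generates: it assumes a daily time step and a single series, and the function name `prep_series` and the sample rows are hypothetical. The two modes mirror the target pairings described under Set manual options (mean with most recent value, or sum with zero).

```python
from datetime import date, timedelta


def prep_series(rows, start, end, method="mean"):
    """Aggregate (day, value) rows to a daily step, then impute missing days.

    Returns a list of (day, target, aggregated_row_count) tuples.
    """
    buckets = {}
    for day, value in rows:
        buckets.setdefault(day, []).append(value)

    prepared = []
    last_value = None
    day = start
    while day <= end:
        values = buckets.get(day, [])
        if values:
            # Aggregate all input rows that fall in this time step.
            target = sum(values) if method == "sum" else sum(values) / len(values)
        elif method == "sum":
            target = 0  # sum pairs with zero imputation
        else:
            target = last_value  # mean pairs with the most recent value
        prepared.append((day, target, len(values)))
        last_value = target
        day += timedelta(days=1)
    return prepared


# Hypothetical input: two rows on January 1, no row on January 3.
rows = [
    (date(2024, 1, 1), 10),
    (date(2024, 1, 1), 20),
    (date(2024, 1, 2), 30),
    (date(2024, 1, 4), 50),
]
mean_rows = prep_series(rows, date(2024, 1, 1), date(2024, 1, 4), "mean")
sum_rows = prep_series(rows, date(2024, 1, 1), date(2024, 1, 4), "sum")
for row in mean_rows:
    print(row)
```

The third element of each tuple plays the role of a row count per time step, so an imputed day carries a count of 0.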

Access the data prep tool¶

Access the data prep tool from the Start screen in a project or directly from the AI Catalog.

The method to modify a dataset in the AI Catalog is the same regardless of whether you start from the Start screen or from the catalog.

Access data prep from a project¶

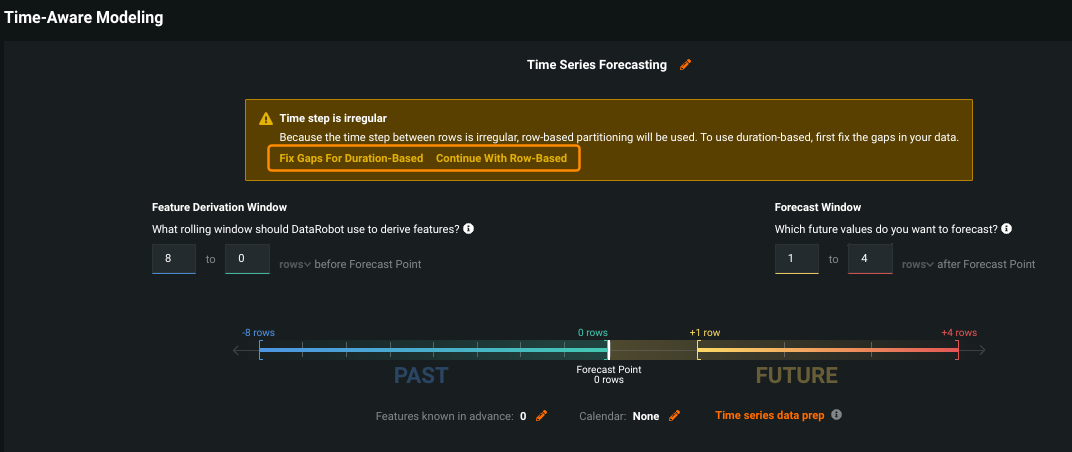

From the Start screen, the data prep tool becomes available after initial setup (target, date/time feature, forecasting or nowcasting, and series ID, if applicable). Click Fix Gaps For Duration-Based to use the tool when DataRobot detects that the time steps are irregular:

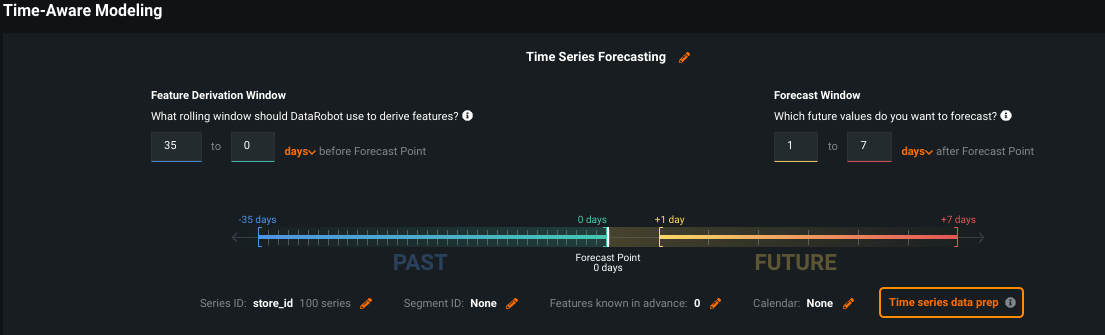

Or, even if the time steps are regular, use it to apply dataset customizations:

Click Time series data prep.

Warning

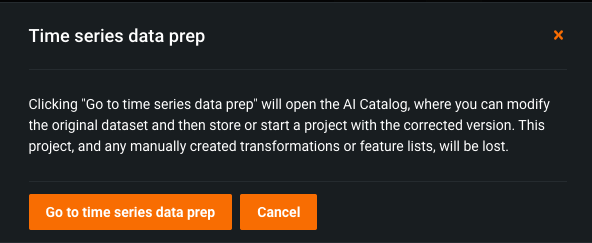

A message displays to warn you that the current project and any manually created feature transformations or feature lists in the project are lost when you access the time series data prep tool from the project:

Click Go to time series data prep to open and modify the dataset in the AI Catalog. Click Cancel to continue working in the current project.

Access data prep from the AI Catalog¶

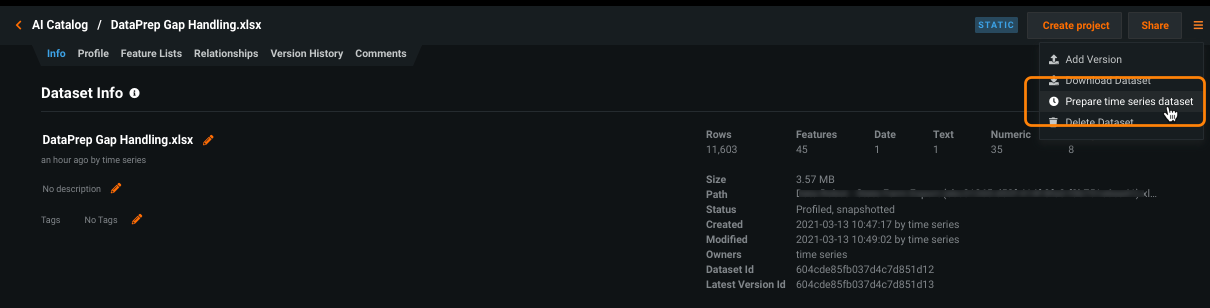

In the AI Catalog, open the dataset from the inventory and, from the menu, select Prepare time series dataset:

For the Prepare time series dataset option to be enabled for a dataset in the AI Catalog, you must have permission to modify it. Additionally, the dataset must:

- Have a status of static or Spark.

- Have at least one date/time feature.

- Have at least one numeric feature.

Modify a dataset¶

Use the following mechanisms to modify a dataset using the time series data prep tool:

- Set manual options using dropdowns and selectors to generate code that sets the aggregation and imputation methods.

- (Optional) Modify the Spark SQL query generated from the manual settings. (To instead create a dataset from a blank Spark SQL query, use the AI Catalog's Prepare data with Spark SQL functionality.)

Set manual options¶

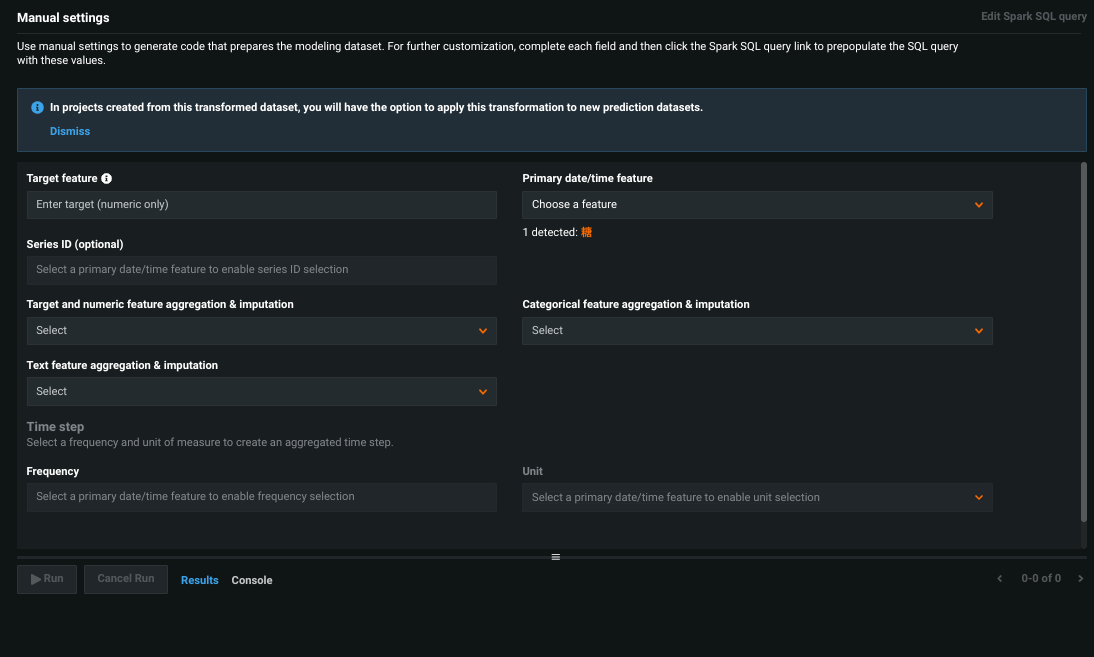

Once you open time series data prep, the Manual settings page displays.

Complete the fields that will be used as the basis for the imputation and aggregation that DataRobot computes. You cannot save the query or edit it in Spark SQL until all required fields are complete. (See additional information on imputation, below.)

| Field | Description | Required? |
|---|---|---|
| Target feature | Numeric column in the dataset to predict. | Yes |
| Primary date/time feature | Time feature used as the basis for partitioning. Use the dropdown or select from the identified features. | Yes |
| Series ID | Column containing the series identifier, which allows DataRobot to process each series in the dataset as a separate time series. | No |
| Series start date (only available once series ID is set) | Basis for the series start date, either the earliest date for each series (per series) or the earliest date found for any series (global). | Defaults to per-series |
| Series end date (only available once series ID is set) | Basis for the series end date, either the last entry date for each series (per series) or the latest date found for any series (global). | Defaults to per-series |
| Target and numeric feature aggregation & imputation | Aggregate the target using either mean & most recent or sum & zero. In other words, the time step's aggregation is created using either the sum or the mean of the values. If there are still missing target values after aggregating, those values are imputed with zero (if sum) or the most recent value (if mean). | Yes |
| Categorical feature aggregation & imputation | Aggregate categorical features using the most frequent value or the last value within the aggregation time step. Imputation only applies to features that are constant within a series (for example, the cross-series groupby column), which are imputed so that they remain constant within the series. | Yes |
| Text feature aggregation & imputation (only available if text features are present) | Choose ignore to skip handling of text features, or aggregate by one of: most frequent text value, last text value, concatenate all text values, total text length, or mean text length. | Yes |
| Time Step: The components (frequency and unit) that make up the detected median time delta between rows in the new dataset. For example, 15 (frequency) days (unit). | | |
| Frequency | Number of (time) units that comprise the time step. | Defaults to the detected frequency |
| Unit | Time unit (seconds, days, months, etc.) that makes up the time step, selected from the dropdown. | Defaults to the detected unit |
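The categorical and text aggregation options in the table can be sketched as small functions that reduce all values falling within one time step to a single value. This is an illustration only: the function names, the method keywords, the space used as a concatenation separator, and the tie-breaking behavior are assumptions, not the tool's documented behavior.

```python
from collections import Counter


def aggregate_categorical(values, method="most_frequent"):
    """Reduce the categorical values within one time step to a single value."""
    if method == "last":
        return values[-1]
    # Assumption: ties resolve to the value seen first.
    return Counter(values).most_common(1)[0][0]


def aggregate_text(values, method):
    """Reduce the text values within one time step using the chosen option."""
    if method == "most_frequent":
        return Counter(values).most_common(1)[0][0]
    if method == "last":
        return values[-1]
    if method == "concatenate":
        return " ".join(values)  # Assumption: a space separator.
    if method == "total_length":
        return sum(len(v) for v in values)
    if method == "mean_length":
        return sum(len(v) for v in values) / len(values)
    raise ValueError(f"unknown method: {method}")


# Hypothetical values that fall within the same time step.
promo = ["sale", "sale", "none"]
notes = ["late", "ok", "ok"]
print(aggregate_categorical(promo), aggregate_categorical(promo, "last"))
print(aggregate_text(notes, "concatenate"), aggregate_text(notes, "total_length"))
```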

Once all required fields are complete, three options become available:

-

Click Run to preview the first 10,000 results of the query (the resulting dataset).

Note

The preview can fail to execute if the output is too large, instead returning an alert in the console. You can still save the dataset to the AI catalog, however.

-

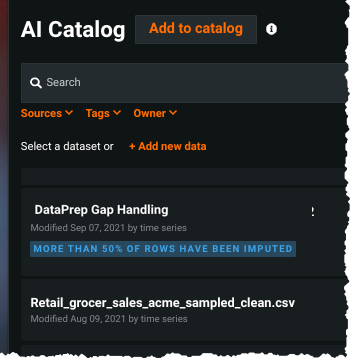

Click Save to create a new Spark SQL dataset in the AI Catalog. DataRobot opens the Info tab for that dataset; the dataset is available to be used to create a new project or any other options available for a Spark SQL dataset in the AI Catalog. If the dataset has greater than 50% or more imputed rows, DataRobot provides a warning message.

-

Click Edit Spark SQL query to open the Spark SQL editor and modify the initial query.

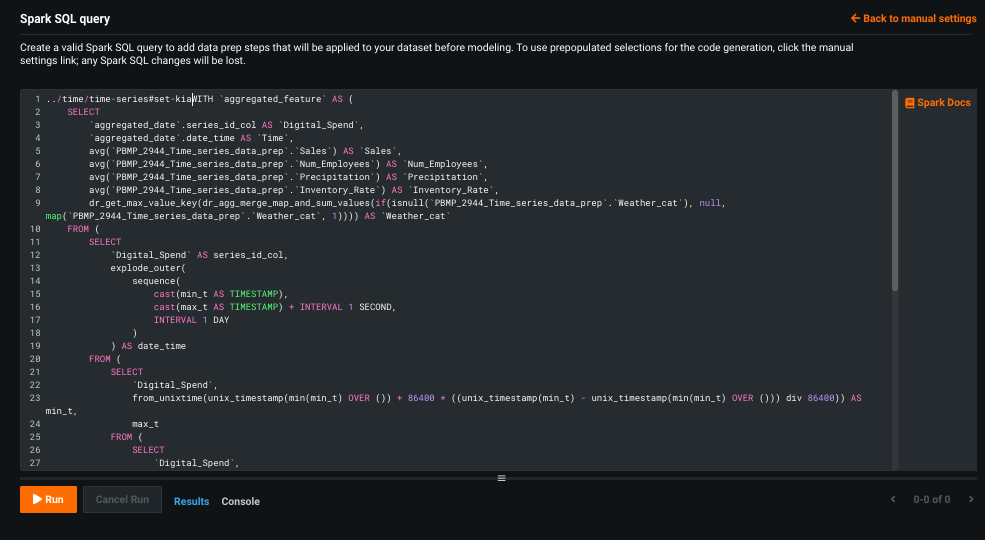

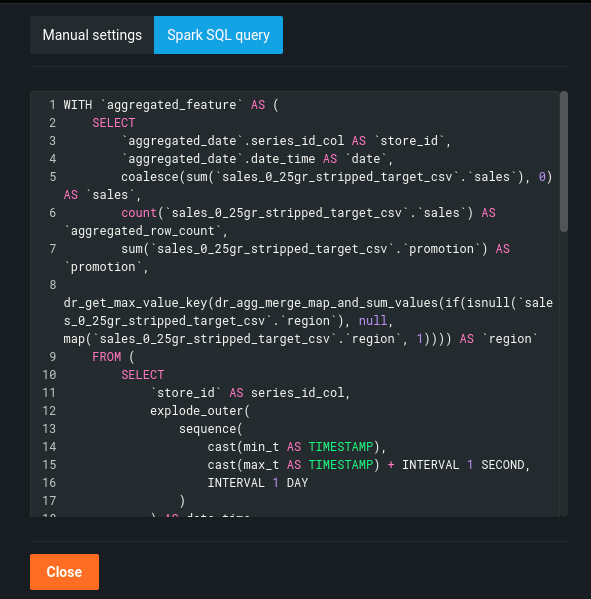

Edit the Spark SQL query¶

When you complete the Manual settings and click Edit Spark SQL query, DataRobot populates the edit window with an initial query based on the manual settings. The script is customizable, just like any other Spark SQL query, allowing you to create a new dataset or a new version of the existing dataset.

When you have finished making changes, click Run to preview the results. If satisfied, click Save to add the new dataset to the AI Catalog. Or, click Back to manual settings to return to the dropdown-based entry. Because switching back to Manual settings from the Spark SQL query configuration discards all Spark SQL dataset preparation, you can use it as a method of undoing modifications. If more than 50% of the rows in the dataset are imputed, DataRobot provides a warning message.

Note

If you update a query and then try to save it as a new AI Catalog Spark SQL item, you will not be able to use it for predictions. DataRobot provides a warning message in this case. You can choose to save the updated query, save with the initial query, or close the window without taking action. If you save the updated query, the dataset is saved as a standard Spark dataset.

Imputing values¶

Keep in mind these imputation considerations:

- Known in advance: Because the time series data prep tool imputes target values, there is a risk of target leakage. This is due to a correlation between the imputation of target and feature values when features are known in advance (KA). All KA features are checked for imputation leakage and, if leakage is detected, removed from KA before running time series feature derivation.

- Numeric features in a series: When numeric features are constant within a series, handling them with sum aggregation can cause issues. For example, if a date in the output dataset aggregates multiple input rows, the summed result may make the numeric column ineligible to be a cross-series groupby column. If your project requires that the value remain constant within the series instead of being aggregated, convert the numeric feature to categorical prior to running the data prep tool.
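The numeric-feature issue is easy to reproduce with a small example. In the sketch below, a hypothetical `store_size` column is constant within the series, but two input rows share the same date, so sum aggregation doubles the value for that date. Treating the column as categorical (here, keeping the last value as a string) preserves the constant. The column names and the helper `aggregate_store_size` are illustrative assumptions.

```python
# Hypothetical input rows: (series_id, day, sales, store_size)
rows = [
    ("store_a", "2024-01-01", 5, 100),
    ("store_a", "2024-01-01", 7, 100),
    ("store_a", "2024-01-02", 4, 100),
]


def aggregate_store_size(rows, as_categorical):
    """Aggregate store_size per day, either summed or handled as a category."""
    per_day = {}
    for _, day, _, size in rows:
        per_day.setdefault(day, []).append(size)
    if as_categorical:
        # Categorical handling keeps one representative value (the last one).
        return {day: str(sizes[-1]) for day, sizes in per_day.items()}
    return {day: sum(sizes) for day, sizes in per_day.items()}


summed = aggregate_store_size(rows, as_categorical=False)
kept = aggregate_store_size(rows, as_categorical=True)
print(summed)  # store_size is no longer constant within the series
print(kept)    # store_size stays constant within the series
```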

Feature imputation¶

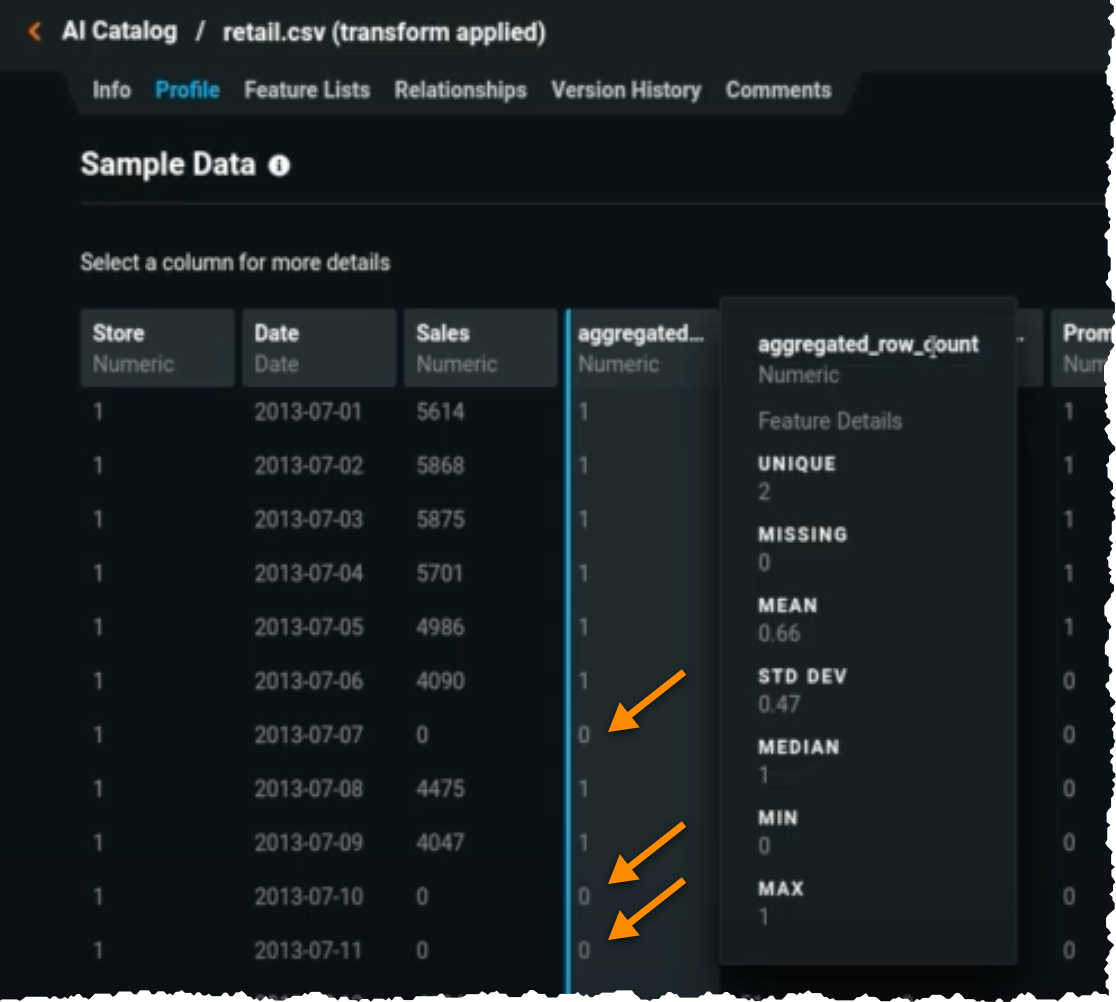

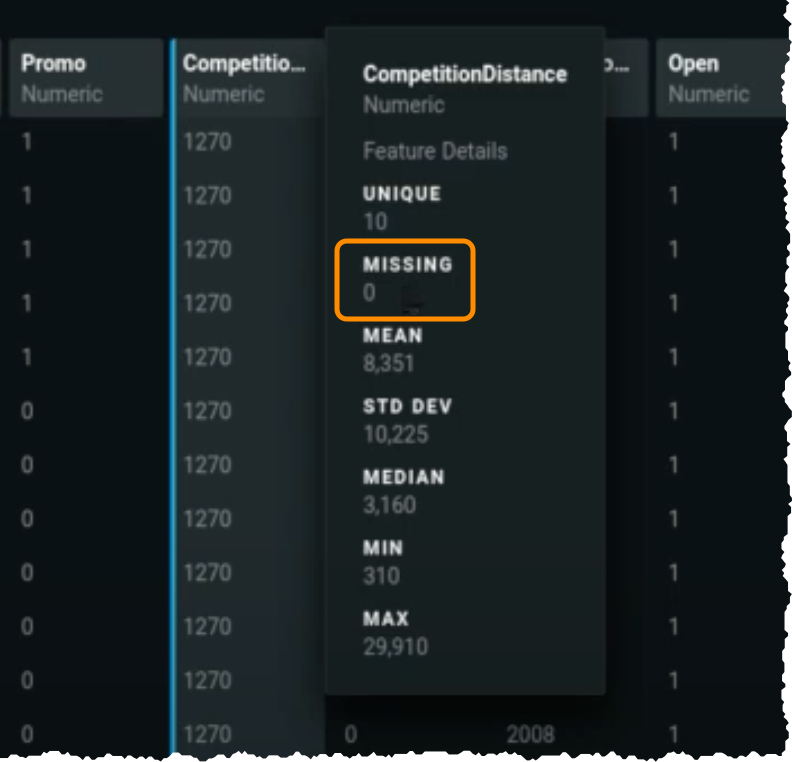

It is best practice to review the dataset before using it for training or predictions to ensure that changes will not impact accuracy. To do this, select the "new" dataset in the AI Catalog and open the Profile tab. A new column will be present—aggregated_row_count. Scroll through the column values; a 0 in a row indicates the value was imputed.

Notice that other, non-target features also have no missing values (with the possible exception of leading values at the start of each series where there is no value to forward fill). Feature imputation uses forward filling to enable imputation for all features (target and others) when applying time series data prep.
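As a minimal sketch of the review step, the code below forward fills a feature column and uses an `aggregated_row_count` column to compute the share of imputed rows. The sample values and the helper names `forward_fill` and `imputed_share` are hypothetical, and the 0.5 comparison only mirrors the 50% warning threshold mentioned in this document.

```python
def forward_fill(values):
    """Replace each missing value (None) with the most recent known value."""
    filled, last = [], None
    for value in values:
        if value is not None:
            last = value
        filled.append(last)  # Leading gaps stay None: nothing to fill from.
    return filled


def imputed_share(aggregated_row_count):
    """Fraction of rows whose aggregated_row_count is 0 (imputed rows)."""
    imputed = sum(1 for count in aggregated_row_count if count == 0)
    return imputed / len(aggregated_row_count)


# Hypothetical prepared series: a price feature and the row counts per step.
price = [None, 9.5, None, None, 10.0]
aggregated_row_count = [0, 1, 0, 0, 2]

filled_price = forward_fill(price)
share = imputed_share(aggregated_row_count)
print(filled_price)
print(f"imputed share: {share:.0%}", "(above 50%)" if share > 0.5 else "")
```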

Imputation warning¶

When changes made with the data prep tool result in more than 50% of target rows being imputed, DataRobot alerts you with both:

Build models¶

Once the dataset is prepped, you can use it to create a project. Notice that when you upload the new dataset from the AI Catalog, after EDA1 completes, the warning indicating irregular time steps is gone and the forecast window setting shows duration, not rows.

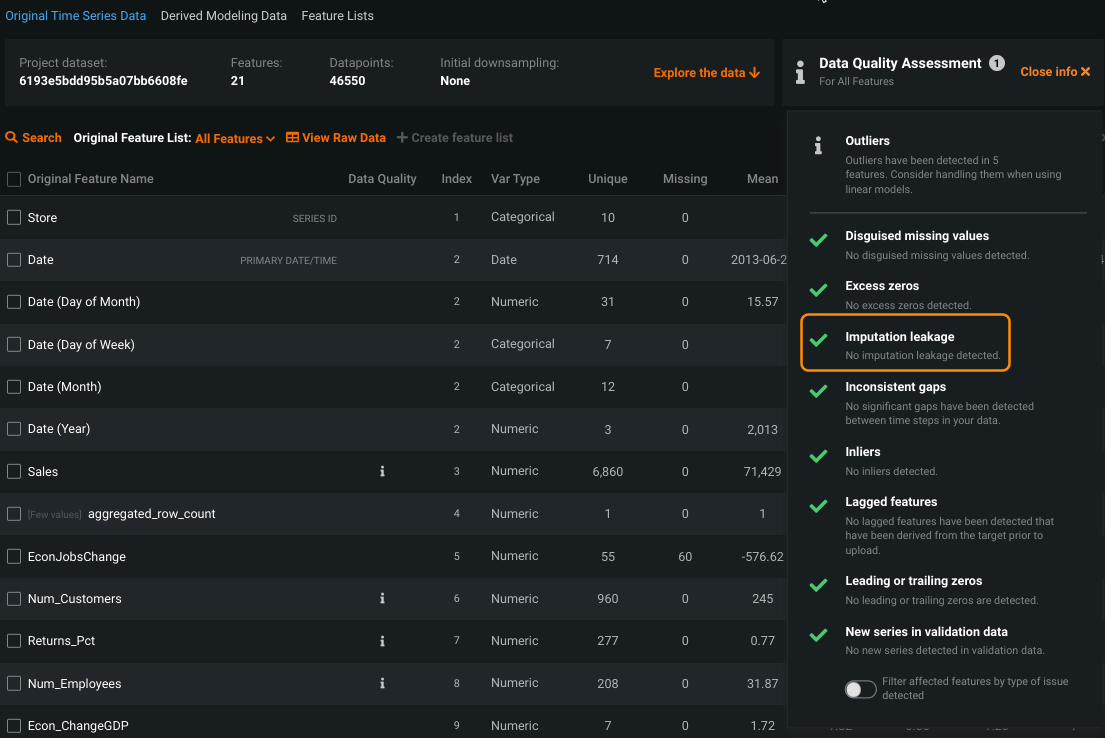

To ensure that there is no target leakage from known in advance features due to imputation during data prep, DataRobot runs an imputation leakage check. The check is run during EDA2 and is surfaced as part of the data quality assessment.

The check looks at the KA features to see if they have leaked the imputed rows. It is similar to the target leakage check but instead uses is_imputed as the target. If leakage is found for a feature, that feature's known in advance status is removed and the project proceeds.
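To make the idea of using is_imputed as the target concrete, here is a toy heuristic, not DataRobot's actual check, whose scoring method and threshold are not described in this document. It measures how much better a KA feature predicts is_imputed than always guessing the majority class, and drops the known in advance status when the gain exceeds a hypothetical threshold. The feature names, the function `leakage_score`, and `THRESHOLD` are all assumptions.

```python
from collections import Counter, defaultdict


def leakage_score(feature_values, is_imputed):
    """Accuracy gain from predicting is_imputed per feature value
    compared with always predicting the majority class."""
    n = len(is_imputed)
    baseline = Counter(is_imputed).most_common(1)[0][1] / n
    by_value = defaultdict(Counter)
    for value, flag in zip(feature_values, is_imputed):
        by_value[value][flag] += 1
    informed = sum(c.most_common(1)[0][1] for c in by_value.values()) / n
    return informed - baseline


# Hypothetical prepared rows.
is_imputed = [0, 0, 1, 1, 0, 1, 0, 0]
ka_features = {
    "store_closed": [0, 0, 1, 1, 0, 1, 0, 0],  # mirrors is_imputed exactly
    "holiday": [0, 1, 0, 1, 0, 1, 0, 1],       # unrelated to is_imputed
}

THRESHOLD = 0.2  # Hypothetical cutoff, for illustration only.
scores = {name: leakage_score(vals, is_imputed) for name, vals in ka_features.items()}
still_known_in_advance = [name for name, s in scores.items() if s < THRESHOLD]
print(scores)
print(still_known_in_advance)
```

In this toy data, `store_closed` reveals which rows were imputed and would lose its known in advance status, while `holiday` would keep it.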

Make predictions¶

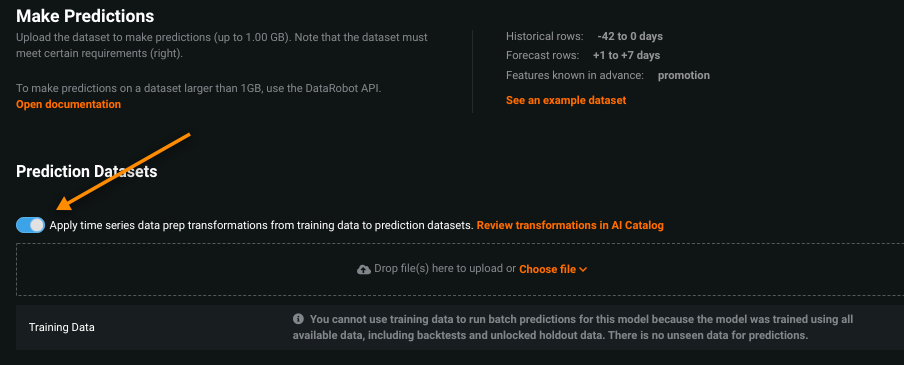

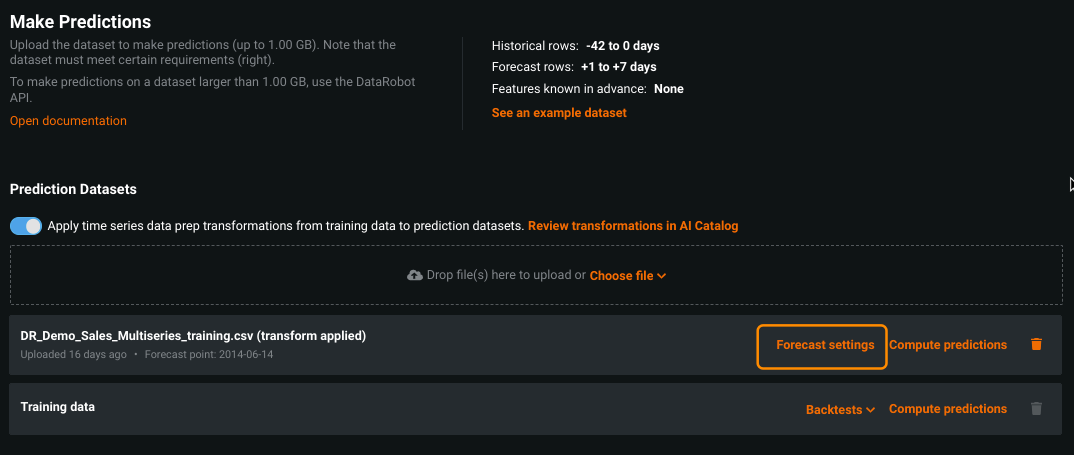

When a project is created from a dataset that was modified by the data prep tool, you can automatically apply the transformations to a corresponding prediction dataset. On the Make Predictions tab, toggle the option to make your selection:

When on, DataRobot applies the same transformations to the dataset that you upload. Click Review transformations in AI Catalog to view a read-only version of the manual and Spark SQL settings, for example:

Once the dataset is uploaded, configure the forecast settings. (Use forecast point to select a specific date or forecast range to predict on all forecast distances within the selected range.)

Note

You are required to specify a forecast point for forecast point predictions. DataRobot does not apply the most recent valid timestamp (the default when not using the tool).

When you deploy a model built from a prepped dataset, the Make Predictions tab in the Deployments section also allows you to apply time series data prep transformations.

See also the considerations for working with the time series data prep tool.