Set up humility rules¶

Availability information

The Humility tab is only available for DataRobot MLOps users. Contact your DataRobot representative for more information about enabling this feature. To enable humility-over-time monitoring for a deployment (displayed on the Summary page), you must enable Data Drift monitoring.

MLOps allows you to create humility rules for deployments on the Humility > Settings tab. Humility rules enable models to recognize, in real time, when they make uncertain predictions or receive data they have not seen before. Unlike data drift, model humility does not deal with broad statistical properties over time. Instead, it is triggered for individual predictions, so you can define the desired behavior for each trigger. Adding triggers and corresponding actions to predictions with humility rules helps mitigate risk for models in production. Humility rules also help you identify and handle data integrity issues during monitoring and find the root cause of unstable predictions.

The Humility tab contains the following sub-tabs:

- Summary: View a summary of humility data over time after configuring humility rules and making predictions with humility monitoring enabled.

- Settings: Create humility rules to monitor for uncertainty and specify actions to manage it.

- Prediction Warnings (for regression projects only): Configure prediction warnings to detect when deployments produce predictions with outlier values.

Multiseries projects have their own humility rules, described below. They follow the same general workflow as humility rules for AutoML projects but have specific settings and options.

Create humility rules¶

To create humility rules for a deployment:

-

In the Deployments inventory, select a deployment and navigate to the Humility > Settings tab.

-

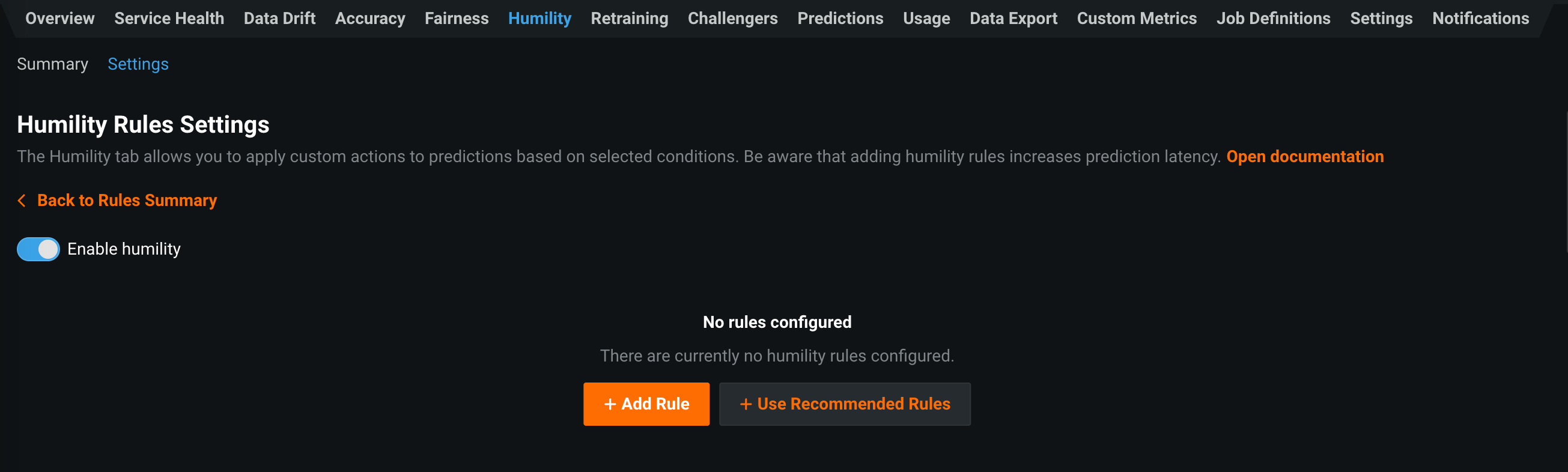

On the Humility Rules Settings page, if you haven't enabled humility for a model, click the Enable humility toggle, then:

-

To create a new, custom rule, click + Add Rule.

-

To use the rules provided by DataRobot, click + Use Recommended Rules.

-

-

Click the pencil icon (

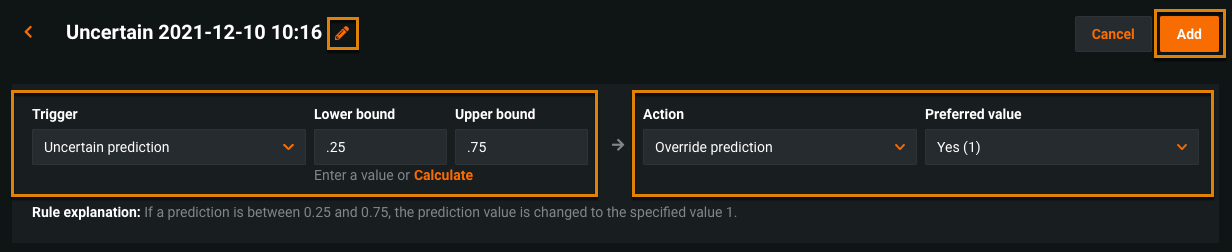

) to enter a name for the rule. Then select a Trigger and specify an Action to take based on the selected trigger. The trigger detects a rule violation and the action handles the violating prediction.

) to enter a name for the rule. Then select a Trigger and specify an Action to take based on the selected trigger. The trigger detects a rule violation and the action handles the violating prediction.When rule configuration is complete, a rule explanation displays below the rule describing what happens for the configured trigger and respective action.

-

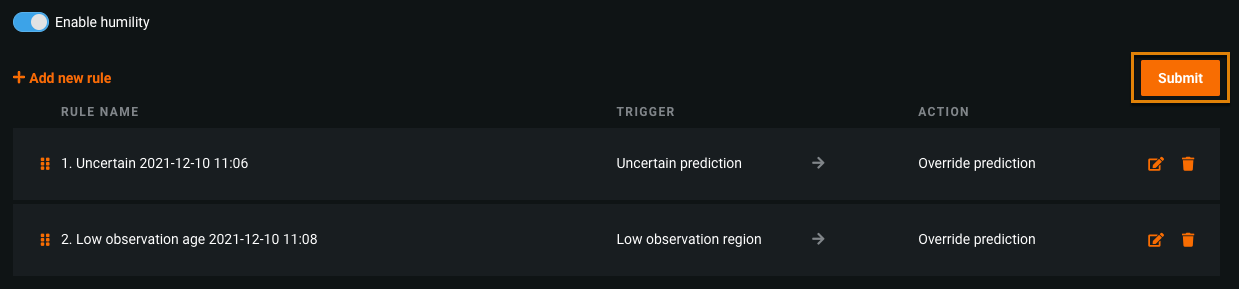

Click Add to save the rule, and click +Add new rule to add additional rules.

-

After adding and editing the rules, click Submit.

Warning

Clicking Submit is the only way to permanently save new rules and rule changes. If you navigate away from the Humility tab without clicking Submit, your rules and edits to rules are not saved.

Note

If a rule is a duplicate of an existing rule, you cannot save it. In this case, when you click Submit, a warning displays:

After you save and submit the humility rules, DataRobot monitors the deployment using the new rules and any previously created rules. After a rule is created, the prediction response body returns the humility object. Refer to the Prediction API documentation for more information.

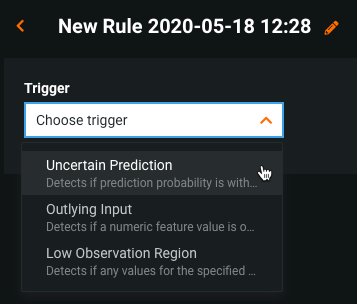

Choose a trigger for the rule¶

Select a Trigger for the rule you want to create. There are three triggers available:

Each trigger requires specific settings. The following table and subsequent sections describe these settings:

| Trigger | Description |

|---|---|

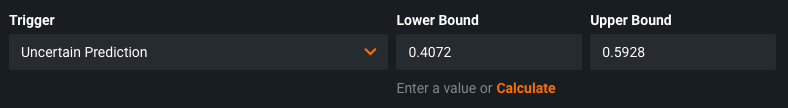

| Uncertain Prediction | Detects whether a prediction's value violates the configured thresholds. You must set lower-bound and upper-bound thresholds for prediction values. |

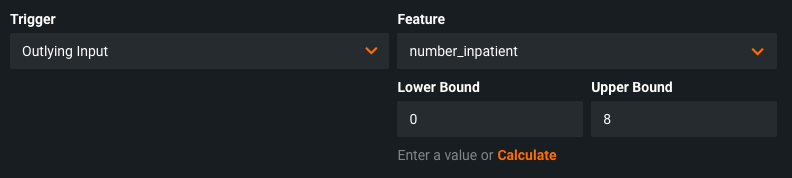

| Outlying Input | Detects if the input value of a numeric feature is outside of the configured thresholds. |

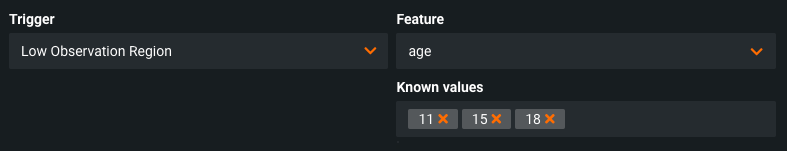

| Low Observation Region | Detects if the input value of a categorical feature value is not included in the list of specified values. |

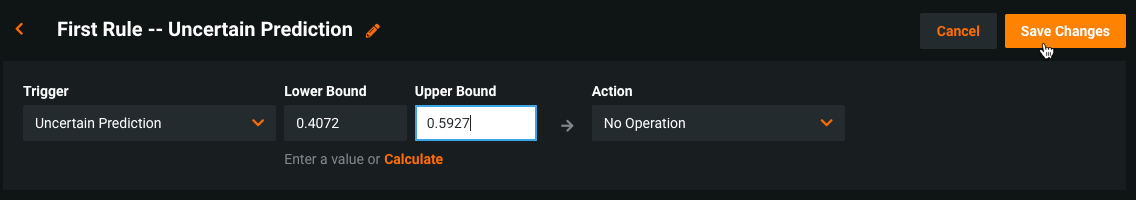

Uncertain Prediction¶

To configure the Uncertain Prediction trigger, set lower-bound and upper-bound thresholds for prediction values. You can either enter these values manually or click Calculate to use thresholds computed from the model's Holdout partition (available only for DataRobot models). For regression models, the trigger detects any prediction value outside the configured thresholds. For binary classification models, the trigger detects any prediction whose probability value is inside the thresholds. You can view your deployment's model type on the Settings > Data tab.
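The following minimal Python sketch shows how the Uncertain Prediction decision flips between regression and binary classification models. It is an illustration, not DataRobot's implementation. The names `Thresholds` and `uncertain_prediction_triggered` are hypothetical, and treating both bounds as inclusive is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical container for a rule's lower- and upper-bound thresholds."""
    lower: float
    upper: float


def uncertain_prediction_triggered(value: float, thresholds: Thresholds, model_type: str) -> bool:
    """Return True if the Uncertain Prediction trigger fires for one prediction.

    Assumption: bounds are inclusive, so a value equal to a bound counts as inside.
    """
    inside = thresholds.lower <= value <= thresholds.upper
    if model_type == "regression":
        # Regression: predicted values outside the thresholds are uncertain.
        return not inside
    if model_type == "binary":
        # Binary classification: probabilities inside the thresholds are uncertain.
        return inside
    raise ValueError(f"Uncertain Prediction is not supported for {model_type!r} models")


print(uncertain_prediction_triggered(100, Thresholds(1, 50), "regression"))  # True
print(uncertain_prediction_triggered(0.52, Thresholds(0.4, 0.6), "binary"))  # True
```

For a binary model, a band around 0.5 is a natural choice because probabilities near the decision boundary are the least certain.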

Outlying Input¶

To configure the outlying input trigger, select a numeric feature and set the lower-bound and upper-bound thresholds for its input values. Enter the values manually or click Calculate to use computed thresholds derived from the training data of the model (only available for DataRobot models).
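A minimal sketch of the Outlying Input check for one prediction row, assuming the row arrives as a dictionary of feature values. The function name is hypothetical. Inclusive bounds are an assumption, and so is the choice that a missing value does not fire the trigger.

```python
from typing import Any, Mapping


def outlying_input_triggered(row: Mapping[str, Any], feature: str, lower: float, upper: float) -> bool:
    """Return True if the numeric feature's input value is outside [lower, upper].

    Assumptions: bounds are inclusive, and a missing value does not fire the trigger.
    """
    value = row.get(feature)
    if value is None:
        return False
    return not (lower <= value <= upper)


# Hypothetical "age" feature with thresholds 18 to 90.
print(outlying_input_triggered({"age": 120}, "age", 18, 90))  # True
print(outlying_input_triggered({"age": 45}, "age", 18, 90))   # False
```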

Low Observation Region¶

To configure the Low Observation Region trigger, select a categorical feature and indicate one or more values. Any input value in a prediction request that does not match one of the indicated values triggers the rule's action.
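The Low Observation Region check amounts to a set-membership test. This sketch uses a hypothetical function name and a hypothetical "state" feature. It assumes that a missing value counts as not matching the indicated values.

```python
from typing import Any, Collection, Mapping


def low_observation_region_triggered(
    row: Mapping[str, Any], feature: str, indicated_values: Collection[str]
) -> bool:
    """Return True if the categorical input value is not one of the indicated values.

    Assumption: a missing value (None) does not match, so it fires the trigger.
    """
    return row.get(feature) not in indicated_values


indicated = {"CA", "NY", "TX"}  # hypothetical values indicated for a "state" feature
print(low_observation_region_triggered({"state": "NY"}, "state", indicated))  # False
print(low_observation_region_triggered({"state": "WY"}, "state", indicated))  # True
```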

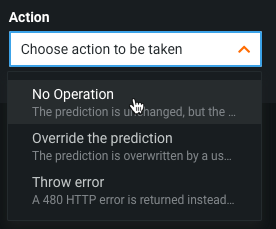

Choose an action for the rule¶

Select an Action for the rule you are creating. DataRobot applies the action if the trigger indicates a rule violation. There are three actions available:

| Action | Description |
|---|---|
| Override prediction | Replaces the predicted value of rows that violate the trigger with the value configured for the action. |
| Throw error | Rows that violate the trigger return a 480 HTTP error with the predictions. These errors also contribute to the data error rate on the Service Health tab. |
| No operation | No changes are made to the detected prediction value. |

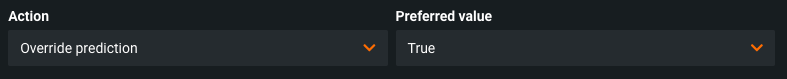

Override prediction¶

To configure the override action, set the value that replaces the returned value for predictions that violate the trigger. For binary classification and multiclass models, the value must be one of the model's class labels (for example, "True" or "False" for a binary model). For regression models, enter a value manually or use the maximum, minimum, or mean provided by DataRobot (available only for DataRobot models).

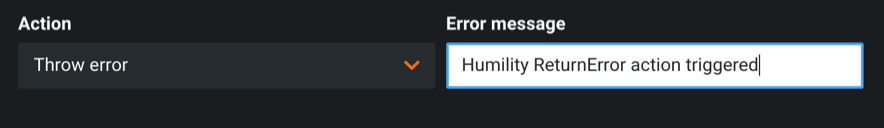

Throw error¶

To configure the throw error action, use the default error message provided or specify your own custom error message. This error message is returned along with a 480 HTTP error with the predictions.

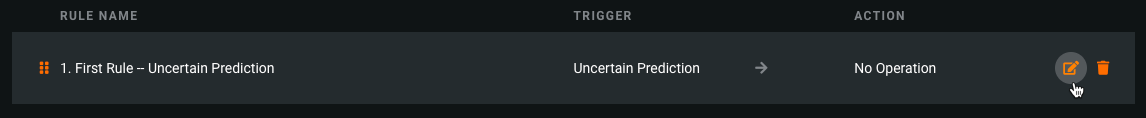

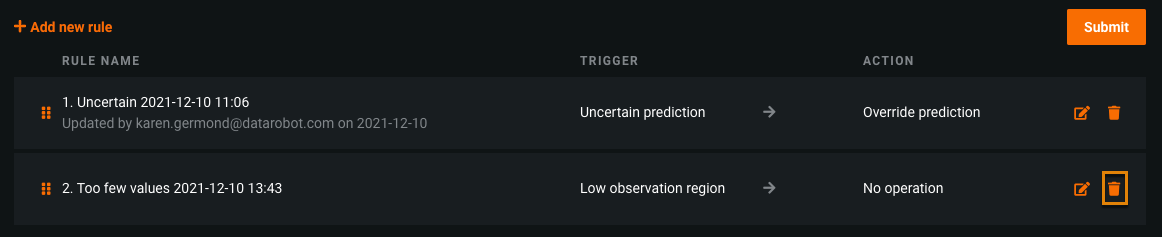
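The three actions described above can be sketched for a single row as follows. This is a hedged illustration, not DataRobot's code. `HumilityRuleError`, `apply_action`, and the placeholder default message are all hypothetical. Only the 480 status code comes from this page.

```python
from typing import Any, Optional


class HumilityRuleError(Exception):
    """Hypothetical error for rows that violate a rule with the Throw error action."""

    def __init__(self, message: str, status_code: int = 480) -> None:
        super().__init__(message)
        self.status_code = status_code  # HTTP 480, as described above


# Placeholder text only; this is not DataRobot's actual default error message.
DEFAULT_ERROR_MESSAGE = "Humility rule triggered"


def apply_action(
    prediction: Any,
    triggered: bool,
    action: str,
    override_value: Any = None,
    error_message: Optional[str] = None,
) -> Any:
    """Return the prediction for one row after applying a rule's action."""
    if not triggered or action == "no_operation":
        return prediction  # nothing to do: value unchanged
    if action == "override_prediction":
        return override_value  # a class label or a regression value
    if action == "throw_error":
        raise HumilityRuleError(error_message or DEFAULT_ERROR_MESSAGE)
    raise ValueError(f"Unknown action: {action!r}")


print(apply_action(100.0, True, "override_prediction", override_value=42.0))  # 42.0
try:
    apply_action(100.0, True, "throw_error", error_message="Prediction out of range")
except HumilityRuleError as err:
    print(err.status_code, err)  # 480 Prediction out of range
```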

Edit humility rules¶

If you have Owner permissions, you can edit or delete existing rules from the Humility > Settings tab.

Note

Edits to humility rules can have a significant impact on deployment predictions, because, depending on the configured rules, prediction values can be overwritten or requests can return errors.

Edit a rule¶

- Select the pencil icon for the rule.

- Change the trigger, action, and any associated values for the rule. When finished, click Save Changes.

- After editing the rules, click Submit. If you navigate away from the Humility tab without clicking Submit, your edits will be lost.

Delete a rule¶

- Select the trash can icon for the rule.

- Click Submit to complete the delete operation. If you navigate away from the Humility tab without clicking Submit, your rules will not be deleted.

Reorder a rule¶

To reorder the rules listed, drag and drop them in the desired order and click Submit.

Rule application order¶

The displayed list order of your rules determines the order in which they are applied. DataRobot evaluates every rule's trigger. If multiple rules are triggered by the same prediction, DataRobot applies the first rule in the list that changes the prediction value. However, if any triggered rule has the Throw error action, that rule takes priority.

For example, consider a deployment with the following rules:

| Trigger | Action | Thresholds |
|---|---|---|
| Rule 1: Uncertain Prediction | Override the prediction value to 55. | Lower: 1, Upper: 50 |
| Rule 2: Uncertain Prediction | Override the prediction value to 66. | Lower: 45, Upper: 50 |

If a prediction returns the value 100, both rules trigger, because 100 is outside both threshold ranges. Rule 1 is first in the list, so it takes priority and the prediction value is overwritten to 55. The action to overwrite the value to 66 (Rule 2) is ignored.

In another example, consider a deployment with the following rules:

| Trigger | Action | Thresholds |
|---|---|---|
| Rule 1: Uncertain Prediction | Override the prediction value to 55. | Lower: 1, Upper: 55 |
| Rule 2: Uncertain Prediction | Throw an error. | Lower: 45, Upper: 60 |

If a prediction returns the value 70, both rules trigger, because 70 is outside both threshold ranges. However, Rule 2 takes priority over Rule 1 because it is configured to throw an error. Therefore, the value is not overwritten: the Throw error action takes priority over the order of the rules in the list.
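The application order described in this section can be sketched for regression Uncertain Prediction rules as follows. This is a minimal illustration under stated assumptions, not DataRobot's implementation. `Rule`, `apply_rules`, and `HumilityRuleError` are hypothetical names, and the bounds are treated as inclusive. The sketch evaluates every trigger. If any triggered rule throws an error, that rule wins. Otherwise, the first triggered rule that changes the value wins.

```python
from dataclasses import dataclass
from typing import List, Optional


class HumilityRuleError(Exception):
    """Hypothetical error raised when a triggered rule has the Throw error action."""


@dataclass
class Rule:
    name: str
    lower: float
    upper: float
    action: str  # "override_prediction", "throw_error", or "no_operation"
    override_value: Optional[float] = None


def apply_rules(prediction: float, rules: List[Rule]) -> float:
    """Apply regression Uncertain Prediction rules in list order."""
    # Every rule's trigger is evaluated; regression fires outside [lower, upper].
    triggered = [r for r in rules if not (r.lower <= prediction <= r.upper)]
    # A triggered Throw error rule takes priority regardless of its position.
    for rule in triggered:
        if rule.action == "throw_error":
            raise HumilityRuleError(f"{rule.name} triggered")
    # Otherwise the first triggered rule that changes the value wins.
    for rule in triggered:
        if rule.action == "override_prediction":
            return rule.override_value
    return prediction  # no triggered rule changed the value


first_example = [
    Rule("Rule 1", lower=1, upper=50, action="override_prediction", override_value=55),
    Rule("Rule 2", lower=45, upper=50, action="override_prediction", override_value=66),
]
print(apply_rules(100, first_example))  # 55: Rule 1 wins because it is listed first
```

With the same rules, a prediction of 25 falls inside Rule 1's range but outside Rule 2's range, so only Rule 2 triggers and the value becomes 66.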

Prediction warnings¶

Enable prediction warnings for regression model deployments on the Humility > Prediction warnings tab. Prediction warnings allow you to mitigate risk and make models more robust by identifying when predictions do not match their expected result in production. The feature detects when deployments produce predictions with outlier values and summarizes them in a report that is returned with your predictions.

Prediction warnings availability

Prediction warnings are only available for deployments using regression models. This feature does not support classification or time series models.

Prediction warnings provide the same functionality as the Uncertain Prediction trigger that is part of humility monitoring. You may want to enable both, however, because prediction warning results are integrated into the Predictions Over Time chart on the Data drift tab.

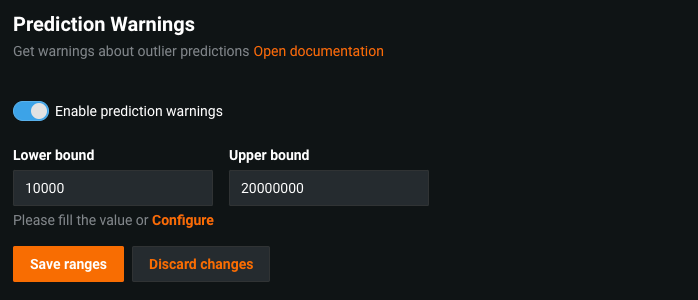

Enable prediction warnings¶

- To enable prediction warnings, navigate to Humility > Prediction warnings.

- Enter a Lower bound and Upper bound, or click Configure to have DataRobot calculate the prediction warning ranges.

    DataRobot derives thresholds for the prediction warning ranges from the Holdout partition of your model. These are the boundaries for outlier detection: DataRobot reports any prediction result outside these limits. You can accept the Holdout-based thresholds or define the ranges manually instead.

- After making any desired changes, click Save ranges.

After you save the ranges, you can include prediction outlier warnings when you make predictions. Prediction warnings are reported on the Predictions Over Time chart on the Data drift tab.

Note

Prediction warnings are not retroactive. For example, if you set the upper-bound threshold for outliers to 40, a prediction with a value of 50, made prior to setting up thresholds, is not retroactively detected as an outlier. Prediction warnings will only return with prediction requests made after the feature is enabled.
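The outlier check behind prediction warnings can be sketched as follows. The sketch also shows that the check is not retroactive. It is a hypothetical model of the behavior described on this page. The class and method names are invented for illustration. The assumptions are that predictions pass through unchanged and that only the outlier flag is added.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class PredictionWarnings:
    """Hypothetical model of prediction warnings for one regression deployment."""

    lower: Optional[float] = None
    upper: Optional[float] = None
    outliers: List[float] = field(default_factory=list)

    def save_ranges(self, lower: float, upper: float) -> None:
        if lower > upper:
            raise ValueError("Lower bound must not exceed upper bound")
        self.lower, self.upper = lower, upper

    def score(self, prediction: float) -> Tuple[float, bool]:
        """Return the unchanged prediction and whether it is flagged as an outlier."""
        if self.lower is None or self.upper is None:
            return prediction, False  # no ranges saved yet: nothing is flagged
        is_outlier = not (self.lower <= prediction <= self.upper)
        if is_outlier:
            self.outliers.append(prediction)
        return prediction, is_outlier


deployment_warnings = PredictionWarnings()
print(deployment_warnings.score(50))  # (50, False): made before ranges were saved
deployment_warnings.save_ranges(0, 40)
print(deployment_warnings.score(50))  # (50, True): flagged only after saving
print(deployment_warnings.outliers)   # [50]
```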

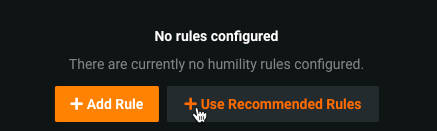

Recommended rules¶

If you want DataRobot to recommend a set of rules for your deployment, click Use Recommended Rules when adding a new humility rule.

This option generates two automatically configured humility rules:

- Rule 1: The Uncertain prediction trigger and the No operation action.

- Rule 2: The Outlying input trigger for the most important numeric feature (based on Feature Impact results) and the No operation action.

Both recommended rules have automatically calculated upper- and lower-bound thresholds.
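The selection logic for the two recommended rules can be sketched as follows. The sketch assumes Feature Impact scores and feature types are available as dictionaries. DataRobot's threshold calculation is not described here, so the bounds are passed in precomputed. The function name and the rule dictionary layout are hypothetical. Skipping Rule 2 when the model has no numeric features is also an assumption.

```python
from typing import Dict, List, Tuple

Bounds = Tuple[float, float]


def recommended_rules(
    feature_impact: Dict[str, float],
    feature_types: Dict[str, str],
    prediction_bounds: Bounds,
    feature_bounds: Dict[str, Bounds],
) -> List[dict]:
    """Build the two recommended rules; bounds are supplied, not calculated here."""
    rules = [{
        "trigger": "uncertain_prediction",
        "action": "no_operation",
        "bounds": prediction_bounds,
    }]
    numeric = [f for f in feature_impact if feature_types.get(f) == "numeric"]
    if numeric:  # assumption: skip Rule 2 if the model has no numeric features
        top = max(numeric, key=lambda f: feature_impact[f])
        rules.append({
            "trigger": "outlying_input",
            "feature": top,
            "action": "no_operation",
            "bounds": feature_bounds[top],
        })
    return rules


impact = {"region": 0.9, "income": 0.7, "age": 0.4}
types = {"region": "categorical", "income": "numeric", "age": "numeric"}
rules = recommended_rules(impact, types, (10.0, 500.0),
                          {"income": (0.0, 250000.0), "age": (18.0, 90.0)})
print(rules[1]["feature"])  # income: the top numeric feature, not the top feature overall
```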

Multiseries humility rules¶

DataRobot supports multiseries blueprints that perform feature derivation and can predict on series with partial history or no history at all, that is, series that were not part of training and do not have enough points in the training dataset for accurate predictions. This is useful, for example, in demand forecasting: when a new product is introduced, you may want initial sales predictions. In conjunction with "cold start modeling" (modeling a series that lacks sufficient historical data), you can predict on new series while keeping accurate predictions for series that have a history.

With this support in place, you can set up a humility rule that:

- Triggers off a new series (unseen in training data).

- Takes a specified action.

- (Optional) Returns a custom error message.

Note

If you replace a model within a deployment using a model from a different project, the humility rule is disabled. If the replacement is a model from the same project, the rule is saved.

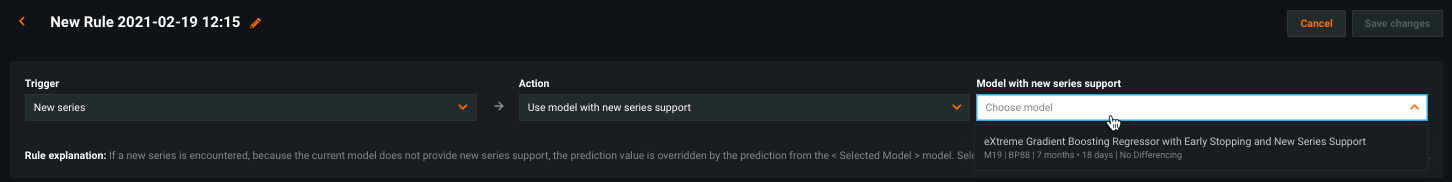

Create multiseries humility rules¶

- Select a deployment from your Deployments inventory and click Humility. Toggle Enable humility to on.

- Click + Add new rule to begin configuring the rule. Time series deployments can only have one humility rule applied. If you already have a rule, click it to make changes.

- Select a trigger. To handle new series data, select New series as the trigger. This rule detects a series that was not available in the training data and does not have enough history in the prediction data for accurate predictions.

- Select an action. Subsequent options depend on the selected action, as described in the following table:

| Action | If a new series is encountered... | Further action |
|---|---|---|
| No operation | DataRobot records the event, but the prediction is unchanged. | N/A |
| Use model with new series support | The prediction is overridden by the prediction from a selected model with new series support. | Select a model that supports unseen series modeling. DataRobot preloads supported models in the dropdown. |
| Use global most frequent class (binary classification only) | The prediction value is replaced with the most frequent class across all series. | N/A |
| Use target mean for all series (regression only) | The prediction value is overridden by the global target mean for all series. | N/A |
| Override prediction | The prediction value is changed to the specified preferred value. | Enter a numeric value to replace the prediction value for any new series. |
| Return error | The default or a custom error message is returned with the 480 HTTP error. | Use the default or click in the box to enter a custom error message. |

- When finished, click Add to create the new rule or save your changes.
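The action table above can be sketched as a single dispatch function. It is a hedged illustration with hypothetical names. It simplifies the New series trigger to "series ID not seen in training" and does not model the check for insufficient prediction history. The backup model is represented by any callable that takes a series ID.

```python
from collections import Counter
from statistics import mean
from typing import Any, Callable, Optional, Sequence, Set


class HumilityRuleError(Exception):
    """Hypothetical error returned with HTTP status 480 for the Return error action."""

    def __init__(self, message: str, status_code: int = 480) -> None:
        super().__init__(message)
        self.status_code = status_code


def apply_new_series_rule(
    series_id: str,
    prediction: Any,
    *,
    training_series: Set[str],
    action: str,
    training_targets: Sequence[Any] = (),
    backup_model: Optional[Callable[[str], Any]] = None,
    override_value: Any = None,
    error_message: str = "New series detected",
) -> Any:
    """Apply a New series humility rule to one prediction.

    Simplification: a series is "new" if its ID was not in the training data.
    """
    if series_id in training_series:
        return prediction  # known series: the trigger does not fire
    if action == "no_operation":
        return prediction  # the event is recorded; the value is unchanged
    if action == "use_model_with_new_series_support":
        if backup_model is None:
            raise ValueError("Select a model with new series support")
        return backup_model(series_id)
    if action == "use_global_most_frequent_class":  # binary classification only
        return Counter(training_targets).most_common(1)[0][0]
    if action == "use_target_mean":  # regression only
        return mean(training_targets)
    if action == "override_prediction":
        return override_value
    if action == "return_error":
        raise HumilityRuleError(error_message)
    raise ValueError(f"Unknown action: {action!r}")


known = {"store_1", "store_2"}
print(apply_new_series_rule("store_9", 12.0, training_series=known,
                            action="use_target_mean",
                            training_targets=[10.0, 20.0, 30.0]))  # 20.0
```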

Select a model replacement¶

When you expand the Model with new series support dropdown, DataRobot provides a list of models available from the Model Registry, not the Leaderboard. Using models available from the registry decouples the model from the project and provides support for packages. In this way, you can use a backup model from any compatible project as long as it uses the same target and has the same series available.
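The compatibility condition in the paragraph above can be sketched as a filter over registry packages. `ModelPackage` and `replacement_candidates` are hypothetical, and so is the `supports_new_series` flag, which stands in for the NEW SERIES OPTIMIZED badge. The sketch reads "has the same series available" literally, as an exact match of the series sets.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ModelPackage:
    """Hypothetical summary of a Model Registry package."""
    name: str
    target: str
    series: FrozenSet[str]
    supports_new_series: bool


def replacement_candidates(
    packages: List[ModelPackage], target: str, series: FrozenSet[str]
) -> List[str]:
    """Names of packages usable as the new-series model for a deployment."""
    return [
        p.name
        for p in packages
        if p.supports_new_series and p.target == target and p.series == series
    ]


stores = frozenset({"store_1", "store_2"})
packages = [
    ModelPackage("backup_a", "sales", stores, True),
    ModelPackage("backup_b", "sales", stores, False),   # not optimized for new series
    ModelPackage("backup_c", "returns", stores, True),  # different target
]
print(replacement_candidates(packages, "sales", stores))  # ['backup_a']
```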

Note

If no packages are available, deploy a “new series support” model and add it to the Model Registry. You can identify qualifying models from the Leaderboard by the NEW SERIES OPTIMIZED badge. There is also a notification banner in the Make Predictions tab if you try to use a non-optimized model.

Humility tab considerations¶

Consider the following when using the Humility tab:

- You cannot define more than 10 humility rules for a deployment.

- Humility rules can only be defined by owners of the deployment. Users of the deployment can view the rules but cannot edit them or define new rules.

- The "Uncertain Prediction" trigger is only supported for regression and binary classification models.

- Multiclass models only support the "Override prediction" action.
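The considerations above can be collected into a pre-submit check. This sketch also includes the one-rule limit for time series deployments and the duplicate-rule restriction described earlier on this page. The function name and the rule dictionary layout are hypothetical. The sketch reads the multiclass consideration as restricting the action to Override prediction.

```python
from typing import Dict, List

MAX_RULES = 10  # from the first consideration above


def validate_rules(
    rules: List[Dict], model_type: str, is_time_series: bool, is_owner: bool
) -> List[str]:
    """Return a list of problems; an empty list means the rules pass these checks."""
    problems = []
    if not is_owner:
        problems.append("Only deployment owners can define or edit humility rules.")
    limit = 1 if is_time_series else MAX_RULES
    if len(rules) > limit:
        problems.append(f"At most {limit} humility rule(s) allowed; got {len(rules)}.")
    seen = set()
    for i, rule in enumerate(rules, start=1):
        if rule["trigger"] == "uncertain_prediction" and model_type not in ("regression", "binary"):
            problems.append(f"Rule {i}: Uncertain Prediction needs a regression or binary model.")
        if model_type == "multiclass" and rule["action"] != "override_prediction":
            problems.append(f"Rule {i}: multiclass models only support Override prediction.")
        key = repr(sorted(rule.items()))  # identical settings mean a duplicate rule
        if key in seen:
            problems.append(f"Rule {i} duplicates an earlier rule.")
        seen.add(key)
    return problems


print(validate_rules([{"trigger": "uncertain_prediction", "action": "no_operation"}],
                     "multiclass", is_time_series=False, is_owner=True))
```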