Custom model Portable Prediction Server

Availability information

The Portable Prediction Server is a premium feature exclusive to DataRobot MLOps. Contact your DataRobot representative or administrator for information on enabling this feature.

The custom model Portable Prediction Server (PPS) is a solution for deploying a custom model to an external prediction environment. It can be built and run disconnected from main installation environments. The PPS is available as a downloadable bundle containing a deployed custom model, a custom environment, and the monitoring agent. Once started, the custom model PPS installation serves predictions via the DataRobot REST API.

Download and configure the custom model PPS bundle

The custom model PPS bundle is provided for any custom model tagged as having an external prediction environment in the deployment inventory.

Note

Before proceeding, note that DataRobot supports Linux-based prediction environments for PPS. It is possible to use other Unix-based prediction environments, but only Linux-based systems are validated and officially supported.

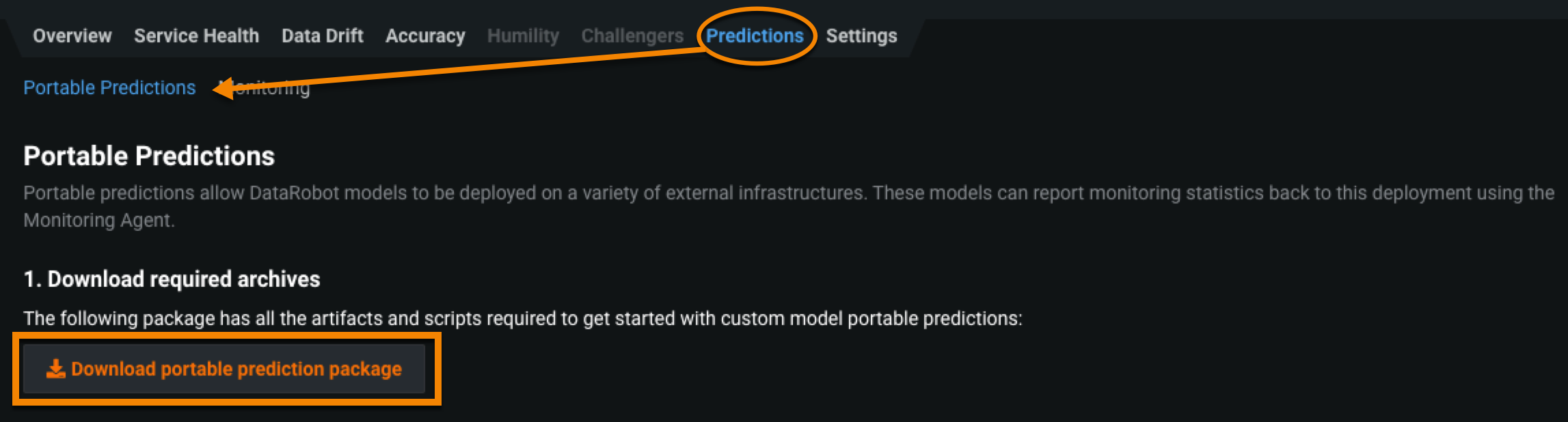

Select the custom model you wish to use, navigate to the Predictions > Portable Predictions tab of the deployment, and select Download portable prediction package.

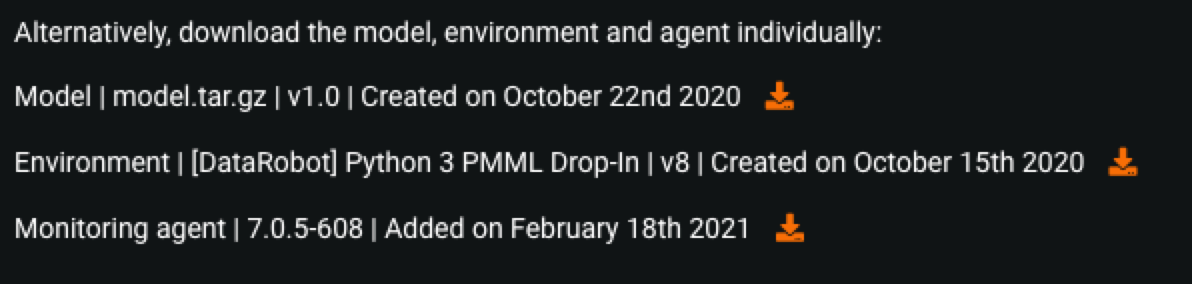

Instead of downloading the contents in one bundle, you can download the custom model, custom environment, or monitoring agent as individual components.

After downloading the .zip file, extract it locally with an unzip command:

unzip <cm_pps_installer_*>.zip

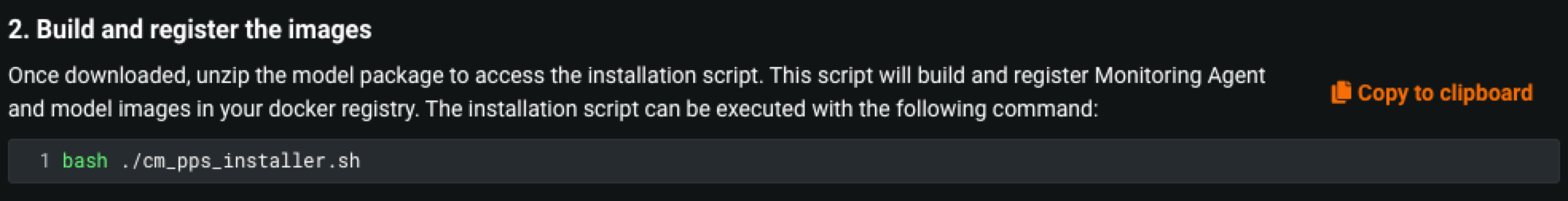

Next, use the installation script (unzipped from the bundle) to build the custom model PPS with monitoring agent support. To do so, run the command displayed in step 2.

For more build options, such as the ability to skip the monitoring agent Docker image install, run:

bash ./cm_pps_installer.sh --help

If the build passes without errors, it adds two new Docker images to the local Docker registry:

- `cm_pps_XYZ` is the image assembling the custom model and custom environment.
- `datarobot/mlops-tracking-agent` is the monitoring agent Docker image, used to report prediction statistics back to DataRobot.
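One way to confirm the build result is to list the local image repositories and look for both names. The following is a minimal sketch, assuming the `docker` CLI is on the PATH and that the model image name starts with `cm_pps_` (the `XYZ` suffix above is a placeholder). The helper names `find_pps_images` and `list_local_repositories` are hypothetical and not part of the bundle.

```python
import subprocess

# Assumption: the model image repository starts with this prefix; the part
# after it (shown as XYZ in the text) differs per bundle.
MODEL_IMAGE_PREFIX = "cm_pps_"
AGENT_IMAGE = "datarobot/mlops-tracking-agent"


def find_pps_images(repositories):
    """Return (model_images, agent_found) for a list of repository names."""
    model_images = sorted(
        {name for name in repositories if name.startswith(MODEL_IMAGE_PREFIX)}
    )
    agent_found = AGENT_IMAGE in repositories
    return model_images, agent_found


def list_local_repositories():
    """Ask the local Docker daemon for the repository name of every image."""
    result = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]
```

Calling `find_pps_images(list_local_repositories())` after the build should return at least one model image name and `True` for the monitoring agent if both images were created.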

Make predictions with PPS

DataRobot provides two example Docker Compose configurations in the bundle to get you started with the custom model PPS:

- `docker-compose-fs.yml`: uses a file system-based spooler between the model container and the monitoring agent container. Recommended for a single model.
- `docker-compose-rabbit.yml`: uses a RabbitMQ-based spooler between the model container and the monitoring agent container. Use this configuration to run several models with a single monitoring agent instance.

Include dependencies for the example Docker Compose files

To use the provided Docker Compose files, add the datarobot-mlops package (with additional dependencies as needed) to your model's requirements.txt file.

After selecting the configuration to use, edit the Docker Compose file to include the deployment ID and your API key in the corresponding fields.

To define a runtime parameters file, modify the example Docker Compose configuration file you're using to uncomment the two commented-out lines shown below:

    volumes:
      - shared-volume:/opt/mlops_spool
      # - <path_to_runtime_params_file_on_host>:/opt/runtime.yaml
    environment:
      ADDRESS: "0.0.0.0:6788"
      MODEL_ID: "{{ model_id }}"
      DEPLOYMENT_ID: "< provide deployment id to report to >"
      # RUNTIME_PARAMS_FILE: "/opt/runtime.yaml"

When you uncomment the line under volumes, replace the `<path_to_runtime_params_file_on_host>` placeholder with the path to the runtime parameters file on the host. The line under environment is a DRUM environment variable, described in the DRUM-based environment variables section. If you aren't using an example Docker Compose configuration, add these lines to your configuration file. In both scenarios, make sure the runtime parameters file exists and the path to the file is correct.

Once the Docker Compose file is properly configured, start the prediction server:

- For single models using the file system-based spooler, run:

      docker compose -f docker-compose-fs.yml up

- For multiple models with a single monitoring agent instance, use the RabbitMQ-based spooler:

      docker compose -f docker-compose-rabbit.yml up

When the PPS is running, the model container exposes three HTTP endpoints:

- `POST /predictions` scores a given dataset.
- `GET /info` returns information about the loaded model.
- `GET /ping` ensures the tech stack is running.
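Because `GET /ping` reports whether the stack is running, a client can poll it before sending data. The following is a minimal sketch that uses only the Python standard library. It assumes the server listens on `http://localhost:6788` (the default `ADDRESS` port) and that a healthy server answers `/ping` with HTTP 200, which the text above does not state explicitly. The function names are hypothetical.

```python
import time
import urllib.error
import urllib.request

# Assumption: the server is reachable on the local host at the default port.
BASE_URL = "http://localhost:6788"


def ping(base_url=BASE_URL, timeout=2.0):
    """Return True if GET /ping answers with HTTP 200, otherwise False."""
    try:
        with urllib.request.urlopen(f"{base_url}/ping", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


def wait_until_ready(probe=ping, attempts=30, delay=1.0, sleep=time.sleep):
    """Call probe() up to `attempts` times, pausing `delay` seconds between tries.

    Returns the 1-based attempt number that succeeded, or None if none did.
    """
    for attempt in range(1, attempts + 1):
        if probe():
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None
```

The `probe` and `sleep` parameters exist so the polling loop can be exercised without a running server.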

Note

Prediction routes support only comma-separated value (CSV) scoring datasets. The maximum payload size is 50 MB.
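For datasets larger than the payload limit, one option is to split the CSV into several requests, repeating the header row in each. The following is a rough sketch, assuming the limit means 50 × 1024 × 1024 bytes and that every record occupies a single line (no quoted fields containing line breaks). `split_csv` is a hypothetical helper, not a DataRobot utility.

```python
# Assumption: the documented 50 MB limit is interpreted as 50 MiB.
MAX_PAYLOAD_BYTES = 50 * 1024 * 1024


def split_csv(csv_text, max_bytes=MAX_PAYLOAD_BYTES):
    """Split CSV text into chunks that each start with the header row and
    whose UTF-8 size does not exceed max_bytes."""
    lines = csv_text.splitlines(keepends=True)
    if not lines:
        return []
    header, rows = lines[0], lines[1:]
    if not header.endswith("\n"):
        header += "\n"
    header_size = len(header.encode("utf-8"))

    chunks, current, size = [], [], header_size
    for row in rows:
        row_size = len(row.encode("utf-8"))
        if header_size + row_size > max_bytes:
            raise ValueError("a single row does not fit in the payload limit")
        if current and size + row_size > max_bytes:
            # Close the current chunk and start a new one with a fresh header.
            chunks.append(header + "".join(current))
            current, size = [], header_size
        current.append(row)
        size += row_size
    if current:
        chunks.append(header + "".join(current))
    return chunks
```

Each returned chunk can then be sent as its own request body.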

The following demonstrates a sample prediction request and JSON response:

    curl -X POST http://localhost:6788/predictions/ \
        -H "Content-Type: text/csv" \
        --data-binary @path/to/scoring.csv

    {
      "data": [{
          "prediction": 23.03329917456927,
          "predictionValues": [{
            "label": "MEDV",
            "value": 23.03329917456927
          }],
          "rowId": 0
        },
        {
          "prediction": 33.01475956455371,
          "predictionValues": [{
            "label": "MEDV",
            "value": 33.01475956455371
          }],
          "rowId": 1
        }
      ]
    }
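The same request can be issued from Python. The following is a minimal sketch that uses only the standard library, mirrors the curl call above, and reads the `data`, `rowId`, and `prediction` fields shown in the sample response. The function names are hypothetical, and the URL assumes the server runs locally on the default port.

```python
import json
import urllib.request

# URL taken from the curl example; adjust the host and port for your setup.
PREDICT_URL = "http://localhost:6788/predictions/"


def build_request(csv_bytes, url=PREDICT_URL):
    """Build a POST request carrying raw CSV bytes with a text/csv content type."""
    return urllib.request.Request(
        url,
        data=csv_bytes,
        headers={"Content-Type": "text/csv"},
        method="POST",
    )


def predictions_by_row(response_text):
    """Map each rowId to its prediction, based on the sample response shape."""
    payload = json.loads(response_text)
    return {item["rowId"]: item["prediction"] for item in payload["data"]}


def score_file(path, url=PREDICT_URL, timeout=60):
    """Send a CSV file to the prediction server and return {rowId: prediction}."""
    with open(path, "rb") as handle:
        request = build_request(handle.read(), url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return predictions_by_row(resp.read().decode("utf-8"))
```

With the server running, `score_file("path/to/scoring.csv")` would return a dictionary keyed by row ID.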

MLOps environment variables

The following table lists the MLOps service environment variables supported for all custom models using PPS. You may want to adjust these settings based on the run environment used.

| Variable | Description | Default |
|---|---|---|
| `MLOPS_SERVICE_URL` | The address of the running DataRobot application. | Autogenerated value |
| `MLOPS_API_TOKEN` | Your DataRobot API key. | Undefined; must be provided. |
| `MLOPS_SPOOLER_TYPE` | The type of spooler used by the custom model and monitoring agent. | Autogenerated value |
| `MLOPS_FILESYSTEM_DIRECTORY` | The filesystem spooler configuration for the monitoring agent. | Autogenerated value |
| `MLOPS_RABBITMQ_QUEUE_URL` | The RabbitMQ spooler configuration for the monitoring agent. | Autogenerated value |
| `MLOPS_RABBITMQ_QUEUE_NAME` | The RabbitMQ spooler configuration for the monitoring agent. | Autogenerated value |
| `START_DELAY` | Triggers a delay before starting the monitoring agent. | Autogenerated value |

DRUM-based environment variables

The following table lists the environment variables supported for DRUM-based custom environments:

| Variable | Description | Default |
|---|---|---|
| `ADDRESS` | The prediction server's starting address. | `0.0.0.0:6788` |
| `MODEL_ID` | The ID of the deployed model (required for monitoring). | Autogenerated value |
| `DEPLOYMENT_ID` | The deployment ID. | Undefined; must be provided. |
| `MONITOR` | A flag that enables MLOps monitoring. | True. Provide an empty value or remove this variable to disable monitoring. |
| `MONITOR_SETTINGS` | Settings for the monitoring agent spooler. | Autogenerated value |
| `RUNTIME_PARAMS_FILE` | The path to, and name of, the .yaml file containing the runtime parameter values. | Undefined; must be provided. |

Provide a path to the runtime parameters file

To define a RUNTIME_PARAMS_FILE, modify the example Docker Compose configuration file you're using to uncomment the required paths in volumes and environment. If you aren't using an example Docker Compose configuration, add these lines to your configuration file. In both scenarios, make sure the runtime parameters file exists and the path to the file is correct.
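Before starting the containers, it can help to check the settings that have no default. The following is a minimal sketch, assuming you collect the values you plan to set into a single Python dict. It checks `MLOPS_API_TOKEN` and `DEPLOYMENT_ID`, treats an angle-bracket placeholder such as `< provide deployment id to report to >` as unset, and, when a host path is supplied, checks that `RUNTIME_PARAMS_FILE` is set and that the host file exists. `missing_settings` is a hypothetical helper.

```python
import os

# Variables listed above with the default "Undefined; must be provided".
# RUNTIME_PARAMS_FILE is checked separately because it only applies when a
# runtime parameters file is used.
REQUIRED = ("MLOPS_API_TOKEN", "DEPLOYMENT_ID")


def _is_unset(value):
    """Treat None, empty strings, and <...> template placeholders as unset."""
    if value is None:
        return True
    text = str(value).strip()
    return text == "" or (text.startswith("<") and text.endswith(">"))


def missing_settings(env, runtime_params_host_path=None):
    """Return a list of human-readable problems found in `env` (a dict)."""
    problems = [f"{name} is not set" for name in REQUIRED if _is_unset(env.get(name))]
    if runtime_params_host_path is not None:
        if _is_unset(env.get("RUNTIME_PARAMS_FILE")):
            problems.append("RUNTIME_PARAMS_FILE is not set")
        if not os.path.isfile(runtime_params_host_path):
            problems.append(f"{runtime_params_host_path} does not exist on the host")
    return problems
```

An empty list means the checked settings look complete; it does not verify that the values are valid for your DataRobot installation.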

RabbitMQ service environment variables

| Variable | Description | Default |
|---|---|---|
| `RABBITMQ_DEFAULT_USER` | The default RabbitMQ user. | Autogenerated value |
| `RABBITMQ_DEFAULT_PASS` | The default RabbitMQ password. | Autogenerated value |