External prediction comparison

For organizations that have existing supervised time series models outside of the DataRobot application, comparing those models' predictions with DataRobot-driven predictions helps drive better business decisions. With this feature, you can use the output of your forecasts (your predictions) as a baseline to compare against DataRobot predictions. You can compare not only against existing predictions but also against pre-existing models from a project with different settings. DataRobot applies specific, scaled metrics for result comparison.

Enabling external prediction comparison

To compare predictions:

- Create a time-aware project, select a date feature, and, optionally (for time series), select a series ID.

- Create an external baseline file.

- Upload the file from Advanced options.

- Start the project, and when building completes, select a metric.

Create an external baseline file

The baseline file you use to compare predictions is created from the predictions of your alternate model.

Note

The prediction file used for comparison must not have more than 20% missing values in any backtest.
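The limit in the note above can be checked before upload. The following is a minimal sketch only, not DataRobot's own validation. It assumes you already know the date range of each backtest (the `backtests` mapping is hypothetical), that a "missing value" means an empty, `None`, or NaN prediction, and that rows absent from the file entirely are not counted.

```python
from datetime import date

MAX_MISSING_FRACTION = 0.20  # limit stated in the note above


def is_missing(value):
    """Treat None, empty strings, and NaN as missing (an assumption)."""
    if value is None:
        return True
    if isinstance(value, str):
        return value.strip() == ""
    return value != value  # NaN is the only value not equal to itself


def missing_fraction_by_backtest(rows, backtests, date_col, target_col):
    """Return {backtest name: fraction of rows with a missing prediction}.

    `backtests` maps a name to a (start, end) pair of dates; end is exclusive.
    Both the mapping and the row layout are hypothetical.
    """
    counts = {name: [0, 0] for name in backtests}  # [missing, total]
    for row in rows:
        for name, (start, end) in backtests.items():
            if start <= row[date_col] < end:
                counts[name][1] += 1
                if is_missing(row[target_col]):
                    counts[name][0] += 1
    return {name: (m / t if t else 0.0) for name, (m, t) in counts.items()}


# Hypothetical backtest windows and baseline rows.
backtests = {
    "Backtest 1": (date(2023, 8, 1), date(2023, 8, 6)),
    "Backtest 2": (date(2023, 8, 6), date(2023, 8, 10)),
}
values = [100.0, None, 98.0, 97.0, 101.0, None, "", 95.0, 96.0]
rows = [
    {"date": date(2023, 8, 1 + i), "sales": value}
    for i, value in enumerate(values)
]

fractions = missing_fraction_by_backtest(rows, backtests, "date", "sales")
over_limit = [name for name, f in fractions.items() if f > MAX_MISSING_FRACTION]
print(fractions, over_limit)
```

Here Backtest 1 has one missing value in five rows, which sits exactly at the limit, while Backtest 2 has two missing values in four rows and would need to be filled before upload.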

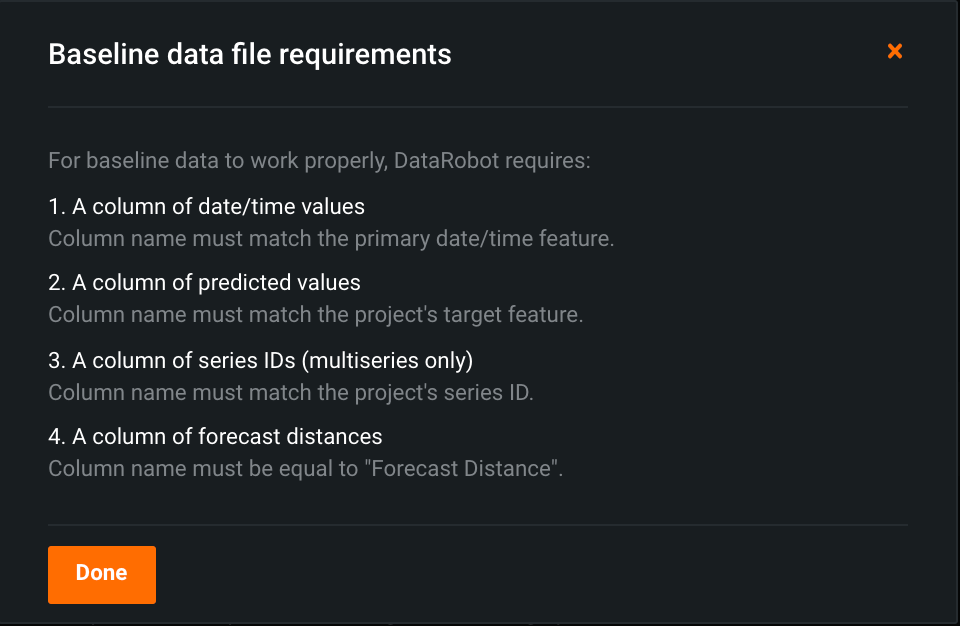

The baseline file must contain the same target, date, series ID (if applicable), and forecast distance columns as the DataRobot models you are building. When uploading, you can view the file requirements from within the setting.

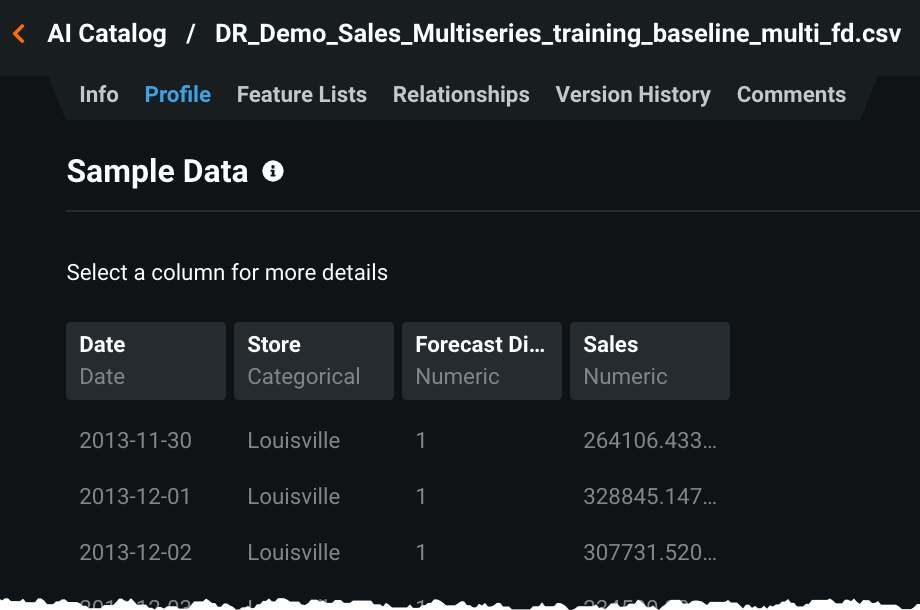

Column names must match exactly, and the date and series ID columns must match the original data. In this multiseries example, stored in the AI Catalog, each row represents the prediction (the value in the sales column) for a specific series ID (the value in the store column) at a specific date (the value in the date column) with a specific forecast distance (the value in the Forecast Distance column).

Baseline prediction file requirements:

| Column | Description |
|---|---|
| Date/time values | Column name must match the primary date/time feature. This timestamp is the forecast timestamp, not the forecast point. For example, for a row with a timestamp of Aug 1 and FD = 1, the baseline value is compared to the Aug 1 actuals (and would have been generated by the baseline model as of July 31). |
| Predicted values | Column name must match the project's target feature. This is the forecast for the given timestamp at the given forecast distance. For classification projects, this is the predicted probability of the positive class. |
| Series ID (multiseries only) | Column name must match the project's series ID. |
| Forecast distances | Column name must be "Forecast Distance". This is the distance, in forecast steps, between the forecast point and the forecast timestamp. |

Note

When the target is a transformed target (for example, if you applied the Log(target) transformation operation within DataRobot to transform the target column), the prediction column name must use the transformed name (Log(target)) rather than original target name (target).
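As an illustration of the requirements above, the sketch below builds a small baseline file with Python's standard library. It reuses the column names from the multiseries example (date, store, sales); the helper names, the daily time step, the ISO date format, and the sample values are all assumptions. In a real file, the date values must be formatted as they are in the original data.

```python
import csv
import io
from datetime import date, timedelta

# Column names from the multiseries example in the text (project-specific).
DATE_COL = "date"
SERIES_COL = "store"
TARGET_COL = "sales"
FD_COL = "Forecast Distance"  # this name is fixed by the requirements


def baseline_rows(forecast_point, series_id, predictions, step=timedelta(days=1)):
    """Turn one forecast run into baseline rows (hypothetical helper).

    predictions[i] is the forecast made at forecast_point for distance i + 1.
    The date column holds the forecast timestamp, not the forecast point.
    """
    rows = []
    for i, value in enumerate(predictions):
        fd = i + 1
        rows.append({
            DATE_COL: (forecast_point + fd * step).isoformat(),
            SERIES_COL: series_id,
            TARGET_COL: value,
            FD_COL: fd,
        })
    return rows


def missing_columns(header, multiseries=True):
    """List required column names that are absent from the file header."""
    required = [DATE_COL, TARGET_COL, FD_COL]
    if multiseries:
        required.append(SERIES_COL)
    return [name for name in required if name not in header]


# A forecast generated as of July 31 for the next two days (made-up values).
rows = baseline_rows(date(2023, 7, 31), "store_1", [105.0, 98.5])

buffer = io.StringIO()
fieldnames = [DATE_COL, SERIES_COL, TARGET_COL, FD_COL]
writer = csv.DictWriter(buffer, fieldnames=fieldnames)
writer.writeheader()
writer.writerows(rows)
baseline_csv = buffer.getvalue()
print(baseline_csv)
```

The first row carries the Aug 1 timestamp with a forecast distance of 1, matching the example in the table: a forecast generated as of July 31 that is compared against the Aug 1 actuals.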

Upload the baseline file

Once the baseline file is created and meets the file criteria, upload the file into DataRobot. Open Advanced options > Time Series and scroll to the Compare models to baseline data? setting. Select a method for uploading the baseline, either a local file or a file from the AI Catalog. Note that if you use the local file option, the file is also added to the AI Catalog.

Once the file is successfully uploaded, return to the top of the page to start model building.

Metrics used for comparison

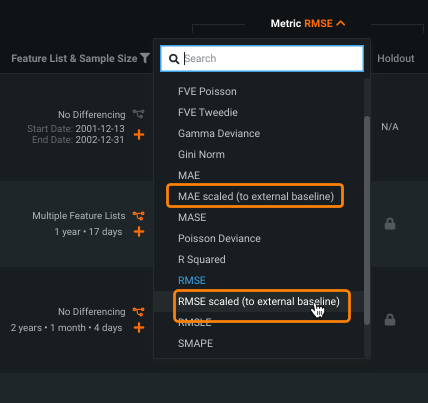

When building completes, expand the model metrics dropdown and select a metric to use for comparison.

| Metric | Project type |
|---|---|
| MAE scaled (to external baseline) | Regression |
| RMSE scaled (to external baseline) | Regression, binary classification |
| LogLoss scaled (to external baseline) | Binary classification |

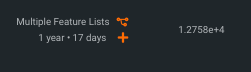

Look at the value in the Backtest 1 column. A value less than 1 indicates higher accuracy (lower error) for the DataRobot model. A value greater than 1 indicates the external model had higher accuracy.

Using standard RMSE:

Using scaled RMSE:

Calculations to scale the metrics to the external baseline are as follows:

<metric> of the DataRobot model / <metric> of the external model

All values scale to the external baseline (uploaded predictions), and the metrics work with weighted projects. Because each value is calculated by dividing the two errors, these scaled metrics are not straight derivatives of their original metrics.
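To make the ratio concrete, here is a minimal sketch of the scaling calculation for unweighted MAE and RMSE. The function names and sample numbers are illustrative assumptions, not DataRobot's implementation, and weighting is left out.

```python
import math


def mae(actuals, predictions):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actuals, predictions)) / len(actuals)


def rmse(actuals, predictions):
    """Root mean squared error."""
    squared = sum((a - p) ** 2 for a, p in zip(actuals, predictions))
    return math.sqrt(squared / len(actuals))


def scaled_to_baseline(metric, actuals, model_preds, baseline_preds):
    """<metric> of the DataRobot model / <metric> of the external model."""
    return metric(actuals, model_preds) / metric(actuals, baseline_preds)


# Made-up values for one backtest.
actuals = [100.0, 110.0, 120.0]
model_preds = [102.0, 108.0, 121.0]     # predictions from the DataRobot model
baseline_preds = [95.0, 115.0, 126.0]   # uploaded external predictions

mae_scaled = scaled_to_baseline(mae, actuals, model_preds, baseline_preds)
rmse_scaled = scaled_to_baseline(rmse, actuals, model_preds, baseline_preds)
print(round(mae_scaled, 4), round(rmse_scaled, 4))
```

With these numbers, the model's MAE is 5/3 and the baseline's MAE is 16/3, so the scaled MAE is 0.3125. Both scaled values fall below 1, which indicates lower error for the DataRobot model than for the external baseline.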