Set up fairness monitoring

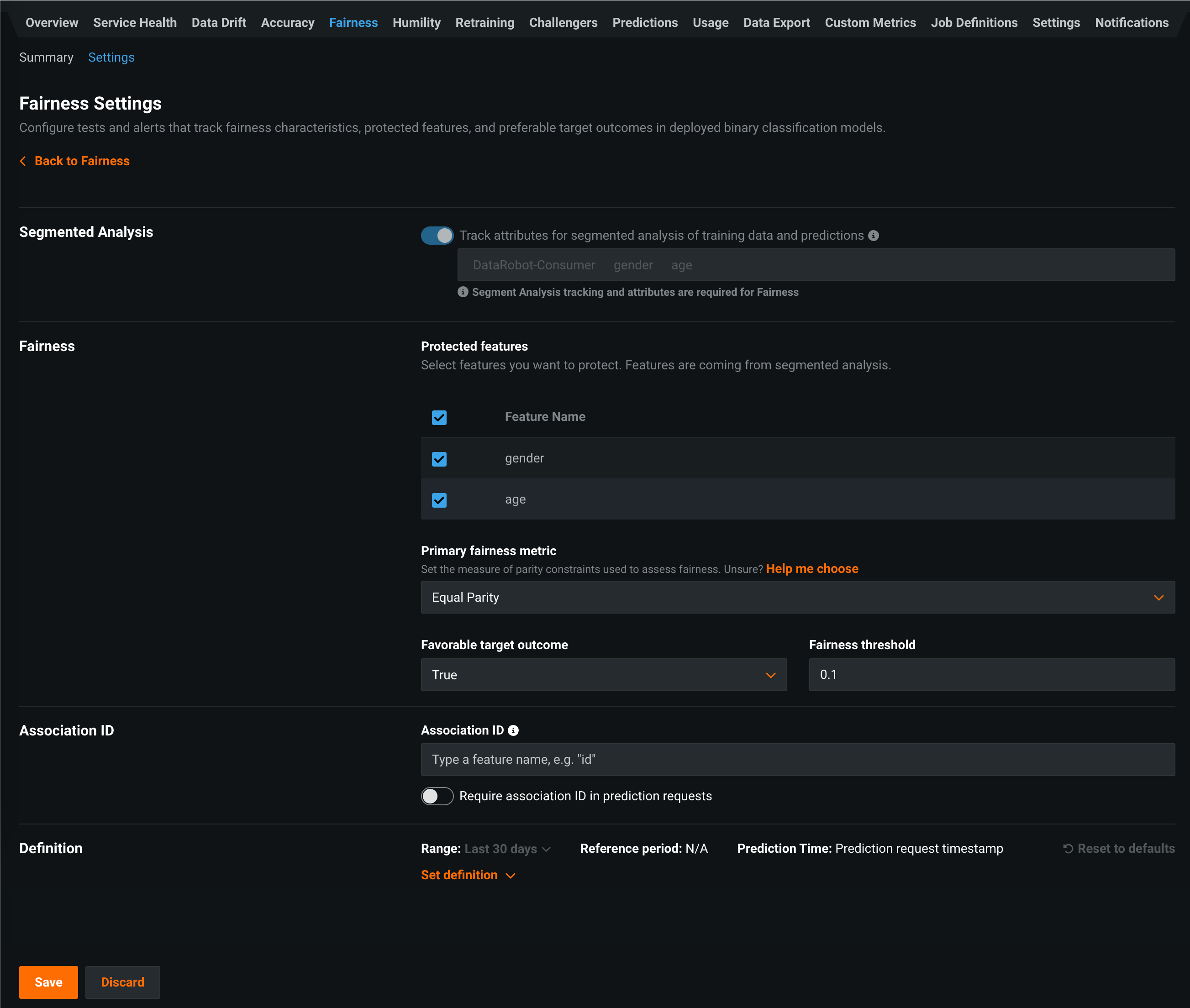

On a deployment's Fairness > Settings tab, you can define Bias and Fairness settings for your deployment to identify any biases in a binary classification model's predictive behavior. If fairness settings are defined prior to deploying a model, the fields are automatically populated. For additional information, see the section on defining fairness tests.

Note

To configure fairness settings, you must enable target monitoring for the deployment. Target monitoring allows DataRobot to monitor how the values and distributions of the target change over time by storing prediction statistics. If target monitoring is turned off, a message displays on the Fairness tab to remind you to enable target monitoring.

Configuring fairness criteria and notifications can help you identify the root cause of bias in production models. On the Fairness tab for individual models, DataRobot calculates per-class bias and fairness over time for each protected feature, allowing you to understand why a deployed model failed the predefined acceptable bias criteria. For information on fairness metrics and terminology, see the Bias and Fairness reference page.

Fairness is not measured for a production model until bias and fairness testing is configured on the deployment's Fairness > Settings tab.

On a deployment's Fairness Settings page, you can configure the following settings:

| Field | Description |
|---|---|
| Segmented Analysis | |
| Track attributes for segmented analysis of training data and predictions | Enables DataRobot to monitor deployment predictions by segments (for example, by categorical features). |
| Fairness | |
| Protected features | Selects the dataset columns that contain the protected features against which the fairness of model predictions is measured. These features must be categorical. |
| Primary fairness metric | Selects the statistical measure of parity constraints used to assess fairness. |
| Favorable target outcome | Selects the target value perceived as the favorable outcome for the protected class. |
| Fairness threshold | Selects the fairness threshold used to determine whether the model performs within appropriate fairness bounds for each protected class. |
| Association ID | |
| Association ID | Defines the name of the column that contains the association ID in the prediction dataset for your model. The association ID identifies each prediction row so that outcome data (also called "actuals") can later be matched with those predictions. An association ID is required to calculate two of the Primary fairness metric options: True Favorable Rate & True Unfavorable Rate Parity and Favorable Predictive & Unfavorable Predictive Value Parity. |
| Require association ID in prediction requests | Requires your prediction dataset to include a column whose name matches the name entered in the Association ID field. When enabled, prediction requests that are missing this column return an error. This option cannot be enabled together with Enable automatic association ID generation for prediction rows. |
| Enable automatic association ID generation for prediction rows | With an association ID column name defined, allows DataRobot to populate the association ID values automatically. This option cannot be enabled together with Require association ID in prediction requests. |
| Definition | |
| Set definition | Configures the number of protected classes below the fairness threshold required to trigger monitoring notifications. |
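Conceptually, the association ID works like a join key between predictions and the actuals uploaded later. The Python sketch below illustrates that pairing under simplifying assumptions; it is not DataRobot's implementation, and the `Prediction` record, its fields, the `match_actuals` helper, and the sample IDs are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    # Hypothetical record for one scored row.
    association_id: str   # value from the association ID column
    protected_value: str  # value of one protected feature for this row
    predicted: str        # predicted class label


def match_actuals(predictions, actuals):
    """Pair predictions with actuals by association ID.

    `actuals` maps association ID -> actual label. Predictions whose ID has
    no actual yet are returned separately instead of being discarded.
    """
    matched, unmatched = [], []
    for p in predictions:
        if p.association_id in actuals:
            matched.append((p, actuals[p.association_id]))
        else:
            unmatched.append(p)
    return matched, unmatched


# Example: actuals arrive for two of the three predictions.
predictions = [
    Prediction("row-1", "F", "approved"),
    Prediction("row-2", "M", "denied"),
    Prediction("row-3", "F", "denied"),
]
actuals = {"row-1": "approved", "row-3": "approved"}
matched, unmatched = match_actuals(predictions, actuals)
print(len(matched), [p.association_id for p in unmatched])  # 2 ['row-2']
```

Predictions without a matching actual are set aside rather than dropped, since their outcomes may still arrive later.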

Select a fairness metric

DataRobot supports the following fairness metrics in MLOps:

- True Favorable and True Unfavorable Rate Parity (True Positive Rate Parity and True Negative Rate Parity)
- Favorable Predictive and Unfavorable Predictive Value Parity (Positive Predictive Value Parity and Negative Predictive Value Parity)
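Both metric families compare confusion-matrix rates across the classes of a protected feature, which is why they need actual outcomes (and therefore an association ID). The following Python sketch computes the four rates per class and a parity score for each class. It assumes, for illustration only, that a class's parity score is its rate divided by the highest rate among all classes and that a class is flagged when that score falls below the fairness threshold; the function names, the 0.8 example threshold, and the sample rows are hypothetical.

```python
from collections import defaultdict


def per_class_rates(rows, favorable):
    """Compute per-class rates from (protected_class, predicted, actual) tuples.

    tfr/tur: true favorable/unfavorable rate (TPR/TNR).
    fpv/upv: favorable/unfavorable predictive value (PPV/NPV).
    A rate whose denominator is zero is reported as None.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for cls, predicted, actual in rows:
        pred_fav, act_fav = predicted == favorable, actual == favorable
        if pred_fav and act_fav:
            counts[cls]["tp"] += 1
        elif pred_fav:
            counts[cls]["fp"] += 1
        elif act_fav:
            counts[cls]["fn"] += 1
        else:
            counts[cls]["tn"] += 1

    def ratio(num, den):
        return num / den if den else None

    return {
        cls: {
            "tfr": ratio(c["tp"], c["tp"] + c["fn"]),
            "tur": ratio(c["tn"], c["tn"] + c["fp"]),
            "fpv": ratio(c["tp"], c["tp"] + c["fp"]),
            "upv": ratio(c["tn"], c["tn"] + c["fn"]),
        }
        for cls, c in counts.items()
    }


def parity_scores(rates, metric):
    """Assumed normalization: each class's rate divided by the best class's rate."""
    values = {cls: r[metric] for cls, r in rates.items() if r[metric] is not None}
    if not values:
        return {}
    best = max(values.values())
    # If every class has a rate of 0, treat them as being at parity.
    return {cls: (v / best if best > 0 else 1.0) for cls, v in values.items()}


rows = [
    ("A", "yes", "yes"), ("A", "yes", "yes"), ("A", "no", "yes"), ("A", "no", "no"),
    ("B", "yes", "yes"), ("B", "no", "yes"), ("B", "no", "yes"), ("B", "no", "no"),
]
rates = per_class_rates(rows, favorable="yes")
scores = parity_scores(rates, "tfr")  # True Favorable Rate parity
below = [cls for cls, s in scores.items() if s < 0.8]  # example threshold
print(scores, below)  # {'A': 1.0, 'B': 0.5} ['B']
```

Swapping `"tfr"` for `"tur"`, `"fpv"`, or `"upv"` gives the other half of each parity pair under the same assumed normalization.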

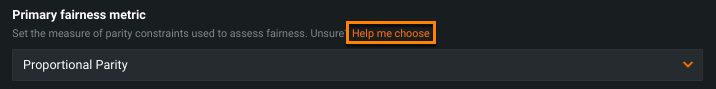

If you are unsure of the appropriate fairness metric for your deployment, click help me choose.

Note

To calculate True Favorable Rate & True Unfavorable Rate Parity and Favorable Predictive & Unfavorable Predictive Value Parity, the deployment must provide an association ID.

Define fairness monitoring notifications

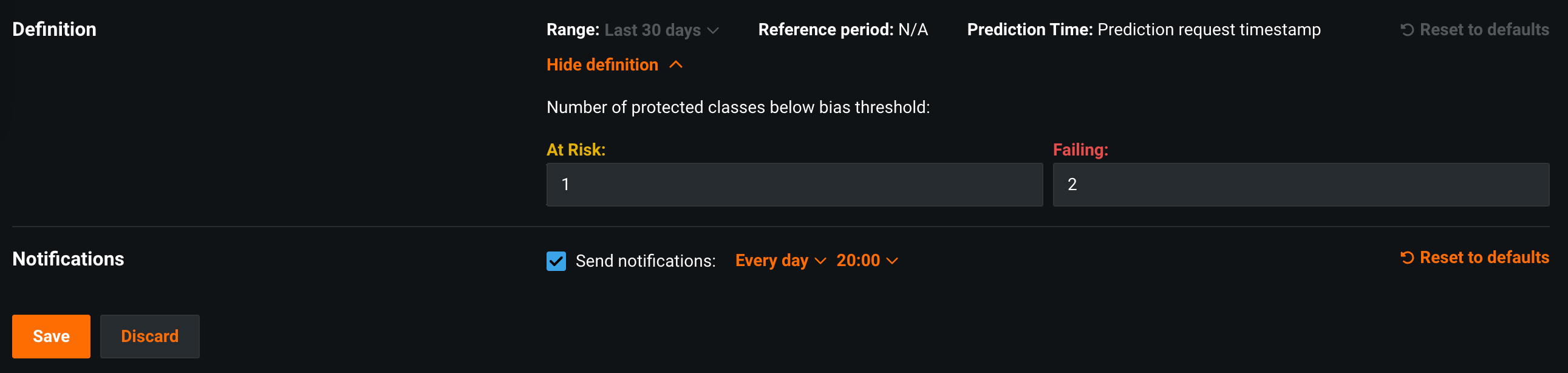

Configure notifications to alert you when a production model is at risk of not meeting, or fails to meet, predefined fairness criteria. You can visualize fairness status on the Fairness tab. To determine that status, fairness monitoring uses the primary fairness metric and two thresholds, "At Risk" and "Failing", each expressed as a number of protected features. If you do not specify these thresholds, DataRobot uses the default values.

Note

To access the settings in the Definition & Notifications section, configure and save the fairness settings. Only deployment Owners can modify fairness monitoring settings; however, Users can configure the conditions under which notifications are sent to them. Consumers cannot modify monitoring or notification settings.

To customize the rules used to calculate the fairness status for each deployment:

- On the Fairness Settings page, in the Definition section, click Set definition and configure the threshold settings for monitoring fairness:

  | Threshold | Description | Default value |
  |---|---|---|
  | At Risk | Defines the number of protected features below the bias threshold that, when exceeded, classifies the deployment as "At Risk" and triggers notifications. The threshold for At Risk should be lower than the threshold for Failing. | 1 |
  | Failing | Defines the number of protected features below the bias threshold that, when exceeded, classifies the deployment as "Failing" and triggers notifications. The threshold for Failing should be higher than the threshold for At Risk. | 2 |

  Note

  Threshold changes apply retroactively: the updated thresholds are used for every prediction period across the deployment's entire history and are reflected in the performance monitoring visualizations on the Fairness tab.

- After updating the fairness monitoring settings, click Save.
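The At Risk and Failing thresholds count protected features. The Python sketch below shows one way that rule could turn per-class parity scores into a deployment status. It assumes a feature counts as below threshold when any of its classes scores below the fairness threshold, and it reads "when exceeded" as a strict greater-than comparison; `fairness_status`, the "Passing" label, and the 0.8 fairness threshold are illustrative assumptions, not DataRobot's API.

```python
def fairness_status(parity_by_feature, fairness_threshold=0.8, at_risk=1, failing=2):
    """Classify a deployment from per-feature, per-class parity scores.

    parity_by_feature maps protected feature -> {class: parity score}.
    Assumptions: a feature counts as "below threshold" if any of its classes
    scores below fairness_threshold, and a status threshold is "exceeded"
    only when the count is strictly greater than it.
    """
    below = sum(
        1
        for scores in parity_by_feature.values()
        if any(s < fairness_threshold for s in scores.values())
    )
    if below > failing:
        return "Failing"
    if below > at_risk:
        return "At Risk"
    return "Passing"


features = {
    "gender": {"F": 0.7, "M": 1.0},
    "age_group": {"under_40": 1.0, "40_plus": 0.75},
    "region": {"north": 1.0, "south": 0.9},
}
print(fairness_status(features))  # At Risk
```

With the default values from the table, two features below the threshold exceed At Risk (1) but not Failing (2). If the thresholds are in fact inclusive, replace `>` with `>=` in both comparisons.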