Prediction Explanations overview

Prediction Explanations illustrate what drives predictions on a row-by-row basis—they provide a quantitative indicator of the effect variables have on the predictions, answering why a given model made a certain prediction. It helps to understand why a model made a particular prediction so that you can then validate whether the prediction makes sense. It's especially important in cases where a human operator needs to evaluate a model decision and also when a model builder needs to confirm that the model works as expected. For example, "why does the model give a 94.2% chance of readmittance?" (See more examples below.)

DataRobot offers two methodologies for computing Prediction Explanations: SHAP (based on Shapley Values) and XEMP (eXemplar-based Explanations of Model Predictions).

Enable SHAP in DataRobot Classic

In the DataRobot Classic UI, you must enable SHAP in Advanced options before starting the project. This requirement avoids confusion, because the same insight computed with the two methodologies can return different results.

DataRobot also provides Text Prediction Explanations for text features, which show, at the word level, how the text influences the model's predictions. Text Prediction Explanations support both the XEMP and SHAP methodologies.

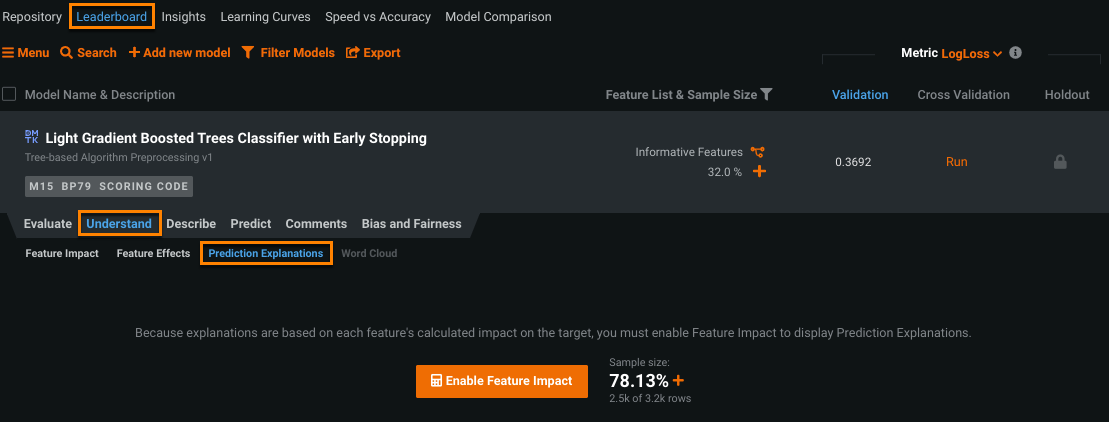

To access and enable Prediction Explanations, select a model on the Leaderboard and click Understand > Prediction Explanations.

See the feature considerations for working with Prediction Explanations.

SHAP or XEMP-based methodology?

Both the SHAP and XEMP methodologies estimate which features have a stronger or weaker impact on the target for a particular row. The two usually produce similar results, but the explanation values differ because the underlying methodologies differ. The table below summarizes some of the differences:

| Characteristic | SHAP | XEMP |
|---|---|---|
| Open-source? | Open source algorithm provides regulators an easy audit path. | Uses a well-supported DataRobot proprietary algorithm. |
| Model support | NextGen: All models. DataRobot Classic: Linear models, Keras deep learning models, and tree-based models, including tree ensembles. | XEMP works for all models. |
| Column/value limits | No column or value limits. | Up to 10 values in up to the top 50 columns. |
| Speed | 5-20 times faster than XEMP. | - |
| Measure | Multivariate, measuring the effect of varying multiple features at once. Additivity allocates the total effect across individual features that were varied. | Univariate, measuring the effect of varying a single feature at a time. |
| Best use case | Explaining exactly how you get from an average outcome to a specific prediction amount. | Explaining which individual features have the greatest impact on the outcome versus an average input value (i.e., which feature has a value that most changed the prediction versus an average data row). |
| Additional notes | SHAP is additive, making it easy to see how much top-N features contribute to a prediction. | - |

Note

While Prediction Explanations provide several quantitative indicators for why a prediction was made, the calculations do not fully explain how a prediction is computed. For that information, use the coefficients with preprocessing information from the Coefficients tab.

See the XEMP or SHAP pages for a methodology-based description of using and interpreting Prediction Explanations.

Examples

A common question when evaluating data is “why is a certain data point considered high-risk (or low-risk) for a certain event?”

A sample case for Prediction Explanations:

Sam is a business analyst at a large manufacturing firm. She does not have a lot of data science expertise, but has been using DataRobot with great success to predict the likelihood of product failures at her manufacturing plant. Her manager is now asking for recommendations for reducing the defect rate, based on these predictions. Sam would like DataRobot to produce Prediction Explanations for the expected product failures so that she can identify the key drivers of product failures based on a higher-level aggregation of explanations. Her business team can then use this report to address the causes of failure.
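The "higher-level aggregation of explanations" that Sam wants could be sketched as follows. This is a minimal illustration, not DataRobot's reporting feature: the feature names, the strength values, and the `top_drivers` helper are all hypothetical, and the per-row explanations are assumed to be available as (feature, signed strength) pairs.

```python
from collections import Counter

# Hypothetical per-row explanations for three predicted failures.
# Each row is a list of (feature name, signed strength) pairs; the names
# and numbers are invented for illustration only.
rows = [
    [("line_speed", 0.31), ("supplier", 0.12)],
    [("line_speed", 0.27), ("humidity", 0.09)],
    [("supplier", 0.22), ("line_speed", 0.05)],
]

def top_drivers(rows):
    """Count how often each feature is the strongest explanation in a row."""
    counts = Counter()
    for explanations in rows:
        # Strongest explanation = largest absolute strength in this row.
        feature, _strength = max(explanations, key=lambda pair: abs(pair[1]))
        counts[feature] += 1
    return counts.most_common()

drivers = top_drivers(rows)
print(drivers)
```

Counting the strongest explanation per row is only one possible aggregation; summing absolute strengths per feature would be an equally reasonable choice for a report like Sam's.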

Other common use cases and possible reasons include:

- What are indicators that a transaction could be at high risk for fraud? Possible explanations include transactions out of a cardholder's home area, transactions out of their “normal usage” time range, and transactions that are too large or small.
- What are some reasons for setting a higher auto insurance price? Possible explanations include that the applicant is single, male, under 30 years old, and has received a DUI or multiple tickets. A married homeowner may receive a lower rate.

SHAP estimates how much a feature is responsible for a given prediction being different from the average. Consider a credit risk example that builds a simple model with two features: number of credit cards and employment status. The model predicts that an unemployed applicant with 10 credit cards has a 50% probability of default, while the average default rate is 5%. SHAP estimates how each feature contributed to the 50% default risk prediction, determining that 25 percentage points are attributed to the number of cards and 20 percentage points to the customer's lack of a job. Together, these contributions account for the 45-point gap between the 5% average and the 50% prediction.
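The credit risk example can be reproduced with a small, exact Shapley value calculation. This is a sketch under stated assumptions, not DataRobot's SHAP implementation: the four model outputs below are invented so that the result matches the numbers in the example, and a single "average" baseline row stands in for the background data that a real SHAP computation would average over.

```python
import math
from itertools import permutations

# Hypothetical model outputs (probability of default). Each key is the set of
# features that take the applicant's value; all other features keep the value
# of an "average" baseline row. The numbers are invented for illustration.
outputs = {
    frozenset(): 0.05,                         # baseline: average default rate
    frozenset({"cards"}): 0.20,                # only card count is the applicant's
    frozenset({"employment"}): 0.15,           # only employment is the applicant's
    frozenset({"cards", "employment"}): 0.50,  # the applicant's prediction
}

def shapley_values(outputs, features):
    """Average each feature's marginal effect over all feature orderings."""
    values = {feature: 0.0 for feature in features}
    orderings = list(permutations(features))
    for order in orderings:
        present = frozenset()
        for feature in order:
            with_feature = present | {feature}
            values[feature] += outputs[with_feature] - outputs[present]
            present = with_feature
    return {feature: total / len(orderings) for feature, total in values.items()}

shap = shapley_values(outputs, ["cards", "employment"])
base = outputs[frozenset()]
prediction = outputs[frozenset({"cards", "employment"})]

# Additivity: the contributions sum to the gap between prediction and average.
assert math.isclose(base + sum(shap.values()), prediction)

# One-at-a-time effects, by contrast, need not sum to that gap.
one_at_a_time = {f: outputs[frozenset({f})] - base for f in shap}
print(shap, one_at_a_time)
```

With these assumed outputs, the Shapley values come out to roughly 0.25 for the card count and 0.20 for employment status. The one-at-a-time effects (0.15 and 0.10) do not add up to the 0.45 gap, because the two features interact.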

Feature considerations

Consider the following when using Prediction Explanations. See also time-series specific considerations.

- Prediction Explanations are only generated on datasets that are 1GB or less.
- Predictions requested with Prediction Explanations will typically take longer to generate than predictions without explanations, although actual speed is model-dependent. Computation runtime is affected by the number of features, blenders (only supported for XEMP), and text variables. You can try to increase speed by reducing the number of features used, or by avoiding blenders and text variables.
- Image Explanations (Prediction Explanations for images) are not available from a deployment (for example, Batch predictions or the Predictions API). See also SHAP considerations below.
- In the DataRobot Classic UI, once you set an explanation method (XEMP or SHAP), insights are only available for that method.
- Anomaly detection models trained from DataRobot blueprints always compute Feature Impact using SHAP. For anomaly detection models from user blueprints, Feature Impact is computed using the permutation-based approach.
- The deployment Data Exploration tab doesn't store the Prediction Explanations for export, even when Prediction Explanations are requested while making predictions through that deployment.

Prediction Explanation and Feature Impact methods

Prediction Explanations and Feature Impact are calculated in multiple ways depending on the project and target type:

Note

SHAP Impact is an aggregation of SHAP explanations. For more information, see SHAP-based Feature Impact.

AutoML projects:

| Target type | Feature Impact method | Prediction Explanations method |
|---|---|---|
| Regression | Permutation Impact or SHAP Impact | XEMP or SHAP (opt-in when using the Classic UI) |
| Binary | Permutation Impact or SHAP Impact | XEMP or SHAP (opt-in when using the Classic UI) |
| Multiclass | Permutation Impact | XEMP |
| Unsupervised Anomaly Detection | SHAP Impact | XEMP |
| Unsupervised Clustering | Permutation Impact | XEMP |

Time series projects:

| Target type | Feature Impact method | Prediction Explanations method |
|---|---|---|
| Regression | Permutation Impact | XEMP |
| Binary | Permutation Impact | XEMP |
| Multiclass | N/A* | N/A* |
| Unsupervised Anomaly Detection | SHAP Impact | XEMP** |
| Unsupervised Clustering | N/A* | N/A* |

* This project type isn't available.

** For the time series unsupervised anomaly detection visualizations, the Anomaly Assessment chart uses SHAP to calculate explanations for anomalous points.
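The note that SHAP Impact is an aggregation of SHAP explanations can be illustrated with a short sketch. It assumes the aggregation is the mean absolute SHAP value per feature, which is a common convention but is not confirmed by this page as DataRobot's exact formula. The `shap_impact` helper and the sample values are hypothetical.

```python
def shap_impact(shap_rows):
    """Aggregate per-row SHAP values into one impact score per feature.

    Assumption: impact = mean absolute SHAP value across rows.
    """
    totals = {}
    for row in shap_rows:
        for feature, value in row.items():
            totals[feature] = totals.get(feature, 0.0) + abs(value)
    row_count = len(shap_rows)
    return {feature: total / row_count for feature, total in totals.items()}

# Hypothetical SHAP values for two rows of a two-feature model.
shap_rows = [
    {"cards": 0.25, "employment": 0.20},
    {"cards": -0.15, "employment": 0.10},
]

impact = shap_impact(shap_rows)
ranking = sorted(impact, key=impact.get, reverse=True)
print(impact, ranking)
```

Taking absolute values before averaging keeps positive and negative contributions from canceling out, so a feature that pushes predictions strongly in both directions still ranks as impactful.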

XEMP

Consider the following when using XEMP (which is based on permutation-based Feature Importance scores):

- Prediction Explanations are compatible with models trained before the feature was introduced.
- There must be at least 100 rows in the validation set for Prediction Explanations to compute.
- Prediction Explanations work for all variable types (numeric, categorical, text, date, time, image) except geospatial.
- DataRobot uses a maximum of 50 features for Prediction Explanations computation, limiting the computational complexity and improving the response time. Features are selected in order of their Feature Impact ranking.
- Prediction Explanations are not returned for features that have extremely low, or no, importance. This is to avoid suggesting that a feature has an impact when it has very little or none.
- The maximum number of Prediction Explanations via the UI is 10 and via the prediction API is 50.
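The feature-selection rules in the list above (at most 50 features, taken in Feature Impact order, with negligible features skipped) could look roughly like this. This is a sketch of the described behavior, not DataRobot's code: `candidate_features` is a hypothetical helper, and `min_impact` is an assumed cutoff, because the text does not state the actual threshold for "extremely low" importance.

```python
MAX_FEATURES = 50  # maximum number of features considered, per the text

def candidate_features(feature_impact, min_impact=0.0):
    """Return up to MAX_FEATURES feature names, highest Feature Impact first.

    `min_impact` is a hypothetical cutoff standing in for "extremely low,
    or no, importance"; features at or below it are skipped.
    """
    ranked = sorted(feature_impact.items(), key=lambda item: item[1], reverse=True)
    kept = [name for name, impact in ranked if impact > min_impact]
    return kept[:MAX_FEATURES]

# Hypothetical impact scores: 60 features, the last five with zero impact.
impact = {f"feature_{i}": max(0, 55 - i) for i in range(60)}
selected = candidate_features(impact)
print(len(selected), selected[:3])
```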

SHAP

- Multiclass classification Prediction Explanations are not supported for SHAP (but are available for XEMP).
- SHAP-based Prediction Explanations for models trained into Validation and Holdout are in-sample, not stacked.
- For AutoML, SHAP is only supported by linear, tree-based, and Keras deep learning blueprints. Most of the non-blender AutoML blueprints that typically appear at the top of the Leaderboard are supported (see the compatibility matrix).
- SHAP is not supported for:
    - Time series projects in the Classic UI
    - Sliced insights in the Classic UI
    - Scoring Code Prediction Explanations
    - Blender models
- SHAP does not support image feature types. As a result, Image Explanations are not available.

- When a link function is used, SHAP is additive in the margin space (`sum(shap) = link(p) - link(p0)`). The recommendation is:
    - When you require additive qualities of SHAP, use blueprints that don’t use a link function (e.g., a tree-based model).
    - When log is used as a link function, you could also explain predictions using `exp(shap)`.

- Limits on the number of explanations available:
    - Backend/API: You can retrieve SHAP values for all features (using the `shapMatrices` API).
    - UI: Prediction Explanations preview is limited to five explanations; however, you can download up to 100 via CSV or use the API if you need access to more than 100 explanations. Feature Impact preview is limited to 25 features, but you can export up to 1000 to CSV.

- Unsupervised anomaly detection models:
    - Feature Impact is calculated using SHAP.
    - Prediction Explanations are calculated using XEMP.
    - As with supervised mode, SHAP is not calculated for blenders.
    - SHAP is not available for models built on feature lists with greater than 1000 features.
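The link-function consideration above, that SHAP values sum to `link(p) - link(p0)` rather than `p - p0`, can be checked numerically. The sketch below assumes a logit link for a binary model and a log link for a regression model; the SHAP values and baselines are hypothetical numbers chosen for illustration, not output from DataRobot.

```python
import math

def logit(p):
    """Logit link: maps a probability to log-odds (the margin space)."""
    return math.log(p / (1 - p))

def inverse_logit(margin):
    """Inverse of the logit link: maps log-odds back to a probability."""
    return 1 / (1 + math.exp(-margin))

# Logit link: hypothetical SHAP values expressed in log-odds.
p0 = 0.05  # average (base) prediction
shap = {"cards": 1.7, "employment": 1.2}
p = inverse_logit(logit(p0) + sum(shap.values()))

# Additive in the margin space ...
assert math.isclose(sum(shap.values()), logit(p) - logit(p0))
# ... but not in the probability space.
assert not math.isclose(sum(shap.values()), p - p0)

# Log link: exp(shap) turns each additive term into a multiplicative factor.
base_prediction = 100.0
log_shap = {"feature_a": math.log(1.5), "feature_b": math.log(0.8)}
prediction = math.exp(math.log(base_prediction) + sum(log_shap.values()))
factors = {name: math.exp(value) for name, value in log_shap.items()}
print(p, prediction, factors)
```

Under a log link, each `exp(shap)` value reads as "this feature multiplied the baseline prediction by that factor". Here the assumed factors 1.5 and 0.8 take the baseline of 100 to approximately 120.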