DataRobot Prime (deprecated)

Availability information

The ability to create new DataRobot Prime models has been removed from the application. This does not affect existing Prime models or deployments. To export Python code in the future, use the Python code export function in any RuleFit model.

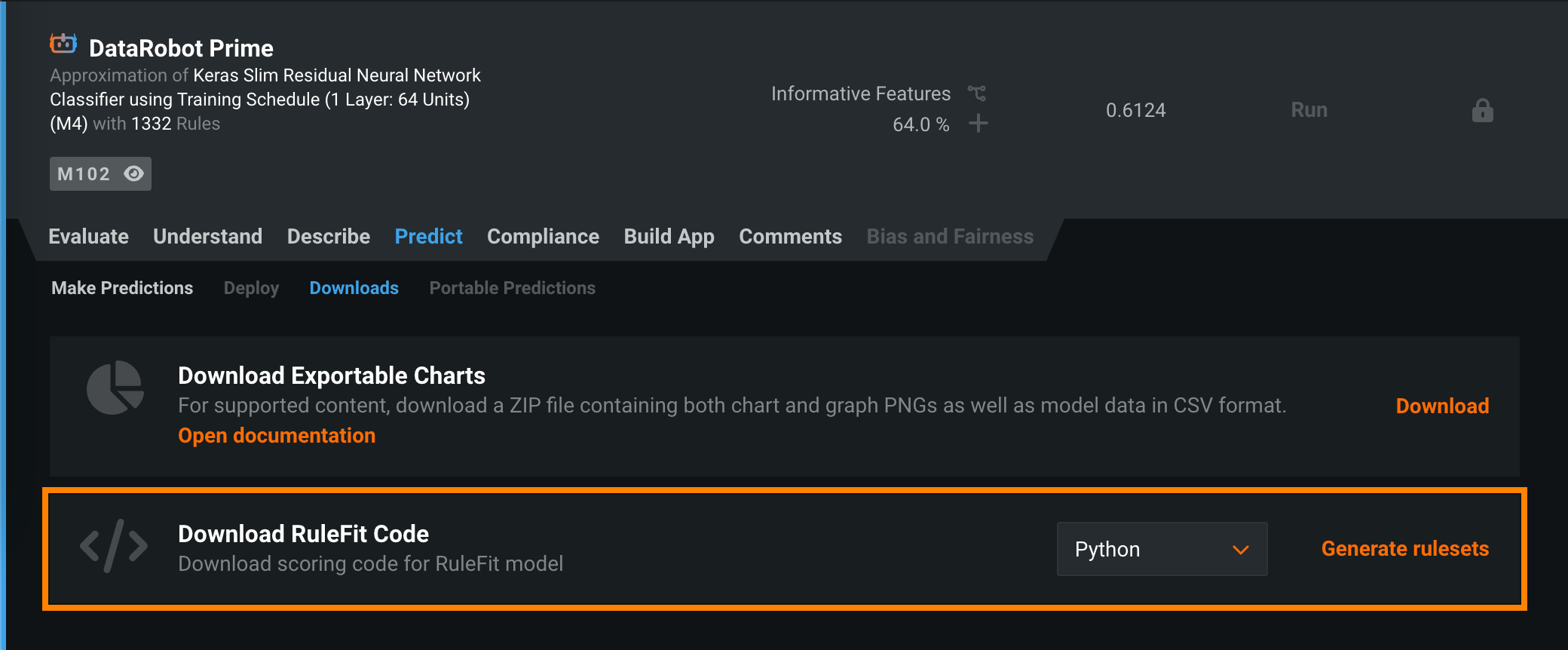

Although DataRobot Prime is deprecated, you can still view existing DataRobot Prime models on the Leaderboard and download their approximation code as a Python module or a Java class. To do so, locate and open the deprecated DataRobot Prime model on the Leaderboard and click Predict > Downloads. In the Download RuleFit Code group box, select Python or Java, and then click Download to download the Scoring Code for the DataRobot Prime model.

After you download the Python or Java DataRobot Prime approximation code, you can run it locally. For more information, review the examples below:

Running the downloaded code with Python requires:

- Python (Recommended: 3.7)

- NumPy (Recommended: 1.16)

- pandas < 1.0 (Recommended: 0.23)

To make predictions with the downloaded model, run the exported Python script file using the following command:

python <prediction_file> --encoding=<encoding> <data_file> <output_file>

| Placeholder | Description |
|---|---|
| prediction_file | Specifies the downloaded Python code version of the RuleFit model. |
| encoding | (Optional) Specifies the encoding of the dataset you are going to make predictions with. RuleFit defaults to UTF-8 if not otherwise specified. See the "Codecs" column of the Python-supported standards chart for possible alternative entries. |
| data_file | Specifies a .csv file (your dataset). The columns must correspond to the feature set used to generate the model. |
| output_file | Specifies the filename where DataRobot writes the results. |
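Because the columns in the data file must correspond to the feature set used to generate the model, it can help to check the CSV header before scoring. The following is a minimal sketch and not part of the exported code: the helper name `missing_columns` and the `expected_features` argument are hypothetical, and you would supply the feature names yourself.

```python
import csv


def missing_columns(data_file, expected_features, encoding="utf-8"):
    """Return the expected feature names that are absent from the CSV header.

    Hypothetical helper: `expected_features` is a list you provide; it is
    not read from the exported RuleFit script.
    """
    with open(data_file, newline="", encoding=encoding) as handle:
        # Read only the first row, which is assumed to be the header.
        header = next(csv.reader(handle), [])
    present = {name.strip() for name in header}
    return [name for name in expected_features if name not in present]
```

An empty list means every expected feature appears in the header. The check covers column names only and does not validate the values in each column.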

In the following example, rulefit.py is a Python script containing a RuleFit model trained on the following dataset:

race,gender,age,readmitted

Caucasian,Female,[50-60),0

Caucasian,Male,[50-60),0

Caucasian,Female,[80-90),1

The following command produces predictions for the data in data.csv and outputs the results to results.csv.

python rulefit.py data.csv results.csv
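If you launch the script from other Python code, the command line can be assembled as an argument list. This is a sketch under stated assumptions: the function name `scoring_command` is hypothetical, and the `python` argument is assumed to name an interpreter that meets the requirements listed above.

```python
def scoring_command(prediction_file, data_file, output_file,
                    encoding=None, python="python"):
    """Build the argument list for running the exported RuleFit script.

    Hypothetical helper that mirrors the documented form:
    python <prediction_file> --encoding=<encoding> <data_file> <output_file>
    """
    command = [python, prediction_file]
    if encoding is not None:
        # The encoding option is optional; when it is omitted, the script
        # falls back to its UTF-8 default.
        command.append("--encoding=" + encoding)
    command.extend([data_file, output_file])
    return command
```

The returned list can be passed to `subprocess.run(command, check=True)`. For example, `scoring_command("rulefit.py", "data.csv", "results.csv", encoding="latin_1")` adds `--encoding=latin_1` before the data file.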

The file data.csv is a .csv file that looks like this:

race,gender,age

Hispanic,Male,[40-50)

Caucasian,Male,[80-90)

AfricanAmerican,Male,[60-70)

The results in results.csv look like this:

Index,Prediction

0,0.438665626555

1,0.611403738867

2,0.269324648106
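To review predictions alongside the rows that produced them, you can join the two files on the Index column. The sketch below assumes that Index is the zero-based position of each row in the data file, as the example output suggests, and that the results file is UTF-8 encoded. The function name `attach_predictions` is hypothetical.

```python
import csv


def attach_predictions(data_file, results_file, encoding="utf-8"):
    """Return the input rows as dicts with a 'Prediction' value added.

    Assumption: the Index column in the results file is the zero-based
    row position in the data file.
    """
    with open(data_file, newline="", encoding=encoding) as handle:
        rows = list(csv.DictReader(handle))
    # Assumption: the results file is written as UTF-8.
    with open(results_file, newline="", encoding="utf-8") as handle:
        for result in csv.DictReader(handle):
            rows[int(result["Index"])]["Prediction"] = float(result["Prediction"])
    return rows
```

Each returned dictionary contains the original feature values plus the prediction as a float.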

To run the downloaded code with Java:

- You must use the JDK for Java version 1.7.x or later.

- Do not rename any of the classes in the file.

- You must include the Apache Commons CSV library, version 1.1 or later, on the classpath to run the code.

- You must rename the exported Java code file to Prediction.java.

Compile the Java file using the following command:

javac -cp ./:./commons-csv-1.1.jar Prediction.java -d ./ -encoding 'UTF-8'

Execute the compiled Java class using the following command:

java -cp ./:./commons-csv-1.1.jar Prediction <data_file> <output_file>

| Placeholder | Description |
|---|---|
| data_file | Specifies a .csv file (your dataset); columns must correspond to the feature set used to generate the RuleFit model. |
| output_file | Specifies the filename where DataRobot writes the results. |

The following example generates predictions for data.csv and writes them to results.csv:

javac -cp ./:./commons-csv-1.1.jar Prediction.java -d ./ -encoding 'UTF-8'

java -cp ./:./commons-csv-1.1.jar Prediction data.csv results.csv

See the Python example for details on the format of input and output data.
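The commands above separate classpath entries with `:`, which is the separator on Linux and macOS; on Windows, Java expects `;`. If you drive the compile and run steps from Python, the following sketch builds both commands with the separator for the current platform. The function name `java_commands` and the default JAR filename are assumptions for illustration.

```python
import os


def java_commands(data_file, output_file,
                  csv_jar="commons-csv-1.1.jar", pathsep=os.pathsep):
    """Build the javac and java argument lists for Prediction.java.

    Hypothetical helper; `csv_jar` is assumed to sit in the current
    directory next to Prediction.java.
    """
    # os.pathsep is ':' on Linux/macOS and ';' on Windows.
    classpath = pathsep.join(["./", "./" + csv_jar])
    compile_cmd = ["javac", "-cp", classpath, "Prediction.java",
                   "-d", "./", "-encoding", "UTF-8"]
    run_cmd = ["java", "-cp", classpath, "Prediction", data_file, output_file]
    return compile_cmd, run_cmd
```

Pass each list to `subprocess.run(..., check=True)` in order, so that a failed compilation stops the run step.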