Additional

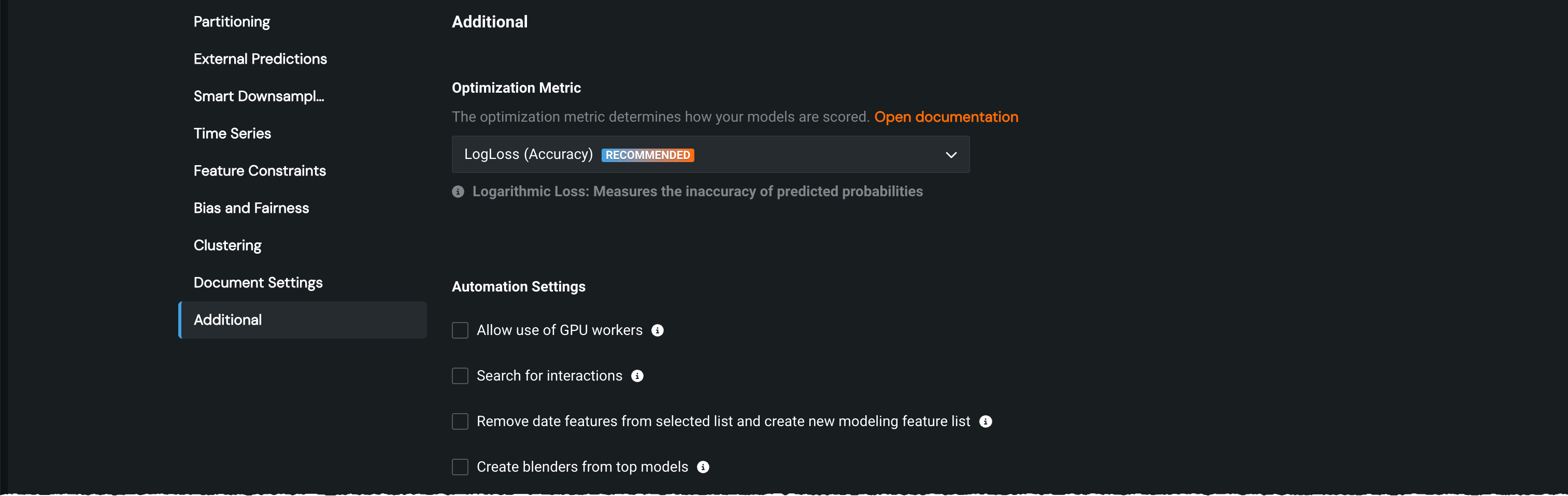

From the Additional tab you can fine-tune a variety of aspects of model building, with the available options depending on the project type. Options that are not applicable to the project are grayed out or hidden, depending on why the option is unavailable.

The following table describes each of the additional parameter settings available in Advanced options.

| Parameter | Description |
|---|---|
| Optimization metric | |
| Optimization metric | Provides access to the complete list of available optimization metrics. Once you specify a target, DataRobot chooses from a comprehensive set of metrics and recommends one suited to the given data and target. The chosen metric is displayed below the Start button on the Data page. Use this dropdown to change the metric before beginning model building. |
| Automation settings | |
| Remove date features from selected list and create new modeling feature list | Duplicates the selected feature list, removes raw date features, and uses the new list to run Autopilot. Excluding raw date features from non-time-aware projects can prevent issues such as overfitting. |
| Search for interactions | Automatically uncovers new features when it finds interactions between features from your primary dataset. Because the search runs as part of EDA2, enabling it produces not only new features but also new default and custom feature lists, identified by a plus sign (+). This is useful for finding additional insights in existing data. |
| Include only blueprints with Scoring Code support | Toggle on to train only models that support Scoring Code export. This is useful when scoring data outside of DataRobot or at very low latency. |
| Create blenders from top models | Toggle whether DataRobot computes blenders from the best-performing models at the end of Autopilot. Note that enabling this feature may increase modeling and scoring time. |
| Include only models with SHAP value support | Includes only SHAP-based blueprints (often necessary for regulatory compliance). You must select this option before project start to have access to SHAP-based insights (this also applies to API and Python client access). If enabled, the selected Autopilot mode runs only SHAP blueprints, and the Repository offers only SHAP-supported blueprints. When enabled, Feature Impact and Prediction Explanations produce only SHAP-based insights. This option is available only if "Create blenders from top models" is not selected. |
| Recommend and prepare a model for deployment | Toggle on to activate the blueprint recommendation flow (feature list reduction and retraining at a higher sample size), which determines whether DataRobot trains a model labeled "recommended for deployment" and "prepared for deployment" at the end of Autopilot. |
| Include blenders when recommending a model | Toggle on to allow blender models to be considered as part of the model recommendation process. |
| Use accuracy-optimized metablueprint | Runs XGBoost models with a lower learning rate and more trees, as well as an XGBoost forest blueprint. In certain cases these models can slightly increase accuracy, but they can take 10x to 100x longer to run. If set, increase the Upper-bound running time setting to approximately 30 hours (default three hours) so that DataRobot can promote the longer-running models to the next stage of Autopilot. Because a better model is not guaranteed, this option is intended only for users who already hand-tune their XGBoost models and are aware of the runtime requirements of large XGBoost models. There is no guarantee that this setting will work for datasets larger than 1.5GB. If you get out-of-memory errors, try running the models without accuracy-optimized set, or with a smaller sample size. |
| Run Autopilot on feature list with target leakage removed | Automatically creates a feature list (Informative Features - Leakage Removed) that removes the high-risk problematic columns that may lead to target leakage. |
| Number of models to run cross-validation/backtesting on | Enter the number of models for which DataRobot will compute cross-validation during Autopilot. This parameter also applies to backtesting for Time Series and OTV projects. The setting takes effect only if the number is greater than the Autopilot default. |
| Upper-bound running time | Sets an execution time limit, in hours. If a model takes longer to run than this limit, Autopilot excludes the model from larger training sample runs. Models that exceed this time limit are identified on the Leaderboard; you can still run them at higher sample sizes manually, if needed. |
| Response cap (regression projects only) | Limits the maximum value of the response (target) to a percentile of the original values. For example, if you enter 0.9, any values above the 90th percentile are replaced with the value of the 90th percentile of the non-zero values during training. This capping is used only for training, not for predicting or scoring. Enter a value between 0.5 and 1.0 (50-100%). |
| Random seed | Sets the starting value used to initiate random number generation. Fixing the seed means that subsequent runs of the same project produce the same results. If not set, DataRobot uses a default seed (typically 1234). To ensure exact reproducibility, however, it is best to set your own seed value. |
| Positive class assignment (binary classification only) | Sets the class to use when a prediction scores higher than the classification threshold of 0.5. |
| Weighting Settings (see additional details below) | |
| Weight | Sets a single feature to use as a differential weight, indicating the relative importance of each row. |
| Exposure | Sets a feature to be treated with strict proportionality in target predictions, adding a measure of exposure when modeling insurance rates. Regression problems only. |
| Count of Events | Used by Frequency-Severity models, sets a feature for which DataRobot collects (and treats as a special column) information on the frequency of non-zero events. Zero-inflated regression problems only. |
| Offset | Sets feature(s) that should be treated as a fixed component for modeling (coefficient of 1 in generalized linear models or gradient boosting machine models). Regression and binary classification problems only. |
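To make the Response cap arithmetic concrete, here is a minimal sketch. It assumes linear interpolation between sorted values (the same convention as NumPy's default `percentile`); DataRobot's exact percentile definition is not stated on this page, so `percentile` and `cap_response` are hypothetical helpers, not DataRobot functions.

```python
import math


def percentile(values, q):
    """Percentile with linear interpolation (NumPy's default convention); q is in [0, 1]."""
    s = sorted(values)
    if not s:
        raise ValueError("no values to take a percentile of")
    pos = (len(s) - 1) * q
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def cap_response(y, q):
    """Hypothetical helper: cap training targets at the q-th percentile of the non-zero values."""
    if not 0.5 <= q <= 1.0:
        raise ValueError("response cap must be between 0.5 and 1.0")
    cap = percentile([v for v in y if v != 0], q)
    # Values above the cap are replaced by the cap; all other values are unchanged.
    return [min(v, cap) for v in y]


# The cap is applied to the training target only; scoring data is never modified.
train_y = [0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
capped = cap_response(train_y, 0.9)
print(round(capped[-1], 6))  # 9.1
```

Note that the zeros are excluded when computing the cap but stay in the returned target, which matches the "percentile of the non-zero values" wording above.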

Change the optimization metric

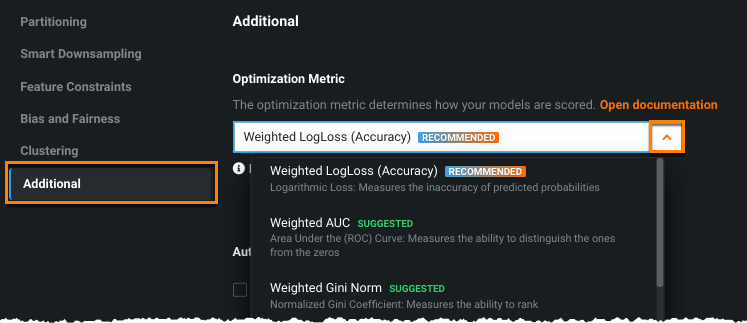

The optimization metric defines how DataRobot scores your models. After you choose a target feature, DataRobot selects an optimization metric based on the modeling task. This metric is reported under the Start button on the project start page. To build models using a different metric, overriding the recommended one, use the Optimization Metric dropdown.

The metric DataRobot chooses for scoring models is usually the best selection. Changing the metric is an advanced functionality and recommended only for those who understand the metrics and the algorithms behind them.

Some notes:

- If the selected target has only two unique values, DataRobot assumes that it is a classification task and recommends a classification metric. Examples of recommended classification metrics include LogLoss (if it is necessary to calculate a probability for each class), and Gini and AUC (when it is necessary to sort records in order of ranking).
- Otherwise, DataRobot assumes that the selected target represents a regression task. The most popular metrics for regression are RMSE (Root Mean Square Error) and MAE (Mean Absolute Error).
- If you are using smart downsampling to downsize your dataset or you selected a weight column as your target, only weighted metrics are available. Conversely, you cannot choose a weighted metric if neither of those scenarios applies. A weighted metric takes into account skew in the data.
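The task-detection rule and the weighted-metric rule in the notes above can be sketched as two small functions. This is a deliberate simplification for illustration: DataRobot's actual recommendation also weighs the data and the target distribution, the returned metric names are just the examples from the notes, and both function names are hypothetical.

```python
def recommend_metric(target_values):
    """Simplified rule: exactly two unique target values means classification,
    anything else is treated as regression."""
    if len(set(target_values)) == 2:
        return "classification", "LogLoss"
    return "regression", "RMSE"


def only_weighted_metrics(smart_downsampling, weight_column_selected):
    """True: only weighted metrics are offered. False: weighted metrics are unavailable."""
    return smart_downsampling or weight_column_selected


print(recommend_metric(["yes", "no", "no", "yes"]))  # ('classification', 'LogLoss')
print(recommend_metric([3.2, 4.1, 5.0, 4.1]))        # ('regression', 'RMSE')
```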

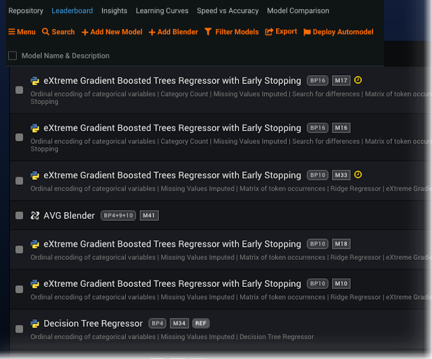

Although you build a project optimized for a specific metric, DataRobot computes many applicable metrics for each model. After the build completes, you can redisplay the Leaderboard sorted by a different metric. This does not change any values within the models; it only reorders the model listing based on performance on the alternate metric.

See the reference material for a complete list of available metrics.

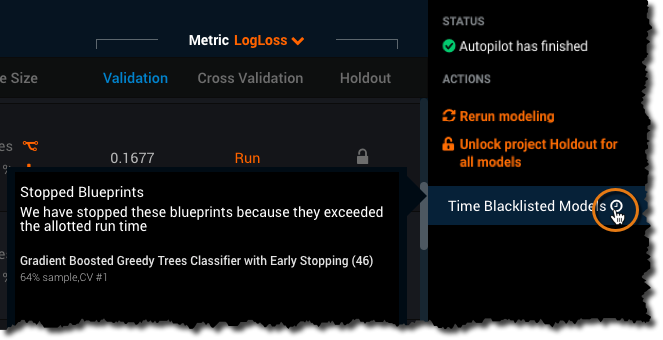
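Re-sorting the Leaderboard is a pure reordering over scores that were already computed at build time. The sketch below illustrates that idea; the `LeaderboardEntry` class, the `sort_leaderboard` function, and the scores are all made up for illustration, and only the "lower is better" or "higher is better" direction of each metric matters.

```python
from dataclasses import dataclass, field

# Whether a lower score is better for each metric (errors: lower; ranking metrics: higher).
LOWER_IS_BETTER = {"LogLoss": True, "RMSE": True, "MAE": True, "AUC": False, "Gini": False}


@dataclass
class LeaderboardEntry:
    model: str
    scores: dict = field(default_factory=dict)  # metric name -> validation score, computed at build time


def sort_leaderboard(entries, metric):
    """Return a new ordering by `metric`; the stored scores are never modified."""
    return sorted(entries, key=lambda e: e.scores[metric], reverse=not LOWER_IS_BETTER[metric])


# Made-up scores for two models in a binary classification project optimized for LogLoss.
board = [
    LeaderboardEntry("GBM", {"LogLoss": 0.31, "AUC": 0.90}),
    LeaderboardEntry("ElasticNet", {"LogLoss": 0.35, "AUC": 0.91}),
]
print([e.model for e in sort_leaderboard(board, "LogLoss")])  # ['GBM', 'ElasticNet']
print([e.model for e in sort_leaderboard(board, "AUC")])      # ['ElasticNet', 'GBM']
```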

Time limit exceptions

When you set the Upper-bound running time, DataRobot uses that time limit to control how long a single model can run. The default limit is three hours. Any model that exceeds the limit continues to run until it completes, but DataRobot does not build that model at the subsequent sample size. For example, suppose you run full Autopilot and a model at the 16% sample size exceeds the limit. The model continues to run until it completes, and DataRobot does not begin the 32% sample size build for any model until all the 16% models are complete; the over-limit model itself, however, is not built at 32%. By excepting very long-running models, you can complete Autopilot and then manually run any that were halted.

These excepted models are maintained in a model list and are indicated with an icon on the Leaderboard.

You can view the contents of the list (that is, the models excluded from subsequent sample size builds) by expanding the Time limit exceeded models link in the Worker Queue.
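The time-limit behavior described in this section amounts to splitting the completed runs at one sample size into models that move on and models that are excepted. The sketch below models only that decision; `StageRun`, `split_by_time_limit`, and the runtimes are hypothetical, and treating a runtime exactly equal to the limit as "within the limit" is an assumption.

```python
from dataclasses import dataclass

DEFAULT_LIMIT_HOURS = 3.0  # default Upper-bound running time, in hours


@dataclass
class StageRun:
    model: str
    runtime_hours: float  # how long the model took at the current sample size


def split_by_time_limit(runs, limit_hours=DEFAULT_LIMIT_HOURS):
    """Split completed runs at one sample size into promoted models and time-limit exceptions.

    Every run here has already finished: exceeding the limit never stops a model
    mid-run, it only keeps the model out of the next (larger) sample size.
    """
    promoted = [r.model for r in runs if r.runtime_hours <= limit_hours]
    exceeded = [r.model for r in runs if r.runtime_hours > limit_hours]
    return promoted, exceeded


# Hypothetical 16% stage of a full Autopilot run. The 32% stage starts only after
# all of these runs (including the slow one) have completed.
stage_16 = [StageRun("XGBoost", 4.5), StageRun("ElasticNet", 0.2), StageRun("GBM", 1.0)]
promoted, exceeded = split_by_time_limit(stage_16)
print(promoted)  # ['ElasticNet', 'GBM']
print(exceeded)  # ['XGBoost']
```

Raising `limit_hours` (for example, to 30 hours for accuracy-optimized runs) would promote every model in this example.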

Additional weighting details

The information below describes valid feature values and project types for the weighting options. With the Weight, Exposure and Offset parameters, you can add constraints to your modeling process. You do this by selecting which features (variables) should be treated differently; when set, the Data page indicates that the parameter is applied to a feature.

The following describes the weighting options. See below for more detail and usage criteria.

- Weight: Sets a single feature to use as a differential weight, indicating the relative importance of each row. It is used when building or scoring a model (for computing metrics on the Leaderboard) but not for making predictions on new data. All values for the selected feature must be greater than 0. DataRobot runs validation and ensures that the selected feature contains only supported values.
- Exposure: In regression problems, sets a feature to be treated with strict proportionality in target predictions, adding a measure of exposure when modeling insurance rates. DataRobot handles a feature selected for Exposure as a special column, adding it to raw predictions when building or scoring a model; the selected column must be present in any dataset later uploaded for predictions.
- Count of Events: Improves modeling of a zero-inflated target by adding information on the frequency of non-zero events. To use Count of Events, select the feature (variable) to treat as the source for the count.
- Offset: In regression and binary classification problems, sets feature(s) that should be treated as a fixed component for modeling (coefficient of 1 in generalized linear models or gradient boosting machine models). Offsets are often used to incorporate pricing constraints or to boost existing models. DataRobot handles a feature selected for Offset as a special column, adding it to raw predictions when building or scoring a model; the selected column(s) must be present in any dataset later uploaded for predictions.
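As a sketch of how a Weight column changes a Leaderboard metric, the function below computes a standard weighted RMSE, in which each row's squared error counts in proportion to its weight. This is the textbook formula, assumed here for illustration; it is not necessarily DataRobot's internal implementation, and `weighted_rmse` is a hypothetical name.

```python
import math


def weighted_rmse(actual, predicted, weights):
    """RMSE in which each row's squared error counts in proportion to its weight."""
    if not (len(actual) == len(predicted) == len(weights)):
        raise ValueError("inputs must have the same length")
    if any(w <= 0 for w in weights):
        raise ValueError("all weights must be greater than 0")
    total = sum(w * (a - p) ** 2 for a, p, w in zip(actual, predicted, weights))
    return math.sqrt(total / sum(weights))


actual, predicted = [1.0, 2.0, 3.0], [1.0, 2.0, 5.0]
print(weighted_rmse(actual, predicted, [1, 1, 1]))  # unweighted: sqrt(4/3)
print(weighted_rmse(actual, predicted, [1, 1, 2]))  # the badly predicted row counts double: sqrt(2)
```

The weights only enter the score; predictions themselves are unchanged, which is consistent with Weight not being used for predictions on new data.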

To use the weighting parameters, enter feature name(s) from the uploaded dataset. DataRobot uses the features as the offset and/or exposure in modeling and only builds those models that support the parameters—ElasticNet, GBM, LightGBM, GAM, ASVM, XGBoost, and Frequency x Severity models (Count of Events is only used in Frequency-Severity models). You can blend resulting models using the Average, GLM, and ENET blenders.

Weighting parameter requirements

For Weight, Exposure, and Count of Events, DataRobot filters out all features that do not match the criteria (listed in the tooltip) so that you cannot select them. For Offset, you can select multiple features. The following table describes the dataset requirements for each parameter:

| Criteria | Weight | Exposure | Count of Events | Offset |
|---|---|---|---|---|
| Project type | all | regression | regression | regression and binary classification |
| Target value | any | positive | zero-inflated | any |
| Missing values, all zeros, or no values (empty) allowed? | no | no | no | no |
| Positive values required? | yes | yes | yes | no |
| Zero values allowed? | no | no | yes | yes |
| Columns allowed | single | single | single | multiple |
| Duplicate columns allowed? | no | no | no | no |
| Data type* | numeric | numeric | numeric | numeric |
| Transformed features allowed? | yes | no | no | no |
| Cardinality > 2 allowed? | yes | no | yes | yes |
| Multiclass allowed? | yes | no | no | no |
| Time series projects? | yes | no | no | no |
| Unsupervised projects? | no | no | yes | no |
| ETL downsampled? | yes | no | no | no |
| Target zero-inflated? | no | yes | yes | yes |

* Selected columns must be "pure" numeric (not date, time, etc.). Note also that columns selected for Offset, Exposure, or Count of Events cannot be specified as other special columns (user partition, weights, etc.).
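The value-level rows of the requirements table (missing or empty values, all zeros, zero values, positive values, numeric type) can be sketched as a simple column check. This is a hypothetical helper covering only those rows, not project type, cardinality, or the other criteria. Reading "Positive values required?" as "no negative values" (so that Count of Events can still contain zeros) is an assumption.

```python
import math

# Value-level rules from the table above; "allow_negative" encodes the assumed
# meaning of "Positive values required?".
RULES = {
    "weight": {"allow_zero": False, "allow_negative": False},
    "exposure": {"allow_zero": False, "allow_negative": False},
    "count_of_events": {"allow_zero": True, "allow_negative": False},
    "offset": {"allow_zero": True, "allow_negative": True},
}


def _is_missing(v):
    return v is None or (isinstance(v, float) and math.isnan(v))


def check_column(parameter, values):
    """Return the problems that would make `values` ineligible for `parameter`."""
    rule = RULES[parameter]
    if not values:
        return ["column is empty"]
    problems = []
    if any(_is_missing(v) for v in values):
        problems.append("missing values")
    numbers = [v for v in values if not _is_missing(v)]
    if any(isinstance(v, bool) or not isinstance(v, (int, float)) for v in numbers):
        problems.append("non-numeric values")
        return problems
    if numbers and all(v == 0 for v in numbers):
        problems.append("all values are zero")
    if not rule["allow_zero"] and any(v == 0 for v in numbers):
        problems.append("zero values")
    if not rule["allow_negative"] and any(v < 0 for v in numbers):
        problems.append("negative values")
    return problems


print(check_column("weight", [1.0, 0.0]))       # ['zero values']
print(check_column("count_of_events", [0, 3]))  # []
```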

Weighting effect on insights

Projects built using the Offset, Exposure, and/or Count of Events parameters produce the same DataRobot insights as projects that do not. However, DataRobot excludes Offset, Exposure, and Count of Events columns from the predictive set. That is, the selected columns are not part of the Coefficients, Prediction Explanations, or Feature Impact visualizations; they are treated as special columns throughout the project. While the Exposure, Offset, and Count of Events columns do not appear in these displays as features, their values have been used in training.

What is a link function?

A link function, used by Exposure and Offset, maps a non-linear relationship to a linear one, allowing you to use a linear model (linear regression) for data that would otherwise not support that model type. For a categorical response variable, for example, it transforms the class probabilities to a continuous, unbounded scale.
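The three link functions that appear on this page (identity, log, and logit) and their inverses can be written in a few lines. This is the standard mathematical definition of each link, shown to illustrate how a bounded quantity is mapped to an unbounded linear scale and back; the function names are illustrative.

```python
import math


# Each link maps the mean of the response onto an unbounded linear scale;
# its inverse maps a linear predictor back to the response scale.
def identity(mu):
    return mu


def log_link(mu):
    """(0, inf) -> (-inf, inf), e.g. counts or claim amounts."""
    return math.log(mu)


def inv_log_link(eta):
    return math.exp(eta)


def logit(p):
    """(0, 1) -> (-inf, inf), e.g. the probability of the positive class."""
    return math.log(p / (1 - p))


def inv_logit(eta):
    return 1 / (1 + math.exp(-eta))


print(logit(0.5))              # 0.0
print(round(logit(0.99), 3))   # 4.595
print(round(logit(0.01), 3))   # -4.595
```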

Set Exposure

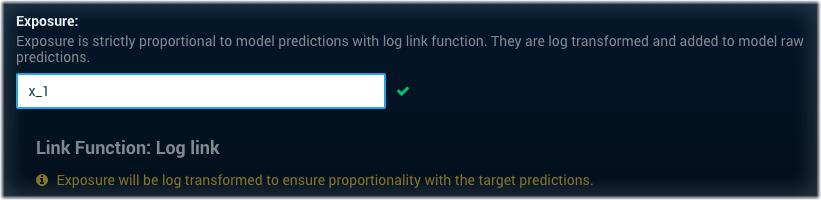

The Exposure parameter accepts only a single feature. Entering a second feature name overwrites your previous selection. To set Exposure, start typing a name in the entry box. DataRobot string-matches your entry and, when it is complete, validates the entry.

Only optimization metrics with the log link function (Poisson, Gamma, or Tweedie deviance) can make use of exposure values in modeling. For these metrics, DataRobot log-transforms the values of the feature you specify as the exposure, so you do not need to transform them yourself. If you select a different metric, DataRobot returns an informative message. See below for more training and prediction application details.

Exposure explained

You cannot compare "present day value" when each row of your data has a different history length. Use the Exposure parameter when calculating risk by comparing observations that are not of equal duration. Exposure is a special weighting used to balance risk over time. For example, let's say you have determined that a policy files, on average, two claims per year. When comparing two policies—a half-year policy and a full-year policy—statistically speaking, the half-year policy will file one claim while the full-year policy will file two claims (on average). Exposure allows DataRobot to adjust predictions for the time difference.
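The policy example works out as follows under the log-link prediction formula given at the end of this section, `exp(model(X) + offset) * exposure`. In this sketch, `ln(2)` stands in for the model's raw output and `expected_claims` is a hypothetical helper. The last line shows why exposure is log-transformed: multiplying by the exposure is the same as adding `ln(exposure)` on the linear scale.

```python
import math

annual_claim_rate = 2.0  # average claims per policy-year, from the example above

# With a log link the model works on the log scale; ln(rate) stands in for model(X) here.
raw_prediction = math.log(annual_claim_rate)


def expected_claims(raw, exposure_years, offset=0.0):
    """Log-link prediction: exp(raw + offset) * exposure."""
    return math.exp(raw + offset) * exposure_years


half_year = expected_claims(raw_prediction, 0.5)
full_year = expected_claims(raw_prediction, 1.0)
print(round(half_year, 6), round(full_year, 6))  # 1.0 2.0

# Adding ln(0.5) on the linear scale gives the same half-year answer.
print(round(expected_claims(raw_prediction, 1.0, offset=math.log(0.5)), 6))  # 1.0
```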

Set Count of Events

Count of Events improves modeling of a zero-inflated target by adding information on the frequency of non-zero events. Frequency x Severity models handle it as a special column. The frequency stage uses the column to model the frequency of non-zero events. The severity stage normalizes the severity of non-zero events in the column and uses that value as the weight. This is especially needed to improve the interpretability of frequency and severity coefficients. The column is not used for making predictions on new data.

The parameter accepts only a single feature. Entering a second feature name overwrites your previous selection. DataRobot string-matches your entry and, when it is complete, validates the entry.
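The two stages can be illustrated with intercept-only stand-ins (plain averages) for the frequency and severity models. This sketch rests on an assumption about what "normalizes the severity" means: per-event severity is taken to be `total_loss / claim_count`, weighted by `claim_count`. `PolicyRow` and both estimator functions are hypothetical. With that weighting, frequency times severity recovers the mean loss per row, and the count column is not needed to score new rows.

```python
from dataclasses import dataclass


@dataclass
class PolicyRow:
    claim_count: int   # feature selected as Count of Events
    total_loss: float  # zero-inflated regression target


def frequency_estimate(rows):
    """Intercept-only stand-in for the frequency stage: mean number of events per row."""
    return sum(r.claim_count for r in rows) / len(rows)


def severity_estimate(rows):
    """Intercept-only stand-in for the severity stage (assumed form).

    Each row with events contributes its per-event severity (loss / count),
    weighted by its event count. Rows without events carry no severity information.
    """
    pairs = [(r.total_loss / r.claim_count, r.claim_count) for r in rows if r.claim_count > 0]
    total_weight = sum(w for _, w in pairs)
    return sum(s * w for s, w in pairs) / total_weight


rows = [PolicyRow(0, 0.0), PolicyRow(1, 500.0), PolicyRow(3, 1800.0)]
freq = frequency_estimate(rows)  # 4 events / 3 rows
sev = severity_estimate(rows)    # (500 * 1 + 600 * 3) / 4 = 575.0
print(round(freq * sev, 2))      # 766.67, the mean loss per row
```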

Set Offset

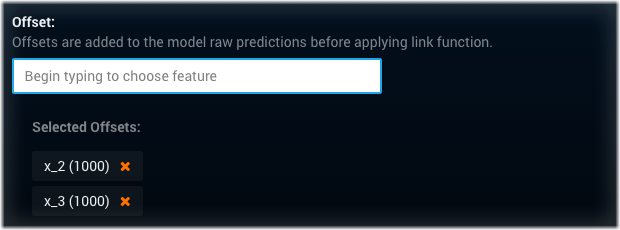

The Offset parameter adjusts the model intercept (linear model) or margin (tree-based model) for each sample; it accepts multiple features. DataRobot displays a message below the selection reporting which link function it will use.

- For regression problems, if the optimization metric is Poisson, Gamma, or Tweedie deviance, DataRobot uses the log link function, in which case offsets should be log-transformed in advance. Otherwise, DataRobot uses the identity link function and no transformation is needed for offsets.
- For binary classification problems, DataRobot uses the logit link function, in which case offsets should be logit-transformed in advance.
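The rules above for choosing a link and pre-transforming offsets can be sketched as two helpers. The metric name strings and both function names are assumptions for illustration, not DataRobot identifiers. The example values are a 0.8 rate multiplier (for instance, a capped 20% discount) and a 0.1 prior probability.

```python
import math

# Assumed labels for the log-link regression metrics named above.
LOG_LINK_METRICS = {"Poisson Deviance", "Gamma Deviance", "Tweedie Deviance"}


def link_for(problem_type, metric):
    """Pick the link function according to the rules above."""
    if problem_type == "binary":
        return "logit"
    if problem_type == "regression":
        return "log" if metric in LOG_LINK_METRICS else "identity"
    raise ValueError("Offset applies to regression and binary classification only")


def prepare_offset(value, link):
    """Transform a raw offset onto the link scale before uploading the dataset."""
    if link == "identity":
        return value
    if link == "log":
        return math.log(value)  # value must be > 0, e.g. a rate multiplier
    if link == "logit":
        return math.log(value / (1 - value))  # value must be a probability in (0, 1)
    raise ValueError(f"unknown link: {link}")


print(round(prepare_offset(0.8, link_for("regression", "Poisson Deviance")), 4))  # -0.2231
print(round(prepare_offset(0.1, link_for("binary", "LogLoss")), 4))               # -2.1972
```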

See below for more training and prediction application details.

Offset explained

Applying the Offset parameter is helpful when working with projects that rely on data that has a fixed component and a variable component. Offsets let you limit a model to predicting only the variable component. This is especially important when the fixed component varies. When you set the Offset parameter, DataRobot marks the feature as an offset; the model itself predicts without considering the fixed value, and the offset is added back in the final prediction.

Two examples:

- Residual modeling is a commonly used method when important risk factors (for example, underwriting cycle, year, age, or loss maturity) contribute strongly to the outcome and mask all other effects, potentially leading to a highly biased result. Setting offsets addresses this data bias issue. Using a feature set as an offset is equivalent to running the model against the residuals of the selected feature set. By modeling on residuals, you tell the model to focus on new information rather than on what you already know. With offsets, DataRobot focuses on the "other" factors during model building, while still incorporating the main risk factors in the final predictions.
- Constraints in insurance can arise from market competition or regulation. Some examples are limiting discounts on multicar or home-auto package policies to a 20% maximum, suppressing rates for youthful drivers, or suppressing rates for certain disadvantaged territories. In these cases, some of the variables can be set to a specific value and added to the model predictions as offsets.
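Residual modeling with an identity link can be sketched with ordinary least squares. The model is fit to `y - offset` (the RMSE row of the training table below), and the offset is added back at prediction time. The data are synthetic (a known fixed component plus `1 + 2*x`), and `fit_line` is a hypothetical stand-in for whatever model DataRobot actually trains.

```python
def fit_line(x, y):
    """Closed-form ordinary least squares for y ~ a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


# Synthetic data: a known fixed component (the offset) plus an effect of x to be learned.
x = [1.0, 2.0, 3.0, 4.0]
offset = [10.0, 20.0, 10.0, 20.0]
y = [o + 1.0 + 2.0 * xi for o, xi in zip(offset, x)]

# Training: fit only the variable component, y - offset ~ x.
a, b = fit_line(x, [yi - oi for yi, oi in zip(y, offset)])
print(a, b)  # 1.0 2.0

# Prediction: model(x) + offset.
pred = [a + b * xi + oi for xi, oi in zip(x, offset)]
```

Because the fixed component is supplied rather than learned, the fitted coefficients describe only the remaining effect of `x`.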

Offset and Exposure in modeling

During training, Offset and Exposure are incorporated into modeling using the following logic:

| Project metric | Modeling logic |
|---|---|
| RMSE | Y-offset ~ X |
| Poisson/Tweedie/Gamma/RMSLE | ln(Y/Exposure) - offset ~ X |

When making predictions, the following logic is applied:

| Project metric | Prediction calculation logic |
|---|---|
| RMSE | model(X) + offset |
| Poisson/Tweedie/Gamma/RMSLE | exp(model(X) + offset) * exposure |
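The two tables can be transcribed into a pair of functions that form a round trip: if the model reproduced the working target exactly, the prediction formula would return the original `y`. The metric labels are shorthand taken from the table rows, not DataRobot identifiers. Treating every other metric like RMSE (identity link) is an assumption. The literal `ln(Y/Exposure)` form is only defined for `y > 0`; in a typical deviance-based GLM fit, the target is not log-transformed directly, and the notation describes the scale on which the model works.

```python
import math

LOG_LINK = {"Poisson", "Tweedie", "Gamma", "RMSLE"}  # shorthand labels from the tables


def training_target(y, offset=0.0, exposure=1.0, metric="RMSE"):
    """Working target from the training table (exposure only applies to log-link metrics)."""
    if metric in LOG_LINK:
        return math.log(y / exposure) - offset  # literal form; requires y > 0
    return y - offset


def prediction(model_output, offset=0.0, exposure=1.0, metric="RMSE"):
    """Final prediction from the prediction table."""
    if metric in LOG_LINK:
        return math.exp(model_output + offset) * exposure
    return model_output + offset


# Round trip: feeding the working target back through the prediction formula recovers y.
t_log = training_target(3.0, offset=0.2, exposure=2.0, metric="Poisson")
t_id = training_target(3.0, offset=0.2)
print(round(prediction(t_log, offset=0.2, exposure=2.0, metric="Poisson"), 6))  # 3.0
print(round(prediction(t_id, offset=0.2), 6))                                  # 3.0
```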