Anomaly detection¶

DataRobot works with unlabeled data (or partially labeled data) to build anomaly detection models. Anomaly detection, also referred to as outlier and novelty detection, is an application of unsupervised learning. Where supervised learning models use a target feature and learn to predict it from the training data, unsupervised learning models have no target and instead detect patterns in the training data.

Anomaly detection can be used in cases where there are thousands of normal transactions with a low percentage of abnormalities, such as network and cyber security, insurance fraud, or credit card fraud. Although supervised methods are very successful at predicting these abnormal, minority cases, it can be expensive and very time-consuming to label the relevant data.

See the associated considerations for important additional information.

Anomaly detection workflow¶

The following provides an overview of the anomaly detection workflow, which works for both AutoML and time series projects.

-

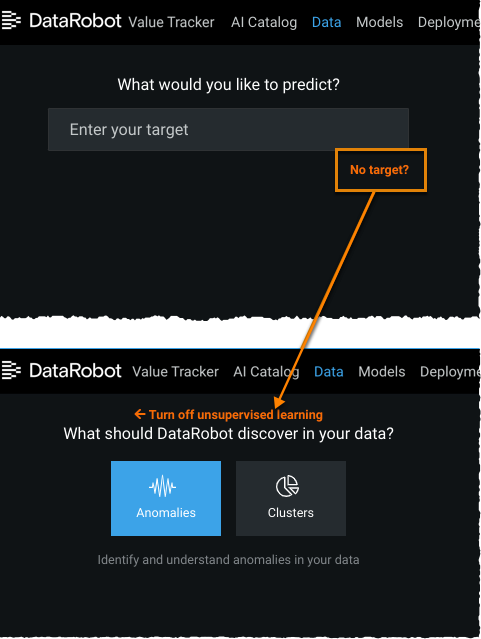

Upload data, click No target? and select Anomalies.

-

If using time-aware modeling:

- Click Set up time-aware modeling.

- Select the primary date/time feature.

- Select to set up Time Series Modeling.

- Set the rolling window (FDW) for anomaly detection.

-

Set the modeling mode and click Start. If you chose manual mode, navigate to the Repository and run an anomaly detection blueprint.

- From the Leaderboard, consider the scores and select a model.

-

For time series projects, expand a model and choose Anomaly Over Time or Anomaly Assessment. This visualization helps to understand anomalies over time and functions similarly to the non-anomaly Accuracy Over Time.

-

Compute Feature Impact.

Note

Regardless of project settings, Feature Impact for anomaly detection models trained from DataRobot blueprints is always computed using SHAP. For anomaly detection models from user blueprints, Feature Impact is computed using the permutation-based approach.

-

Compute Feature Effect.

- Compute Prediction Explanations to understand which features contribute to outlier identification.

- Consider changing the outlier threshold.

- Make predictions (or use partially labeled data).

Synthetic AUC metric¶

Anomaly detection is performed in unsupervised mode, which finds outliers in the data without requiring a target. Without a target, however, traditional data science metrics cannot be calculated to estimate model performance. To address this, DataRobot uses the Synthetic AUC metric to compare models and sort the Leaderboard.

Once unsupervised mode is enabled, Synthetic AUC appears as the default metric. The metric works by generating two synthetic datasets from the validation sample: one made more normal, one made more anomalous. Both samples are labeled accordingly, and the model then calculates anomaly score predictions for both. The usual ROC AUC value is estimated from these predictions, using the artificial labels as the ground truth. A Synthetic AUC of 0.9 does not mean that the model is correct 90% of the time. It simply means that a model with a Synthetic AUC of 0.9 is likely to outperform a model with a Synthetic AUC of, for example, 0.6.
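To make the ranking interpretation concrete, the following is a minimal sketch rather than DataRobot's implementation. The scorer `make_scorer` is a hypothetical stand-in model, and the two hand-written samples stand in for the "more normal" and "more anomalous" synthetic datasets; how DataRobot actually generates those samples is not described here. ROC AUC is computed as the probability that a randomly chosen synthetic anomaly receives a higher score than a randomly chosen synthetic normal row.

```python
from statistics import mean, pstdev

def roc_auc(normal_scores, anomalous_scores):
    """Probability that a random anomalous row outscores a random normal row (ties count half)."""
    wins = 0.0
    for a in anomalous_scores:
        for n in normal_scores:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(anomalous_scores) * len(normal_scores))

def make_scorer(training_values):
    """Hypothetical stand-in model: the score is the absolute z-score against the training data."""
    mu, sigma = mean(training_values), pstdev(training_values)
    return lambda x: abs(x - mu) / sigma

training = [9.8, 10.1, 10.0, 9.9, 10.2, 10.3, 9.7, 10.0]
score = make_scorer(training)

# Assumed synthetic samples: one nudged toward the center, one pushed outward.
more_normal = [10.0, 9.95, 10.05, 10.1]
more_anomalous = [11.5, 8.2, 10.9, 12.0]

synthetic_auc = roc_auc([score(x) for x in more_normal],
                        [score(x) for x in more_anomalous])
print(f"Synthetic AUC (sketch): {synthetic_auc:.2f}")
```

Because the value only measures how well the two artificial groups are ranked against each other, it is useful for comparing models but says nothing about accuracy on real anomalies.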

Outlier thresholds¶

After you have run anomaly models, for some blueprints you can set the expected_outlier_fraction parameter in the Advanced Tuning tab.

This parameter sets the fraction of the data that you expect to be outliers (the expected "contamination factor"). In AutoML, it defines the content of the Insights table display. In special cases such as the SVM model, this value sets the nu parameter, which affects the decision function threshold. By default, expected_outlier_fraction is 0.1 (10%).
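As an illustration of what a contamination fraction means in practice, the sketch below flags the highest-scoring rows so that roughly the expected fraction is marked as outliers. This is one common way to turn such a fraction into a cutoff and is an assumption here, not a description of how each DataRobot blueprint uses the parameter; the function name `flag_outliers` and the sample scores are hypothetical.

```python
def flag_outliers(scores, expected_outlier_fraction=0.1):
    """Flag the highest-scoring rows so that roughly the expected fraction is marked as outliers."""
    if not 0 < expected_outlier_fraction < 1:
        raise ValueError("expected_outlier_fraction must be between 0 and 1")
    n_outliers = int(round(expected_outlier_fraction * len(scores)))
    # Indices of rows ordered from most to least anomalous.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    flagged = set(ranked[:n_outliers])
    return [i in flagged for i in range(len(scores))]

scores = [0.05, 0.10, 0.92, 0.07, 0.12, 0.08, 0.03, 0.11, 0.09, 0.06]
print(flag_outliers(scores))        # default fraction of 0.1
print(flag_outliers(scores, 0.2))   # a larger fraction flags more rows
```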

Interpret anomaly scores¶

As with non-anomaly models, DataRobot reports a model score on the Leaderboard. The meaning of the score differs, however. A "good" score indicates that the abnormal rows in the dataset are related somehow to the class. A "poor" score indicates that you do have anomalies but they are not related to the class. In other words, the score does not indicate how well the model performs. Because the models are unsupervised, scores could be influenced by something like noisy data—what you may think is an anomaly may not be.

Anomaly scores range between 0 and 1, with a larger score indicating that a row is more likely to be anomalous. They are calibrated to be interpreted as the probability that the model identifies a given row as an outlier when compared to other rows in the training set. However, since there is no target in unsupervised mode, the calibration is not perfect. Treat the calibrated scores as estimated probabilities rather than quantitatively exact values.

Anomaly score insights¶

Note

This insight is not available for time series projects.

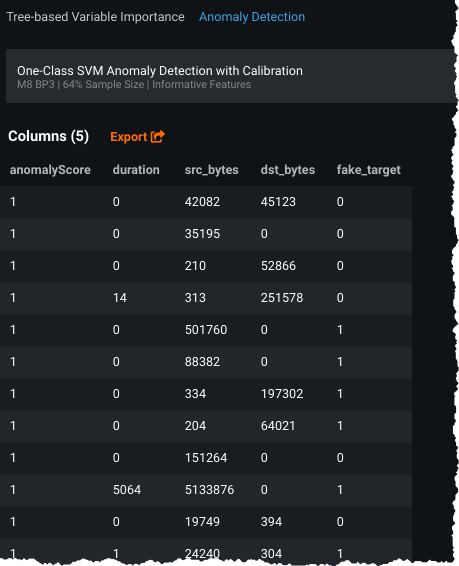

DataRobot anomaly detection models automatically provide an anomaly score for all rows, helping you to identify unusual patterns that do not conform to expected behavior. A display available from the Insights tab lists up to the top 100 rows with the highest anomaly scores, with a maximum of 1000 columns and 200 characters per column. There is an Export button on the table display that allows you to download a CSV of the complete listing of anomaly scores. Alternatively, you can compute predictions from the Make Predictions tab and download the results. The anomaly score is shown in the Prediction column of your results.

For a summary of anomaly results, click Anomaly Detection on the Insights tab:

DataRobot displays a table sorted on the anomaly scores (the score from making a prediction with the model). Each row of the table represents a row in the original dataset. From this table, you can identify rows in your original data by searching or you can download the model's predictions (which will have the row ID appended).

The number of rows presented is dependent on the expected_outlier_fraction parameter, with a maximum display of 100 rows (1000 columns and 200 characters per column). That is, the display includes the smaller of (expected_outlier_fraction * number of rows) and 100. You can download the entire anomaly table for all rows used to train the model by clicking the Export button.
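The display rule can be written as a one-line calculation. This is a sketch of the arithmetic only; the function name is hypothetical, and truncating a fractional row count is an assumption.

```python
def insight_rows_displayed(n_rows, expected_outlier_fraction=0.1, max_rows=100):
    """Number of rows shown: the smaller of (fraction * rows) and the display cap."""
    return min(int(expected_outlier_fraction * n_rows), max_rows)

print(insight_rows_displayed(500))      # 0.1 * 500 = 50 rows
print(insight_rows_displayed(25_000))   # 0.1 * 25,000 = 2,500, capped at 100 rows
```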

To view insights for another anomaly model, click the pulldown in the model name bar and select a new model.

Partially labeled data¶

The following provides a quick overview of using partially labeled data when working with anomaly detection (not clustering) projects:

1. Upload data, enable unsupervised mode by selecting No target?, select the Anomalies option, and click Start. Depending on the modeling mode selected, either let DataRobot select the most appropriate anomaly detection models or select them yourself from the Repository.

2. Select a best-fit model by considering Synthetic AUC model rankings.

3. Use DataRobot tools to assess the anomaly detection model and review examples of rows with low and high anomaly scores. Tools to leverage include Feature Impact, Prediction Explanations, and Anomaly Detection insights.

4. Either take a copy of the original dataset or use any other scoring dataset with the appropriate scoring columns, and create an "actual value" column in which you label each row as 1 (true anomaly) or 0 (no anomaly). This label is typically based on a human review of the rows, though it could be any label desired (such as a known fraud label). This column must have a unique name (that is, it cannot already be used as a column name in the original training dataset from step 1).

5. Upload the newly labeled data from step 4 to a selected model on the Leaderboard via the Predict > Make Predictions tab, and choose Run external test.

6. You can now access the Lift Chart and ROC Curve. From Menu > Leaderboard options > Show external test column, you can sort the Leaderboard by evaluation metric, including the AUC option.

7. Potentially reconsider the top model selected in step 2, and then deploy the final selection into production.
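Preparing the labeled file from step 4 can be done with any tool. The sketch below uses Python's standard `csv` module and is only an illustration: the column names `txn_id`, `amount`, and `actual_anomaly` and the review results are hypothetical, and keeping only the reviewed rows is an assumption about how the sample is built. It refuses to reuse an existing column name, matching the uniqueness requirement.

```python
import csv
import io

def add_label_column(csv_text, labels_by_row_id, id_column, label_column="actual_anomaly"):
    """Return CSV text containing only the reviewed rows, with a 0/1 label column appended."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if label_column in reader.fieldnames:
        raise ValueError(f"{label_column!r} already exists; choose a unique column name")
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames + [label_column],
                            lineterminator="\n")
    writer.writeheader()
    for row in reader:
        if row[id_column] in labels_by_row_id:      # keep only rows a reviewer labeled
            row[label_column] = labels_by_row_id[row[id_column]]
            writer.writerow(row)
    return out.getvalue()

original = "txn_id,amount\n1,20.5\n2,9800.0\n3,18.0\n"
reviewed = {"1": 0, "2": 1}   # hypothetical human review: transaction 2 is a true anomaly
labeled = add_label_column(original, reviewed, id_column="txn_id")
print(labeled)
```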

Anomaly detection blueprints¶

DataRobot Autopilot composes blueprints based on the unique characteristics of the data, including the dataset size. Some blueprints are not automatically added to the Repository because they tend to consume too many modeling resources or build more slowly. If there are specific anomaly detection blueprints you would like to run but do not see listed in the Repository, try composing them in the blueprint editor. If running the resulting blueprint still consumes too many resources, DataRobot generates model errors or out-of-memory errors and displays them in the model logs.

The anomaly detection algorithms that DataRobot implements are:

| Model | Description |
|---|---|
| Isolation Forest | Isolates observations by randomly selecting a feature and then randomly selecting a split value between the minimum and maximum values of that feature. Random partitioning produces shorter tree paths for anomalies. Good for high-dimensional data. |
| One Class SVM | Captures the shape of the dataset and is usually used for novelty detection. Good for high-dimensional data. |
| Local Outlier Factor (LOF) | Based on k-Nearest Neighbor, measures the local deviation of density for a given row with respect to its neighbors. Considered "local" in that the anomaly score depends on the object's isolation with respect to its surrounding neighborhood. |
| Double Median Absolute Deviation (MAD) | Uses two median values: one from the left tail (median of all points less than or equal to the median of all the data) and one from the right tail (median of all points greater than or equal to the median of all the data). It then checks if either tail median is greater than the threshold. Not practical for boolean or near-constant data; good for symmetric and asymmetric distributions. |
| Anomaly detection with Supervised Learning (XGB) | Uses the average score of the base models and labels a percentage as Anomaly and the rest as Normal. The percentage labeled as Anomaly is defined by the calibration_outlier_fraction parameter. Base models are Isolation Forest and Double MAD, resulting in a faster and less memory-intensive experience. If the dataset contains text, there will be two XGBoost models in the Repository. One of the models uses singular-value decomposition from the text; the other model uses the most frequent words from the text. |
| Mahalanobis Distance | The Mahalanobis distance is a measure of the distance between a point, P, and a distribution, D. It is a multi-dimensional representation of the idea of measuring how many standard deviations away the point is from the mean of the distribution. This model requires more than one column of data. |
| Time series: Bollinger Band | A feature value that deviates significantly with respect to its most recent value can be an indication of anomalous behavior. Bollinger Band uses robust z-score (also known as modified z-score) values as a basis for anomaly detection. A robust z-score is evaluated using the median of the samples and indicates how far a value is from the sample median (a z-score is similar, but references the sample mean instead). Bollinger Band assigns higher anomaly scores whenever the robust z-scores exceed the specified threshold. It uses the median value of the partition training data as the reference for computing the robust z-score. |
| Time series: Bollinger Band (rolling) | In contrast to the Bollinger Band described above, Bollinger Band (rolling) uses the median value of the feature derivation window samples only, instead of the whole partition training data. Bollinger Band (rolling) requires the "Robust z-score Only" feature list for modeling, which has all the robust z-score values derived in a rolling manner. |

The blueprint(s) DataRobot selects during Autopilot depend on the size of the dataset. For example, Isolation Forest is typically selected, but for very large datasets, Autopilot builds Double MAD models. Regardless of which model DataRobot builds, all anomaly blueprints are available to run from the Repository.
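To give a feel for one of the algorithms in the table, here is a minimal sketch of a common formulation of Double MAD, in which each value's distance from the median is scaled by the median absolute deviation of the tail it falls in. It is not DataRobot's blueprint code; the function name, the sample data, and the cutoff of 3.0 are assumptions for illustration.

```python
from statistics import median

def double_mad_scores(values):
    """Distance from the median, scaled by the MAD of the tail the value falls in."""
    m = median(values)
    left_mad = median(abs(v - m) for v in values if v <= m)
    right_mad = median(abs(v - m) for v in values if v >= m)
    if left_mad == 0 or right_mad == 0:
        # Boolean or near-constant data leaves a tail with no spread to scale by.
        raise ValueError("a tail has zero spread; Double MAD is not practical for this data")
    return [abs(v - m) / (left_mad if v < m else right_mad) for v in values]

# Right-skewed sample: the two tails have different spreads.
values = [10, 11, 12, 12, 13, 14, 15, 18, 22, 60]
scores = double_mad_scores(values)
flagged = [v for v, s in zip(values, scores) if s > 3.0]  # assumed cutoff
print(flagged)
```

Scaling each tail separately is what lets the method handle asymmetric distributions, and the zero-spread check reflects why it is not practical for boolean or near-constant data.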

Time series anomaly detection¶

DataRobot’s time series anomaly detection lets you detect anomalies in time-aware data. To enable the capability, do not specify a target variable at project start. Instead, click to enable unsupervised mode, which results in DataRobot running time series modeling in unsupervised mode.

After enabling unsupervised mode and selecting a primary date/time feature, you can adjust the feature derivation window (FDW) as you normally would in time series modeling. Notice, however, that there is no need to specify a forecast window. This is because DataRobot detects anomalies in real time, as the data becomes anomalous.

For example, imagine using anomaly detection for predictive maintenance. If you had a pump with sensors reporting different components’ pressure readings, your DataRobot time series model can alert you when one of those components has a pressure reading that is abnormally high. Then, you can investigate that component and fix anything that may be broken before an ultimate pump failure.

DataRobot offers a selection of anomaly detection blueprints and also allows you to create blended blueprints. For example, you may want to create a max blender to make a model that is extra sensitive to anomalies, at the cost of a higher false positive rate. (Note that blenders are not created automatically for anomaly detection projects.)
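The effect of a max blender can be sketched by comparing it with a mean blend. The model names, scores, and the 0.5 alert threshold below are hypothetical and chosen only to show the difference in sensitivity.

```python
def max_blend(*model_scores):
    """Row-wise maximum: a row is as anomalous as its most alarmed model says."""
    if len({len(s) for s in model_scores}) != 1:
        raise ValueError("all models must score the same rows")
    return [max(row) for row in zip(*model_scores)]

def mean_blend(*model_scores):
    """Row-wise average, shown for comparison."""
    return [sum(row) / len(row) for row in zip(*model_scores)]

# Hypothetical anomaly scores from two models for the same four rows.
isolation_forest = [0.10, 0.85, 0.20, 0.15]
double_mad = [0.05, 0.10, 0.90, 0.10]
threshold = 0.5  # assumed alert threshold

max_flagged = [i for i, s in enumerate(max_blend(isolation_forest, double_mad)) if s > threshold]
mean_flagged = [i for i, s in enumerate(mean_blend(isolation_forest, double_mad)) if s > threshold]
print(max_flagged, mean_flagged)
```

A row is flagged by the max blend if any single model scores it highly, which raises sensitivity and, with it, the false positive rate.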

For time series anomaly detection, DataRobot ranks Leaderboard models using a novel error metric, Synthetic AUC. This metric can help determine which blueprint may be best suited for your use case. If you want to verify AUC scores, you can upload partially labeled data and create a column to specify known anomalies. DataRobot can then use that partially labeled dataset to rank the Leaderboard by AUC score. Partially labeled data is data in which you have taken a sample of rows from the training dataset and flagged each as 1 (a real-life anomaly) or 0 (no anomaly).

Anomaly scores can be calibrated to be interpreted as probabilities. This happens in-blueprint using outlier detection on the raw anomaly scores as a proxy for an anomaly label. Raw scores that are outliers amongst the scores from the training set are assumed to be anomalies for purposes of calibration. This synthetic target is used to do Platt scaling on the raw anomaly scores. The calibrated score is interpreted as the probability that the raw score is an outlier, given the distribution of scores seen in the training set.
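The calibration idea can be sketched in a few lines. This is an illustration under stated assumptions, not the in-blueprint implementation: the proxy rule (a raw score more than `k` median absolute deviations above the median counts as an anomaly), the function names, the sample scores, and the plain gradient-descent fit are all choices made for this example.

```python
import math
from statistics import median

def proxy_labels(raw_scores, k=3.0):
    """Assumed proxy rule: label a raw score 1 if it is more than k MADs above the median."""
    m = median(raw_scores)
    mad = median(abs(s - m) for s in raw_scores)
    return [1 if s > m + k * mad else 0 for s in raw_scores]

def fit_platt(raw_scores, labels, lr=0.1, epochs=5000):
    """Platt scaling: fit p = sigmoid(a * score + b) by gradient descent on log loss."""
    a, b = 0.0, 0.0
    n = len(raw_scores)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for s, y in zip(raw_scores, labels):
            p = 1.0 / (1.0 + math.exp(-(a * s + b)))
            grad_a += (p - y) * s
            grad_b += p - y
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b

def calibrate(score, a, b):
    """Map a raw anomaly score to an estimated probability of being an outlier."""
    return 1.0 / (1.0 + math.exp(-(a * score + b)))

# Hypothetical raw anomaly scores from a training set.
raw = [0.8, 1.0, 1.1, 0.9, 1.2, 1.0, 0.95, 1.05, 4.0, 5.5]
labels = proxy_labels(raw)
a, b = fit_platt(raw, labels)
for s in (1.0, 4.0, 5.5):
    print(f"raw={s:.1f} -> calibrated={calibrate(s, a, b):.3f}")
```

Because the labels are themselves derived from the scores, the resulting probabilities are estimates relative to the training score distribution rather than true anomaly rates.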

Deployments with time series anomaly detection work in the same way as all other time series blueprint deployments.

Anomaly detection feature lists for time series¶

DataRobot generates different time series feature lists that are useful for detecting point anomalies and anomaly windows. To provide the best performance, DataRobot typically selects the "SHAP-based Reduced Features" or "Robust z-score Only" feature list when running Autopilot.

Both the "SHAP-based Reduced Features" and "Robust z-score Only" feature lists consider a selective set of features from all available derived features. Additional feature lists are available via the menu:

- "Actual Values and Rolling Statistics"

- "Actual Values Only"

- "Rolling Statistics Only"

- "Time Series Informative Features"

- "Time Series Extracted Features"

Note that if "Actual Values and Rolling Statistics" is a duplicate of "Time Series Informative Features", DataRobot only displays "Time Series Informative Features" in the menu. "Time Series Informative Features" does not include duplicate features, while "Time Series Extracted Features" contains all time series derived features.
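As background for the "Robust z-score Only" list, the following is a minimal sketch of a rolling robust z-score. It uses the widely cited modified z-score form, 0.6745 × (value − median) / MAD, computed against the preceding window of samples. Whether DataRobot includes the current point in the window, the constant it uses, and the 3.5 cutoff are not stated in this document and are assumptions here; the pressure readings are made up.

```python
from statistics import median

def rolling_robust_z(series, window):
    """Robust z-score of each point against the median and MAD of the preceding `window` points."""
    scores = []
    for i, x in enumerate(series):
        history = series[max(0, i - window):i]
        if len(history) < window:
            scores.append(None)  # not enough history to fill the window
            continue
        m = median(history)
        mad = median(abs(h - m) for h in history)
        # A zero MAD means the window is constant, so the score is undefined.
        scores.append(0.6745 * (x - m) / mad if mad else None)
    return scores

# Hypothetical sensor readings with one spike.
pressure = [50, 51, 49, 50, 52, 50, 51, 75, 50, 49]
z = rolling_robust_z(pressure, window=5)
flagged = [i for i, s in enumerate(z) if s is not None and abs(s) > 3.5]  # assumed cutoff
print(flagged)
```

Because the median and MAD are barely moved by a single extreme value, the windows that contain the spike still produce ordinary scores for the readings that follow it.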

Seasonality detection for feature lists¶

There are cases where some features are periodic and/or have trend, but there are no anomalies present. Anomaly detection algorithms applied to the raw features do not take the periodicity or trend into account. They may identify false positives where the features have large amplitudes or may identify false negatives where there is an anomalous value that is small in comparison to the overall amplitude of the normal signal.

Because anomalies are inherently irregular, DataRobot prevents periodic features from being part of most default feature lists used for automated modeling in anomaly detection projects. That is, after applying seasonality detection logic to a project's numeric features, DataRobot removes those features before creating the default lists. This logic is not applied to (features are not deleted from) the Time Series Extracted Features and Time Series Informative Features lists. Specifically:

- If the feature is seasonal, the logic assumes that the actual values and rolling z-scores are also seasonal and therefore drops them.

- If the rolling window is shorter than the period for that feature, the rolling stats are assumed to be seasonal and the features are dropped.

These features are still available in the project and can be used for modeling by adding them to a user-created feature list.
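The two rules above amount to a small decision procedure. The sketch below only restates them in code; the function name and the labels for the derived feature groups are hypothetical, and how DataRobot detects seasonality and the period is not covered here.

```python
def derived_features_to_drop(feature, is_seasonal, period, rolling_window):
    """Apply the two rules to one numeric feature; return the derived groups to exclude."""
    if not is_seasonal:
        return []
    # Rule 1: a seasonal feature's actual values and rolling z-scores are assumed seasonal.
    dropped = [f"{feature} (actual)", f"{feature} (rolling z-score)"]
    # Rule 2: a window shorter than the period cannot average out the seasonality.
    if rolling_window < period:
        dropped.append(f"{feature} (rolling statistics)")
    return dropped

# Hourly data with a daily cycle (period of 24 steps).
print(derived_features_to_drop("pressure", True, period=24, rolling_window=12))
print(derived_features_to_drop("pressure", True, period=24, rolling_window=48))
```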

Sample use cases¶

Following are some sample use cases for anomaly detection.

When the data is labeled:

Kerry has millions of rows of credit card transactions but only a small percentage has been labeled as fraud or not-fraud. Of those that are labeled, the labels are noisy and are known to contain false positives and false negatives. She would like to assess the relationship between “anomaly” and “fraud” and then fine-tune anomaly detection models so that she can trust the predictions on the large amounts of unlabeled data. Because her company has limited resources for investigating claims, successful anomaly detection will allow them to prioritize the cases they think are most likely fraudulent.

or

Kim works for a network security company which has huge amounts of data, much of which has been labeled. The problem is that when a malicious behavior is recognized and acted on (system entry blocked, for example), hackers change the behavior and create new forms of network intrusion. This makes it difficult to keep supervised models up-to-date so that they recognize the behavior change.

Kim uses anomaly detection models to predict whether new data is novel, that is, different from both “normal” access and previously known “intrusion” access. Because much less data is needed to recognize a change, anomaly detection models do not have to be retrained as frequently as supervised models. Kim will use the existing labeled data to fine-tune existing anomaly detection models.

When the data is not labeled:

Laura works for a manufacturing company that keeps machine-based data on machine status at specific points in time. With anomaly detection they hope to identify anomalous time points in their machine logs, thereby identifying necessary maintenance that could prevent a machine breakdown.

Anomaly detection considerations¶

Consider the following when working with anomaly detection:

- In the case of numeric missing values, DataRobot supplies the imputed median (which, by definition, is non-anomalous).

- The higher the number of features in a dataset, the longer it takes DataRobot to detect anomalies and the more difficult it is to interpret results. If you have more than 1000 features, be aware that the anomaly score becomes difficult to interpret, making it potentially difficult to identify the root cause of anomalies.

- If you train an anomaly detection model on greater than 1000 features, Insights in the Understand tab are not available. These include Feature Impact, Feature Effects, Prediction Explanations, Word Cloud, and Document Insights (if applicable).

- Because anomaly scores are normalized, DataRobot labels some rows as anomalies even if they’re not too far away from normal. For training data, the most anomalous row will have a score of 1. For some models, test data and external data can have anomaly score predictions that are greater than 1 if the row is more anomalous than other rows in the training data.

- Synthetic AUC is an approximation based on creating synthetic anomalies and inliers from the training data.

- Synthetic AUC scores are not available for blenders that contain image features.

- Feature Impact for anomaly detection models trained from DataRobot blueprints is always computed using SHAP. For anomaly detection models from user blueprints, Feature Impact is computed using the permutation-based approach.

- Because time series anomaly detection is not yet optimized for pure text data anomalies, data must contain some numerical or categorical columns.

- The following methods are implemented and tunable:

| Method | Details |
|---|---|
| Isolation Forest | |
| Double Median Absolute Deviation (MAD) | |
| One Class Support Vector Machine (SVM) | |
| Local Outlier Factor (LOF) | |
| Mahalanobis Distance | |

- The following is not supported:

  - Projects with weights or offsets, including smart downsampling

  - Scoring Code

- Anomaly detection does not consider geospatial data (that is, models will build but those data types will not be present in blueprints).

Additionally, for time series projects:

- Millisecond data is the lower limit of data granularity.

- Datasets must be less than 1GB.

- Some blueprints don’t run on purely categorical data.

- Some blueprints are tied to feature lists and expect certain features (e.g., Bollinger Band rolling must be run on a feature list with robust z-score features only).

- For time series projects with periodicity, applying periodicity affects feature reduction and processing priorities. As a result, if there are too many features, seasonal features are also excluded from the Time Series Extracted Features and Time Series Informative Features lists.

Additionally, the time series considerations apply.