Configure retraining

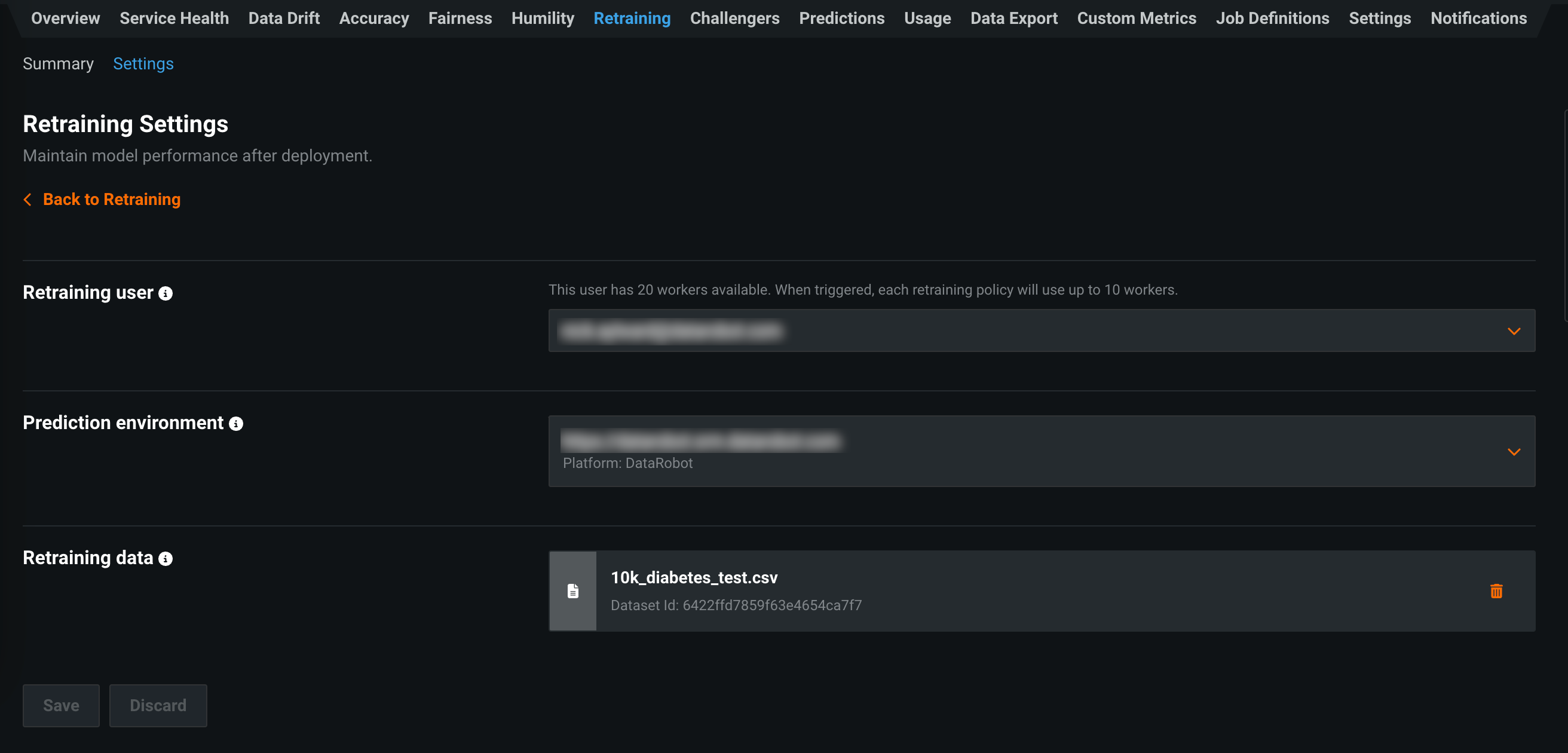

To maintain model performance after deployment without extensive manual work, DataRobot provides automatic retraining for deployments. After you provide a retraining dataset registered in the AI Catalog, you can define up to five retraining policies on each deployment. Before you define retraining policies, you must configure the deployment's general retraining settings on the Retraining > Settings tab.

Note

Editing retraining settings requires Owner permissions for the deployment. Those with User permissions can view the retraining settings for the deployment.

On a deployment's Retraining > Settings tab, you can configure the following settings:

| Element | Description |
|---|---|
| Retraining user | Selects a retraining user who has Owner access for the deployment. For resource monitoring, retraining policies must be run as a user account. |
| Prediction environment | Selects the default prediction environment for scoring challenger models. |
| Retraining data | Defines a retraining dataset for all retraining policies. Drag or browse for a local file, or select a dataset from the AI Catalog. |

After you define these settings and click Save, you can define a retraining policy.
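As a rough illustration of the order of operations described above, the following Python sketch treats the general settings as a checklist that must be complete before any policy can be added. The names `RetrainingSettings`, `Deployment`, `missing_settings`, and `add_policy` are hypothetical and are not part of any DataRobot API; only the rules (a retraining user with Owner access, a default prediction environment, one shared retraining dataset, and at most five policies) come from this page.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# From this page: each deployment can have up to five retraining policies.
MAX_RETRAINING_POLICIES = 5


@dataclass
class RetrainingSettings:
    """Hypothetical stand-in for the Retraining > Settings tab."""
    retraining_user: Optional[str] = None         # must have Owner access
    prediction_environment: Optional[str] = None  # default env for challengers
    retraining_dataset: Optional[str] = None      # one dataset for all policies


@dataclass
class Deployment:
    """Hypothetical deployment that tracks owners and retraining policies."""
    owners: Set[str]
    settings: RetrainingSettings = field(default_factory=RetrainingSettings)
    policies: List[str] = field(default_factory=list)

    def missing_settings(self) -> List[str]:
        """Names of general settings that have not been configured yet."""
        s = self.settings
        values = {
            "retraining_user": s.retraining_user,
            "prediction_environment": s.prediction_environment,
            "retraining_dataset": s.retraining_dataset,
        }
        return [name for name, value in values.items() if value is None]

    def add_policy(self, name: str) -> None:
        """Add a policy only once the general settings are saved and valid."""
        missing = self.missing_settings()
        if missing:
            raise ValueError(f"configure retraining settings first: {missing}")
        if self.settings.retraining_user not in self.owners:
            raise PermissionError("the retraining user needs Owner access")
        if len(self.policies) >= MAX_RETRAINING_POLICIES:
            raise ValueError("a deployment allows at most five retraining policies")
        self.policies.append(name)
```

In this sketch, calling `add_policy` on a fresh `Deployment` fails until all three settings are filled in, and a sixth policy is rejected.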

Select a retraining user

When executed, scheduled retraining policies use the permissions and resources of a designated user, the retraining user. (Manually triggered policies use the resources of the user who triggers them.) The retraining user needs the following:

- Permission to use the retraining data and to create snapshots of it.

- Owner permissions for the deployment.
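To make the distinction between scheduled and manual runs concrete, here is a minimal Python sketch that decides whose permissions and resources a run consumes, and checks the two requirements listed above. `PolicyRun`, `run_as_user`, `meets_requirements`, and the permission labels `use_data` and `create_snapshot` are all hypothetical illustrations, not DataRobot identifiers.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set


@dataclass
class PolicyRun:
    """Hypothetical record of how a retraining policy was started."""
    trigger: str                        # "scheduled" or "manual"
    triggered_by: Optional[str] = None  # set only for manual runs


def run_as_user(run: PolicyRun, retraining_user: str) -> str:
    """Return the user whose permissions and resources the run consumes."""
    if run.trigger == "scheduled":
        return retraining_user          # scheduled runs use the retraining user
    if run.trigger == "manual" and run.triggered_by:
        return run.triggered_by         # manual runs use whoever triggered them
    raise ValueError(f"cannot resolve user for trigger {run.trigger!r}")


def meets_requirements(user: str, owners: Set[str],
                       dataset_grants: Dict[str, Set[str]]) -> bool:
    """Check the two requirements listed above for a retraining user."""
    # "use_data" and "create_snapshot" are illustrative permission labels.
    has_data_access = {"use_data", "create_snapshot"} <= dataset_grants.get(user, set())
    return has_data_access and user in owners
```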

Modeling workers are required to train the models requested by the retraining policy. Workers are drawn from the retraining user's pool, and each retraining policy requests 50% of the retraining user's total number of workers. For example, if the user has a maximum of four modeling workers and retraining policy A is triggered, it runs with two workers. If retraining policy B is then triggered, it also runs with two workers. If policies A and B are running and policy C is triggered, policy C shares the user's workers with A and B.
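The worker arithmetic in the example above can be sketched in a few lines of Python. The function names are hypothetical, and rounding down with a minimum of one worker for odd pool sizes is an assumption; this page only states the 50% rule. The sketch reports when the pool is oversubscribed (and therefore shared) but does not attempt to model how DataRobot actually divides workers among runs.

```python
def workers_requested(total_workers: int) -> int:
    """Each retraining policy requests 50% of the user's modeling workers.

    Assumption: odd totals round down, with a floor of one worker.
    """
    return max(1, total_workers // 2)


def allocation_summary(total_workers: int, running_policies: int) -> dict:
    """Summarize requested workers for a number of concurrently running policies."""
    per_policy = workers_requested(total_workers)
    requested = per_policy * running_policies
    return {
        "per_policy_request": per_policy,
        "total_requested": requested,
        # When requests exceed the pool, the running policies share workers.
        "shared": requested > total_workers,
    }
```

With a four-worker pool, `allocation_summary(4, 2)` shows two policies using all four workers without sharing, while `allocation_summary(4, 3)` flags that a third policy must share.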

Note

Interactive user modeling requests do not take priority over retraining runs. If your workers are busy with retraining and you start a new modeling run (manual or Autopilot), that run shares workers with the retraining runs. For this reason, DataRobot recommends creating a dedicated user with a capped number of workers and designating that user for retraining jobs.

Choose a prediction environment

Challenger analysis requires replaying predictions that were initially made with the champion model against the challenger models. DataRobot uses a defined schedule and prediction environment for replaying predictions. When a new challenger is added as a result of retraining, it uses the assigned prediction environment to generate predictions from the replayed requests. You can later change the prediction environment that a given challenger uses from the Challengers tab.

While they are acting as challengers, models can only be deployed to DataRobot prediction environments. However, the champion model can use a different prediction environment from the challengers: either a DataRobot environment (for example, one marked for "Production" usage to avoid resource contention) or a remote environment (for example, AWS, OpenShift, or GCP). If a model is promoted from challenger to champion, it will likely use the prediction environment of the former champion.
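The environment rules above can be expressed as a small validation check. This Python sketch is illustrative only: `PredictionEnvironment`, `check_assignment`, `environment_for_new_challenger`, and the `platform` values are hypothetical names chosen for the example. It encodes two statements from this page: challengers may only use DataRobot environments, and a new challenger created by retraining uses the deployment's default environment unless it is changed later.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PredictionEnvironment:
    """Hypothetical environment record."""
    name: str
    platform: str  # e.g. "datarobot", "aws", "openshift", "gcp"


def check_assignment(role: str, env: PredictionEnvironment) -> None:
    """Raise if a model in the given role cannot use this environment."""
    if role not in ("champion", "challenger"):
        raise ValueError(f"unknown role: {role!r}")
    # Challengers are limited to DataRobot environments; champions are not.
    if role == "challenger" and env.platform != "datarobot":
        raise ValueError(f"challengers cannot use remote environment {env.name!r}")


def environment_for_new_challenger(default_env: PredictionEnvironment,
                                   override: Optional[PredictionEnvironment] = None
                                   ) -> PredictionEnvironment:
    """New challengers use the default unless changed on the Challengers tab."""
    env = override if override is not None else default_env
    check_assignment("challenger", env)
    return env
```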

Provide retraining data

All retraining policies on a deployment refer to the same AI Catalog dataset. When a retraining policy triggers, DataRobot uses the latest version of the dataset (for uploaded AI Catalog items) or creates and uses a new snapshot from the underlying data source (for catalog items using data connections or URLs). For example, if the catalog item uses a Spark SQL query, the retraining policy executes that query when it triggers and uses the resulting rows as input to the modeling settings (including partitioning). For AI Catalog items with underlying data connections, if the catalog item already has the maximum number of snapshots (100), the retraining policy deletes the oldest snapshot before taking a new one.
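The dataset-selection and snapshot-retention behavior described above can be sketched as a bounded queue. In this hypothetical Python model, `CatalogItem`, `data_for_retraining`, the `source` values, and the snapshot naming are invented for illustration; only the rules (latest version for uploads, a new snapshot for data connections or URLs, a cap of 100 snapshots with the oldest deleted first) come from this page.

```python
from collections import deque
from typing import Deque, List, Optional

MAX_SNAPSHOTS = 100  # snapshot cap for a catalog item, per this page


class CatalogItem:
    """Hypothetical model of an AI Catalog item used for retraining."""

    def __init__(self, source: str, versions: Optional[List[str]] = None):
        self.source = source                     # "upload", "data_connection", or "url"
        self.versions = list(versions or [])     # uploaded versions, oldest first
        self.snapshots: Deque[str] = deque()     # source snapshots, oldest first
        self._counter = 0

    def _take_snapshot(self) -> str:
        # At the cap, delete the oldest snapshot before taking a new one.
        if len(self.snapshots) >= MAX_SNAPSHOTS:
            self.snapshots.popleft()
        self._counter += 1
        snapshot = f"snapshot-{self._counter}"
        self.snapshots.append(snapshot)
        return snapshot

    def data_for_retraining(self) -> str:
        """Pick the data a triggered retraining policy would use."""
        if self.source == "upload":
            return self.versions[-1]             # latest uploaded version
        if self.source in ("data_connection", "url"):
            return self._take_snapshot()         # fresh snapshot of the source
        raise ValueError(f"unknown source: {self.source!r}")
```

An explicit `popleft()` is used rather than `deque(maxlen=...)` so that the eviction step mirrors the "delete the oldest, then snapshot" order stated in the text.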