Manage Automated Retraining policies

To maintain model performance after deployment without extensive manual work, DataRobot provides an automatic retraining capability for deployments. After you provide a retraining dataset registered in the AI Catalog, you can define up to five retraining policies per deployment. Each policy consists of a trigger, a modeling strategy, modeling settings, and a replacement action. When a policy is triggered, retraining produces a new model based on these settings and notifies you to consider promoting it.
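
As a rough mental model of the structure described above, the following Python sketch represents a policy as its four components and enforces the five-policy limit per deployment. It is illustrative only and does not use the DataRobot API: the class names, field names, and example values (`RetrainingPolicy`, `Deployment`, `add_policy`, and so on) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Limit stated in the documentation: up to five policies per deployment.
MAX_POLICIES_PER_DEPLOYMENT = 5


@dataclass
class RetrainingPolicy:
    """Hypothetical container for the four parts of a retraining policy."""
    name: str
    trigger: str                       # what starts a run (example values are assumptions)
    modeling_strategy: str             # how the new model is built
    modeling_settings: Dict[str, Any]  # settings applied during modeling
    replacement_action: str            # what happens with the resulting model


@dataclass
class Deployment:
    """Hypothetical deployment holding a retraining dataset and its policies."""
    retraining_dataset_id: str
    policies: List[RetrainingPolicy] = field(default_factory=list)

    def add_policy(self, policy: RetrainingPolicy) -> None:
        # Reject a sixth policy, mirroring the documented limit.
        if len(self.policies) >= MAX_POLICIES_PER_DEPLOYMENT:
            raise ValueError(
                f"A deployment can have at most {MAX_POLICIES_PER_DEPLOYMENT} retraining policies"
            )
        self.policies.append(policy)
```

In this sketch, adding a sixth policy to a `Deployment` raises `ValueError`, which corresponds to the limit of five policies per deployment.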

Configure retraining settings

To configure a retraining policy, use the NextGen UI.

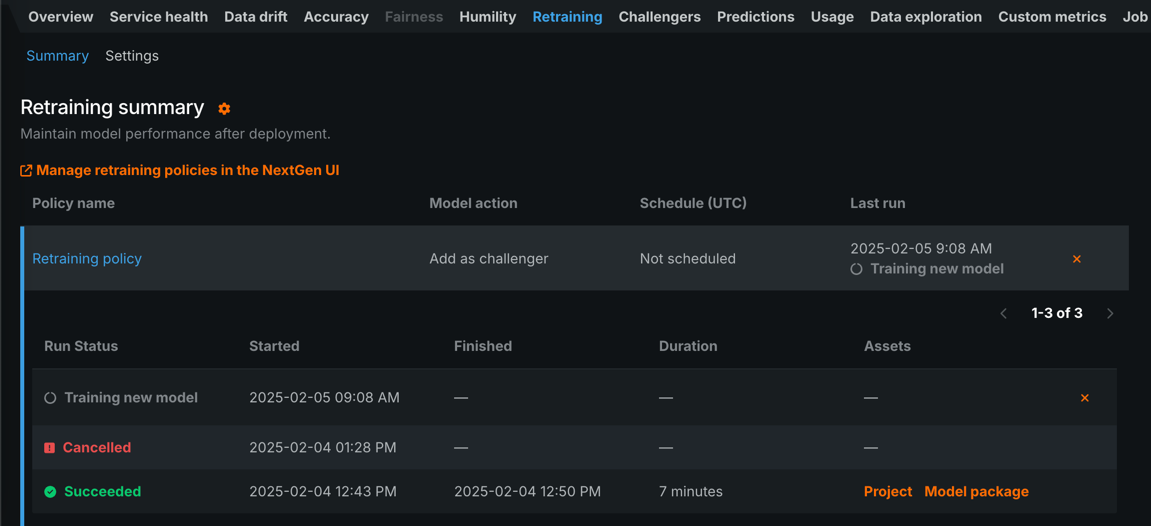

Manage existing retraining policies

You can start or cancel retraining policies from the Classic UI. To edit or delete a retraining policy, use the NextGen UI.

| | Element | Definition |
|---|---|---|
| 1 | Manage retraining policies in the NextGen UI | Open the deployment's Mitigation > Retraining tab in the NextGen UI to edit or delete the retraining policy. |
| 2 | Retraining policy row | Click on a retraining policy row to expand it and view the retraining policy runs. |
| 3 | Run | Click the run button to start a policy manually. Alternatively, edit the policy by clicking the policy row and scheduling a run using the retraining trigger. |
| 4 | Cancel | Click the cancel button to cancel a policy that is in progress or scheduled to run. You can't cancel a policy if it has finished successfully, reached the "Creating challenger" or "Replacing model" step, failed, or has already been canceled. |
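
The cancellation rule in the table can be read as a simple state check. The following Python sketch is illustrative only and is not DataRobot API code: the `RunState` enum and the `can_cancel` function are hypothetical, and only the "Creating challenger" and "Replacing model" step names are taken from the text. The remaining state labels are assumptions chosen for readability.

```python
from enum import Enum


class RunState(Enum):
    """Hypothetical states of a retraining policy run."""
    SCHEDULED = "Scheduled"                      # assumed label
    IN_PROGRESS = "In progress"                  # assumed label
    CREATING_CHALLENGER = "Creating challenger"  # step name from the docs
    REPLACING_MODEL = "Replacing model"          # step name from the docs
    SUCCEEDED = "Succeeded"                      # assumed label
    FAILED = "Failed"                            # assumed label
    CANCELED = "Canceled"                        # assumed label


# States in which the documentation says a policy can no longer be canceled.
NON_CANCELABLE_STATES = frozenset({
    RunState.CREATING_CHALLENGER,
    RunState.REPLACING_MODEL,
    RunState.SUCCEEDED,
    RunState.FAILED,
    RunState.CANCELED,
})


def can_cancel(state: RunState) -> bool:
    """Return True if a run in this state could still be canceled."""
    return state not in NON_CANCELABLE_STATES
```

Under these assumptions, `can_cancel(RunState.SCHEDULED)` and `can_cancel(RunState.IN_PROGRESS)` return `True`, while every other state returns `False`.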

View retraining history

You can view all previous runs of a retraining policy, whether they succeeded or failed. Each run includes a start time, end time, duration, and, if the run succeeded, links to the resulting project and model package. While only the DataRobot-recommended model for each project is added automatically to the deployment, you may want to explore the project's Leaderboard to find or build alternative models.

Note

Policies cannot be deleted or interrupted while they are running. If the retraining worker and organization have sufficient workers, multiple policies on the same deployment can be running at once.