Configure a deployment

Regardless of where you create a new deployment (the Leaderboard, the Model Registry, or the deployment inventory) or the type of artifact (DataRobot model, custom inference model, or remote model), you are directed to the deployment information page, where you can customize the deployment.

The deployment information page outlines the capabilities of your current deployment based on the data provided (for example, training data, prediction data, or actuals). It also provides fields where you can enter details about the training data, inference data, model, and outcome data.

Standard options and information

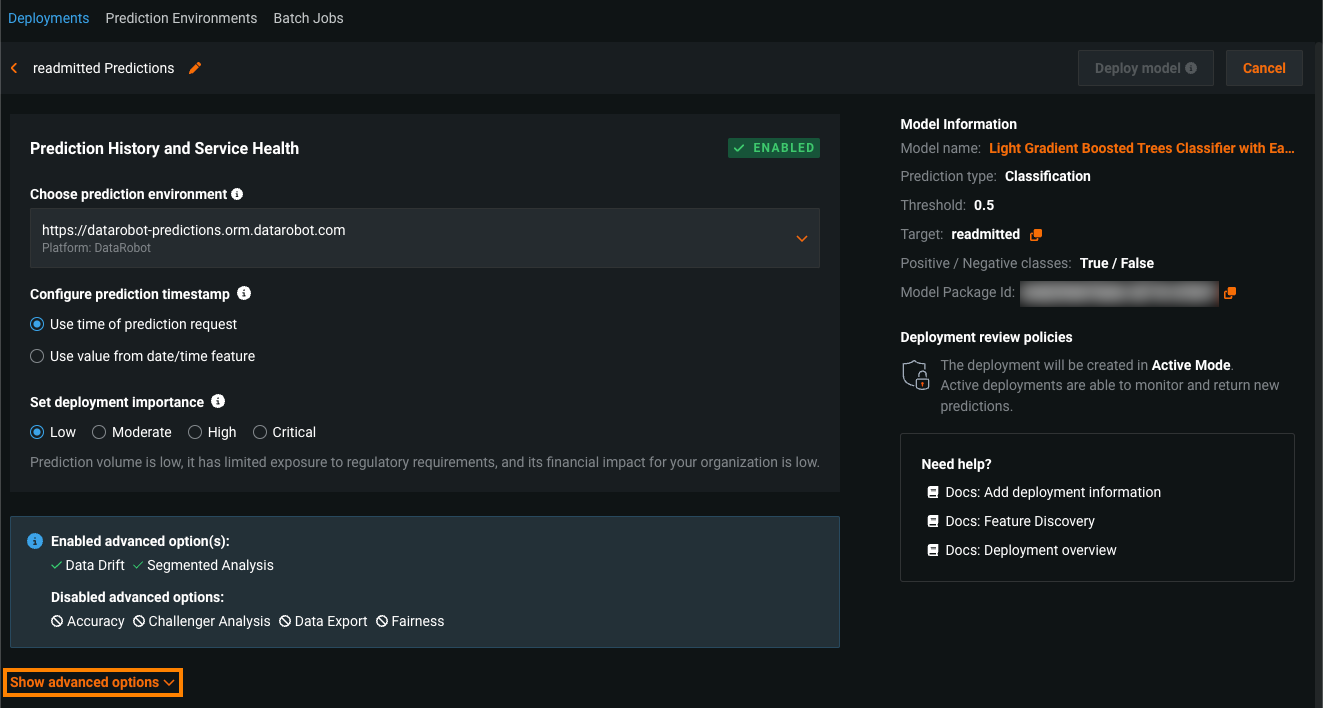

When you initiate model deployment, the Deployments tab opens to the Model information and Prediction history and service health sections.

Model information

The Model information section provides information about the model being used to make predictions for your deployment. DataRobot uses the files and information from the deployment to complete these fields, so they aren't editable.

| Field | Description |
|---|---|
| Model name | The name of your model. |
| Prediction type | The type of prediction the model makes, such as Regression, Classification, Multiclass, Anomaly Detection, or Clustering. |
| Threshold | The prediction threshold for binary classification models. Records above the threshold are assigned the positive class label and records below the threshold are assigned the negative class label. This field isn't available for Regression or Multiclass models. |
| Target | The name of the dataset column the model predicts. |
| Positive / Negative classes | The positive and negative class values for binary classification models. This field isn't visible for Regression or Multiclass models. |
| Model Package ID (Registered Model Version ID) | The ID of the Model Package (Registered Model Version) in the Model Registry. |
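The threshold rule in the Threshold row can be sketched in Python as a minimal illustration. The function name, the example class labels, and the handling of a score exactly equal to the threshold (treated as negative here) are assumptions; the table only defines what happens above and below the threshold.

```python
def assign_class_label(score: float, threshold: float, positive: str, negative: str) -> str:
    """Return the class label for a positive-class score under a binary threshold.

    Scores above the threshold get the positive label. Everything else,
    including a score exactly equal to the threshold (an assumption),
    gets the negative label.
    """
    return positive if score > threshold else negative


# Hypothetical class labels and threshold, for illustration only.
for score in (0.72, 0.31, 0.5):
    print(score, assign_class_label(score, threshold=0.5, positive="churn", negative="no_churn"))
```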

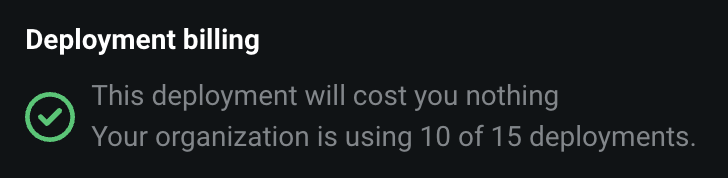

Note

If you are part of an organization with deployment limits, the Deployment billing section notifies you of the number of deployments your organization is using against the deployment limit and the deployment cost if your organization has exceeded the limit.

Prediction history and service health

The Prediction history and service health section provides details about your deployment's inference (also known as scoring) data—the data that contains prediction requests and results from the model.

| Setting | Description |
|---|---|
| Configure prediction environment | The environment where predictions are generated. Prediction environments allow you to establish access controls and approval workflows. |
| Enable batch monitoring | Determines whether predictions are grouped and monitored in batches, allowing you to compare batches of predictions or delete batches to retry predictions. For more information, see the Batch monitoring for deployment predictions documentation. |
| Configure prediction timestamp | Determines the method used to timestamp prediction rows for Data Drift and Accuracy monitoring. This setting cannot be changed after the deployment is created and predictions are made. |
| Set deployment importance | Determines the importance level of a deployment. These levels (Critical, High, Moderate, and Low) determine how a deployment is handled during the approval process. Importance represents an aggregate of factors relevant to your organization, such as the deployment's prediction volume, level of exposure, and potential financial impact. When a deployment is assigned an importance of Moderate or above, the Reviewers notification appears (under Model information) to inform you that DataRobot automatically notifies users assigned as reviewers whenever the deployment requires review. |

Time of Prediction

The Time of Prediction value differs between the Data drift and Accuracy tabs and the Service health tab:

- On the Service health tab, the "time of prediction request" is always the time the prediction server received the prediction request. This method of prediction request tracking accurately represents the prediction service's health for diagnostic purposes.

- On the Data drift and Accuracy tabs, the "time of prediction request" is, by default, the time you submitted the prediction request, which you can override with the prediction timestamp in the Prediction history and service health settings.
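As a minimal sketch of the rule above, the following hypothetical function returns the timestamp each tab uses for a prediction row. The tab identifiers and parameter names are illustrative assumptions, not DataRobot API names.

```python
from datetime import datetime
from typing import Optional


def time_of_prediction(
    tab: str,
    received_at: datetime,
    submitted_at: datetime,
    prediction_timestamp: Optional[datetime] = None,
) -> datetime:
    """Pick the timestamp a prediction row is charted under on a given tab."""
    if tab == "service_health":
        # Always the time the prediction server received the request.
        return received_at
    if tab in ("data_drift", "accuracy"):
        # Submission time by default, unless a prediction timestamp overrides it.
        return prediction_timestamp if prediction_timestamp is not None else submitted_at
    raise ValueError(f"unknown tab: {tab!r}")
```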

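Advanced options

Click Show advanced options to configure the following deployment settings:

- Data drift
- Accuracy
- Data exploration
- Challenger analysis
- Advanced predictions configuration (for DataRobot Serverless and Feature Discovery deployments)
- Advanced service health configuration
- Fairness
- Custom metrics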

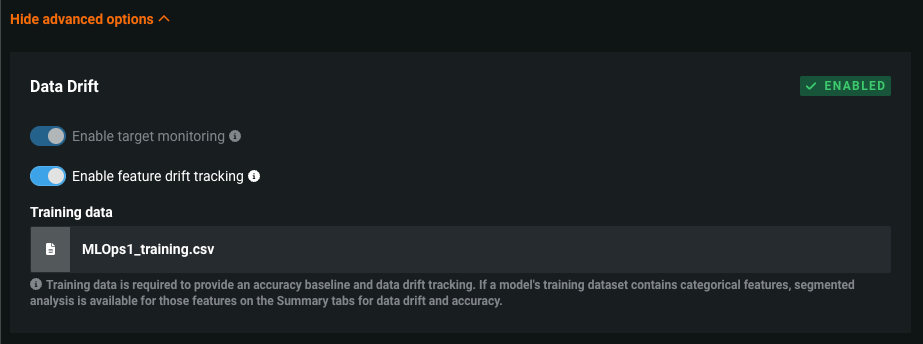

Data drift

When you deploy a model, the dataset used for training and validation may differ from the prediction data. To enable drift tracking, configure the following settings:

| Setting | Description |
|---|---|
| Enable feature drift tracking | Configures DataRobot to track feature drift in a deployment. Training data is required for feature drift tracking. |
| Enable target monitoring | Configures DataRobot to track target drift in a deployment. Actuals are required for target monitoring, and target monitoring is required for accuracy monitoring. |
| Training data | Required to enable feature drift tracking in a deployment. |

How does DataRobot track drift?

DataRobot tracks two types of drift:

- Target drift: DataRobot stores statistics about predictions to monitor how the distribution and values of the target change over time. As a baseline for comparing target distributions, DataRobot uses the distribution of predictions on the holdout.

- Feature drift: DataRobot stores statistics about predictions to monitor how the distributions and values of features change over time. The supported feature data types are numeric, categorical, and text. As a baseline for comparing distributions of features:

    - For training datasets larger than 500 MB, DataRobot uses the distribution of a random sample of the training data.

    - For training datasets smaller than 500 MB, DataRobot uses the distribution of 100% of the training data.
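The baseline selection for feature drift can be sketched as follows. This is an illustration under stated assumptions: the document does not specify the random sample size (so `sample_size` is a hypothetical parameter), does not say whether 500 MB means 500 * 10^6 or 500 * 2^20 bytes (this sketch uses the latter), and does not cover a dataset of exactly 500 MB (treated here as not larger, so the full dataset is used).

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")

# Assumption: "500 MB" interpreted as 500 MiB; the document does not say which unit.
SIZE_LIMIT_BYTES = 500 * 1024 * 1024


def drift_baseline(rows: Sequence[T], dataset_size_bytes: int, sample_size: int,
                   seed: int = 0) -> List[T]:
    """Return the rows used as the feature drift baseline.

    Larger than the limit: a random sample of `sample_size` rows (hypothetical size).
    Otherwise: 100% of the training rows.
    """
    if dataset_size_bytes > SIZE_LIMIT_BYTES:
        rng = random.Random(seed)  # seeded so the baseline is reproducible
        return rng.sample(list(rows), min(sample_size, len(rows)))
    return list(rows)
```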

DataRobot monitors both target and feature drift information by default and displays results in the Data Drift dashboard. Use the Enable target monitoring and Enable feature drift tracking toggles to turn off tracking if, for example, you have sensitive data that should not be monitored in the deployment.

You can customize how data drift is monitored. See the data drift page for more information on customizing data drift status for deployments.

Note

Data drift tracking is only available for deployments using deployment-aware prediction API routes (that is, `https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions`).
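For reference, a request to the deployment-aware route could be assembled with Python's standard library as below. This is a sketch only: the `Authorization: Bearer` and `text/csv` headers are assumptions, your installation may require additional headers, and the function name is hypothetical. The code builds the request without sending it.

```python
import urllib.request


def build_prediction_request(host: str, deployment_id: str, csv_payload: str,
                             api_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the deployment-aware predictions route."""
    url = f"{host.rstrip('/')}/predApi/v1.0/deployments/{deployment_id}/predictions"
    return urllib.request.Request(
        url,
        data=csv_payload.encode("utf-8"),
        headers={
            "Content-Type": "text/csv; charset=UTF-8",  # assumption: CSV scoring data
            "Authorization": f"Bearer {api_token}",       # assumption: bearer-token auth
        },
        method="POST",
    )


req = build_prediction_request("https://example.datarobot.com", "<deploymentId>",
                               "feature_1\n3.2\n", "YOUR_API_TOKEN")
print(req.full_url)
# To actually send it: urllib.request.urlopen(req)
```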

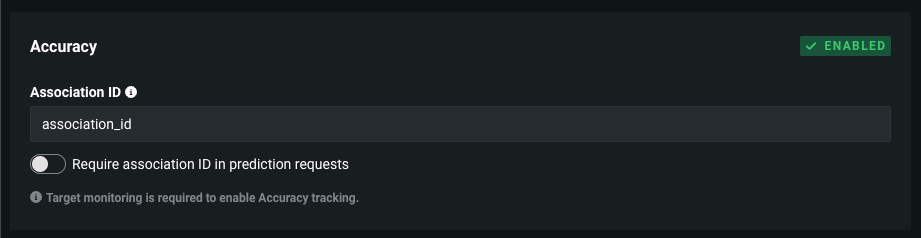

Accuracy

Configuring the required settings for the Accuracy tab allows you to analyze the performance of the model deployment over time using standard statistical measures and exportable visualizations.

| Setting | Description |
|---|---|
| Association ID | Specifies the name of the column that contains the association ID in your model's prediction dataset. Association IDs are required for setting up accuracy tracking in a deployment. The association ID functions as an identifier for your prediction dataset so you can later match outcome data (also called "actuals") with those predictions. If your deployment is for a time series project, see Association IDs for time series deployments to learn how to select or construct an effective association ID. |
| Require association ID in prediction requests | Requires your prediction dataset to include a column whose name matches the name you entered in the Association ID field. When enabled, prediction requests that are missing the column return an error. Note that the Create deployment button is inactive until you enter an association ID or turn off this toggle. This setting cannot be enabled together with Enable automatic association ID generation for prediction rows. |
| Enable automatic association ID generation for prediction rows | When an association ID column name is defined, allows DataRobot to populate the association ID values automatically. This setting cannot be enabled together with Require association ID in prediction requests. |
| Enable automatic actuals feedback for time series models | Available for time series deployments that have an association ID defined. Enables the automatic submission of actuals so that you don't need to submit them manually through the UI or API. Once enabled, DataRobot can extract actuals from the data used to generate predictions: prediction rows sent for forecasting include historical data, so as each prediction request is sent, DataRobot can extract the actual value for a given date. This historical data serves as the actual values for the previous prediction request. |
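To illustrate how the association ID ties outcomes to predictions, the following sketch joins prediction rows and actuals on a shared ID column. The column names `association_id`, `prediction`, and `actual` are hypothetical; DataRobot performs this matching server-side, and this code does not reproduce its implementation.

```python
from typing import Dict, List, Tuple


def match_actuals(predictions: List[Dict[str, object]], actuals: List[Dict[str, object]],
                  association_id_column: str = "association_id") -> List[Tuple[object, object, object]]:
    """Pair each prediction with its actual via the association ID.

    Predictions whose actual has not been submitted yet are left out.
    """
    actual_by_id = {row[association_id_column]: row["actual"] for row in actuals}
    matched = []
    for row in predictions:
        key = row[association_id_column]
        if key in actual_by_id:
            matched.append((key, row["prediction"], actual_by_id[key]))
    return matched


preds = [{"association_id": "a1", "prediction": 1}, {"association_id": "a2", "prediction": 0}]
outcomes = [{"association_id": "a2", "actual": 1}]
print(match_actuals(preds, outcomes))
```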

Important: Association ID for monitoring agent and monitoring jobs

You must set an association ID before making predictions to include those predictions in accuracy tracking. For agent-monitored external model deployments with challengers (and monitoring jobs for challengers), the association ID should be `__DataRobot_Internal_Association_ID__` to report accuracy for the model and its challengers.
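When Require association ID in prediction requests is enabled, a client-side pre-check like the hypothetical one below can catch a missing column before the request is sent. It assumes a CSV payload and defaults to the internal association ID name mentioned above; it is not DataRobot's server-side validation.

```python
import csv
import io

INTERNAL_ASSOCIATION_ID = "__DataRobot_Internal_Association_ID__"


def check_association_id_column(csv_payload: str, column: str = INTERNAL_ASSOCIATION_ID) -> None:
    """Raise ValueError if the CSV header lacks the association ID column."""
    reader = csv.reader(io.StringIO(csv_payload))
    header = next(reader, [])  # empty payload -> empty header -> column missing
    if column not in header:
        raise ValueError(f"prediction request is missing association ID column {column!r}")


check_association_id_column("__DataRobot_Internal_Association_ID__,feature_1\nrow-1,3.2\n")
```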

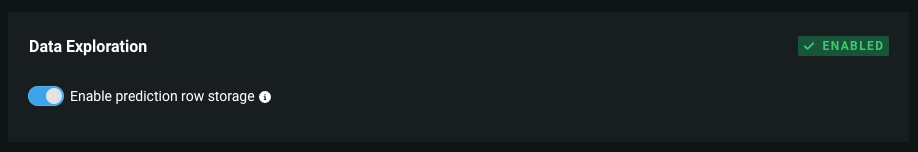

Data exploration

Enable prediction row storage to activate the Data exploration tab. From there, you can export a deployment's stored training data, prediction data, and actuals to compute and monitor custom business or performance metrics on the Custom metrics tab or outside DataRobot.

| Setting | Description |
|---|---|
| Enable prediction row storage | Enables prediction data storage, a setting required to store and export a deployment's prediction data for use in custom metrics. |
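As an example of computing a metric outside DataRobot from exported data, the sketch below calculates mean absolute error over rows that have both a prediction and an actual. The CSV layout and the column names `prediction` and `actual` are assumptions about an already-joined export, not the documented export format.

```python
import csv
import io


def mean_absolute_error_from_export(csv_text: str, prediction_column: str = "prediction",
                                    actual_column: str = "actual") -> float:
    """Compute MAE over exported rows that have both a prediction and an actual."""
    errors = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Skip rows whose actual has not arrived yet (empty cell).
        if row[actual_column] == "":
            continue
        errors.append(abs(float(row[prediction_column]) - float(row[actual_column])))
    if not errors:
        raise ValueError("no rows with actuals to score")
    return sum(errors) / len(errors)
```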

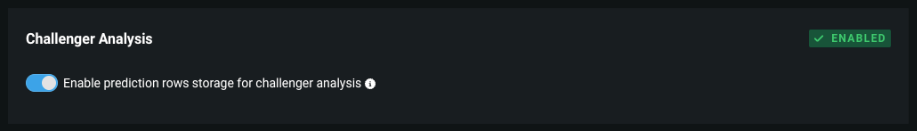

Challenger analysis

DataRobot can securely store prediction request data at the row level for deployments (not supported for external model deployments). This setting must be enabled for any deployment using the Challengers tab. In addition to enabling challenger analysis, access to stored prediction request rows enables you to thoroughly audit the predictions and use that data to troubleshoot operational issues. For instance, you can examine the data to understand an anomalous prediction result or why a dataset was malformed.

Note

Contact your DataRobot representative to learn more about data security, privacy, and retention measures or to discuss prediction auditing needs.

| Setting | Description |
|---|---|
| Enable prediction rows storage for challenger analysis | Enables the use of challenger models, which allow you to compare models post-deployment and replace the champion model if necessary. Once enabled, prediction requests made for the deployment are collected by DataRobot. Prediction Explanations are not stored. |

Important

Prediction requests are only collected if the prediction data is in a valid data format interpretable by DataRobot, such as CSV or JSON. Failed prediction requests with a valid data format (for example, requests with missing input features) are also collected.

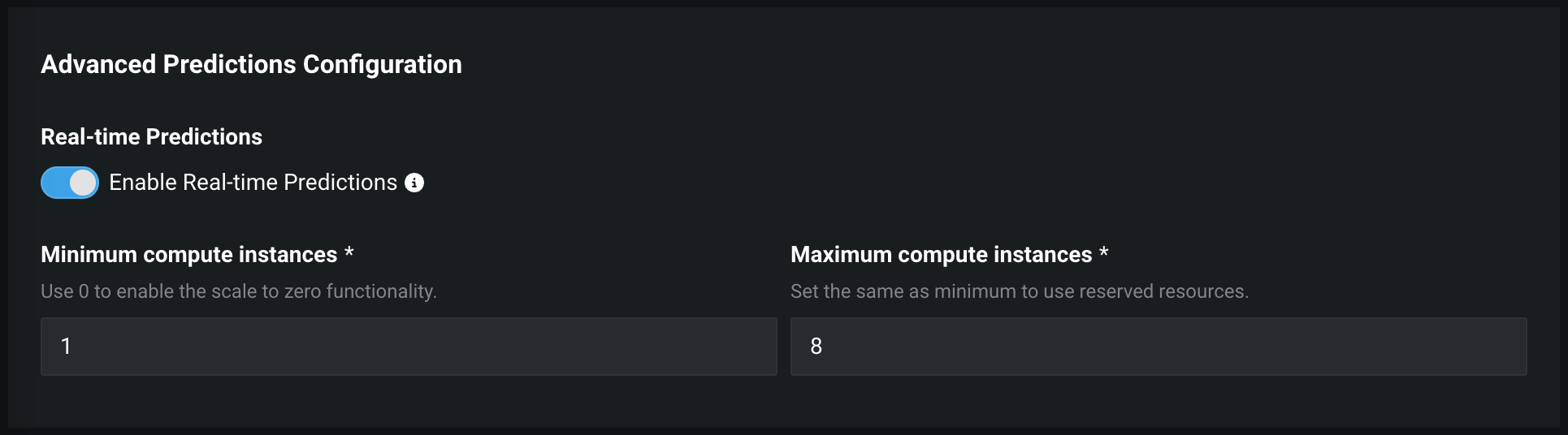

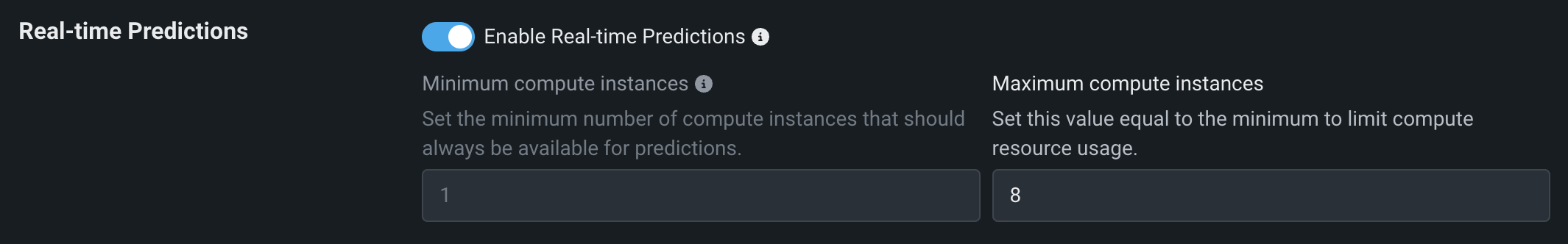

Advanced predictions configuration

In the Advanced predictions configuration section, you can configure settings dependent on the project type of the model being deployed and the prediction environment the model is being deployed to:

- When you deploy a model to a DataRobot Serverless environment, you can configure the predictions autoscaling settings.

- When you deploy a model from a Feature Discovery project, you can configure the secondary dataset configurations.

Predictions autoscaling

To configure on-demand predictions in this environment, click Show advanced options, scroll down to Advanced predictions configuration, and configure the Autoscaling options.

Autoscaling automatically adjusts the number of replicas in your deployment based on incoming traffic. During high-traffic periods, it adds replicas to maintain performance. During low-traffic periods, it removes replicas to reduce costs. This eliminates the need for manual scaling while ensuring your deployment can handle varying loads efficiently.

To configure autoscaling, modify the following settings (for DataRobot models, DataRobot autoscales based on CPU usage at a 40% threshold):

| Field | Description |
|---|---|
| Minimum compute instances | (Premium feature) Set the minimum number of compute instances for the model deployment. If your organization doesn't have access to "always-on" predictions, this setting is fixed at 0 and isn't configurable. When the minimum is 0, the inference server is stopped after 7 days of inactivity. The minimum and maximum compute instances depend on the model type. For more information, see the compute instance configurations note. |
| Maximum compute instances | Set the maximum number of compute instances for the model deployment to a value greater than or equal to the configured minimum. To limit compute resource usage, set the maximum equal to the minimum. The minimum and maximum compute instances depend on the model type. For more information, see the compute instance configurations note. |

Then, select the metric that triggers scaling:

- CPU utilization: Set a threshold for the average CPU usage across active replicas. When CPU usage exceeds this threshold, the system automatically adds replicas to provide more processing power.

- HTTP request concurrency: Set a threshold for the number of simultaneous requests being processed. For example, with a threshold of 5, the system adds replicas when it detects 5 concurrent requests being handled.

When your chosen threshold is exceeded, the system calculates how many additional replicas are needed to handle the current load. It continuously monitors the selected metric and adjusts the replica count up or down to maintain optimal performance while minimizing resource usage.
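The replica calculation is not specified in detail here. As an assumption, the sketch below borrows the formula used by the Kubernetes Horizontal Pod Autoscaler (scale the current replica count by the ratio of the observed metric to the target, rounding up) and clamps the result to the minimum and maximum compute instances. It illustrates the idea; it is not DataRobot's documented algorithm.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int) -> int:
    """HPA-style replica estimate (assumption), clamped to the configured range.

    current_metric is the average per replica: CPU % or concurrent requests.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one running replica and a positive target")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# CPU utilization target of 40%: two replicas averaging 80% CPU.
print(desired_replicas(2, current_metric=80, target_metric=40, min_replicas=0, max_replicas=3))
```

With a 40% CPU target, two replicas averaging 80% CPU would call for four replicas, which the clamp reduces to the maximum of 3. This formula never returns 0 for a positive metric value; scaling to zero after inactivity, as described for a minimum of 0, is a separate mechanism.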

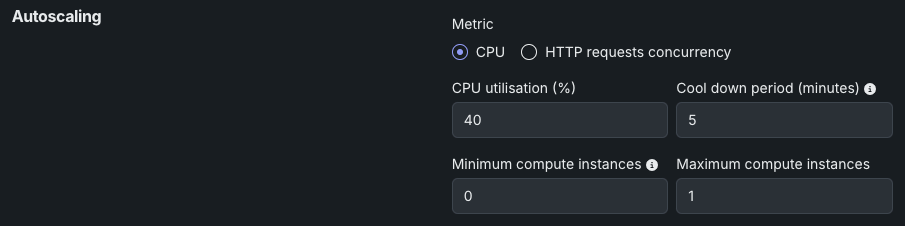

Review the settings for CPU utilization below.

| Field | Description |
|---|---|
| CPU utilization (%) | Set the target CPU usage percentage that triggers scaling. When CPU utilization reaches this threshold, the system adds more replicas. |
| Cool down period (minutes) | Set the wait time after a scale-down event before another scale-down can occur. This prevents rapid scaling fluctuations when metrics are unstable. |
| Minimum compute instances | (Premium feature) Set the minimum number of compute instances for the model deployment. If your organization doesn't have access to "always-on" predictions, this setting is fixed at 0 and isn't configurable. When the minimum is 0, the inference server is stopped after 7 days of inactivity. The minimum and maximum compute instances depend on the model type. For more information, see the compute instance configurations note. |
| Maximum compute instances | Set the maximum number of compute instances for the model deployment to a value greater than or equal to the configured minimum. To limit compute resource usage, set the maximum equal to the minimum. The minimum and maximum compute instances depend on the model type. For more information, see the compute instance configurations note. |
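The cool down period can be sketched as a gate on scale-down decisions. The function below is hypothetical and assumes a scale-down is allowed once at least the configured number of minutes has elapsed since the previous scale-down.

```python
from datetime import datetime, timedelta
from typing import Optional


def scale_down_allowed(now: datetime, last_scale_down: Optional[datetime],
                       cooldown_minutes: int) -> bool:
    """Allow a scale-down only after the cool down period has fully elapsed."""
    if last_scale_down is None:
        return True  # no previous scale-down, nothing to wait for
    return now - last_scale_down >= timedelta(minutes=cooldown_minutes)
```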

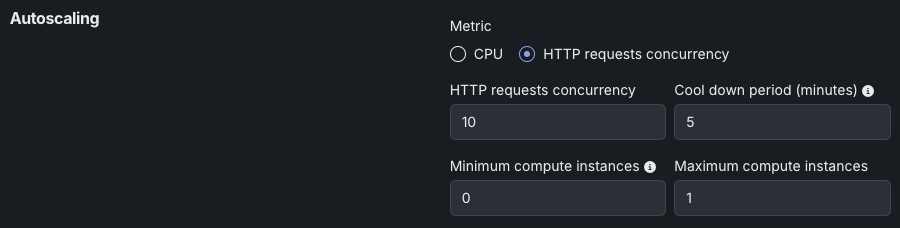

Review the settings for HTTP request concurrency below.

| Field | Description |
|---|---|
| HTTP request concurrency | Set the number of simultaneous requests required to trigger scaling. When concurrent requests reach this threshold, the system adds more replicas. |
| Cool down period (minutes) | Set the wait time after a scale-down event before another scale-down can occur. This prevents rapid scaling fluctuations when metrics are unstable. |
| Minimum compute instances | (Premium feature) Set the minimum number of compute instances for the model deployment. If your organization doesn't have access to "always-on" predictions, this setting is fixed at 0 and isn't configurable. When the minimum is 0, the inference server is stopped after 7 days of inactivity. The minimum and maximum compute instances depend on the model type. For more information, see the compute instance configurations note. |
| Maximum compute instances | Set the maximum number of compute instances for the model deployment to a value greater than or equal to the configured minimum. To limit compute resource usage, set the maximum equal to the minimum. The minimum and maximum compute instances depend on the model type. For more information, see the compute instance configurations note. |

Premium feature: Always-on predictions

Always-on predictions are a premium feature. Deployment autoscaling management is required to configure the minimum compute instances setting. Contact your DataRobot representative or administrator for information on enabling the feature.

Feature flag: Enable Deployment Auto-Scaling Management

Compute instance configurations

For DataRobot model deployments:

- The default minimum is 0 and default maximum is 3.

- The minimum and maximum limits are taken from the organization's `max_compute_serverless_prediction_api` setting.

For custom model deployments:

- The default minimum is 0 and default maximum is 1.

- The minimum and maximum limits are taken from the organization's `max_custom_model_replicas_per_deployment` setting.

- The minimum is always greater than 1 when running on GPUs (for LLMs).

Additionally, for high availability scenarios:

- The minimum compute instances setting must be greater than or equal to 2.

- This requires business critical or consumption-based pricing.
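The constraints in this note can be collected into a validation sketch. Assumptions: the organization setting (`max_compute_serverless_prediction_api` or `max_custom_model_replicas_per_deployment`) is modeled as a single upper bound `org_limit`, the function and parameter names are hypothetical, and the GPU rule for LLMs is omitted.

```python
from typing import List, Optional, Tuple

# Defaults stated in the note: (minimum, maximum) per model type.
DEFAULT_INSTANCES = {"datarobot": (0, 3), "custom": (0, 1)}


def validate_compute_instances(model_type: str, org_limit: int,
                               minimum: Optional[int] = None, maximum: Optional[int] = None,
                               always_on: bool = False,
                               high_availability: bool = False) -> Tuple[Tuple[int, int], List[str]]:
    """Resolve defaults and return ((minimum, maximum), list of rule violations)."""
    default_min, default_max = DEFAULT_INSTANCES[model_type]
    lo = default_min if minimum is None else minimum
    hi = default_max if maximum is None else maximum
    errors: List[str] = []
    if lo > 0 and not always_on:
        errors.append("minimum must be 0 without always-on predictions")
    if hi < lo:
        errors.append("maximum must be greater than or equal to minimum")
    if hi > org_limit:
        errors.append(f"maximum exceeds organization limit of {org_limit}")
    if high_availability and lo < 2:
        errors.append("high availability requires a minimum of at least 2")
    return (lo, hi), errors
```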

Update compute instances settings

If, after deployment, you need to update the number of compute instances available to the model, you can change these settings on the Predictions Settings tab.

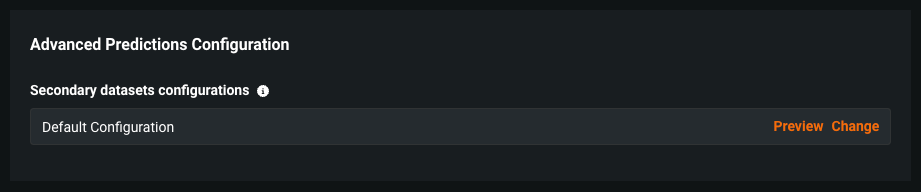

Secondary datasets for Feature Discovery

Feature Discovery identifies and generates new features from multiple datasets so that you no longer need to perform manual feature engineering to consolidate multiple datasets into one. This process is based on relationships between datasets and the features within those datasets. DataRobot provides an intuitive relationship editor that allows you to build and visualize these relationships. DataRobot’s Feature Discovery engine analyzes the graphs and the included datasets to determine a feature engineering “recipe” and, from that recipe, generates secondary features for training and predictions. While configuring the deployment settings, you can change the selected secondary dataset configuration.

| Setting | Description |
|---|---|
| Secondary datasets configurations | Previews the dataset configuration or provides an option to change it. By default, DataRobot makes predictions using the secondary datasets configuration defined when starting the project. Click Change to select an alternative configuration before uploading a new primary dataset. |

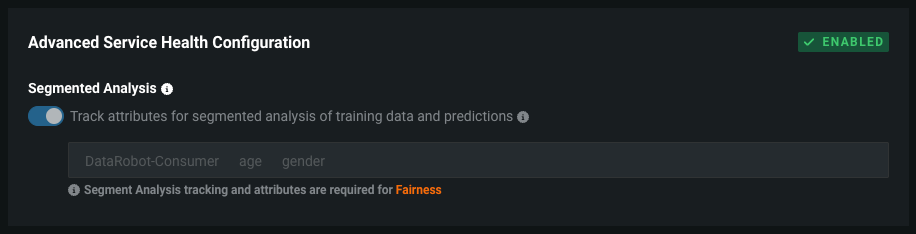

Advanced service health configuration

Segmented Analysis identifies operational issues with training and prediction data requests for a deployment. DataRobot enables drill-down analysis of data drift and accuracy statistics by filtering them into unique segment attributes and values.

| Setting | Description |
|---|---|
| Track attributes for segmented analysis of training data and predictions | Enables DataRobot to monitor deployment predictions by segments (for example, by categorical features). This setting requires training data and is required to enable Fairness monitoring. |
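Conceptually, segmented analysis groups prediction rows by a segment attribute before computing statistics. The sketch below, with hypothetical column names, computes a row count and mean prediction per segment value; DataRobot computes its own drift and accuracy statistics per segment, and this only illustrates the grouping step.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping, Tuple


def segment_summary(rows: Iterable[Mapping[str, object]], segment_attribute: str,
                    value_column: str = "prediction") -> Dict[object, Tuple[int, float]]:
    """Return (row count, mean value) for each value of the segment attribute."""
    totals: Dict[object, List[float]] = defaultdict(lambda: [0, 0.0])
    for row in rows:
        bucket = totals[row[segment_attribute]]
        bucket[0] += 1
        bucket[1] += float(row[value_column])
    return {segment: (int(count), total / count) for segment, (count, total) in totals.items()}


rows = [
    {"region": "east", "prediction": 1.0},
    {"region": "east", "prediction": 3.0},
    {"region": "west", "prediction": 2.0},
]
print(segment_summary(rows, "region"))
```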

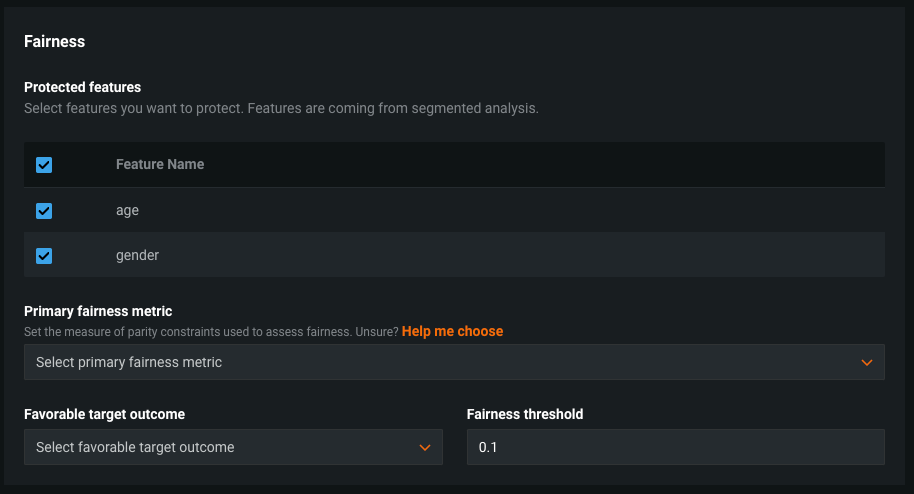

Fairness

Fairness allows you to configure settings for your deployment to identify any biases in the model's predictive behavior. If fairness settings are defined prior to deploying a model, the fields are automatically populated. For additional information, see the section on defining fairness tests.

| Setting | Description |
|---|---|
| Protected features | Identifies the dataset columns to measure fairness of model predictions against; must be categorical. |
| Primary fairness metric | Defines the statistical measure of parity constraints used to assess fairness. |
| Favorable target outcome | Defines the outcome value perceived as favorable for the protected class relative to the target. |
| Fairness threshold | Defines the fairness threshold to measure if a model performs within appropriate fairness bounds for each protected class. |
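To make the fairness settings concrete, the sketch below uses proportional parity as one example of a primary fairness metric: each protected class's favorable-outcome rate divided by the highest class rate, compared against a fairness threshold. The choice of metric, the column names, and the 0.8 threshold in the example are illustrative assumptions, not a statement of DataRobot's defaults or implementation.

```python
from typing import Dict, Iterable, Mapping


def proportional_parity(rows: Iterable[Mapping[str, object]], protected_feature: str,
                        favorable_outcome: object,
                        outcome_column: str = "prediction") -> Dict[object, float]:
    """Favorable-outcome rate of each protected class relative to the best-off class."""
    totals: Dict[object, int] = {}
    favorable: Dict[object, int] = {}
    for row in rows:
        cls = row[protected_feature]
        totals[cls] = totals.get(cls, 0) + 1
        if row[outcome_column] == favorable_outcome:
            favorable[cls] = favorable.get(cls, 0) + 1
    if not totals:
        raise ValueError("no rows to evaluate")
    rates = {cls: favorable.get(cls, 0) / n for cls, n in totals.items()}
    best = max(rates.values())
    # If no class ever receives the favorable outcome, treat all classes as equal.
    return {cls: (rate / best if best > 0 else 1.0) for cls, rate in rates.items()}


def within_fairness_bounds(scores: Dict[object, float], threshold: float) -> Dict[object, bool]:
    """A class passes when its parity score is at or above the fairness threshold."""
    return {cls: score >= threshold for cls, score in scores.items()}


rows = (
    [{"group": "A", "prediction": "approved"}] * 2
    + [{"group": "A", "prediction": "denied"}] * 2
    + [{"group": "B", "prediction": "approved"}]
    + [{"group": "B", "prediction": "denied"}] * 3
)
scores = proportional_parity(rows, "group", "approved")
print(scores, within_fairness_bounds(scores, threshold=0.8))
```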

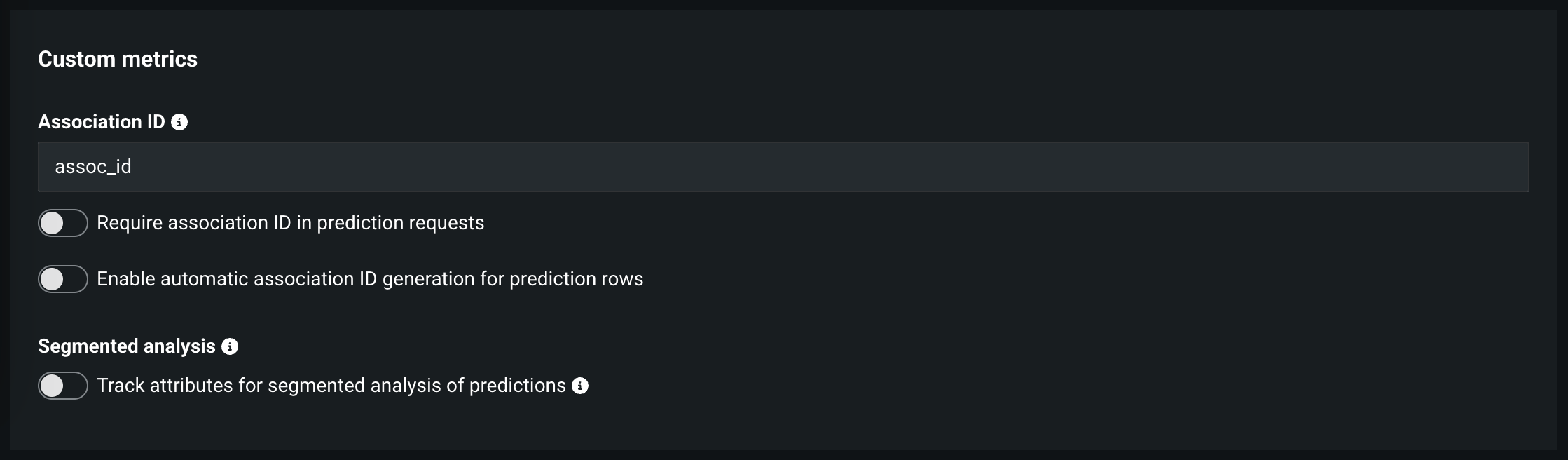

Custom metrics

For generative AI deployments, configure these settings to monitor data quality and custom metrics.

| Setting | Description |
|---|---|
| Association ID | Specifies the name of the column that contains the association ID in your model's prediction dataset. Association IDs are required for setting up accuracy tracking in a deployment. The association ID functions as an identifier for your prediction dataset so you can later match outcome data (also called "actuals") with those predictions. |
| Require association ID in prediction requests | Requires your prediction dataset to include a column whose name matches the name you entered in the Association ID field. When enabled, prediction requests that are missing the column return an error. Note that the Create deployment button is inactive until you enter an association ID or turn off this toggle. This setting cannot be enabled together with Enable automatic association ID generation for prediction rows. |
| Enable automatic association ID generation for prediction rows | When an association ID column name is defined, allows DataRobot to populate the association ID values automatically. This setting cannot be enabled together with Require association ID in prediction requests. |
| Track attributes for segmented analysis of training data and predictions | Enables DataRobot to monitor deployment predictions by segments (for example, by categorical features). This setting requires training data and is required to enable Fairness monitoring. |

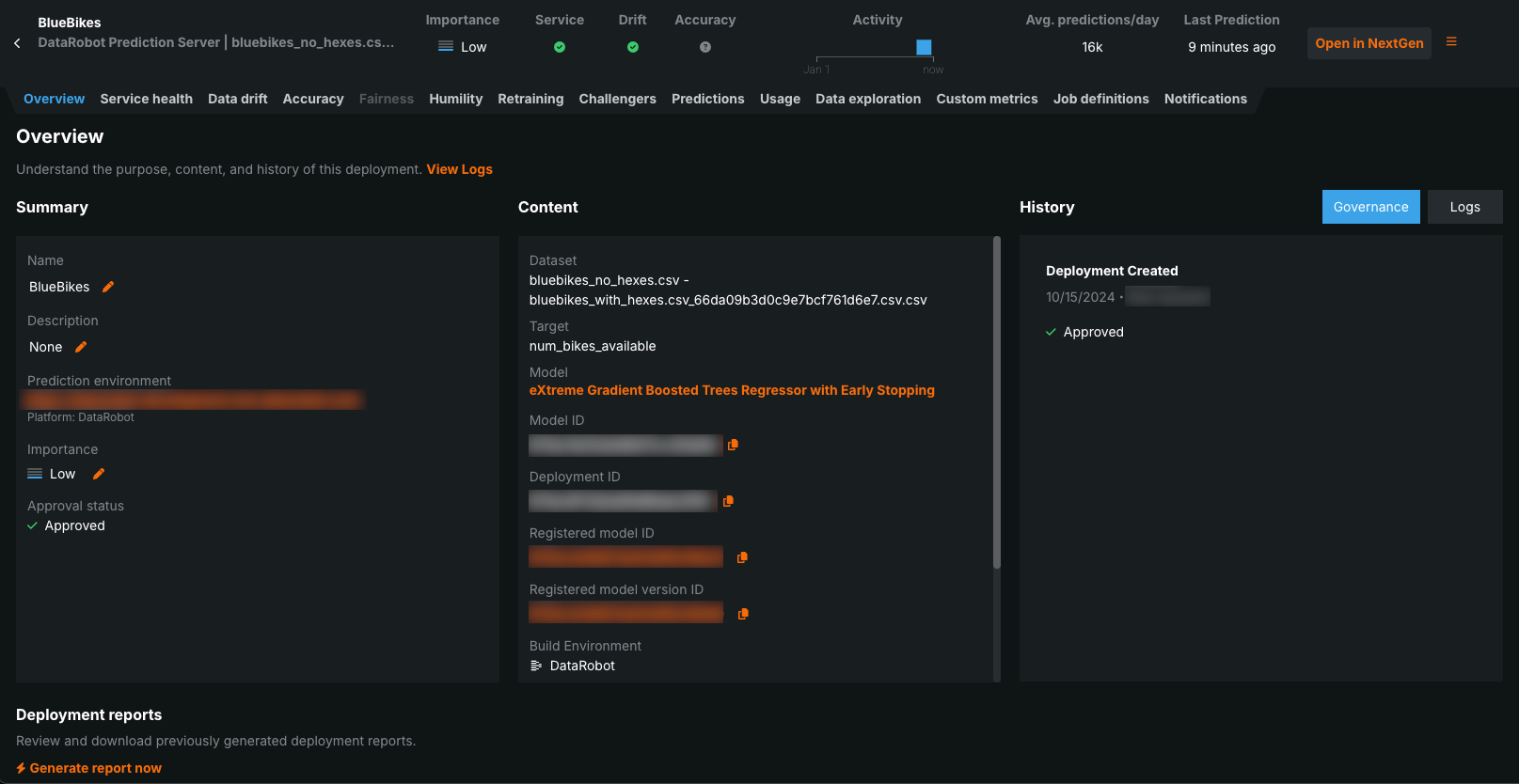

Deploy the model

After you add the available data and your model is fully defined, click Deploy model at the top of the screen.

Note

If the Deploy model button is inactive, be sure to either specify an association ID (required for enabling accuracy monitoring) or toggle off Require association ID in prediction requests.

The Creating deployment message appears, indicating that DataRobot is creating the deployment. After the deployment is created, the Overview tab opens.

Click the arrow to the left of the deployment name to return to the deployment inventory.