Predictions

You can make predictions with models using engineered features in the same way as you do with any other DataRobot model. To make predictions, use either:

- Make Predictions to generate predictions, in-app, on a scoring dataset.

- Deploy to create a deployment capable of batch predictions and prediction integrations.

When using Feature Discovery with either method, the dataset configuration options available at prediction time are the same. The sections below first describe how to select a configuration and then describe the options on each tab.

Select a secondary dataset configuration

When applying a dataset configuration to prediction data, Feature Discovery allows you to use:

- The default configuration.

- An alternative, existing configuration.

- A newly created configuration.

If the feature list used by a model doesn’t rely on any of the secondary datasets supplied—because no features were derived or a custom feature list excluded the Feature Discovery features, for example—DataRobot supplies an informational message.
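The condition behind that informational message can be pictured as a simple membership check. The following is a minimal sketch, not DataRobot's implementation; the function name and the feature names are hypothetical and chosen only for illustration.

```python
from typing import Iterable, Set


def uses_secondary_datasets(feature_list: Iterable[str], discovered: Set[str]) -> bool:
    """Return True if any feature in the list was derived by Feature Discovery."""
    return any(name in discovered for name in feature_list)


# Hypothetical feature names, for illustration only.
discovered_features = {"transactions_amount_sum_30d", "transactions_amount_avg_7d"}
custom_list = ["age", "income"]  # excludes all discovered features
default_list = ["age", "income", "transactions_amount_sum_30d"]

print(uses_secondary_datasets(custom_list, discovered_features))   # False
print(uses_secondary_datasets(default_list, discovered_features))  # True
```

When the check is false, no secondary dataset configuration is needed for that model, which is the case the informational message covers.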

Use the default configuration

By default, DataRobot makes predictions using the secondary dataset configuration defined by the relationships used when building the project. You can view this configuration by clicking the Preview link.

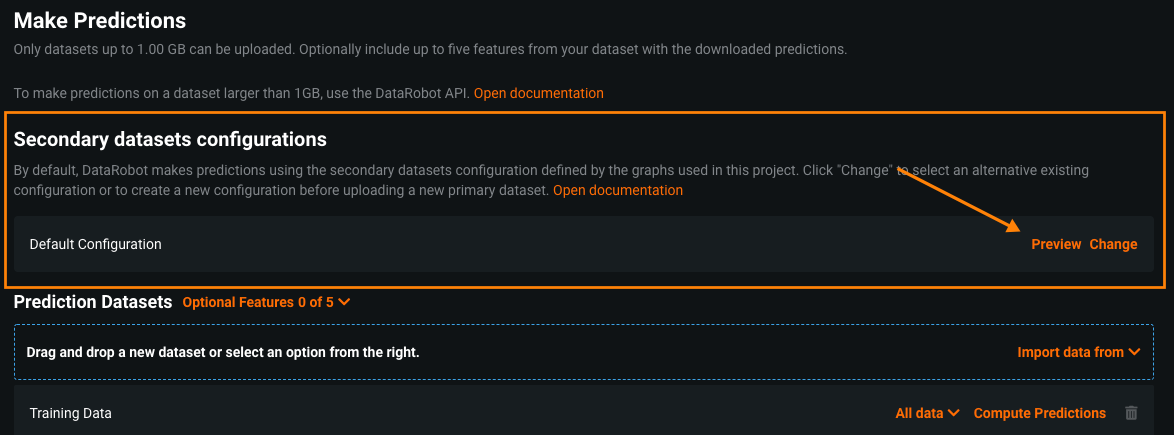

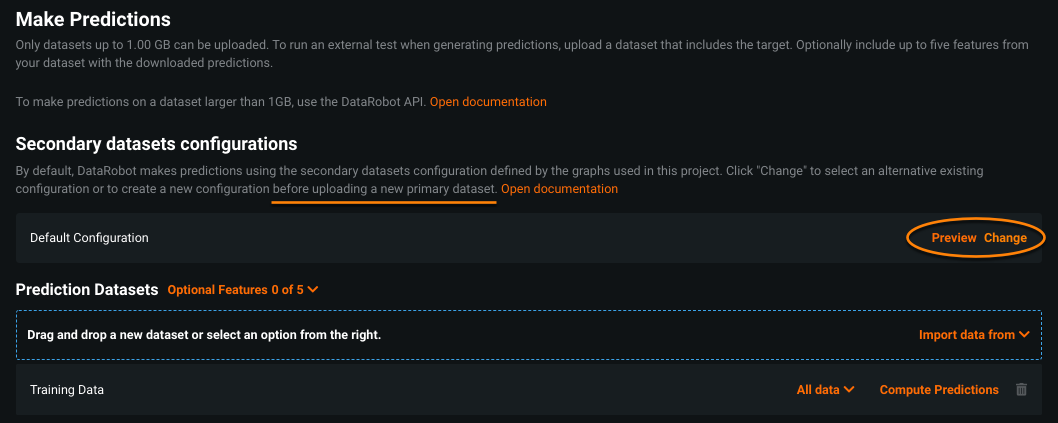

From Make Predictions:

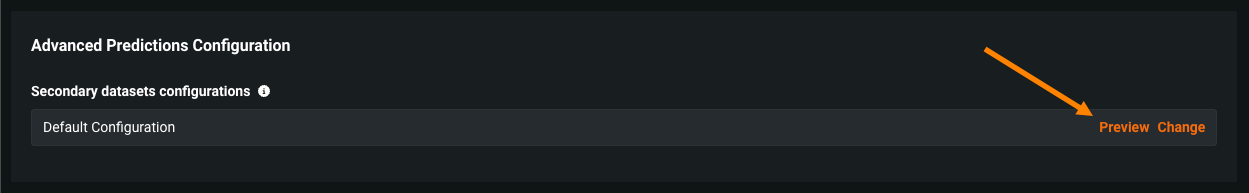

From Deploy:

The default configuration cannot be modified or deleted from the predictions tabs.

Use an alternate configuration

To select an alternative to the default configuration—or to create a new configuration—click Change. When you create new secondary dataset configurations, they become available to all models in the project that use the same feature list. Note that you must make any changes to the secondary dataset configuration before uploading your prediction dataset.

Apply an existing configuration

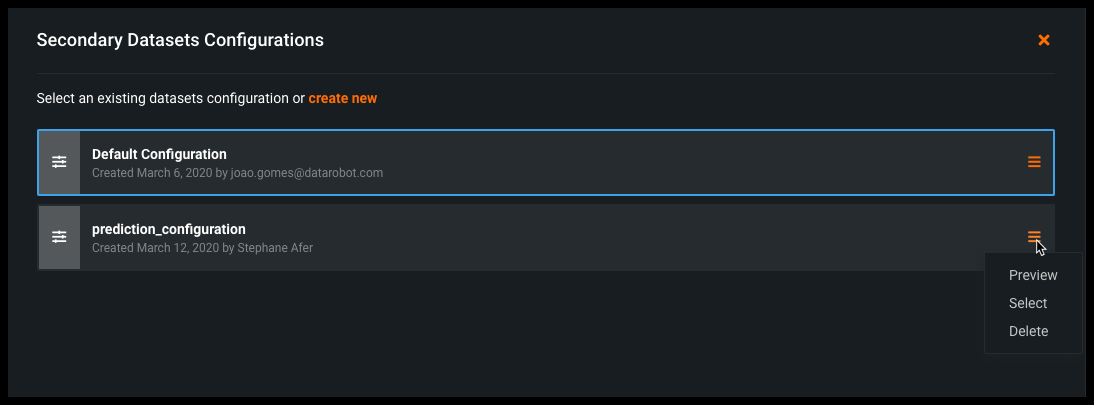

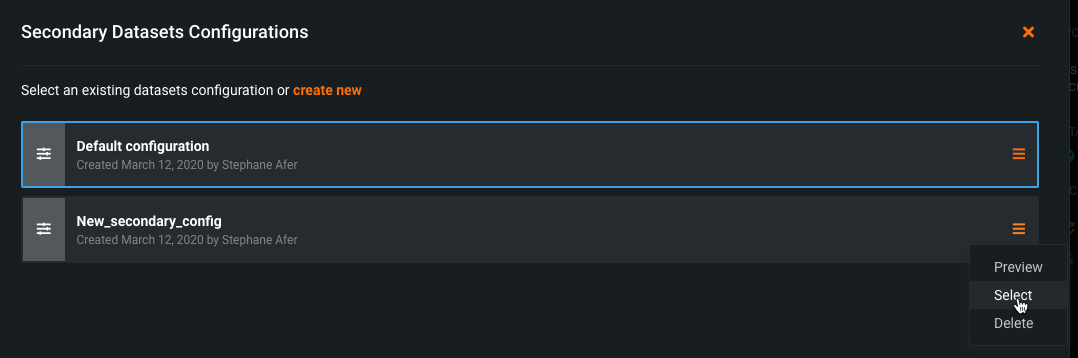

To apply a different, existing configuration, click Change to open the Secondary Datasets Configurations modal:

Expand the menu and select one of the following options:

- To preview a configuration without selecting it, click the configuration name and select Preview from the menu. The Secondary Datasets Configurations modal opens.

- To select a configuration other than the currently selected item, click Select from the menu. The item is highlighted and the Secondary Datasets Configurations modal opens.

- Click Delete to remove a configuration. You cannot delete the default configuration.

Create a new configuration

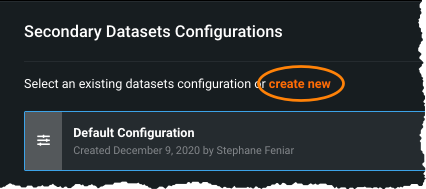

To create a new configuration, click Change and then create new in the resulting Secondary Datasets Configurations modal:

The Secondary Datasets Configurations modal expands to include configuration fields.

After you change or create a configuration, the entry is listed in the secondary dataset configuration preview and appears as the selected configuration on the Make Predictions or Deploy page. Select the configuration to use when making predictions with this model and click Apply.

Secondary Datasets Configurations modal

Access the modal by clicking Preview, Change > Preview, or Change > create new.

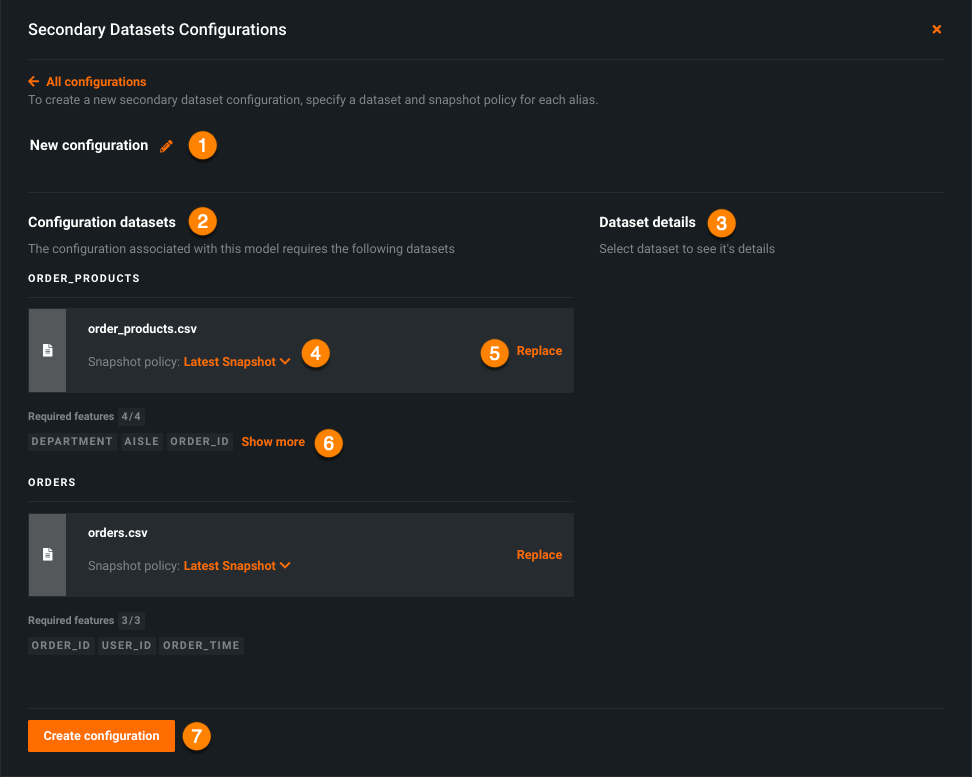

Complete the fields as follows:

| Field | Description | |

|---|---|---|

| 1 | Configuration name | The name of the configuration, "New Configuration" by default. Click the pencil icon to change. |

| 2 | Configuration datasets | The dataset(s) that make up the relationship for the new or existing secondary configuration. |

| 3 | Dataset details | Basic dataset information, similar but less detailed than the information available from the AI Catalog. Click on a configuration dataset to display. |

| 4 | Snapshot policy | The snapshot policy to apply to the dataset. By default, DataRobot applies the snapshot policy you defined in the graphs to your secondary datasets. |

| 5 | Replace | A tool to choose which dataset(s) to use as the basis of the relationship to the primary dataset. Clicking "Replace" provides a list of available files in the AI Catalog. Click a dataset to view dataset details; click Use dataset in configuration to add the dataset and return to the previous screen. |

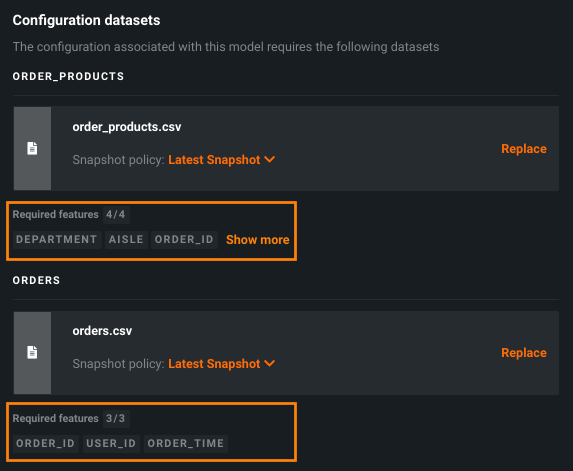

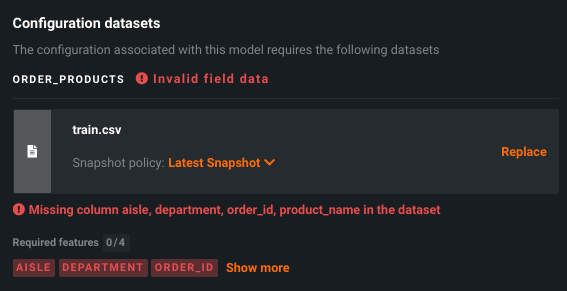

| 6 | Required features | Lists the minimum features needed to support the configuration, based on the original relationships configured. If features are missing, DataRobot returns a validation error. See Features required in relationships, below. |

| 7 | Create configuration | DataRobot automatically runs validation as you set up the configuration. When validated, click to create a new configuration. |

Snapshot reference

DataRobot applies the snapshot policy for a secondary dataset as follows:

- Dynamic: DataRobot pulls data from the associated data connection at the time you upload a new primary dataset.

- Latest snapshot: DataRobot uses the latest snapshot available when you upload a new primary dataset.

- Specific snapshot: DataRobot uses a specific snapshot, even if a more recent snapshot exists.
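The three policies can be read as a small decision rule applied at upload time. The sketch below illustrates that rule only; the `SnapshotPolicy` enum, the `Snapshot` class, and `resolve_source` are hypothetical names introduced here and are not part of any DataRobot API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class SnapshotPolicy(Enum):
    # Illustrative labels for the three policies described above.
    DYNAMIC = "dynamic"
    LATEST = "latest"
    SPECIFIC = "specific"


@dataclass
class Snapshot:
    version_id: str
    created_at: int  # creation time, e.g. a Unix timestamp


def resolve_source(policy: SnapshotPolicy,
                   snapshots: List[Snapshot],
                   pinned_version_id: Optional[str] = None) -> str:
    """Return which data source is used when a primary dataset is uploaded."""
    if policy is SnapshotPolicy.DYNAMIC:
        # Data is pulled from the data connection at upload time.
        return "live-connection"
    if policy is SnapshotPolicy.LATEST:
        if not snapshots:
            raise ValueError("no snapshots available")
        return max(snapshots, key=lambda s: s.created_at).version_id
    # SPECIFIC: use the pinned snapshot even if a newer one exists.
    for snapshot in snapshots:
        if snapshot.version_id == pinned_version_id:
            return snapshot.version_id
    raise ValueError(f"snapshot {pinned_version_id!r} not found")


history = [Snapshot("v1", 100), Snapshot("v2", 200)]
print(resolve_source(SnapshotPolicy.LATEST, history))          # v2
print(resolve_source(SnapshotPolicy.SPECIFIC, history, "v1"))  # v1
print(resolve_source(SnapshotPolicy.DYNAMIC, history))         # live-connection
```

Note that only the dynamic branch touches the data connection, which is why that policy is the one that may require credentials.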

Note that:

- Changes apply to primary datasets that are uploaded after the changes are saved; you must review the secondary datasets before uploading a primary dataset.

- Changes are only applicable while you are on the Make Predictions or Deploy page. Leaving or refreshing the page causes the default snapshot policy to apply to a new primary dataset.

Features required in relationships

After the Feature Discovery and reduction workflow, DataRobot may prune some engineered features that were not used for modeling. This pruning may mean that some raw features are never used and therefore do not need to be included in the secondary datasets used for predictions. In other words, if the final models use only a subset of the raw features, your secondary datasets do not need to include the unused ones. This allows you to collect and upload a subset of datasets and/or features, which can result in faster prediction and deployment times.

When creating secondary dataset configurations, the modal initially displays the datasets used when building the model. Below the dataset entry, DataRobot reports the raw features used to engineer new features (the required raw features). Any replacement datasets must include those features.

If you replace a dataset with an alternative that does not include the required features, DataRobot returns a validation error.
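To make the idea concrete, the sketch below derives the required raw features from the engineered features that survived pruning and then checks a replacement dataset against them. It is an illustration under stated assumptions: the lineage mapping, the feature names, and both function names are hypothetical, and DataRobot's own validation may work differently.

```python
from typing import Dict, Iterable, List, Set


def required_raw_features(kept_engineered: Iterable[str],
                          lineage: Dict[str, Set[str]]) -> Set[str]:
    """Collect the raw features behind the engineered features that were kept."""
    required: Set[str] = set()
    for feature in kept_engineered:
        required |= lineage[feature]
    return required


def missing_features(required: Set[str], replacement_columns: Iterable[str]) -> List[str]:
    """Return required features absent from a replacement dataset, sorted by name."""
    return sorted(required - set(replacement_columns))


# Hypothetical lineage: engineered feature -> raw features it was derived from.
lineage = {
    "sum_amount_30d": {"amount", "date"},
    "count_merchant_7d": {"merchant", "date"},
    "avg_fee_30d": {"fee", "date"},
}
kept = ["sum_amount_30d", "count_merchant_7d"]  # avg_fee_30d was pruned

required = required_raw_features(kept, lineage)
print(sorted(required))  # ['amount', 'date', 'merchant']

# "fee" is not required, so a replacement dataset may omit it,
# but this one lacks "merchant" and would fail validation.
print(missing_features(required, ["amount", "date", "customer_id"]))  # ['merchant']
```

An empty result from `missing_features` corresponds to a replacement dataset that passes the required-features check.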

Use Make Predictions

If you accessed the secondary dataset configurations from the Make Predictions tab, complete the remaining fields as you would with any other DataRobot model. When you upload test data to run against the model, DataRobot validates that the data includes the required features and returns a validation error if it does not. If validation passes, the uploaded dataset is added to the list of available datasets and uses the new configuration.

Remember that you must finalize the secondary dataset configuration before uploading a dataset.

Once you upload a new scoring dataset, DataRobot extracts relevant data from secondary datasets that were used in the associated project relationships. Note that if the secondary datasets or selected snapshot policy are dynamic, DataRobot prompts for authentication. The Import data from... button is locked until credentials are provided.
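The locking behavior described above amounts to a small gate on the upload action. The following sketch models it with plain strings for the policies; the function name and the dataset names are hypothetical, and the logic is an interpretation of the paragraph above rather than DataRobot code.

```python
from typing import Dict


def upload_enabled(policies: Dict[str, str], credentials_provided: bool) -> bool:
    """Return True when a scoring dataset can be uploaded.

    Upload is blocked only if at least one secondary dataset uses a
    dynamic policy and credentials have not been supplied yet.
    """
    requires_auth = any(policy == "dynamic" for policy in policies.values())
    return credentials_provided or not requires_auth


# Hypothetical secondary datasets and their snapshot policies.
policies = {"transactions": "dynamic", "customers": "latest"}

print(upload_enabled(policies, credentials_provided=False))  # False
print(upload_enabled(policies, credentials_provided=True))   # True
print(upload_enabled({"customers": "latest"}, credentials_provided=False))  # True
```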

Use Deploy for batch predictions

You can make batch predictions using the Deploy tab. Once deployed, the model is added to the Deployments page and model deployment and management functionality is available.

To make batch predictions:

-

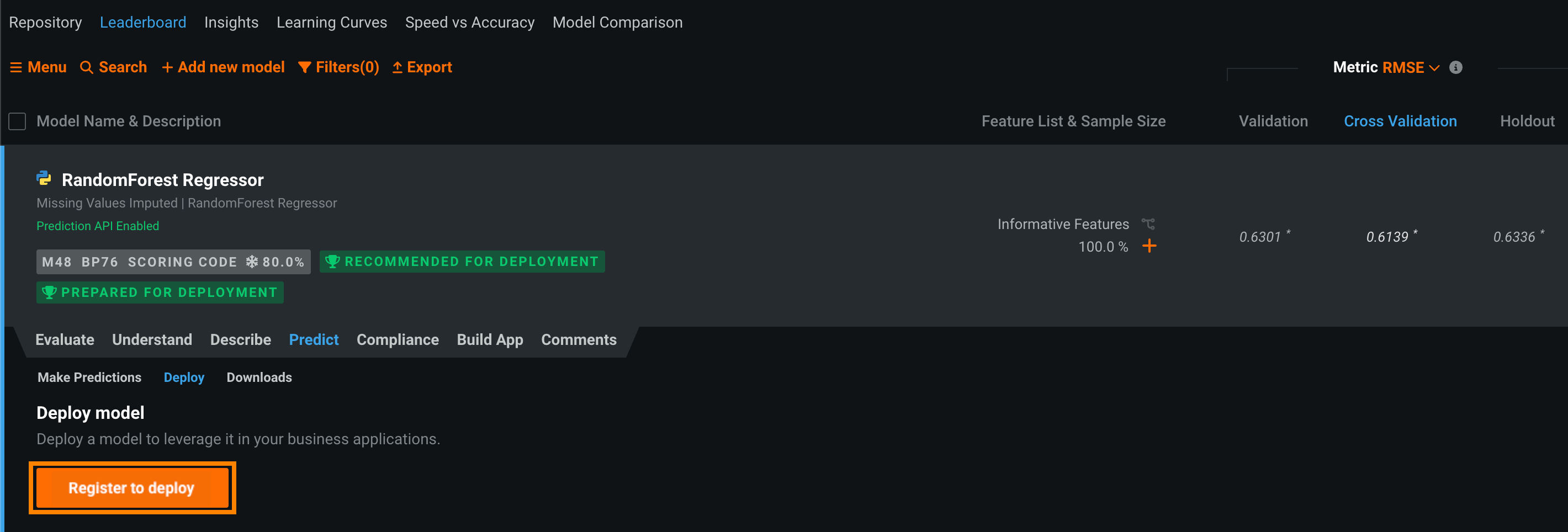

From the chosen model, select Predict > Deploy. The Deploy tab opens for configuration. If the model is not the one chosen and prepared for deployment by DataRobot, consider using the Prepare for deployment option.

-

Under Deploy model, click Register to deploy.

-

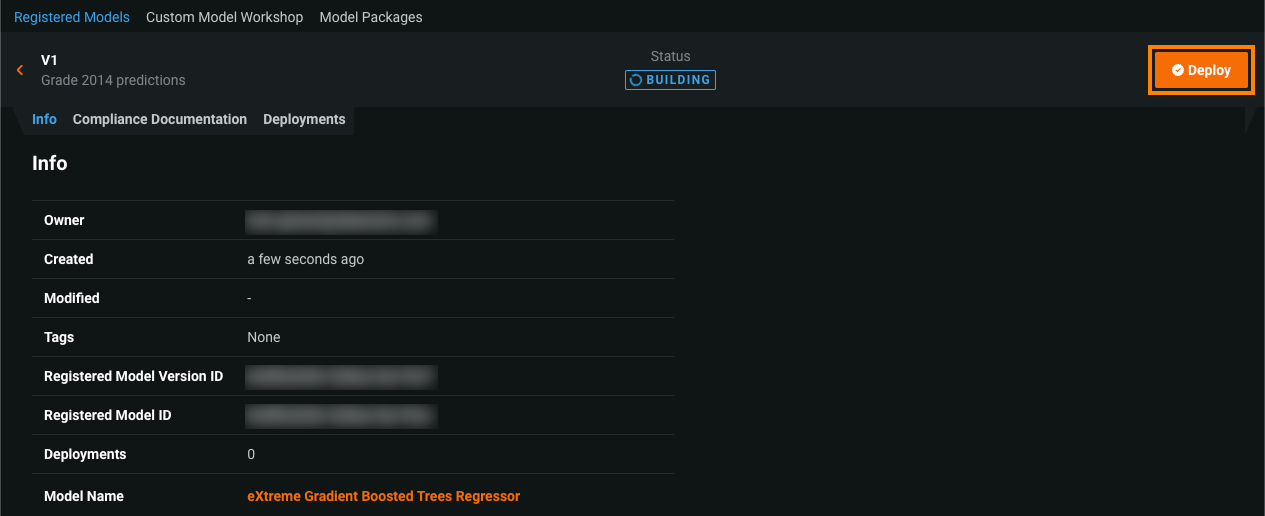

Configure the model registration settings and then click Add to registry. The model opens on the Model Registry > Registered Models tab.

-

While the registered model builds, click Deploy and then configure the deployment settings.

-

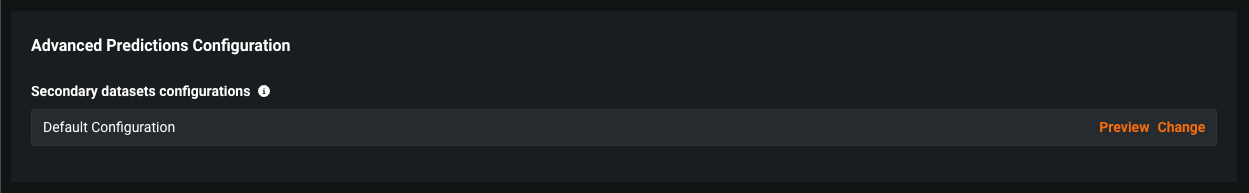

In the deployment settings, click Show advanced options, navigate to the Advanced Predictions Configuration section, and set the Secondary datasets configuration. Reference the details on working with these configurations:

-

If the secondary dataset configuration was created with a dynamic snapshot policy, authenticate to proceed. When authentication succeeds, or if it is not required, click the Create deployment button (upper right corner). If the button is not activated, be sure you have correctly configured the association ID (If association ID is toggled on, you must supply the feature name containing the IDs.)

-

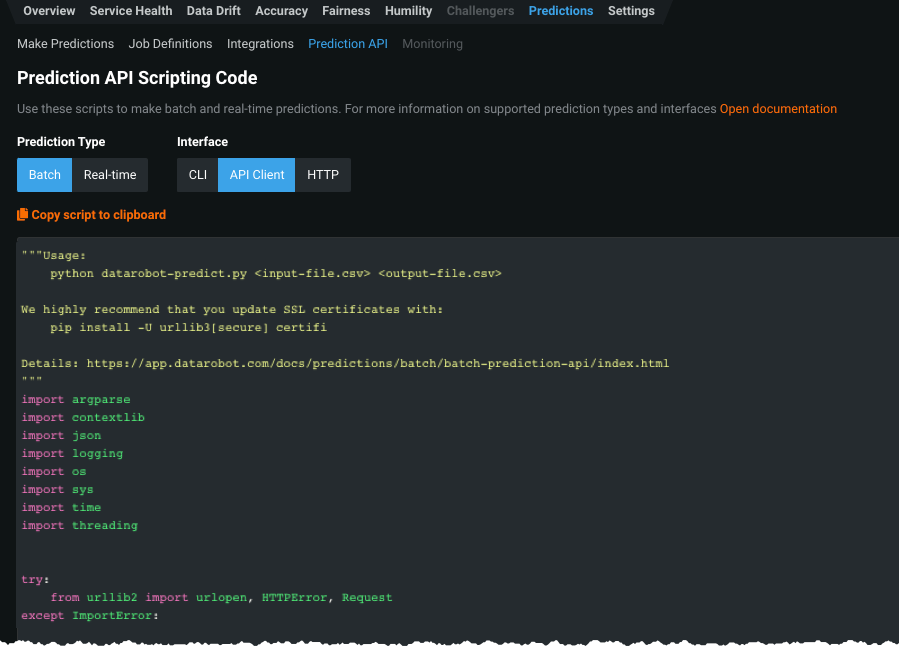

When deployment completes, DataRobot opens the Overview page for your deployment on the Deployments page. Select Predictions > Prediction API tab, select Batch and API Client to access the snippet required for making batch predictions:

-

Click the Copy script to clipboard link.

Follow the sample and make the necessary changes when you want to integrate the model, via API, into your production application.

-

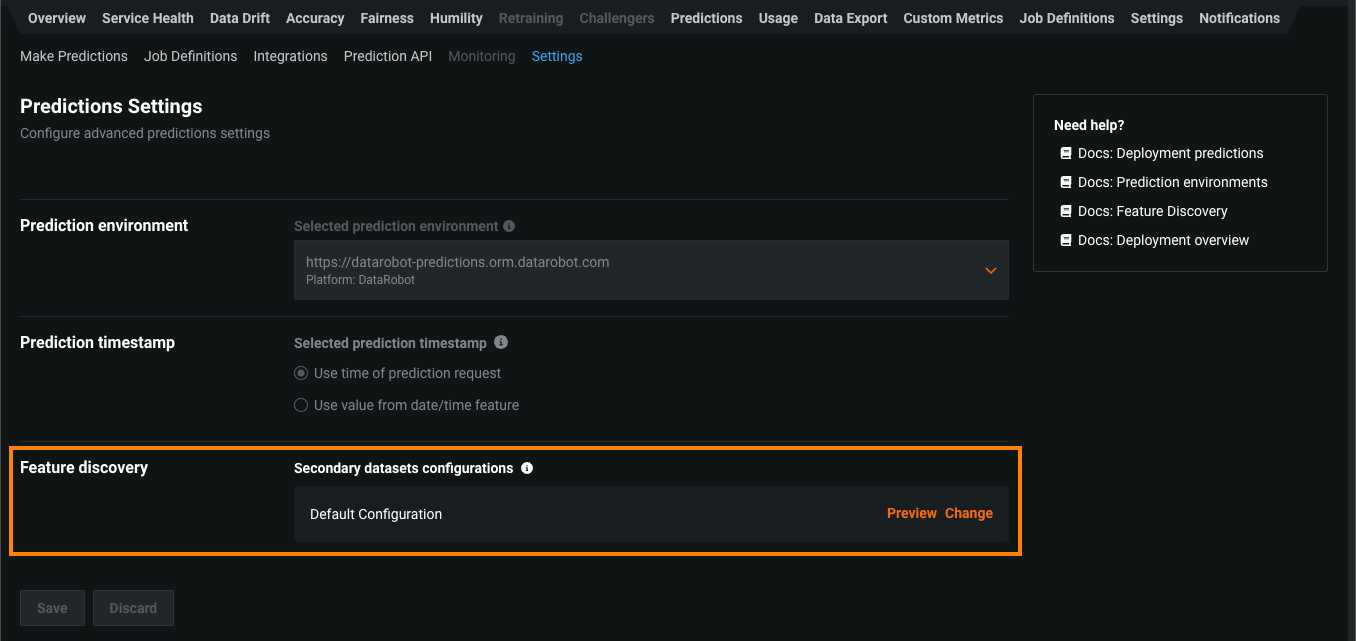

You can view the secondary dataset configuration used in the deployment from the Predictions > Settings tab.

Click Preview to open a modal displaying the configuration.

Batch prediction considerations

- Only DataRobot models are supported; external and custom models are not.

- The governance workflow and model package export are not supported for Feature Discovery models.

- You cannot replace a Feature Discovery model with a non-Feature Discovery model or vice versa.

- You cannot change the configuration once a deployment is created. To use a different configuration, you must create a new deployment.

- When a Feature Discovery model is replaced with another Feature Discovery model, the configuration used by the new model becomes the default configuration.

- Predictions from Feature Discovery models are slower than those from other DataRobot models because feature engineering is also applied.
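The two model-replacement rules in the list above can be summarized in a short sketch. The `Model` class and `replace_model` function are hypothetical and exist only to illustrate the rules; a `config_id` of `None` stands in for a model that does not use Feature Discovery.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Model:
    name: str
    config_id: Optional[str]  # None means the model does not use Feature Discovery


def replace_model(current: Model, candidate: Model) -> Optional[str]:
    """Return the default configuration after replacement, or raise if not allowed."""
    current_is_fd = current.config_id is not None
    candidate_is_fd = candidate.config_id is not None
    if current_is_fd != candidate_is_fd:
        raise ValueError(
            "cannot swap a Feature Discovery model with a non-Feature Discovery model"
        )
    # The new model's configuration becomes the default configuration.
    return candidate.config_id


fd_a = Model("fd-a", "config-1")
fd_b = Model("fd-b", "config-2")
plain = Model("plain", None)

print(replace_model(fd_a, fd_b))  # config-2
```

Calling `replace_model(fd_a, plain)` raises `ValueError` in this sketch, mirroring the restriction on mixing model types.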