SHAP Prediction Explanations

SHAP vs XEMP

This section describes SHAP-based Prediction Explanations. See also the general description of Prediction Explanations for an overview of SHAP and XEMP methodologies.

In the DataRobot Classic UI, to retrieve SHAP-based Prediction Explanations, you must enable the Include only models with SHAP value support advanced option prior to model building.

SHAP-based explanations help you understand what drives predictions on a row-by-row basis by estimating how much each feature contributes to a given prediction's difference from the average. They answer why a model made a certain prediction. For example, what drives a customer's decision to buy: age, gender, or buying habits? They then help identify the impact of each factor on the decision. They are intuitive, unbounded (computed for all features), fast, and, because SHAP is open source, transparent. SHAP not only helps you understand model behavior quickly, it also lets you easily validate whether a model adheres to business rules.

See the SHAP reference for additional technical detail. See the associated SHAP considerations for important additional information.

Preview Prediction Explanations

SHAP-based Prediction Explanations, when previewed, display the top five features for each row. This provides a general "intuition" of model performance. You can then quickly compute and download explanations for the entire training dataset to perform deeper analysis. See SHAP calculations for more detail.

You can also:

- Upload external datasets and manually compute (and download) explanations.
- Access explanations via the API, for both deployed and Leaderboard models.

Interpret SHAP Prediction Explanations

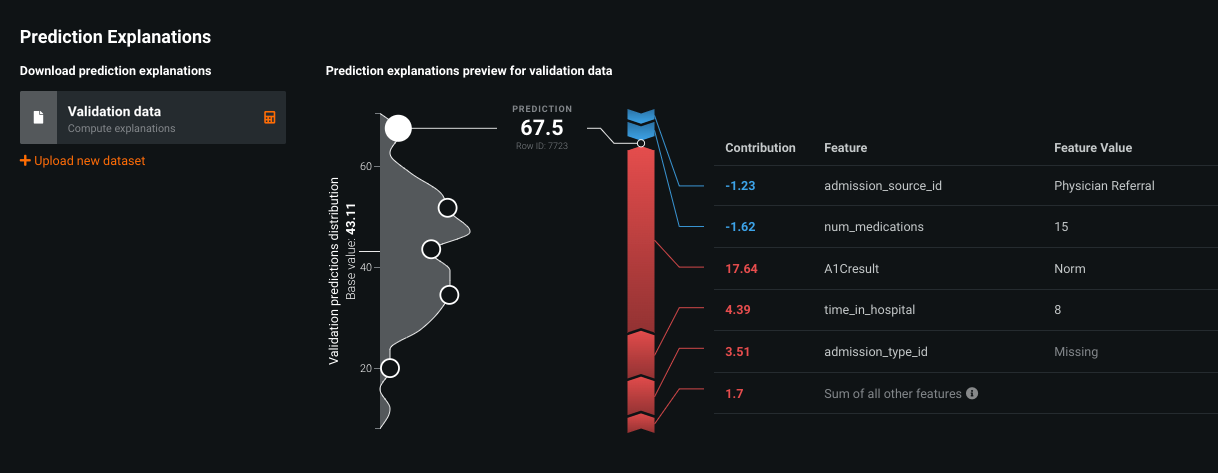

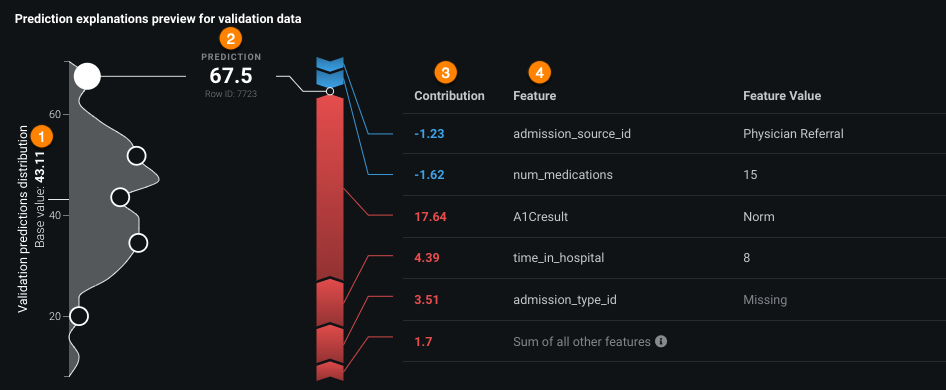

Open the Prediction Explanations tab to see an interactive preview of the top five features that contribute most to the difference from the average (base) prediction value. In other words, how much does each feature explain the difference? For example:

The elements describe:

| | Element | Value in example |
|---|---|---|
| 1 | Base (average) prediction value | 43.11 |
| 2 | Prediction value for the row | 67.5 |
| 3 | Contribution, or how much each feature explains the difference between the base and prediction values | Varies from row to row and from feature to feature |
| 4 | Top 5 features | Varies from row to row |

Subtract the base prediction value from the row prediction value to determine the difference from the average, in this case approximately 24.4 (67.5 - 43.11 = 24.39). The contribution then describes how much each listed feature is responsible for pushing the target away from the average (the allocation of that 24.4 among the features).

SHAP is additive, which means that the sum of all contributions for all features equals the difference between the base and row prediction values. (See additivity details here.)
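The additivity property can be checked with a few lines of arithmetic. The sketch below uses the base and prediction values from the example; the feature names and individual contribution values are hypothetical and were chosen only so that they sum to the stated difference.

```python
import math

base_value = 43.11   # base (average) prediction value from the example
prediction = 67.5    # prediction value for the row from the example

# Hypothetical contributions: the source gives only their total, not these values.
contributions = {
    "feature_a": 15.20,
    "feature_b": 9.75,
    "feature_c": -4.06,
    "feature_d": 2.50,
    "all_other_features": 1.00,
}

difference = prediction - base_value
total = sum(contributions.values())

# Additivity: contributions sum to (prediction - base), up to float rounding.
assert math.isclose(total, difference, abs_tol=1e-9)
print(round(difference, 2))  # 24.39
```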

Some additional notes on interpreting the visualization:

- Contributions can be either positive or negative. Features that push the prediction value higher display in red and are positive numbers. Features that reduce the prediction display in blue and are negative numbers.
- The arrows on the plot are proportional to the SHAP values that positively or negatively affect the observed prediction.
- The "Sum of all other features" is the sum of the contributions of all features that are not among the top five contributors.

See the SHAP reference for information on additivity (including possible breakages).
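As a rough illustration of how a preview row could be assembled, the sketch below splits a set of contributions into the top five and a single "Sum of all other features" value, and labels each listed feature by direction. The feature names and values are hypothetical, and ranking by absolute contribution is an assumption for this sketch, not a statement of how DataRobot orders features.

```python
def summarize(contributions, top_n=5):
    """Split contributions into the top_n largest (by absolute value) and a remainder."""
    # Assumption: "top" means largest absolute contribution.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    top, rest = ranked[:top_n], ranked[top_n:]
    # Positive contributions raise the prediction (red); negative ones lower it (blue).
    rows = [(name, value, "red" if value > 0 else "blue") for name, value in top]
    other_total = sum(value for _, value in rest)
    return rows, other_total


# Hypothetical contributions for one row.
contributions = {
    "f1": 12.0, "f2": -8.5, "f3": 6.25, "f4": 3.0,
    "f5": -2.5, "f6": 1.5, "f7": -0.75,
}

rows, other_total = summarize(contributions)
for name, value, color in rows:
    print(f"{name}: {value:+.2f} ({color})")
print(f"Sum of all other features: {other_total:+.2f}")
```

Because the remainder is kept rather than discarded, the listed values plus the remainder still add up to the full difference from the base value.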

Deep dive: SHAP preview

The SHAP preview is based on validation data, even when the model is trained into the validation partition. When the model has been trained into validation, the option to download SHAP explanations for the full validation data is not available, because such a model makes in-sample predictions on those rows (predictions on rows that were also used to train the model). Because this type of prediction does not accurately represent what the model will do on new, unseen data, DataRobot does not provide it and instead uses a technique called stacked predictions. It is not possible to calculate SHAP explanations for Holdout data.

To understand this, consider that during cross-validation, DataRobot generates a series of models, equal to the number of CV partitions (or "folds," five by default). If you download training predictions, you receive predictions on all rows. These predictions are “stacked”—each row is predicted by whichever of the sub-models did not use it for training.

By contrast, all SHAP explanations are based on the “primary” model—the model trained on CV fold 1 (or a portion of it) and tested on the Validation fold. Applying that model to training rows outside of the Validation fold would result in predictions and explanations being in-sample. For the same reason, if the model is trained into validation, predictions on validation become in-sample instead of the usual out-of-sample.
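The idea behind stacked predictions can be sketched in plain Python. This is a toy illustration, not DataRobot's implementation: the fold assignment is a simple hypothetical round-robin, and each "sub-model" is just the mean target of the rows it was trained on.

```python
from statistics import mean


def stacked_predictions(targets, n_folds=5):
    """Return out-of-fold predictions: each row is scored by the one
    sub-model that did not see it during training."""
    n = len(targets)
    fold_of = [i % n_folds for i in range(n)]  # hypothetical fold assignment
    preds = [None] * n
    for fold in range(n_folds):
        # "Train" a toy sub-model (the mean target) on every row outside this fold.
        train = [t for t, f in zip(targets, fold_of) if f != fold]
        model_output = mean(train)
        # Score only the held-out rows with this sub-model.
        for i in range(n):
            if fold_of[i] == fold:
                preds[i] = model_output
    return preds


targets = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0, 80.0, 90.0, 100.0]
preds = stacked_predictions(targets)
print(preds[0])  # 60.0: mean of the eight rows outside fold 0
```

A single model trained on all ten rows would instead predict 55.0 for every row, including rows it had already seen, which is the in-sample situation the text describes.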

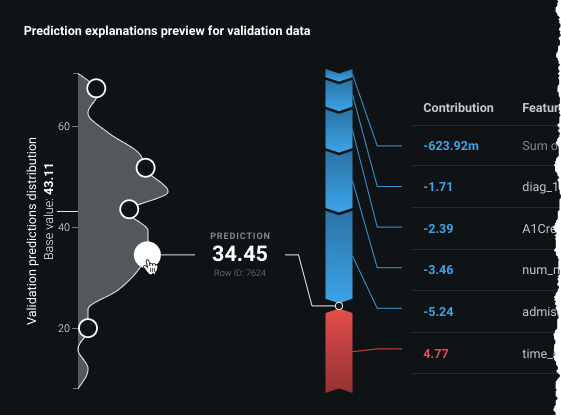

View points in the distribution

Use the prediction distribution component to click through a range of prediction values and understand how the top and bottom values are explained. In the chart, the Y-axis shows the prediction value, while the X-axis indicates the frequency.

Notice that if you look at a point near the bottom of the distribution, the contribution values show more blue than red values (more negative than positive contributions). This is because the majority of key features are pushing the prediction value lower.

Computing and downloading explanations

While DataRobot automatically computes the explanations for selected records, you can compute explanations for all records by clicking the calculator icon. DataRobot computes the remaining explanations and, when ready, activates a download button. Click to save the list of explanations as a CSV file. Note that the CSV will only contain the top 100 explanations for each record. To see all explanations, use the API.

Upload a dataset

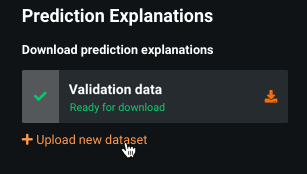

To compute explanations for additional data using the same model, click Upload new dataset:

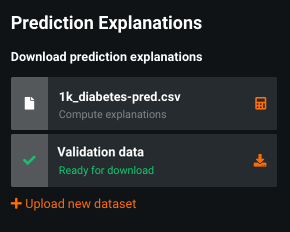

DataRobot opens the Make Predictions tab where you can upload a new, external dataset. When complete, return to Prediction Explanations, where the new dataset is listed in the download area.

Compute and then download explanations in the same way as with the training dataset. DataRobot runs computations for the entire external set.

Prediction Explanation calculations

DataRobot automatically computes SHAP Prediction Explanations. In the UI, SHAP initially returns the five most important features in each previewed row. Additional features are bundled and reported in Sum of all other features. (You can compute for all features as described above.) In the API, explanations for a given row are limited to the top 100 most important features in that row. If there are more features, they get bundled together in the shapRemainingTotal value. See the public API documentation for more detail.
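When a record is truncated to its most important features, the remainder value is what lets you reconstruct the row's prediction from the base value. The sketch below shows that arithmetic on a hypothetical record: only the `shapRemainingTotal` name comes from the text above, while the other keys (`explanations`, `feature`, `shap_value`), the helper name, and all values are assumptions made for illustration. Consult the public API documentation for the actual response format.

```python
def reconstruct_prediction(base_value, record):
    """Rebuild a prediction from the base value, the listed contributions,
    and the bundled remainder (relies on SHAP additivity holding)."""
    listed = sum(item["shap_value"] for item in record["explanations"])
    return base_value + listed + record["shapRemainingTotal"]


# Hypothetical record; a real one could list up to 100 features.
record = {
    "explanations": [
        {"feature": "f1", "shap_value": 5.0},
        {"feature": "f2", "shap_value": -2.0},
        {"feature": "f3", "shap_value": 1.5},
    ],
    "shapRemainingTotal": 0.25,  # bundled contribution of unlisted features
}

prediction = reconstruct_prediction(40.0, record)
print(prediction)  # 44.75
```

If the reconstructed value does not match the reported prediction, the SHAP reference's notes on additivity breakages are the place to look.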