Customizing time series projects

DataRobot provides default window settings (Feature Derivation and Forecast windows) and partition sizes for time series projects. These settings are based on the characteristics of the dataset and can generally be left as-is—they will result in robust models.

If you choose to modify the default configurations, keep in mind that setting up a project requires matching your actual prediction requests with your work environment. Modifying project settings out of context to increase accuracy independent of your use case often results in disappointing outcomes.

The following reference material describes how DataRobot determines defaults, requirements, and a selection of other settings, specifically covering:

- Guidance on setting window values.

- Understanding backtests, including:

  - Backtest settings.

  - Backtest importance.

  - The logic behind row selection and usage from training data.

- Understanding duration and row count.

- How DataRobot handles training and validation folds.

- How to change the training period.

Additionally, see the guidance on model retraining before deploying.

Tip

Read the real-world example to understand how gaps and window settings relate to data availability and production environments.

Set window values

Use the Feature Derivation Window to configure the periods of data that DataRobot uses to derive features for the modeling dataset.

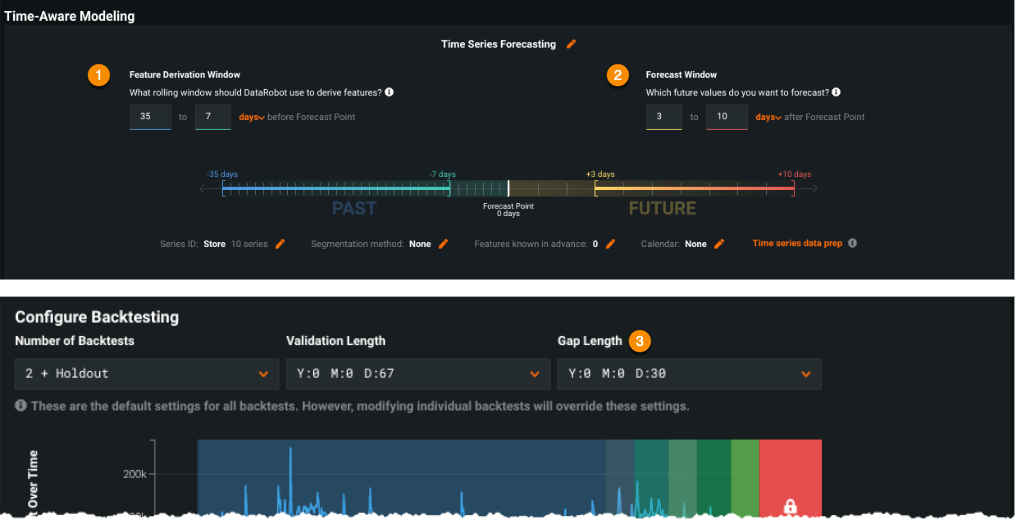

On the left, the Feature Derivation Window (1) constrains the time history used to derive features. That is, it defines how many values to look at, which determines how much data you need to provide to make a prediction. (It does not constrain the time history used for modeling; that is determined by the training partitions.) In the example above, DataRobot will use the most recent 35 days of data.

DataRobot auto-suggests values for the windows based on the dataset's time unit. For example, a common period for the Feature Derivation Window is a roughly one-month setting ("35 to 0 days"), or for minute-based data, an hourly span (60 to 0 minutes). These settings provide enough coverage to view some important lags but not so much that irrelevant lagged features are derived. The feature reduction process removes those lags that don't produce good information anyway, so creating a too-wide Feature Derivation Window increases build time without necessarily adding benefit. (DataRobot creates a maximum of five lags, regardless of the Feature Derivation Window size, in order to limit the window used for rolling statistics.)

Unless you are dealing with data in which the time unit is yearly, it is very rare that what happened, for example, in February of last year is relevant to what will happen in February of this year. There are instances where certain dates from past years are relevant, and in those cases using a calendar is a better solution for determining yearly importance and yearly lags. For daily data, the primary seasonality is more likely to be weekly, so a Feature Derivation Window of 365 days is not necessary and not likely to improve results.

What is a lag?

A lagged feature contains information about that feature from previous time steps. Each lag shifts the feature back in time, so that at any time step you can see the value of that feature from the past.

For example, if you have the following series:

| Date | Target |

|---|---|

| 8/1 | 10 |

| 8/2 | 11 |

| 8/3 | 12 |

The resulting lags would be:

| Date | Target | Lag1 | Lag2 |

|---|---|---|---|

| 8/1 | 10 | NaN | NaN |

| 8/2 | 11 | 10 | NaN |

| 8/3 | 12 | 11 | 10 |
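The lag table above can be reproduced with a few lines of Python. This is a minimal sketch for illustration only, not DataRobot's feature derivation code; the helper name `lag` is hypothetical, and `None` stands in for the `NaN` entries.

```python
from typing import List, Optional


def lag(values: List[float], k: int) -> List[Optional[float]]:
    """Shift a series back by k time steps.

    The first k rows have no history, so they are filled with None
    (shown as NaN in the table above).
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    keep = max(len(values) - k, 0)
    return [None] * min(k, len(values)) + values[:keep]


target = [10, 11, 12]  # values for 8/1, 8/2, 8/3
lag1 = lag(target, 1)  # [None, 10, 11]
lag2 = lag(target, 2)  # [None, None, 10]
```

Each additional lag costs one more row of usable history at the start of the series, which is one reason a wider Feature Derivation Window requires more data at prediction time.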

Tip

Ask yourself, "how much data do I realistically have access to that is also relevant at the time I am making predictions?" Just because you have, for example, six years of data, that does not mean you should widen the Feature Derivation Window to six years. If your Forecast Window is relatively small, your Feature Derivation Window should be correspondingly modest. With time series data, because new data is always flowing in, feeding "infinite" history will not result in more accurate models.

Keep in mind the distinction between the Feature Derivation Window and the training window. It might be very reasonable to train on six years of data, but the derived features should typically focus on the most recent data on a time scale that is similar to the Forecast Window (for example, a few multiples of it).

On the right, the Forecast Window (2) sets the time range of predictions that the model outputs. The example configures DataRobot to make predictions on days 1 through 7 after the forecast point. The time unit displayed (days, in this case) is based on the unit detected when you selected a date/time feature.

Tip

It is not uncommon to think you need a larger Forecast Window than you actually need. For example, if you only need 2 weeks of predictions and a "comparison result" from 30 days ago, it is better practice to configure your operationalized model for two weeks and create a separate project for the 30-day result. Predicting from 1-30 days is suboptimal because the model will optimize to be as accurate as possible for each of the 1-30 predictions. In reality, though, you only need accuracy for days 1-14 and day 30. Splitting the project up ensures the model you are using is best for the specific need.

You can specify either the time unit detected or a number of rows for the windows. DataRobot calculates rolling statistics using that selection (e.g., Price (7 days average) or Price (7 rows average)). If the time-based option is available, you should use that. Note that when you configure for row-based windows, DataRobot does not detect common event patterns or seasonalities. DataRobot provides special handling for datasets with irregularly spaced date/time features, however. If your dataset is irregular, the window settings default to row-based.

When to use row-based mode

Row-based mode (that is, using Row Count) is a method for modeling when the dataset is irregular.

You can change these values (and notice that the visualization updates to reflect your change). For example, you may not have real-time access to the data or don't want the model to be dependent on data that is too new. In that case, change the Feature Derivation Window (FDW in the calculation below)—move it to the end of the most recent data that will be available. If you don't care about tomorrow's prediction because it is too soon to take action on, change the Forecast Window (FW) to the point from which you want predictions forward. This changes how DataRobot optimizes models and ranks them on the Leaderboard, as it only compares for accuracy against the configured range.

Deep dive

DataRobot's default suggestion will always be FDW=[-n, -0] and FW=[1, m] time units/rows. Consider adjusting the -0 and 1 so that DataRobot can optimize the models to match the data that will be available relative to the forecast point when the model is in production.

Understanding gaps

When you set the Feature Derivation Window and Forecast Window, you create time period gaps (not to be confused with the Gap Length setting gaps):

- Blind history is the time window between when you actually acquire/receive data and when the forecast is actually made. Specifically, it extends from the most recent time in the Feature Derivation Window to the forecast point.

- Can't operationalize represents the period of time that is too near-term to be useful. Specifically, it extends from immediately after the Forecast Point to the beginning of the Forecast Window.

Blind history example

Blind history accounts for the fact that the predictions you are making are using data that has some delay. Setting the blind history gap is mainly relevant to the state of your data at the time of predictions. If you misunderstand where your data comes from, and the associated delay, it is likely that your actual predictions will not be as accurate as the model suggested they would be.

Put another way: you can look at your data and say “Ah, I have two years of daily data, and for any day in my dataset there is always a previous day of data, so I have no gap!” From the training perspective, this is true—it is unlikely that you will have a visible or obvious gap in your data if you are viewing it from the perspective of "now" relative to your training data.

The key is to understand where your actual, prediction data comes from. Consider:

- Your company processes all available data in a large batch job that runs once a week.

- You collect historical data and train a model, but are unaware of this delay.

- You build a model that makes 2 weeks of projections for the company to act on.

- Your model goes into production, but you can't figure out why the predictions are so "off."

The hard thing to understand here is this:

At any given time, if the most reliable data you have available is X days old, nothing newer than that can be reliably used for modeling.

Let's get more specific about how to set the gap

If your company:

- Collects data every Monday, but that data isn’t processed and available for making predictions until Friday: “blind history” = 5 days.

- Makes real-time predictions, where the data you receive comes in and your model predicts on the next point: “blind history” = 0 days.

- Collects data every day, the processing for predictions takes a day (or two days) to appear, and data is logged as it is processed: “blind history” = 1 (or 2) days.

- Collects data every day, and the data takes the same 1 (or 2) days to process, but everything is retroactively dated: “blind history” = 0 days.

You can also use blind history to cut out short term biases. For example, if the previous 24 hours of your data is very volatile, use the blind gap to ignore that input.

Can't operationalize example

Let's say you are making predictions for how much bread to stock in your store and there is a period of time that is too near-term to be useful.

- It takes 2 days to fulfill a bread order (have the stock arrive at your store).

- Predictions for 1 and 2 days out aren't useful; you cannot take any action on those predictions.

- The "can't operationalize" gap = 2 days.

- With this setting, the forecast is 3 days from when you generate the prediction so that there is enough time to order and stock the bread.

Setting backtests

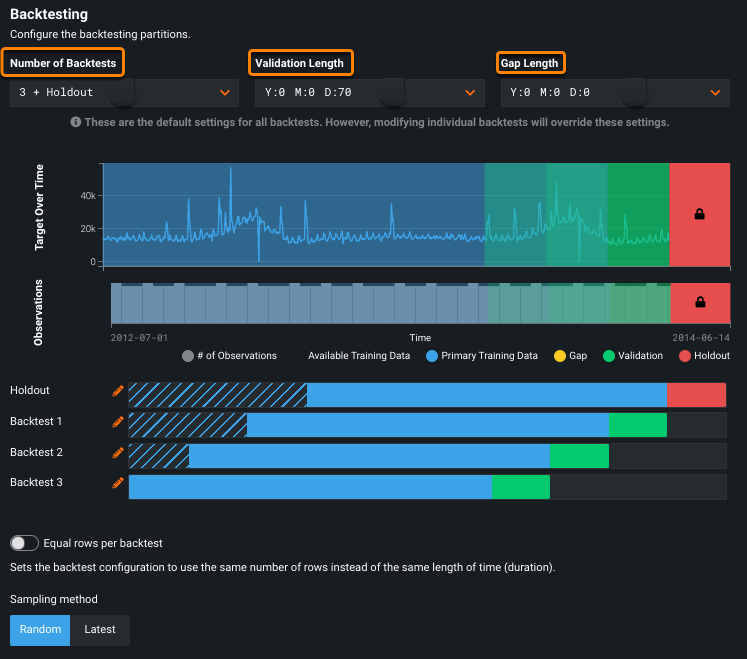

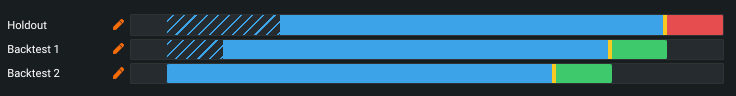

Backtesting simulates the real prediction environments a model may see. Use backtests to evaluate accuracy given the constraints of your use case. Be sure not to build backtests in a way that gives you good accuracy but is not representative of real life. (See the description of date/time partitioning for details of the configuration settings.) The following describes considerations when changing those settings.

The following sections describe:

- Setting Validation Length

- Setting Number of Backtests

- Setting Gap Length

- Backtest importance

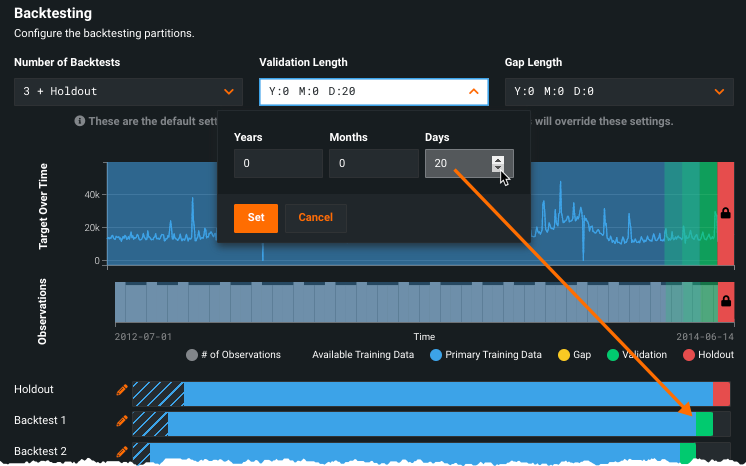

Validation length

Validation length describes the frequency with which you retrain your models. It is the most important setting to factor in if you change the default configuration. For example, if you plan to retrain every 2 weeks, set the validation length to 14 days; if you will retrain every quarter, set it to 3 months or 90 days. Setting a validation length to an arbitrary number that doesn’t match your retraining plan will not provide the information you need to understand model performance.

Number of backtests

Setting the number of backtests is somewhat of a "gut instinct." The question you are trying to answer is "What do I need to feel comfortable with the predictions based on my retraining schedule?" Ultimately, you want a number of backtests such that the validation lengths cover, at a minimum, the full forecast window. Coverage beyond this depends on what time period you need to feel comfortable with the performance of the model.

While more backtests will provide better validation of the model, it will take longer to train and you will likely run into a limit on how many backtests you can configure based on the amount of historical data you have. For a given validation duration, the training duration will shrink as you increase the number of backtests and at some point the training duration may get too small.

Select the validation duration based on the retraining schedule and then select the number of backtests to balance your confidence in the model against the time to train and availability of training data to support more backtests.

If you retrain often, you shouldn't care if the model performs differently across different backtests, as you will be retraining on more recent data more often. In this case you can have fewer backtests, maybe only Backtest 1. But if you expect to retrain less often, volatility in backtest performance could impact your model's accuracy in production. In this case, you want more backtests so that you get models that are validated to be more stable across time. Backtesting assures that you select a model that generalizes well across different time periods in your historical data. The longer you plan to leave a model in production before retraining, the more of a concern this is.

Also, the number to set depends on whether the Forecast Window (FW) is shorter or longer than the retraining period.

| If... | Number of backtests equals... |

|---|---|

| FW < retraining period | The number of retraining periods where you want to minimize volatility. While volatility should always be low, consider the minimum time you want to minimize volatility. |

- Example: You have a Forecast Window of one week and will retrain each month. Based on historical sales, you want to feel comfortable that the model is stable over a quarter of the year. Because FW = [1,7] and the retraining period is 1M, select the validation duration to match that. To be comfortable that the model is stable over 3M, select 3 backtests.

If the Forecast Window is longer than the retraining period, you can consider the previous example but you also want to make sure you have enough backtests to account for the entire Forecast Window.

| If... | Number of backtests equals... |

|---|---|

| FW > retraining period | The number of backtests from above, multiplied by the Forecast Window or retraining period. |

- Example: You have a Forecast Window of 30 days and will retrain every 15 days. You need a minimum of two backtests to validate that. To feel comfortable that the model is stable over a quarter of the year, like the last example, use six backtests.

Ultimately, you want a number of backtests such that the validation lengths cover, at a minimum, the full Forecast Window. Coverage beyond this depends on what time period you need to feel comfortable with the performance of the model.
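The coverage rule above can be sketched as a small calculation. This is only one reading of the guidance, not a DataRobot formula: it assumes the validation length equals the retraining period and picks enough backtests to cover both the Forecast Window and the period over which you want stability. The function name and parameters are hypothetical.

```python
import math


def suggest_backtests(forecast_window_days: int,
                      retraining_period_days: int,
                      stability_period_days: int) -> int:
    """Heuristic backtest count (an illustration, not DataRobot's logic).

    Assumes validation length == retraining period, then requires that the
    validation partitions together cover the Forecast Window and the
    period over which the model should look stable.
    """
    validation_length = retraining_period_days
    cover_forecast_window = math.ceil(forecast_window_days / validation_length)
    cover_stability = math.ceil(stability_period_days / validation_length)
    return max(1, cover_forecast_window, cover_stability)


# FW of one week, monthly retraining, stable over a quarter (about 90 days)
weekly_fw = suggest_backtests(7, 30, 90)
# FW of 30 days, retraining every 15 days, stable over a quarter
long_fw = suggest_backtests(30, 15, 90)
# Same project, but only the minimum coverage of the Forecast Window
minimum_only = suggest_backtests(30, 15, 0)
```

Under these assumptions the two worked examples give 3 and 6 backtests, and the minimum for the 30-day Forecast Window is 2. Treat the result as a starting point and then check it against training time and the amount of history available.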

Gap Length

The Gap Length set as part of date/time partitioning (not to be confused with the blind history or "can't operationalize" periods) helps to address the issue of delays in getting a model to production. For example, if you train a model and it takes five days to get the model running in production, then you would want a gap of five days. Ask yourself: measured from Friday, how many days old is the most recent data point that counts as actual, usable data? Few companies have the capacity to train, deploy, and begin using the deployments immediately in their production environments.

Backtest importance

All backtests are not equal.

Backtest 1 is the most important, because when you set up a project you are trying to simulate reality. Backtest 1 simulates what happens if you train and validate on the data that would actually be available to you at the most recent time period you have available. This is the most recent and likely most relevant data that you would be training on, and so the accuracy of Backtest 1 is extremely important.

Holdout simulates what's going to happen when your model goes into production. It is the best possible “simulation” of what would be happening during the time you are using the model between retrainings. Accuracy is important, but should be used more as a guideline of what to expect from the model. Determine if there are drastic differences in performance between Holdout and Backtest 1, as this could point to over- or under-fitting.

Other backtests are designed to give you confidence that the model performs reliably across time. While they are important, having a perfect “All backtests” score is a lot less important than the scores for Backtest 1 and Holdout. These tests can provide guidance on how often you need to retrain your model due to volatility in the performance across time. If Backtest 1 and Holdout have good/similar accuracy, but the other backtests have low accuracy, this may simply mean that you need to retrain the model more often. In other words, don't try to get a model that performs well on all backtests at the cost of a model that performs well on Backtest 1.

Valid backtest partitions

When configuring backtests for time series projects, the number of backtests you select must result in the following minimum rows for each backtest:

- A minimum of 20 rows in the training partition.

- A minimum of four rows in the validation and holdout partitions.
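The row minimums above are easy to check before you commit to a backtest count. The following is a minimal sketch; the constant and function names are hypothetical, and the row counts you pass in would come from your own partition plan.

```python
from typing import List

# Minimums stated in this section
MIN_TRAINING_ROWS = 20
MIN_VALIDATION_ROWS = 4
MIN_HOLDOUT_ROWS = 4


def partition_problems(training_rows: int,
                       validation_rows: int,
                       holdout_rows: int) -> List[str]:
    """Return a list of human-readable problems; empty means the backtest is valid."""
    problems = []
    if training_rows < MIN_TRAINING_ROWS:
        problems.append(
            f"training has {training_rows} rows, needs {MIN_TRAINING_ROWS}")
    if validation_rows < MIN_VALIDATION_ROWS:
        problems.append(
            f"validation has {validation_rows} rows, needs {MIN_VALIDATION_ROWS}")
    if holdout_rows < MIN_HOLDOUT_ROWS:
        problems.append(
            f"holdout has {holdout_rows} rows, needs {MIN_HOLDOUT_ROWS}")
    return problems


ok = partition_problems(training_rows=60, validation_rows=14, holdout_rows=14)
too_small = partition_problems(training_rows=12, validation_rows=3, holdout_rows=14)
```

Running the check for every planned backtest makes it obvious when adding another backtest shrinks the training partition below the minimum.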

When setting up partitions:

- Consider the boundaries from a predictions point-of-view and make sure to set the appropriate gap length.

- If you are collecting data in realtime to make predictions, a feature derivation window that ends at 0 will suffice. However, most users find that the blind history gap is more realistically anywhere from 1 to 14 days.

Deep dive: default partition

It is important to understand how DataRobot calculates the default training partitions for time series modeling before configuring backtest partitions. The following assumes you meet the minimum row requirements in the training partition of each backtest.

Note that for projects with more than 10 forecast distances, DataRobot excludes an additional number of rows, determined by the forecast distance, from the training partition. As a result, the dataset requires more rows than the stated minimum, with the number of additional rows determined by the depth of the forecast distance.

Note

DataRobot uses rows outside of the training partitions to calculate features as part of the time series feature derivation process. That is, the rows removed are still used to calculate new features.

To reduce bias to certain forecast distances, and to leave room for validation, holdout, and gaps, DataRobot does not include all dataset rows in the backtest training partitions. The number of rows in your dataset that DataRobot does not include in the training partitions is dependent on the elements described below.

The calculations described below use the following terminology:

| Term | Description |

|---|---|

| BH | "Blind history" |

| FD | Forecast Distance |

| FDW | Feature Derivation Window |

| FW | Forecast Window |

| CO | "Can't operationalize" |

| Holdout | Holdout |

| Validation | Validation |

The following are calculations for the number of rows not included in training.

Single series and <= 10 FDs

For a single series with 10 or fewer forecast distances, DataRobot calculates excluded rows as follows:

FDW + 1 + BH + CO + Validation + Holdout

In other words

Feature Derivation Window +

1 +

Gap between the end of FDW and the start of FW +

Gap between training and validation +

Validation +

Holdout

Multiseries or > 10 FDs

When a project has more than 10 Forecast Distances, DataRobot adds the length of the Forecast Window to the rows removed. For example, if a project has 20 Forecast Distances, DataRobot removes 20 rows from consideration in the training set. In other words, the greater the number of Forecast Distances, the more rows removed from training consideration (and thus the more data you need to have in the project to maintain the 20 row minimum).

For a multiseries project, or a single series with more than 10 forecast distances, DataRobot calculates excluded rows as follows:

FDW + 1 + FW + BH + CO + Validation + Holdout

In other words

Feature Derivation Window +

1 +

Forecast window +

Gap between the end of FDW and the start of FW +

Gap between training and validation +

Validation +

Holdout

Projects with seasonality

If there is seasonality (i.e., you selected the Apply differencing advanced option), replace FDW + 1 with FDW + Seasonal period. Note that if not selected, DataRobot tries to detect seasonality by default. In other words, DataRobot calculates excluded rows as follows:

- Single series and <= 10 FDs: FDW + Seasonal period + BH + CO + Validation + Holdout

- Multiseries or > 10 FDs: FDW + Seasonal period + FW + BH + CO + Validation + Holdout
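The four formulas in this section differ only in two terms, so they can be collected into one helper. This is a sketch of the arithmetic as written above, not DataRobot's implementation; the function and parameter names are hypothetical, all quantities are expressed in rows, and the numbers in the usage lines are made up for illustration.

```python
from typing import Optional


def excluded_rows(fdw: int, bh: int, co: int, validation: int, holdout: int,
                  fw: int = 0, forecast_distances: int = 1,
                  multiseries: bool = False,
                  seasonal_period: Optional[int] = None) -> int:
    """Rows left out of a backtest's training partition, per the formulas above.

    - FDW + 1 becomes FDW + seasonal_period when seasonality applies.
    - FW is added for multiseries projects or more than 10 forecast distances.
    """
    offset = seasonal_period if seasonal_period is not None else 1
    include_fw = multiseries or forecast_distances > 10
    return fdw + offset + (fw if include_fw else 0) + bh + co + validation + holdout


# Illustrative values: FDW=35, no blind history or "can't operationalize" gap,
# 30 rows each for validation and holdout, 7 forecast distances.
single = excluded_rows(fdw=35, bh=0, co=0, validation=30, holdout=30,
                       fw=7, forecast_distances=7)
multi = excluded_rows(fdw=35, bh=0, co=0, validation=30, holdout=30,
                      fw=7, forecast_distances=7, multiseries=True)
seasonal = excluded_rows(fdw=35, bh=0, co=0, validation=30, holdout=30,
                         fw=7, forecast_distances=7, seasonal_period=7)
```

Adding the 20-row training minimum to the result gives a rough lower bound on how much history a configuration needs before a backtest can be built.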

Duration and Row Count

If your data is evenly spaced, Duration and Row Count give the same results. It is not uncommon, however, for date/time datasets to have unevenly spaced data with noticeable gaps along the time axis. This can impact how Duration and Row Count affect the training data for each backtest. If the data has gaps:

- Row Count results in an even number of rows per backtest (although some of them may cover longer time periods). Row Count models can, in certain situations, use more RAM than Duration models over the same number of rows.

- Duration results in a consistent length-of-time per backtest (but some may have more or fewer rows).

Additionally, these values have different meanings depending on whether they are being applied to training or validation.

For irregular datasets, note that the setting for Training Window Format defaults to Row Count. Although you can change the setting to Duration, it is highly recommended that you leave it, as changing may result in unexpected training windows or model errors.

Handle training and validation folds

The values for Duration and Row Count in training data are set in the training window format section of the Time Series Modeling configuration.

When you select Duration, DataRobot selects a default fold size—a particular period of time—to train models, based on the duration of your training data. For example, you can tell DataRobot "always use three months of data." With Row Count, models use a specific number of rows (e.g., always use 1000 rows) for training models. The training data will have exactly that many rows.

For example, consider a dataset that includes fraudulent and non-fraudulent transactions where the frequency of transactions is increasing over time (the number is increasing per time period). Set Row Count if you want to keep the number of training examples constant through the backtests in the training data. It may be that the first backtest is only trained on a short time period. Select Duration to keep the time period constant between backtests, regardless of the number of rows. In either case, models will not be trained on data more recent than the start of the holdout data.
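The difference between the two training window formats is easiest to see on unevenly spaced data. The sketch below is illustrative only and does not reproduce DataRobot's partitioning logic; the helper names and the sample dates are hypothetical. It selects training rows for two backtests, once by a fixed row count and once by a fixed duration.

```python
from datetime import date, timedelta
from typing import List


def train_by_row_count(timestamps: List[date], train_end: date, n_rows: int) -> List[date]:
    """Last n_rows observations strictly before train_end."""
    eligible = [t for t in sorted(timestamps) if t < train_end]
    return eligible[-n_rows:] if n_rows > 0 else []


def train_by_duration(timestamps: List[date], train_end: date, days: int) -> List[date]:
    """All observations in the `days` days strictly before train_end."""
    start = train_end - timedelta(days=days)
    return [t for t in sorted(timestamps) if start <= t < train_end]


# Irregular data: weekly rows in January, daily rows in early February
timestamps = ([date(2022, 1, d) for d in (3, 10, 17, 24, 31)]
              + [date(2022, 2, d) for d in range(1, 11)])

recent_end, older_end = date(2022, 2, 11), date(2022, 2, 1)

rows_recent = train_by_row_count(timestamps, recent_end, 4)
rows_older = train_by_row_count(timestamps, older_end, 4)
span_recent = (rows_recent[-1] - rows_recent[0]).days
span_older = (rows_older[-1] - rows_older[0]).days

dur_recent = train_by_duration(timestamps, recent_end, 14)
dur_older = train_by_duration(timestamps, older_end, 14)
```

With Row Count, both backtests train on 4 rows, but those rows span 3 days in one case and 21 days in the other. With Duration, both cover 14 days, but one backtest has 11 rows and the other only 2.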

Validation is always set in terms of duration (even if training is specified in terms of rows). When you select Row Count, DataRobot sets the Validation Length based on the row count.

Change the training period

Note

Consider retraining your model on the most recent data before final deployment.

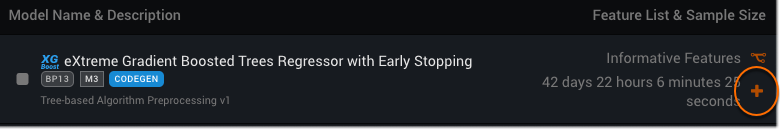

You can change the training range and sampling rate and then rerun a particular model for date-partitioned builds. Note that you cannot change the duration of the validation partition once models have been built; that setting is only available from the Advanced options link before the building has started. Click the plus sign (+) to open the New Training Period dialog:

The New Training Period box has multiple selectors, described in the table below:

| | Selection | Description |

|---|---|---|

| 1 | Frozen run toggle | Freeze the run. |

| 2 | Training mode | Rerun the model using a different training period. Before setting this value, see the details of row count vs. duration and how they apply to different folds. |

| 3 | Snap to | "Snap to" predefined points, to facilitate entering values and avoid manually scrolling or calculation. |

| 4 | Enable time window sampling | Train on a subset of data within a time window for a duration or start/end training mode. Check to enable and specify a percentage. |

| 5 | Sampling method | Select the sampling method used to assign rows from the dataset. |

| 6 | Summary graphic | View a summary of the observations and testing partitions used to build the model. |

| 7 | Final Model | View an image that changes as you adjust the dates, reflecting the data to be used in the model you will make predictions with (see the note below). |

Once you have set a new value, click Run with new training period. DataRobot builds the new model and displays it on the Leaderboard.

Window and gap settings example

There is an important difference between the Gap Length setting (the time required to get a model into production) and the gap periods (the time needed to make data available to the model) created by window settings.

The following description provides concrete examples of how these values each impact the final model in production.

At the highest level, there are two discrete actions involved in modeling a time series project:

1. You build a model, which involves data processing, and the model ultimately makes predictions.

2. Once built, you productionalize the model and it starts contributing value to your organization.

Let's start with the more straightforward piece. Item #2 is the Gap Length: the one-time delay between you completing your work and the time it takes, for example, the IT department to actually move the latest model into production. (In some heavily regulated environments, this can be 30 days or more.)

For item #1, you must understand these fundamental things about your data:

- How often is your data collected or aggregated?

- What does the data's timestamp really represent? A very common real-world kind of example:

  - The database system runs a refresh process on the data and, for this example, let's say it becomes usable on 9/9/2022 ("today").

  - The refresh process is, however, actually being applied to data from the previous Friday, in this example, 9/2/2022.

  - The database timestamps the update as the day the processing happened (9/9/2022) even though this is actually the data for 9/2/2022.

Why all the delays?

In most systems there is a grace period between data being collected in real time and data being processed for analytics or predictions. This is because often you have a lot of complex systems that feed into a database. After the initial collection, the data is moved through a "cleaning and processing" stage. In these complex systems, each step can take hours or days, creating a time lag. From the example, this is how data might be processed and made usable Friday, but the original, raw data may have arrived days before. This is why you need to understand the lag—you need to know both when the data was created and when it became usable.

In another example:

- The database system runs a refresh process on the data but timestamps it with the actual date (9/2/2022) in the most recent data refresh.

Important

In both of these examples you know the latest actual age of data on 9/9/2022 is really 9/2/2022. You must understand your data, as it is critically important for properly understanding your project setup.

Once you know what is happening with your data acquisition, you can adjust your project to account for it. For training, the timestamp/delay issue isn't a problem. You have a dataset over time and every day has values. But this in itself is also a challenge, as you and the system you are using need to account for the difference in training and production data. Another example:

- Today is Friday 9/9/2022, and you received a refresh of data. You need to make predictions for Monday. How should you set up the Feature Derivation Window and Forecast Window in this situation?

  - You know the most recent data you have is actually from 7 days ago.

  - As of 9/9/2022, the predictions you need to make each day are 3 days in the future, and you want to project a week out.

  - The Feature Derivation Window start date could be any time, but for the example the "length" is 28 days.

Think about everything from the point of prediction. As of any prediction date, the example can be summarized as:

- Most recent data is actually 7 days in the past.

- The Feature Derivation Window is 28 days.

- You want to predict three days ahead of today.

- You want to know what will happen over the next 7 days.

- After building the model, it will take your company 30 days to deploy it to production.

How do you configure this? In this scenario, the settings would be as follows:

- Feature Derivation Window: -35 to -7 days

- Forecast Window: 3 to 10 days

- Gap Length: 30 days

Another way to express it is that blind history is 7 days, the "can't operationalize" period is 3 days, and the Gap Length is 30.
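The mapping from the scenario to the settings can be written down as a small calculation. This is a sketch of the arithmetic in this example only; it does not call any DataRobot API, and the class, function, and parameter names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowSettings:
    fdw_start: int   # days relative to the forecast point (negative = past)
    fdw_end: int
    fw_start: int    # days after the forecast point
    fw_end: int
    gap_length: int  # days between finishing the model and production use


def window_settings(data_delay_days: int, fdw_length_days: int,
                    first_forecast_day: int, horizon_days: int,
                    deployment_delay_days: int) -> WindowSettings:
    """Derive window and gap values the way the example above does."""
    return WindowSettings(
        # The FDW ends where usable data ends and reaches back fdw_length_days.
        fdw_start=-(data_delay_days + fdw_length_days),
        fdw_end=-data_delay_days,
        # The FW starts at the first day you can act on and runs for the horizon.
        fw_start=first_forecast_day,
        fw_end=first_forecast_day + horizon_days,
        gap_length=deployment_delay_days,
    )


# Data is 7 days old, FDW length is 28 days, predict 3 days ahead for a week,
# and deployment takes 30 days.
settings = window_settings(7, 28, 3, 7, 30)
```

For the scenario above this yields a Feature Derivation Window of -35 to -7 days, a Forecast Window of 3 to 10 days, and a Gap Length of 30 days, matching the listed settings.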