Tune models

Using the information gained from Visual AI insights, you may decide to tune a model to:

- apply a different image augmentation list.

- change the neural network architecture.

- ensure the model is applying the right level of granularity to the dataset.

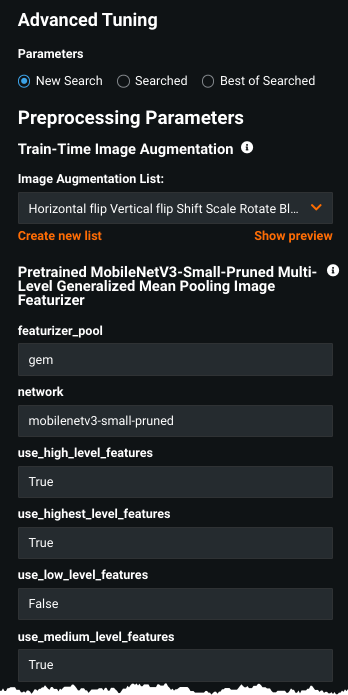

These settings, and more, are available on the Advanced Tuning tab. This page describes the settings on that tab that are specific to Visual AI projects.

For a simplified tuning walkthrough, see the tuning guide.

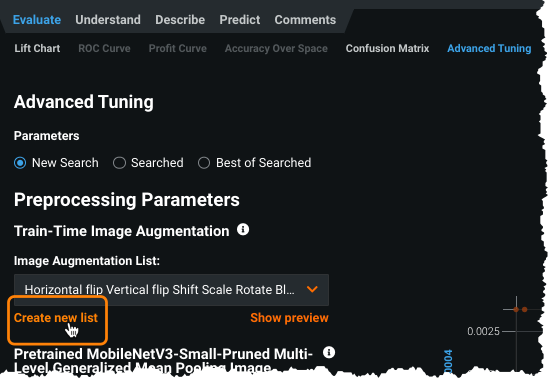

Augmentation lists

Initially, DataRobot builds models using the settings from Advanced options and saves those settings in a list (similar to a feature list) named Initial Augmentation List. After Autopilot completes, you can continue exploring transformation strategies for a model by creating different settings and saving them as custom augmentation lists.

Select any saved augmentation list and tune a model with it, then compare the scores of models trained with different augmentation strategies.

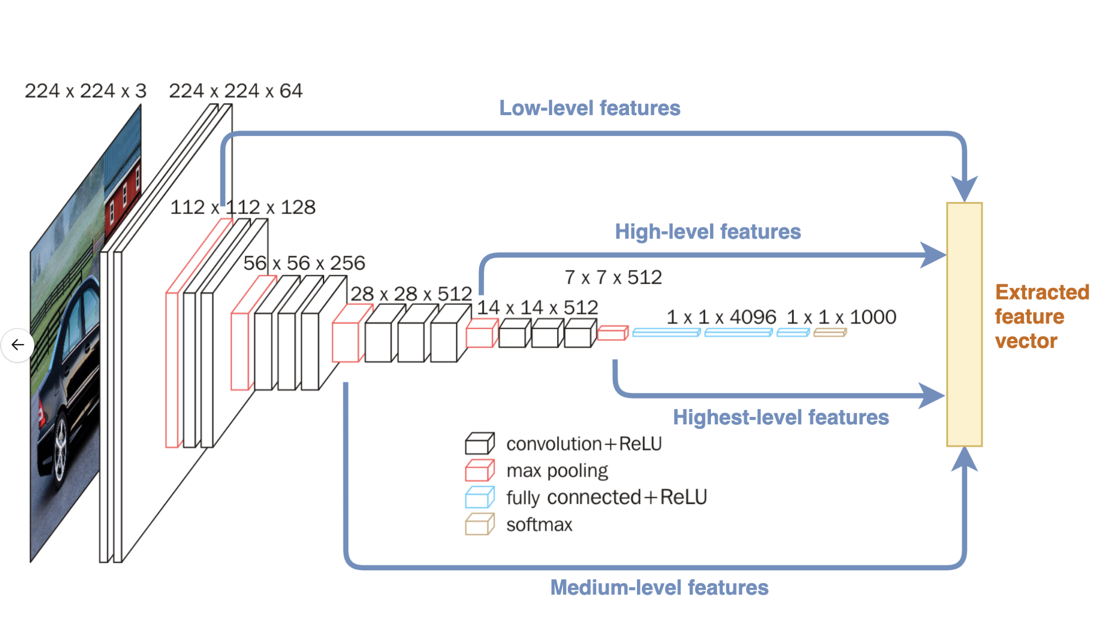

Granularity

Set the preprocessing parameters to capture the correct level of granularity, that is, whether the model pays more attention to fine details or to high-level shapes and textures. For example, if decisions depend on points, edges, and corners, don't rely on high-level features; if the task is detecting common objects, don't rely on low-level features. These settings enable or disable the levels of pattern complexity the model reacts to when processing the input image.

The higher the level, the more complex the patterns it can detect: simple shapes at lower levels, complex shapes at higher levels. For example, fish scales are a low-level feature, a simple part of the complex image of a whole fish.

Levels are cumulative: features are drawn from each selected level. Combinations of low-level features form medium-level features, and combinations of medium-level features form high-level features. The Highest, High, and Medium levels are enabled (True) by default. The tradeoff is that the more detail you include (that is, the more features you add to the model), the longer training takes and the greater the risk of overfitting.

When modifying levels, at least one level must be set to True. Note that the type of feature extraction for a given layer can vary depending on the network architecture chosen.

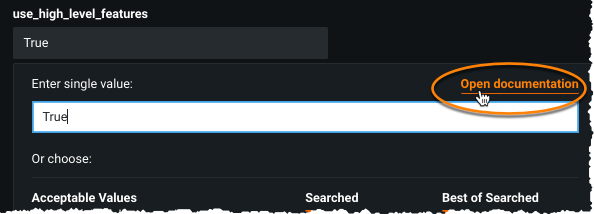

The following table briefly describes each parameter; open the documentation for more complete descriptions.

| Parameter | Description |
|---|---|
| featurizer_pool | The method used to aggregate across all filters in the final step of image featurization. Different methods summarize in different ways, producing slightly different types of features. |
| network | The pre-trained model architecture. |
| use_highest_level_features | Features extracted from the final layer of the network. Good for cases where the images are “ordinary” (for example, animals or vehicles). |
| use_high_level_features | Features extracted from one of the final convolutional layers. This layer provides high-level features, typically combinations of patterns from low- and medium-level features that form complex objects. |
| use_medium_level_features | Features extracted from one of the intermediate convolutional layers. Medium-level features are typically combinations of patterns from different low-level features. If you think your model is underfitting and you want to include more features from the middle of the network, set this to True. |
| use_low_level_features | Features extracted from one of the initial convolutional layers. This layer provides low-level visual features and is good for problems that differ greatly from “ordinary” (single-object) image datasets. |
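The cumulative behavior of the level flags can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that each enabled level contributes one already-pooled feature vector and that those vectors are concatenated. The flag names come from the table above; `combine_level_features` and `DEFAULT_LEVELS` are hypothetical and not part of any DataRobot API.

```python
import numpy as np

# Hypothetical defaults mirroring the documented ones:
# Highest, High, and Medium are True; Low is False.
DEFAULT_LEVELS = {
    "use_highest_level_features": True,
    "use_high_level_features": True,
    "use_medium_level_features": True,
    "use_low_level_features": False,
}


def combine_level_features(pooled, flags=None):
    """Concatenate the pooled feature vectors of every enabled level.

    pooled: dict mapping each flag name to a 1-D feature vector assumed to be
            already pooled from the corresponding network layer.
    flags:  dict of flag name -> bool; defaults to DEFAULT_LEVELS.
    """
    flags = DEFAULT_LEVELS if flags is None else flags
    enabled = [name for name, on in flags.items() if on]
    if not enabled:
        # Mirrors the documented rule that at least one level must be True.
        raise ValueError("at least one feature level must be True")
    # Each enabled level adds its own block of features, so the output
    # grows with every level that is switched on.
    return np.concatenate([pooled[name] for name in enabled])
```

Turning on `use_low_level_features` in this sketch simply lengthens the output vector, which is the training-time and overfitting tradeoff described earlier in concrete form.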

Featurizer pool options

The following list briefly describes the featurizer_pool options. The default operation is avg; for improved accuracy, also test the gem option, which works like avg but applies additional regularization (clipping extreme values).

- avg = GlobalAveragePooling2D
- gem = GeneralizedMeanPooling
- max = GlobalMaxPooling2D
- gem-max = concatenation of gem and max
- avg-max = concatenation of avg and max
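The pooling options can be sketched in NumPy on a single feature map of shape (height, width, channels). This is an illustrative sketch, not DataRobot's implementation: gem uses the standard generalized-mean formula, and the exponent `p=3` and the lower clip `eps` are assumptions. With `p=1`, gem reduces to avg on positive activations, and a larger `p` moves it toward max.

```python
import numpy as np


def featurizer_pool(fmap, method="avg", p=3.0, eps=1e-6):
    """Aggregate a (height, width, channels) feature map into one value per
    channel (or two per channel for the concatenated options)."""
    if method == "avg":  # GlobalAveragePooling2D: mean over spatial positions
        return fmap.mean(axis=(0, 1))
    if method == "max":  # GlobalMaxPooling2D: max over spatial positions
        return fmap.max(axis=(0, 1))
    if method == "gem":  # Generalized mean: (mean(x ** p)) ** (1 / p)
        clipped = np.clip(fmap, eps, None)  # keep activations positive
        return (clipped ** p).mean(axis=(0, 1)) ** (1.0 / p)
    if method in ("gem-max", "avg-max"):
        first = method.split("-")[0]  # "gem" or "avg"
        # Concatenate the first pooled vector with the max-pooled one.
        return np.concatenate([featurizer_pool(fmap, first, p, eps),
                               featurizer_pool(fmap, "max", p, eps)])
    raise ValueError(f"unknown pooling method: {method}")
```

For example, pooling a hypothetical 7 × 7 × 1280 feature map gives a 1280-element vector with avg, max, or gem, and a 2560-element vector with gem-max or avg-max.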

Image fine-tuning

The following sections describe setting the number of layers and the learning rates used by image fine-tuners.

Trainable scope for fine-tuners

Trainable scope specifies how many layers are trainable, counting from the final classification/regression layer back toward the input convolutional layer. Counting starts from the final layer because the lower-level features near the input convolutional layer, pre-trained on a larger, more representative image dataset, generalize better across different problem types.

Choose this value based on how unique the data, or the problem it represents, is. The more unique the data, the more layers should be trainable, since that tends to improve the metric score of the final trained CNN fine-tuner model. By default, all layers are trainable, because testing showed this gave the greatest improvements for most datasets. As a basic guideline, decrease the number of trainable layers gradually until improvement stops.
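A minimal Python sketch of how a trainable-scope value maps onto layers, plus the guideline of shrinking the scope until the score stops improving. The layer names, the `score_fn` callback (which would train a fine-tuner with a given scope and return its score), and the higher-is-better metric are all hypothetical assumptions for illustration.

```python
def apply_trainable_scope(layer_names, scope):
    """Return {layer: is_trainable}, counting `scope` layers back from the end.

    layer_names is ordered from the input convolutional layer to the final
    classification/regression layer. scope=None means all layers are
    trainable (the documented default).
    """
    n = len(layer_names)
    scope = n if scope is None else max(0, min(scope, n))
    first_trainable = n - scope
    return {name: i >= first_trainable for i, name in enumerate(layer_names)}


def tune_trainable_scope(layer_names, score_fn):
    """Shrink the trainable scope one layer at a time until the score stops
    improving. score_fn(scope) is a hypothetical callback; higher is better."""
    best_scope = len(layer_names)  # start from "all layers trainable"
    best_score = score_fn(best_scope)
    for scope in range(len(layer_names) - 1, 0, -1):
        score = score_fn(scope)
        if score <= best_score:
            break  # improvement stopped
        best_scope, best_score = scope, score
    return best_scope, best_score
```

Because each call to `score_fn` stands for a full training run, this greedy search stops at the first scope that fails to improve rather than trying every value.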

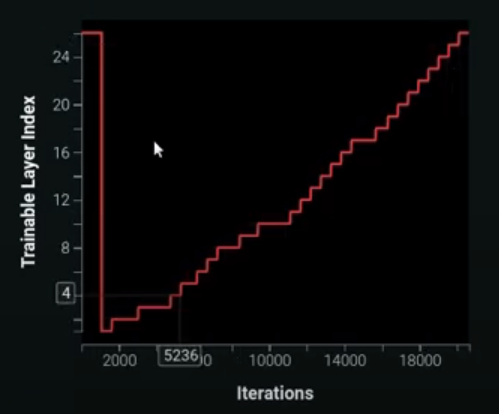

DataRobot also provides a smart adaptive method called "chain-thaw" that gradually unfreezes the network (that is, sets layers to be trainable). It iteratively trains each layer independently until convergence, which makes it very exhaustive and time-consuming. The figure shows an example of layers trained by iteration from the Training Dashboard interface.
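To show why chain-thaw is exhaustive, here is a sketch of its stage schedule as chain-thaw was originally described for the DeepMoji model: train any newly added layers first, then each existing layer on its own starting from the input side, then all layers together. Whether DataRobot uses this exact order is an assumption. `chain_thaw_schedule` is a hypothetical helper that only lists which layers are trainable in each stage; each stage is assumed to run until convergence.

```python
def chain_thaw_schedule(layer_names, new_layers=()):
    """Return a list of stages; each stage is the set of trainable layers.

    layer_names: existing (pre-trained) layers, ordered from input to output.
    new_layers:  layers added for the target task, such as a new head.
    """
    stages = []
    if new_layers:
        stages.append(set(new_layers))  # 1. train the new layers alone
    for name in layer_names:
        stages.append({name})  # 2. train each existing layer on its own
    stages.append(set(layer_names) | set(new_layers))  # 3. train everything
    return stages
```

A network with N existing layers plus a new head therefore needs N + 2 training stages, each run to convergence, which is where the cost comes from.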

Discriminative learning rates

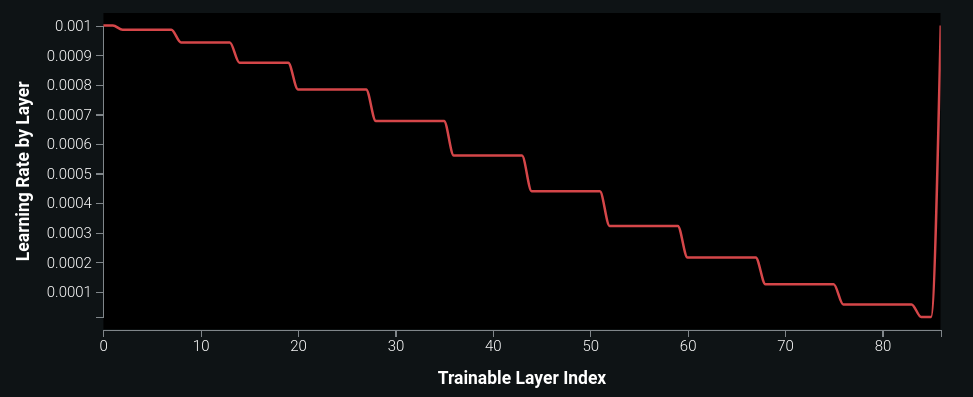

Discriminative learning rates apply the same reasoning as trainable scope: starting from the final few layers lets the model modify more of the higher-level features and less of the lower-level features (which already generalize well across different datasets). Pair this parameter with trainable scope for a truly fine-grained learning process. The figure shows an example learning-rate-by-layer graph from the Training Dashboard, with one entry per trainable layer.
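One common way to set discriminative learning rates is to give the final layer a base rate and divide it by a constant factor for each layer closer to the input. This sketch uses that scheme with a default factor of 2.6, the value suggested in the ULMFiT paper. DataRobot's actual per-layer schedule is not described here, so `base_lr`, `decay`, and the function name are assumptions.

```python
def discriminative_learning_rates(layer_names, base_lr=1e-3, decay=2.6):
    """Assign each layer a learning rate that shrinks toward the input.

    layer_names is ordered from input to output. The final layer gets
    base_lr; each layer below it gets the rate of the layer above divided
    by `decay`, so low-level features change the least.
    """
    n = len(layer_names)
    return {name: base_lr / decay ** (n - 1 - i)
            for i, name in enumerate(layer_names)}
```

To pair this with trainable scope, you would compute rates only for the layers that the scope leaves trainable.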

Tune hyperparameters

During Autopilot, Visual AI automatically adjusts the neural network architecture and pooling type. As a result, Visual AI offers state-of-the-art accuracy while remaining computationally efficient. After model building completes, you can tune Visual AI hyperparameters to potentially improve accuracy further.

The following hyperparameter tuning section is divided into two segments: Deep learning tuning and Modeler tuning. You can apply each independently or together; experimentation leads to the best results. Before beginning manual tuning, you must first run Autopilot (full or Quick).

Deep learning tuning

To tune models for deep learning, select the best Leaderboard blueprint and choose Advanced Tuning. The following options provide some tuning alternatives to consider:

EfficientNetV2-S-Pruned is based on EfficientNetV2, a recent computer vision architecture developed by the Google AI team that delivers vision results much more efficiently than comparable models, including vision transformers. The model is pre-trained on ImageNet21k (14,197,122 images, each tagged with a single label from 21,841 possible classes). This larger pre-training dataset is beneficial if your image categories fall outside the traditional ImageNet1K labels.

EfficientNetB4-Pruned is older than EfficientNetV2-S-Pruned; it belongs to the first generation of EfficientNets. It is pre-trained on the smaller ImageNet1K, and B4 is considered a large variant of the EfficientNet family of models. DataRobot strongly advises trying this network.

ResNet50-Pruned was long the standard efficient deep learning architecture in computer vision. It is pre-trained on ImageNet1K without any advanced image augmentation techniques (for example, AutoAugment, MixUp, or CutMix). This training regime, together with an architecture built around skip connections, makes it an excellent next network to try in any tuning setting.

Modeler tuning

To tune the modeler, select the best Leaderboard blueprint (the blueprint type is specified in each suggestion) and choose Advanced Tuning. Consider the following options:

For a Keras blueprint, increase the number of epochs (for example, by 2 or 3). DataRobot Keras blueprint modelers are optimized for different image scenarios, but depending on the image dataset, training for more epochs can sometimes boost performance.

For a Keras blueprint, change its activation function to Swish. For some image datasets, Swish performs better than the Rectified Linear Unit (ReLU).
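The difference between the two activations is easy to see numerically. This NumPy sketch uses the standard definitions, ReLU(x) = max(x, 0) and Swish(x) = x · sigmoid(βx) with β = 1 by default. How the Keras blueprint exposes the activation choice is not shown here.

```python
import numpy as np


def relu(x):
    # Rectified Linear Unit: zero for negative inputs, identity otherwise
    return np.maximum(x, 0.0)


def swish(x, beta=1.0):
    # Swish: x * sigmoid(beta * x), written as x / (1 + exp(-beta * x))
    return x / (1.0 + np.exp(-beta * x))


x = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
print(relu(x))   # negative inputs are cut to exactly zero
print(swish(x))  # negative inputs give small negative outputs
```

Unlike ReLU, Swish is smooth and lets small negative values through, which may help on some image datasets.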

For the best Gradient Boosting Trees (XGBoost/LightGBM) blueprint, decrease or increase the learning rate.

Select the best blueprint of any type and change its scaling method. Some examples to try:

- None -> Standardization
- None -> Robust Standardization
- Standardization -> Robust Standardization
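The two scaling methods can be compared on a single column containing an outlier. The robust variant here (median and interquartile range) is an assumed definition of Robust Standardization, not one confirmed on this page; both functions are hypothetical helpers and assume no column has zero spread.

```python
import numpy as np


def standardize(x):
    # Standardization: subtract the column mean, divide by the standard deviation
    return (x - x.mean(axis=0)) / x.std(axis=0)


def robust_standardize(x):
    # Robust standardization (assumed form): subtract the column median and
    # divide by the interquartile range, so outliers barely shift the scaling
    q1, median, q3 = np.percentile(x, [25, 50, 75], axis=0)
    return (x - median) / (q3 - q1)


x = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])
print(standardize(x).ravel())
print(robust_standardize(x).ravel())
```

With the outlier at 100, standardization squeezes the values 1 to 4 into a narrow band near -0.5, while robust standardization maps them to -1, -0.5, 0, and 0.5.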

For a Linear ElasticNet (binary target), double the maximum number of iterations (for example, change max_iter from 100, the default, to 200).

For Regularized Logistic Regression (L2), increase the regularization by a factor of 2. Because C is the inverse of regularization strength, this means halving C: for example, if C = 4, change it to C = 2.

For the best SGD (multiclass) blueprint, increase n_iter by a factor of 2. For example, if n_iter is 100, change it to 200.
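The arithmetic behind the last three suggestions fits in a short sketch. The parameter dict and `suggested_adjustments` are hypothetical; the key names mirror those quoted in the text. The point to note is that doubling regularization strength means halving C, while the iteration counts are simply doubled.

```python
def suggested_adjustments(params):
    """Return a copy of a hypothetical blueprint parameter dict with the
    doubling/halving suggestions applied to whichever keys it contains."""
    tuned = dict(params)
    if "max_iter" in tuned:  # Linear ElasticNet: double the max iterations
        tuned["max_iter"] *= 2
    if "n_iter" in tuned:  # SGD (multiclass): double n_iter
        tuned["n_iter"] *= 2
    if "C" in tuned:  # L2 logistic regression: C is the inverse of
        tuned["C"] /= 2  # regularization strength, so halve C to double it
    return tuned


print(suggested_adjustments({"C": 4}))
```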