Deploy custom models

After you create a custom inference model using the Custom Model Workshop, you can deploy it to a custom model environment.

Note

While you can deploy your custom inference model to an environment without testing, DataRobot strongly recommends your model pass testing before deployment.

Register and deploy a custom model

To deploy an unregistered custom model:

-

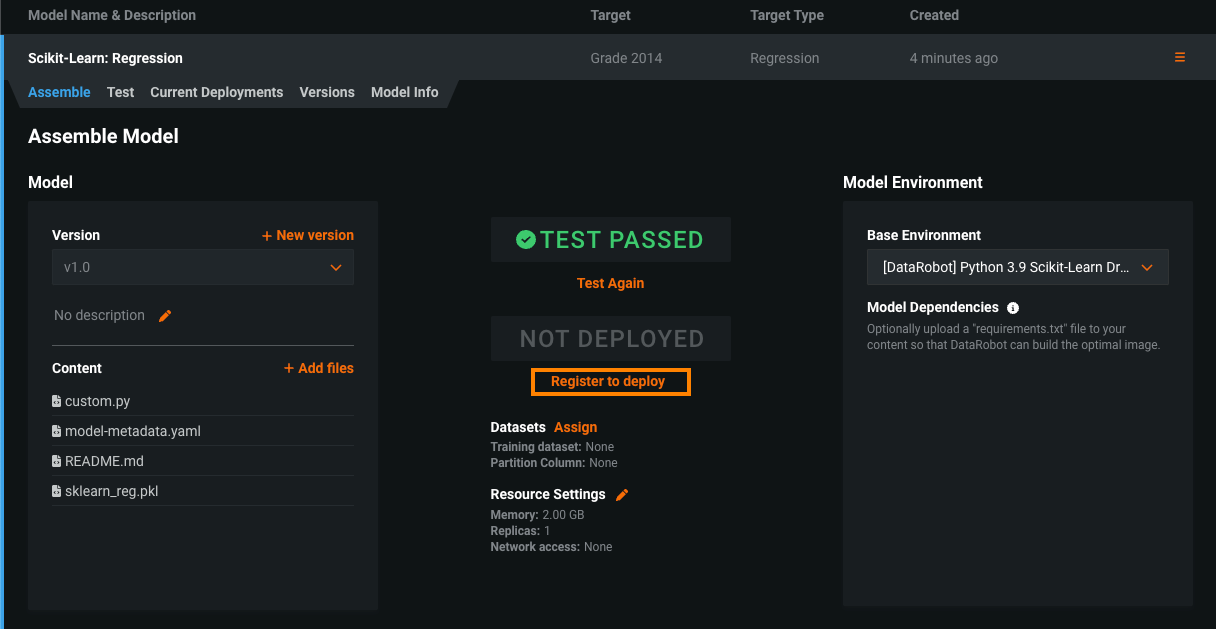

Navigate to Model Registry > Custom Model Workshop > Models and select the model you want to deploy.

-

On the Assemble tab, click Register to deploy in the middle of the page.

Note

DataRobot recommends testing that your model can make predictions before deploying.

-

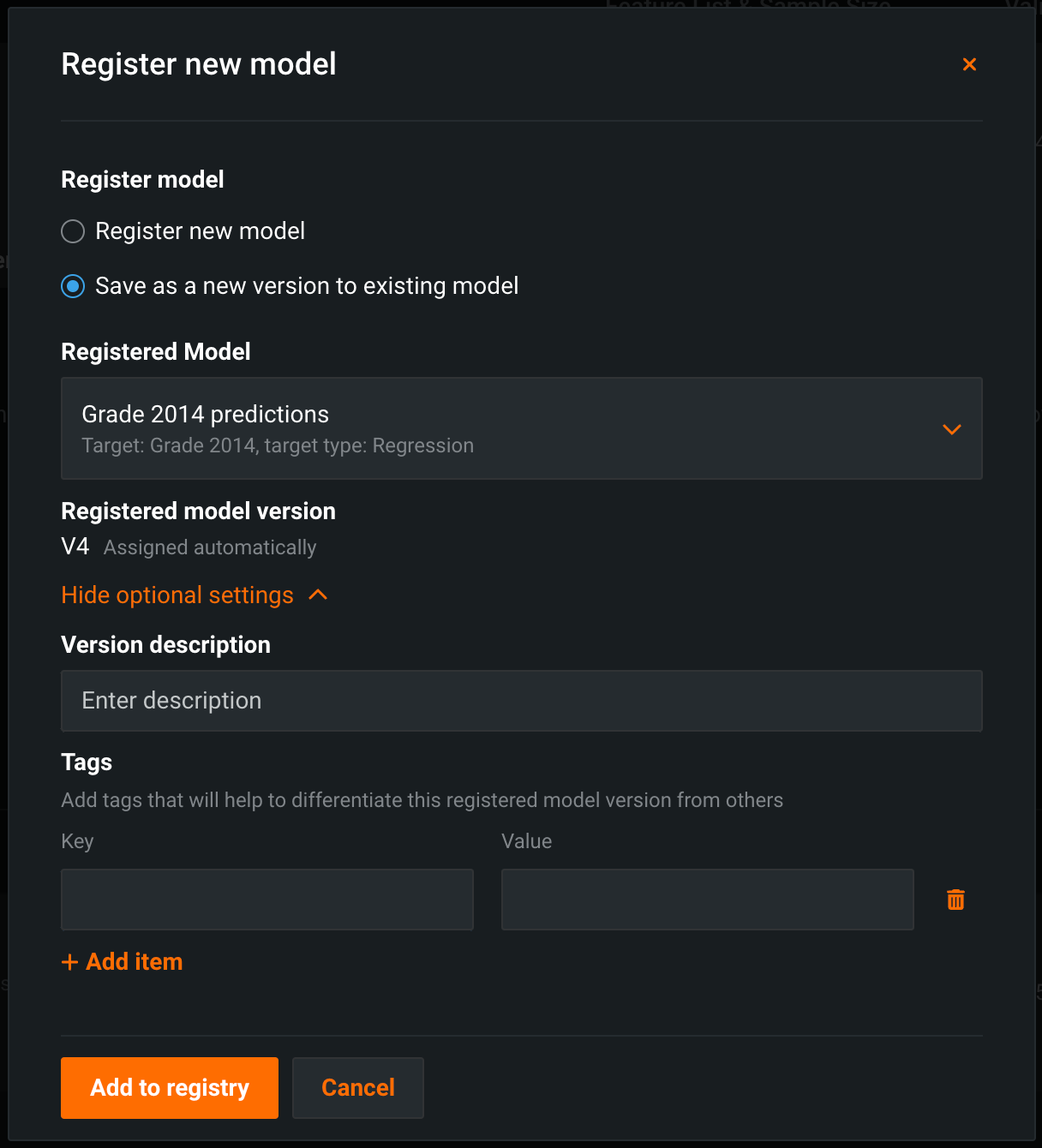

In the Register new model dialog box, configure the following:

Field Description Register model Select one of the following: - Register new model: Create a new registered model. This creates the first version (V1).

- Save as a new version to existing model: Create a version of an existing registered model. This increments the version number and adds a new version to the registered model.

Registered model name / Registered Model Do one of the following: - Registered model name: Enter a unique and descriptive name for the new registered model. If you choose a name that exists anywhere within your organization, the Model registration failed error message appears.

- Registered Model: Select the existing registered model you want to add a new version to.

Registered model version Assigned automatically. This displays the expected version number of the version (e.g., V1, V2, V3) you create. This is always V1 when you select Register a new model. Optional settings Version description Describe the business problem these model packages solve, or, more generally, the relationship between them. Tags Click + Add item and enter a Key and a Value for each key-value pair you want to tag the model version with. Tags do not apply to the registered model, just the versions within. Tags added when registering a new model are applied to V1. -

Click Add to Registry. The model opens on the Model Registry > Registered Models tab.

-

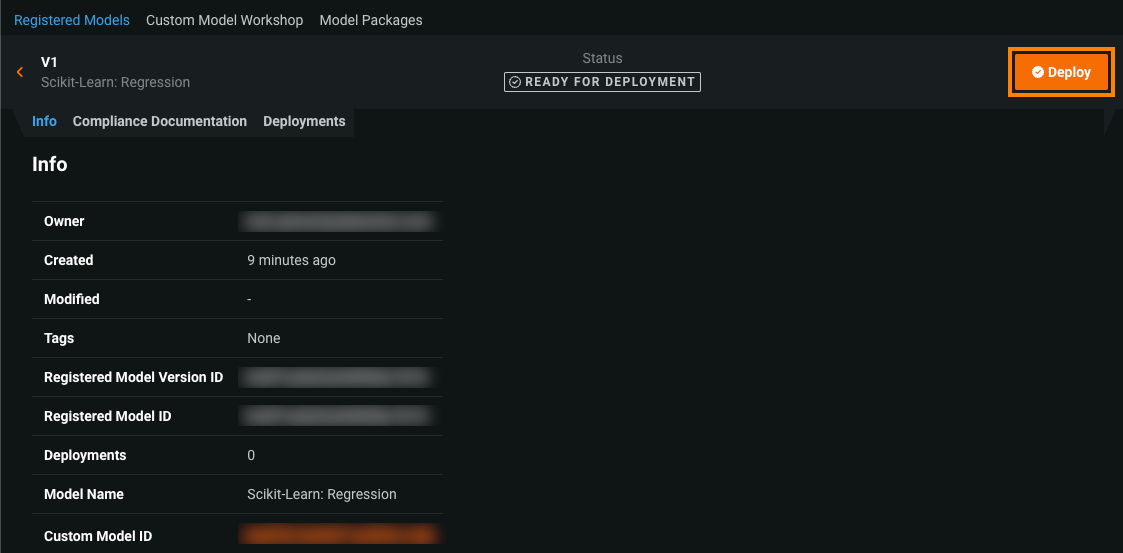

In the registered model version header, click Deploy, and then configure the deployment settings.

Most information for your custom model is provided automatically.

-

Click Deploy model.

Deploy a registered custom model

To deploy a registered custom model:

-

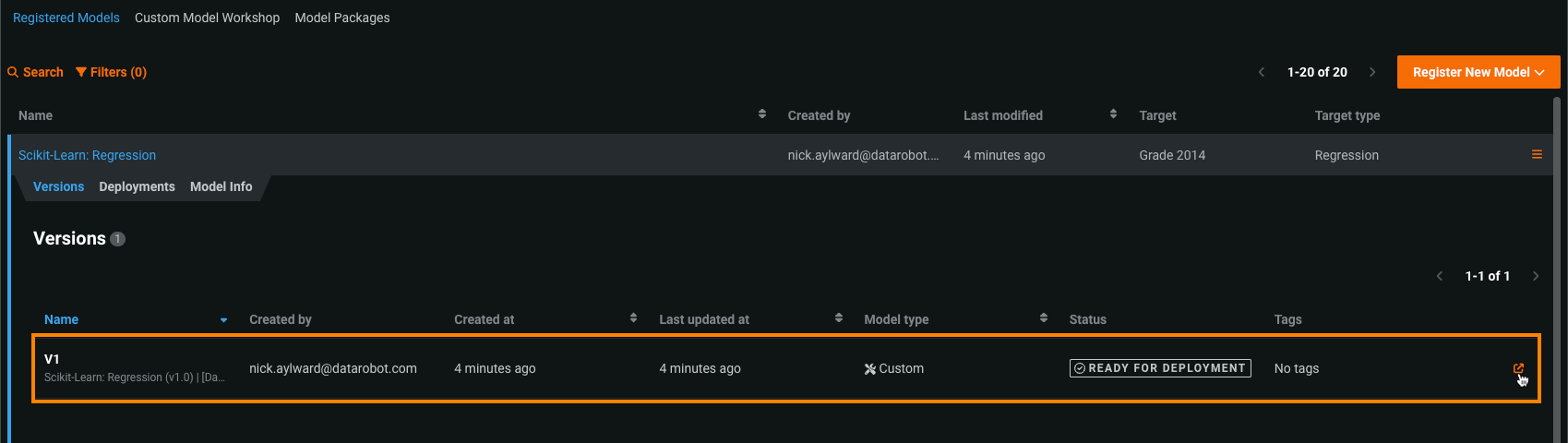

On the Registered Models page, click the registered model containing the model version you want to deploy.

-

To open the registered model version, do either of the following:

-

In the version header, click Deploy, and then configure the deployment settings.

-

Click Deploy model.

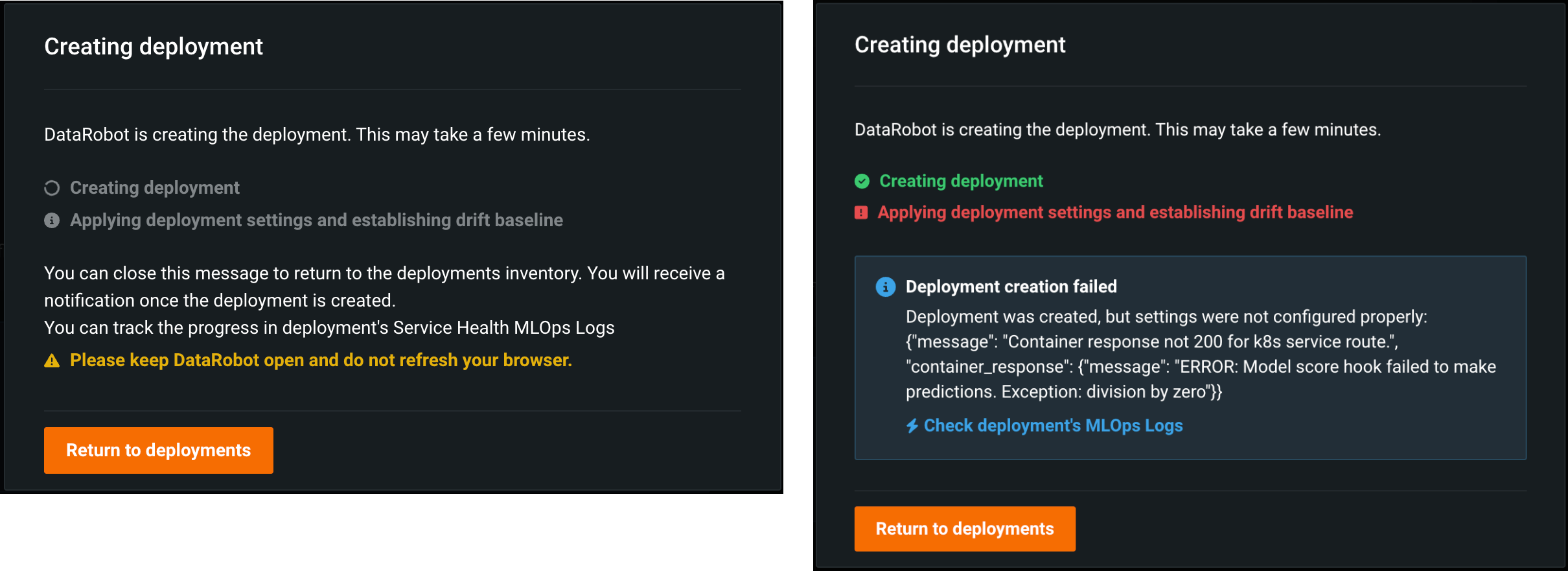

The Creating deployment modal appears, tracking the status of the deployment creation process, including the application of deployment settings and the calculation of the drift baseline. You can Return to deployments or monitor the deployment progress from the modal, allowing you to access the Check deployment's MLOps logs link if an error occurs:

Make predictions

Once a custom inference model is deployed, it can make predictions using API calls to a dedicated prediction server managed by DataRobot. You can find more information about using the prediction API in the Predictions documentation.
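As a rough illustration of what such an API call can look like, the following sketch builds (but does not send) an HTTP request for a deployed model using only the Python standard library. The URL path, the header names, and the JSON payload shape are assumptions here and should be checked against the Predictions documentation for your installation; `build_prediction_request` and all of its parameters are hypothetical names introduced for this example.

```python
import json
import urllib.request
from typing import Optional


def build_prediction_request(
    prediction_server: str,
    deployment_id: str,
    api_key: str,
    rows: list,
    datarobot_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build a POST request that scores `rows` against a deployment.

    Assumption: the route and headers below follow the commonly documented
    pattern for the prediction API; verify them for your environment.
    """
    url = (
        f"{prediction_server.rstrip('/')}"
        f"/predApi/v1.0/deployments/{deployment_id}/predictions"
    )
    headers = {
        "Content-Type": "application/json; charset=UTF-8",
        "Authorization": f"Bearer {api_key}",
    }
    # Assumption: some prediction servers expect an extra key header.
    if datarobot_key:
        headers["DataRobot-Key"] = datarobot_key

    # Each row is a dict of feature name to value.
    body = json.dumps(rows).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")
```

To send the request, pass the returned object to `urllib.request.urlopen` and decode the response body as JSON. Building the request separately from sending it keeps the URL and headers easy to inspect before any network call is made.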

Training dataset considerations

When making predictions through a deployed model, the prediction dataset is handled as follows:

- Without training data, only the target feature is removed from the prediction dataset.

- With training data, any features not in the training dataset are removed from the prediction dataset.
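The two rules above can be sketched as a small column filter. This is only an illustration of the stated behavior, not DataRobot's implementation; `columns_kept_for_prediction` and its parameters are hypothetical names, and the function mirrors nothing beyond the two rules listed.

```python
from typing import List, Optional, Sequence


def columns_kept_for_prediction(
    prediction_columns: Sequence[str],
    target: str,
    training_columns: Optional[Sequence[str]] = None,
) -> List[str]:
    """Return the prediction dataset columns that remain after filtering."""
    if training_columns is None:
        # No training data: drop only the target feature.
        return [c for c in prediction_columns if c != target]
    # Training data present: drop anything the training dataset lacks.
    allowed = set(training_columns)
    return [c for c in prediction_columns if c in allowed]
```

For example, with a prediction dataset containing `age`, `income`, and `session_id`, and a training dataset containing `age`, `income`, and `churn`, only `age` and `income` remain.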

Deployment status

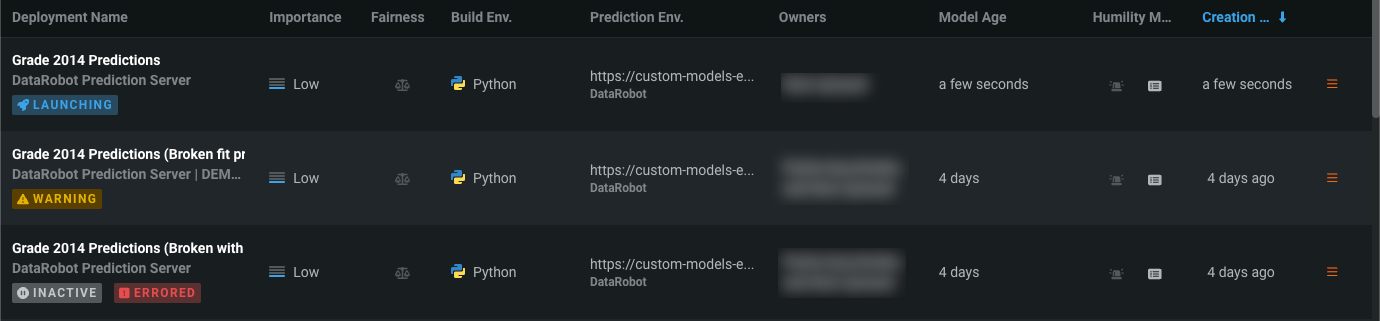

When DataRobot deploys a custom model, a Launching badge appears under the deployment name in the deployment inventory, and on any tab within the deployment. The following deployment status values are available for custom model deployments:

| Status | Description |
|---|---|
| Launching | The custom model deployment process is still in progress. You can't currently make predictions through this deployment, or access deployment tabs that require an active deployment. |
| Warning | The custom model deployment process completed with errors. You may be unable to make predictions through this deployment, and if you deactivate this deployment, you can't reactivate it until you resolve the deployment errors. You should check the MLOps Logs to troubleshoot the custom model deployment. |
| Errored | The custom model deployment process failed and the deployment is Inactive. You can't currently make predictions through this deployment, or access deployment tabs that require an active deployment. You should check the MLOps Logs to troubleshoot the custom model deployment. |
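Because predictions are unavailable while a deployment is Launching, automation that deploys and then scores usually needs to wait for the status to change. The sketch below shows one way to do that with a simple polling loop. It assumes a hypothetical `get_status` callable that returns the current status as one of the strings used on this page; how you obtain that status (UI, API, or client library) is outside the scope of the example.

```python
import time
from typing import Callable

# Statuses for which this page recommends checking the MLOps Logs.
NEEDS_TROUBLESHOOTING = {"Warning", "Errored"}


def wait_until_launched(
    get_status: Callable[[], str],
    poll_seconds: float = 10.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the deployment is no longer Launching, then return its status."""
    waited = 0.0
    while True:
        status = get_status()
        if status != "Launching":
            return status
        if waited >= timeout_seconds:
            raise TimeoutError("Deployment is still Launching after the timeout.")
        sleep(poll_seconds)
        waited += poll_seconds
```

A caller can then branch on the result, for example by opening the MLOps Logs when the returned status is in `NEEDS_TROUBLESHOOTING`. The `sleep` parameter is injectable so the loop can be exercised without real delays.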

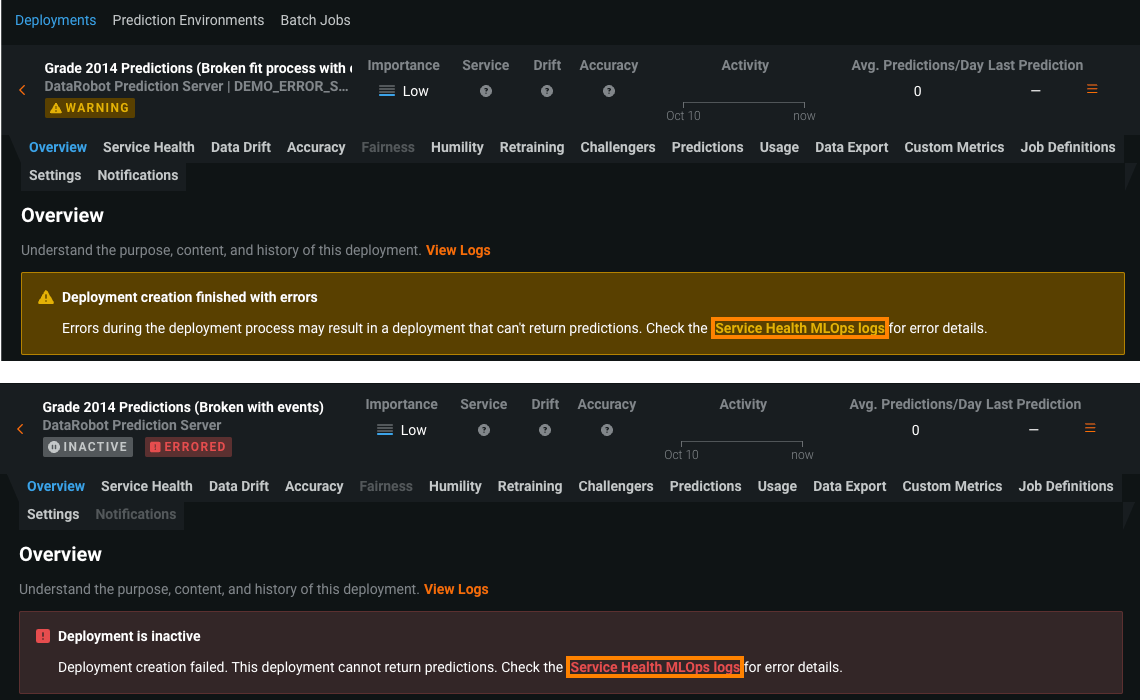

From a deployment with an Errored or Warning status, you can access the Service Health MLOps logs link from the warning on any tab. This link takes you directly to the Service Health tab:

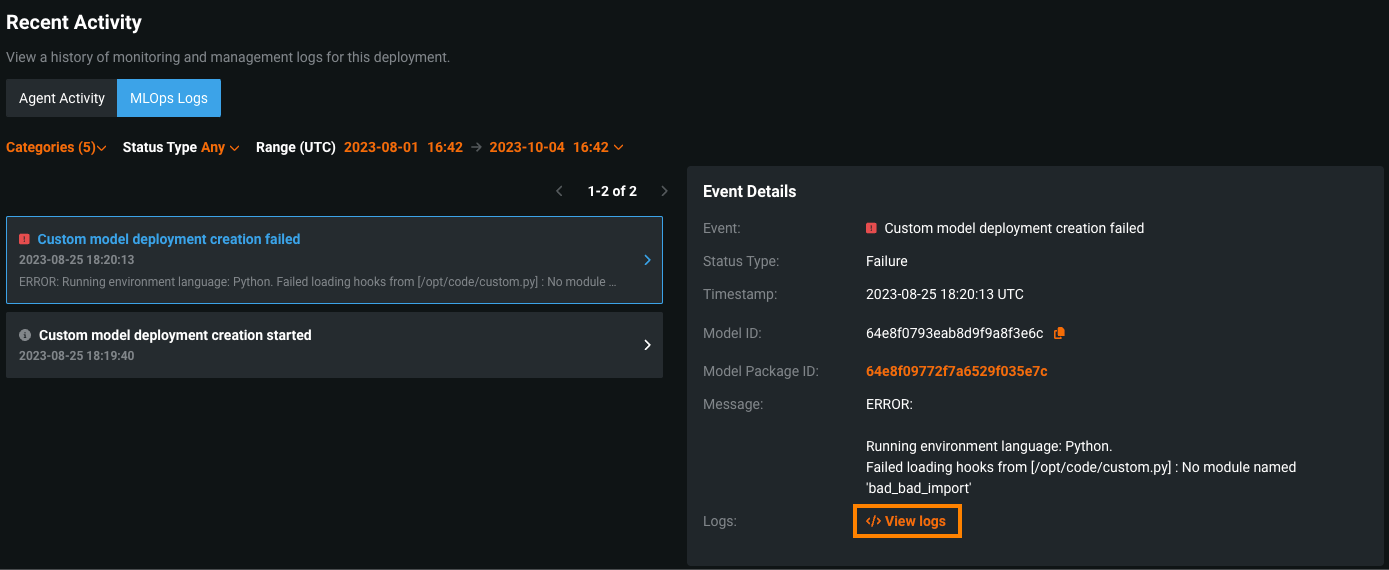

On the Service Health tab, under Recent Activity, you can click the MLOps Logs tab to view the Event Details. In the Event Details, you can click View logs to access the custom model deployment logs to diagnose the cause of the error:

Deployment logs

When you deploy a custom model, it generates log reports unique to this type of deployment, allowing you to debug custom code and troubleshoot prediction request failures from within DataRobot.

To view the logs for a deployed model, navigate to the deployment, open the Actions menu, and select View Logs.

You can access two types of logs:

-

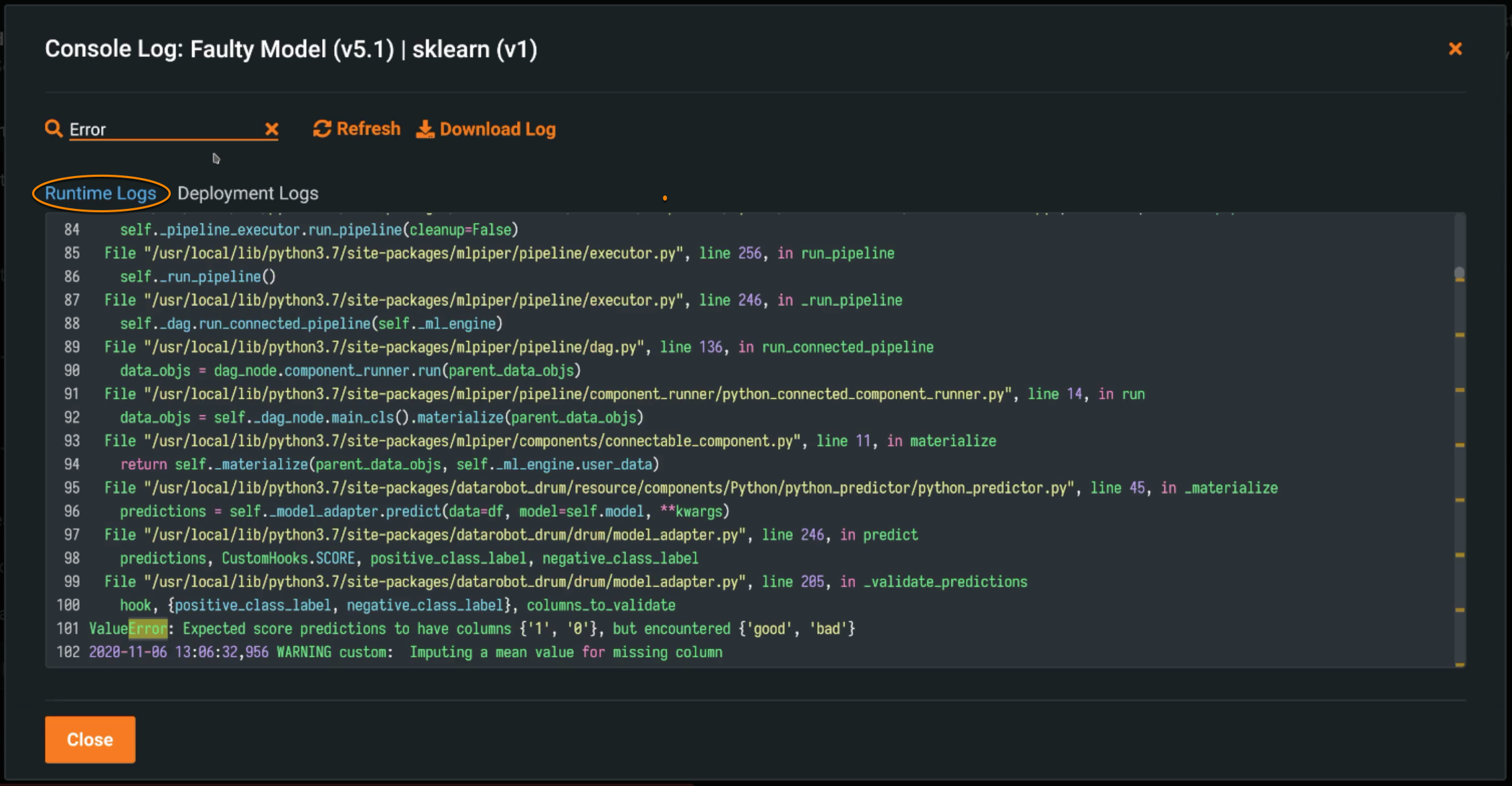

Runtime Logs are used to troubleshoot failed prediction requests (via the Predictions tab or the API). The logs are captured from the Docker container running the deployed custom model and contain up to 1MB of data. The logs are cached for 5 minutes after you make a prediction request. You can re-request the logs by clicking Refresh.

-

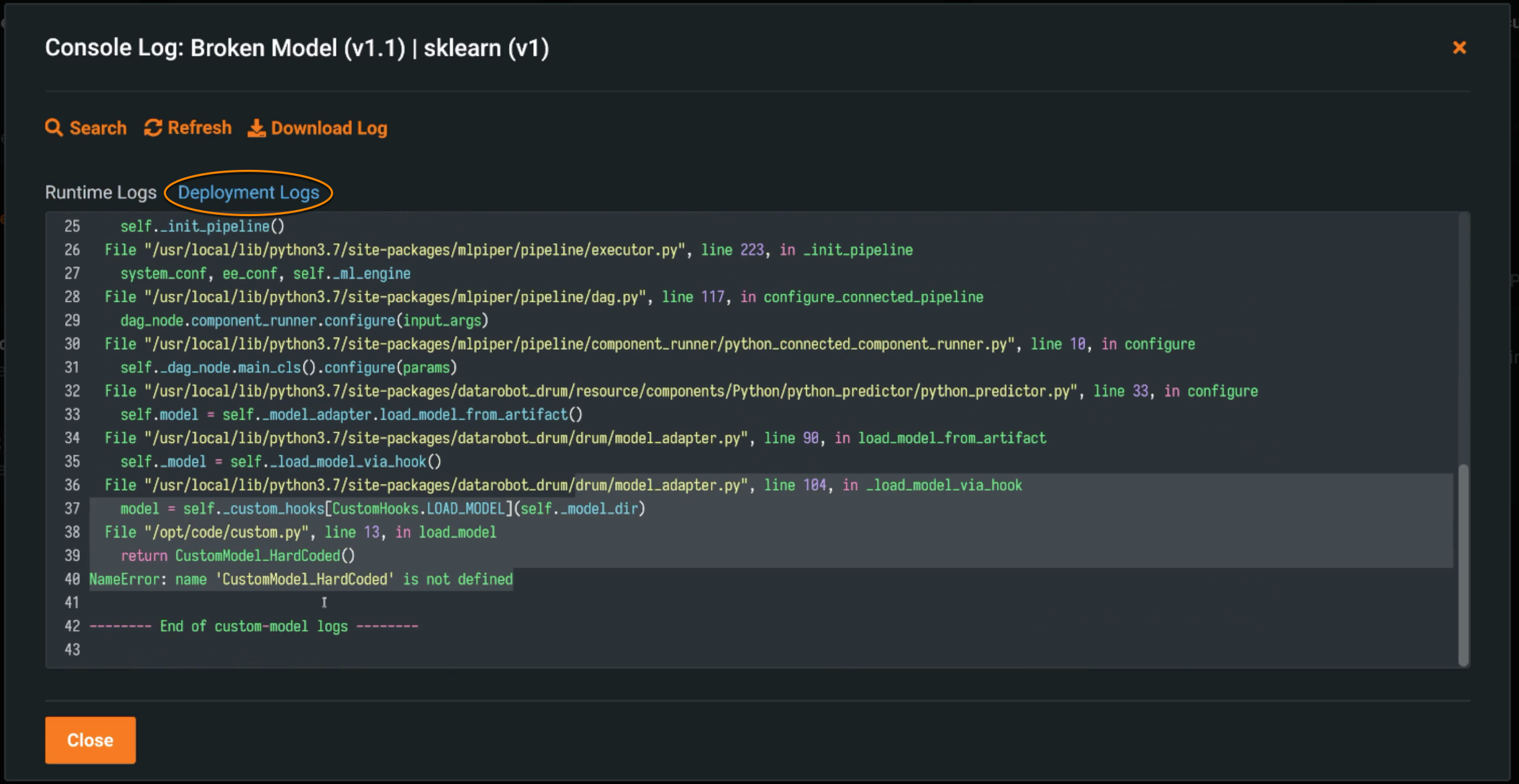

Deployment logs are automatically captured if the custom model fails while deploying. The logs are stored permanently as part of the deployment.

Note

DataRobot only provides logs from inside the Docker container in which the custom model runs. If a custom model fails to deploy or fails to execute a prediction request because of a failure that occurred outside of the Docker container, no logs may be available.

Use the Search bar to find specific references within the logs. Click Download Log to save a local copy of the logs.
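Once you have downloaded a log, the same kind of search can be run locally. The following is a minimal sketch that assumes the downloaded log is plain text; `find_log_lines` is a hypothetical helper, and the sample lines in the usage note are invented for illustration rather than real DataRobot log output.

```python
from typing import List, Tuple


def find_log_lines(
    log_text: str, term: str, ignore_case: bool = True
) -> List[Tuple[int, str]]:
    """Return (line_number, line) pairs for every line containing `term`."""
    needle = term.lower() if ignore_case else term
    matches = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        haystack = line.lower() if ignore_case else line
        if needle in haystack:
            matches.append((number, line))
    return matches
```

For instance, calling `find_log_lines(text, "error")` on a log whose second line is `ERROR could not load model.pkl` returns that line together with its line number, which makes it easier to locate the surrounding context in the full file.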