Set up data drift monitoring

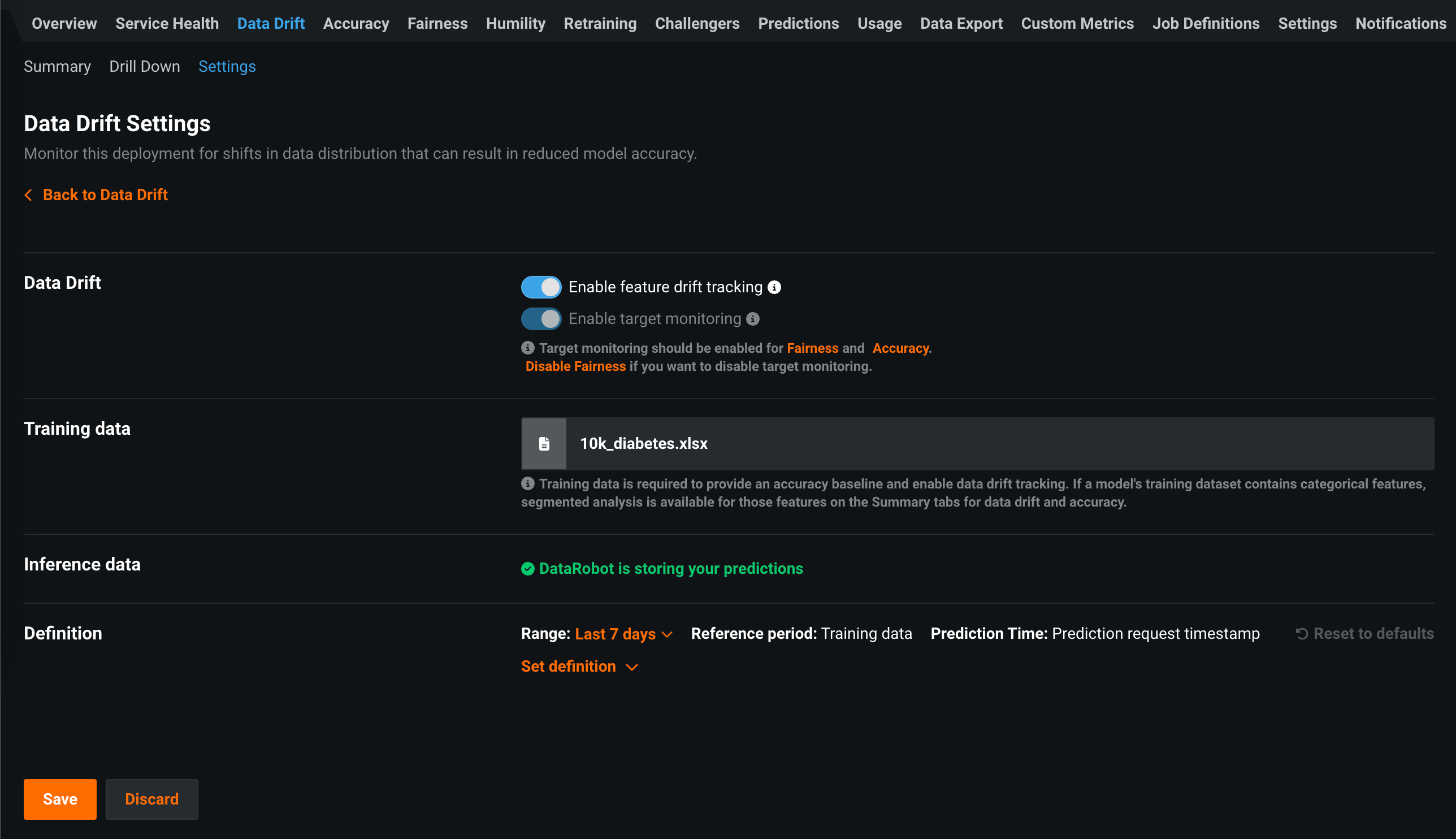

When deploying a model, there is a chance that the dataset used for training and validation differs from the prediction data. You can enable data drift monitoring on the Data Drift > Settings tab. DataRobot monitors both target and feature drift information and displays results on the Data Drift tab.

How does DataRobot track drift?

DataRobot tracks two types of drift:

- Target drift: DataRobot stores statistics about predictions to monitor how the distribution and values of the target change over time. As a baseline for comparing target distributions, DataRobot uses the distribution of predictions on the holdout.

- Feature drift: DataRobot stores statistics about predictions to monitor how distributions and values of features change over time. The supported feature data types are numeric, categorical, and text. As a baseline for comparing distributions of features:

  - For training datasets larger than 500MB, DataRobot uses the distribution of a random sample of the training data.

  - For training datasets smaller than 500MB, DataRobot uses the distribution of 100% of the training data.

Availability information

Data drift tracking is only available for deployments using deployment-aware prediction API routes (i.e., https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions).
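As a rough illustration of the route shape described above, the following sketch builds a deployment-aware prediction URL from a host and a deployment ID. The function name `deployment_prediction_url` and the ID `abc123` are hypothetical; only the path layout comes from the example route in this section.

```python
from urllib.parse import quote


def deployment_prediction_url(host: str, deployment_id: str) -> str:
    """Build a deployment-aware prediction route (hypothetical helper).

    The path layout mirrors the example route in this section:
    <host>/predApi/v1.0/deployments/<deploymentId>/predictions
    """
    base = host.rstrip("/")  # tolerate a trailing slash on the host
    safe_id = quote(deployment_id, safe="")  # percent-encode unexpected characters
    return f"{base}/predApi/v1.0/deployments/{safe_id}/predictions"


# "abc123" is a placeholder ID used only for illustration.
url = deployment_prediction_url("https://example.datarobot.com", "abc123")
print(url)
```

Predictions sent to a route that does not include the deployment ID in the path cannot be attributed to a deployment, which is why drift tracking depends on this route form.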

On a deployment's Data Drift Settings page, you can configure the following settings:

| Field | Description |
|---|---|
| Data Drift | |
| Enable feature drift tracking | Configures DataRobot to track feature drift in a deployment. Training data is required for feature drift tracking. |
| Enable target monitoring | Configures DataRobot to track target drift in a deployment. Target monitoring is required for accuracy monitoring. |
| Training data | |
| Training data | Displays the dataset used as a training baseline while building a model. |
| Inference data | |
| DataRobot is storing your predictions | Confirms DataRobot is recording and storing the results of any predictions made by this deployment. DataRobot stores a deployment's inference data when a deployment is created. It cannot be uploaded separately. |
| Inference data (external model) | |
| DataRobot is recording the results of any predictions made against this deployment | Confirms DataRobot is recording and storing the results of any predictions made by the external model. |
| Drop file(s) here or choose file | Uploads a file with prediction history data to monitor data drift. |
| Definition | |
| Set definition | Configures the drift and importance metric settings and threshold definitions for data drift monitoring. |

Note

DataRobot monitors both target and feature drift information by default and displays results in the Data Drift dashboard. Use the Enable target monitoring and Enable feature drift tracking toggles to turn off tracking if, for example, you have sensitive data that should not be monitored in the deployment. The Enable target monitoring setting is also required to enable accuracy monitoring.

Define data drift monitoring notifications

Drift assesses how the distribution of data changes across all features for a specified range. The thresholds you set determine the amount of drift you will allow before a notification is triggered.

Note

Only deployment Owners can modify data drift monitoring settings; however, Users can configure the conditions under which notifications are sent to them. Consumers cannot modify monitoring or notification settings.

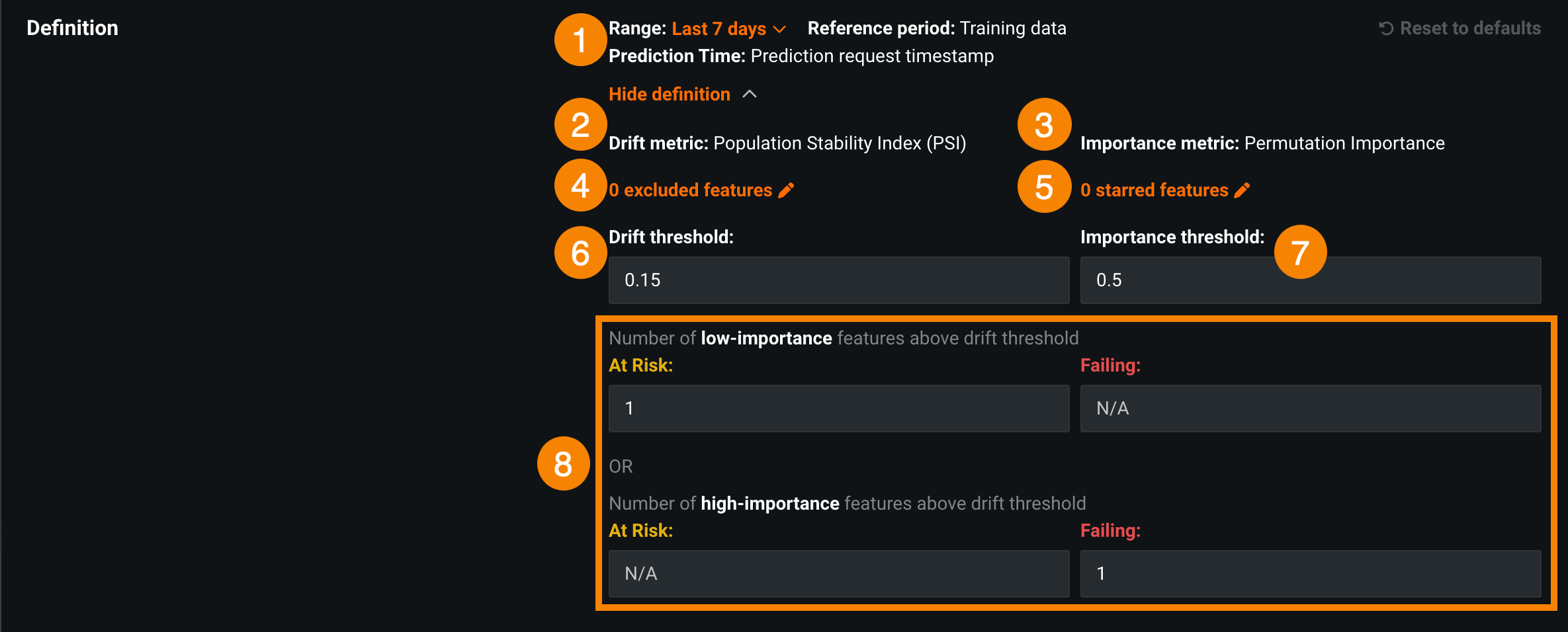

Use the Definition section of the Data Drift > Settings tab to set thresholds for drift and importance:

- Drift is a measure of how new prediction data differs from the original data used to train the model.

- Importance allows you to separate the features you care most about from those that are less important.

For both drift and importance, you can visualize the thresholds and how they separate the features on the Data Drift tab. By default, the data drift status for a deployment is marked as "Failing" when at least one high-importance feature exceeds the set drift metric threshold; it is marked as "At Risk" when no high-importance feature exceeds the threshold but at least one low-importance feature does.
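The default rule can be sketched in a few lines of Python. This is an illustration of the logic as described here, not DataRobot's implementation; the `FeatureDrift` class, the function name, and the sample threshold of 0.15 are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class FeatureDrift:
    """Hypothetical record for one monitored feature."""
    name: str
    high_importance: bool
    drift: float  # drift metric value, e.g. PSI


def default_drift_status(features: Iterable[FeatureDrift], drift_threshold: float) -> str:
    """Apply the default rule: any drifted high-importance feature means
    "Failing"; otherwise any drifted low-importance feature means "At Risk"."""
    drifted = [f for f in features if f.drift > drift_threshold]
    if any(f.high_importance for f in drifted):
        return "Failing"
    if drifted:
        return "At Risk"
    return "Passing"


# Illustrative values only; 0.15 is an assumed threshold.
sample = [
    FeatureDrift("age", high_importance=True, drift=0.05),
    FeatureDrift("zip_code", high_importance=False, drift=0.30),
]
print(default_drift_status(sample, drift_threshold=0.15))
```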

Deployment Owners can customize the rules used to calculate the drift status for each deployment. As a deployment Owner, you can:

- Define or override the list of high or low-importance features to monitor features that are important to you or put less emphasis on less important features.

- Exclude features expected to drift from drift status calculation and alerting so you do not get false alarms.

- Customize what "At Risk" and "Failing" drift statuses mean to personalize and tailor the drift status of each deployment to your needs.

To set up monitoring of drift status for a deployment:

- On the Data Drift Settings page, in the Definition section, configure the settings for monitoring data drift:

| | Element | Description |
|---|---|---|
| 1 | Range | Adjusts the time range of the Reference period, which compares training data to prediction data. Select a time range from the dropdown menu. |
| 2 | Drift metric | DataRobot only supports the Population Stability Index (PSI) metric. For more information, see the note on Drift metric support below. |
| 3 | Importance metric | DataRobot only supports the Permutation Importance metric. The importance metric measures the most impactful features in the training data. |
| 4 | X excluded features | Excludes features (including the target) from drift status calculations. Click X excluded features to open a dialog box where you can enter the names of features to set as Drift exclusions. Excluded features do not affect drift status for the deployment but still display on the Feature Drift vs. Feature Importance chart. See an example. |
| 5 | X starred features | Sets features to be treated as high importance even if they were initially assigned low importance. Click X starred features to open a dialog box where you can enter the names of features to set as High-importance stars. Once added, these features are assigned high importance. They ignore the importance thresholds, but still display on the Feature Drift vs. Feature Importance chart. See an example. |
| 6 | Drift threshold | Configures the thresholds of the drift metric. When drift thresholds are changed, the Feature Drift vs. Feature Importance chart updates to reflect the changes. |
| 7 | Importance threshold | Configures the thresholds of the importance metric. The importance metric measures the most impactful features in the training data. When importance thresholds are changed, the Feature Drift vs. Feature Importance chart updates to reflect the changes. See an example. |
| 8 | "At Risk" / "Failing" thresholds | Configures the values that trigger drift statuses for "At Risk" and "Failing". See an example. |

Note

Changes to thresholds affect the periods of time in which predictions are made across the entire history of a deployment. These updated thresholds are reflected in the performance monitoring visualizations on the Data Drift tab.

- After updating the data drift monitoring settings, click Save.
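One way to read the Definition settings is as a per-feature classification: exclusions remove a feature from status calculations, stars force high importance, and the two thresholds sort everything else. The sketch below illustrates that reading. The function name, the labels it returns, and the choice to let an exclusion take precedence over a star are assumptions, not documented behavior.

```python
from typing import Dict, Iterable, Tuple


def classify_features(
    features: Dict[str, Tuple[float, float]],
    drift_threshold: float,
    importance_threshold: float,
    excluded: Iterable[str] = (),
    starred: Iterable[str] = (),
) -> Dict[str, str]:
    """Label each feature given as name -> (importance, drift).

    Assumption: a feature that is both excluded and starred is treated
    as excluded.
    """
    excluded_set, starred_set = set(excluded), set(starred)
    labels = {}
    for name, (importance, drift) in features.items():
        if name in excluded_set:
            labels[name] = "excluded"  # ignored by status calculations
            continue
        # Starred features count as high importance regardless of threshold.
        high = name in starred_set or importance > importance_threshold
        if drift <= drift_threshold:
            labels[name] = "within threshold"
        elif high:
            labels[name] = "high-importance drift"
        else:
            labels[name] = "low-importance drift"
    return labels


# Illustrative values only.
labels = classify_features(
    {"age": (0.8, 0.40), "zip_code": (0.1, 0.30), "promo": (0.2, 0.50)},
    drift_threshold=0.15,
    importance_threshold=0.5,
    excluded=["promo"],
    starred=["zip_code"],
)
print(labels)
```

In this example `zip_code` has low measured importance but is starred, so its drift counts as high-importance drift, while `promo` is excluded and does not contribute to the status at all.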

Drift metric support

While the DataRobot UI only supports the Population Stability Index (PSI) metric, the DataRobot API supports Kullback-Leibler Divergence, Hellinger Distance, Histogram Intersection, and Jensen–Shannon Divergence. In addition, using the Python API client, you can retrieve a list of supported metrics.
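For intuition about what PSI measures, the sketch below computes the textbook form of the metric from two sets of bin counts: the sum over bins of (actual share minus expected share) times the natural log of their ratio. It is not DataRobot's implementation; the binning strategy, the `eps` floor for empty bins, and the function name are assumptions for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    expected_counts: Sequence[float],
    actual_counts: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """Textbook PSI over pre-binned counts.

    expected_counts: per-bin counts from the baseline (training) data.
    actual_counts: per-bin counts from the prediction data, same bins.
    eps: assumed floor on bin shares so empty bins do not produce log(0).
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both inputs must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total <= 0 or actual_total <= 0:
        raise ValueError("each input needs at least one observation")

    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        e_share = max(expected / expected_total, eps)
        a_share = max(actual / actual_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


# Identical distributions give 0; a shifted distribution gives a positive value.
print(population_stability_index([50, 50], [50, 50]))
print(population_stability_index([50, 50], [80, 20]))
```

Each term in the sum is non-negative, so PSI grows as the prediction data moves away from the baseline, which is what the drift threshold is compared against.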

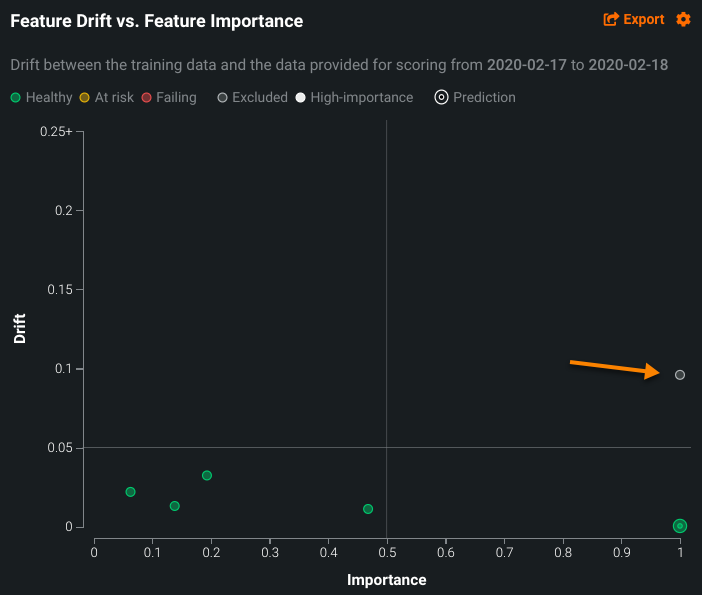

Example of an excluded feature

In the example below, the excluded feature, which appears as a gray circle, would normally change the drift status to "Failing". Because it is excluded, the status remains "Passing".

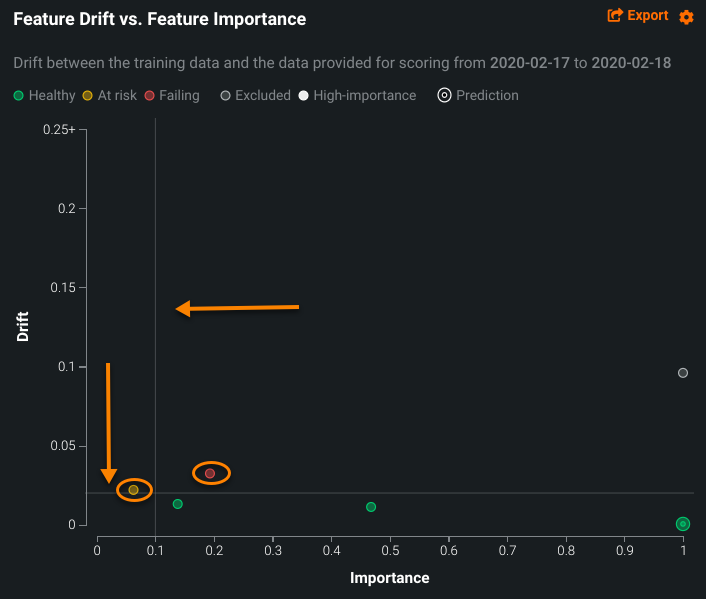

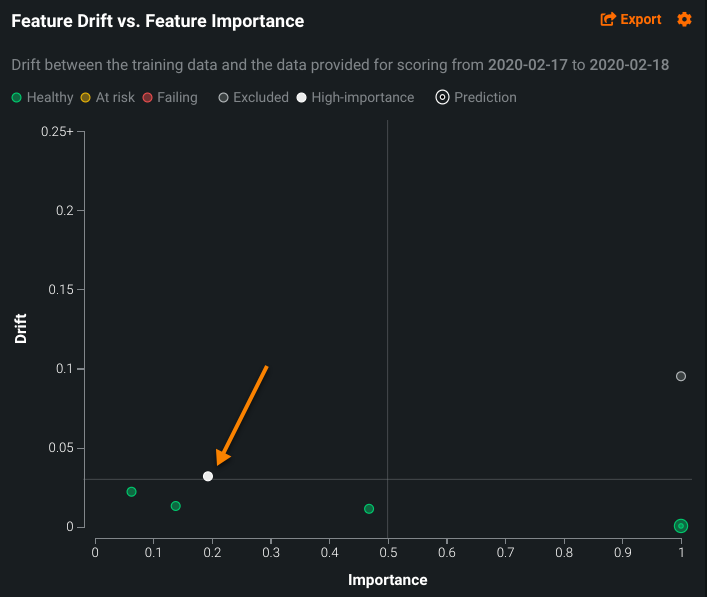

Example of configuring the importance and drift thresholds

In the example below, the importance and drift thresholds have been adjusted (indicated by the arrows), resulting in more features "At Risk" and "Failing" than in the chart above.

Example of starring a feature to assign high importance

In the example below, the starred feature, which appears as a white circle, would normally cause the drift status to be "At Risk" because of its initially low importance. However, because it is assigned high importance, the feature changes the drift status to "Failing".

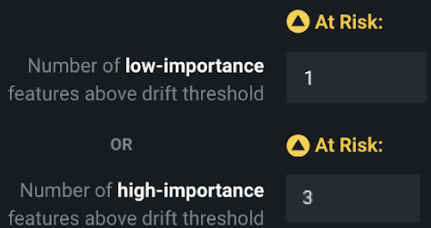

Example of setting a drift status rule

The following example configures the rule for a deployment to mark its drift status as "At Risk" if one of the following is true:

- The number of low-importance features above the drift threshold is greater than 1.

- The number of high-importance features above the drift threshold is greater than 3.
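The rule above amounts to a pair of count comparisons joined by "or". A minimal sketch, assuming the counts of drifted features have already been computed; the function and parameter names are hypothetical.

```python
def is_at_risk(
    low_importance_drifted: int,
    high_importance_drifted: int,
    low_limit: int = 1,
    high_limit: int = 3,
) -> bool:
    """Return True when the example "At Risk" rule is met.

    The rule fires if more than `low_limit` low-importance features, or
    more than `high_limit` high-importance features, are above the drift
    threshold. The defaults match the example in this section.
    """
    return low_importance_drifted > low_limit or high_importance_drifted > high_limit


# Two drifted low-importance features exceed the limit of 1.
print(is_at_risk(low_importance_drifted=2, high_importance_drifted=0))
# Exactly at both limits: neither count is "greater than" its limit.
print(is_at_risk(low_importance_drifted=1, high_importance_drifted=3))
```

Both comparisons are strict, so a deployment sitting exactly at a limit does not trigger the status.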