External Predictions

Through the External Predictions tab in advanced options, you can bring one or more external models into the DataRobot AutoML environment, view them on the Leaderboard, and run a subset of DataRobot's evaluative insights to compare them against DataRobot models. This feature:

- Helps you understand, based on its prediction values, how the accuracy of a model trained outside of DataRobot compares with that of DataRobot-trained models.

- Provides DataRobot's trust and explainability visualizations for externally trained models, supporting model understanding, compliance, and fairness analysis.

Workflow overview

To bring external models into DataRobot, follow this workflow:

- Prepare the dataset.

- Set advanced options.

- Add an external model.

- Evaluate the external model.

- Enable bias testing (binary classification only).

Prepare the dataset

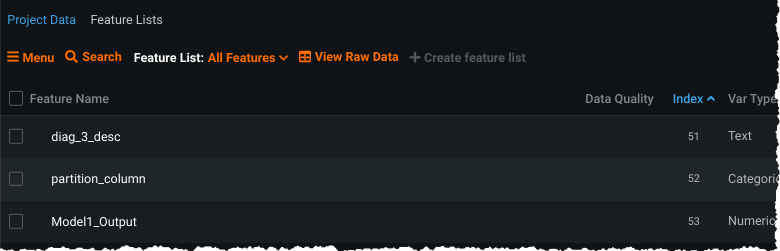

To set up the project, ensure the uploaded dataset has the following two columns:

- A partition column whose values assign each row to a partition, using either cross-validation or train/validation/holdout (TVH). If cross-validation is used, the values identify the folds; for example, 5-fold cross-validation uses the values `1`, `2`, `3`, `4`, and `5`. For TVH, the values are typically `T`, `V`, and `H`. This column is later referenced by the Partition Feature strategy in advanced options. In the following example, the column is named `partition_column`.

- A column of external model prediction values (the "external predictions column"). The descriptions below use the name `Model1_Output` as an example of this column.

Note

External model prediction values must be numeric. For binary classification projects, the prediction values must be within [0.0, 1.0]. For regression projects, the prediction values can be any real number, that is, within (-inf, inf).
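Because upload errors are easier to fix before a project is created, the following Python sketch checks a CSV file against the requirements above. It assumes the example column names `partition_column` and `Model1_Output`, a 5-fold cross-validation scheme with fold values `1` through `5`, and a binary classification target; the function `check_external_predictions` is a hypothetical helper, not part of DataRobot.

```python
import csv
import io
import math


def check_external_predictions(
    csv_text,
    partition_col="partition_column",
    prediction_col="Model1_Output",
    allowed_partitions=("1", "2", "3", "4", "5"),
    binary=True,
):
    """Hypothetical pre-upload check (not a DataRobot API).

    Returns a list of problems; an empty list means every row passed.
    """
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    # start=2 because line 1 of the file is the header row.
    for line_no, row in enumerate(reader, start=2):
        part = row.get(partition_col)
        if part not in allowed_partitions:
            problems.append(f"line {line_no}: unexpected partition value {part!r}")
        raw = row.get(prediction_col)
        try:
            value = float(raw)
        except (TypeError, ValueError):
            problems.append(f"line {line_no}: non-numeric prediction {raw!r}")
            continue
        if not math.isfinite(value):
            # Rejects NaN and +/-inf for both project types.
            problems.append(f"line {line_no}: prediction must be finite, got {raw!r}")
        elif binary and not 0.0 <= value <= 1.0:
            problems.append(f"line {line_no}: prediction {value} outside [0.0, 1.0]")
    return problems


sample = (
    "target,partition_column,Model1_Output\n"
    "1,1,0.91\n"
    "0,2,0.12\n"
    "0,H,1.7\n"  # not a 5-fold CV value, and outside [0.0, 1.0]
)
for problem in check_external_predictions(sample):
    print(problem)
```

For a TVH dataset, pass `allowed_partitions=("T", "V", "H")`; for a regression project, pass `binary=False` so that only the finiteness check applies.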

Set advanced options

To prepare for modeling:

-

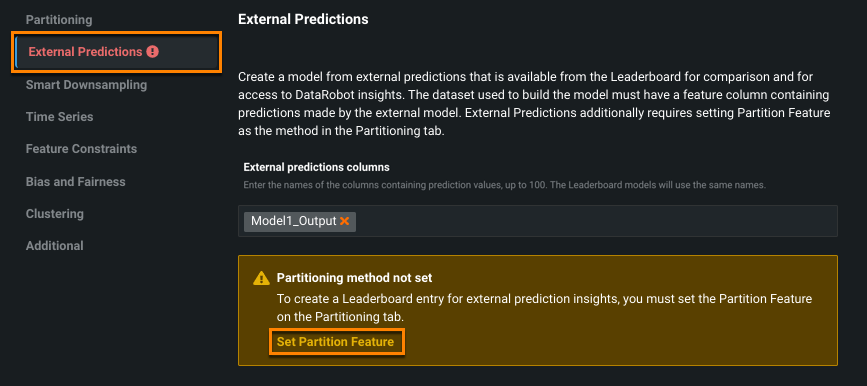

Open the External Predictions tab in advanced options. Enter the external predictions column name(s) from your dataset (up to 100 columns). You are prompted to ensure that Partitioning is set.

-

Click Set Partition Feature to open the appropriate tab. From the Partitioning tab:

- Set Select partitioning method to Partition Feature.

- Set Partition Feature to the column name

partition_column. - Set Run models using to either cross validation or TVH.

- If using TVH, set the values found in the

partition_columnthat represent the partitions.
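Before you select the partition values on the Partitioning tab, it can help to confirm how many rows fall into each partition. The following Python sketch does that. The function `summarize_partitions` and its `tvh_mapping` parameter are hypothetical illustrations, not part of DataRobot, and the sketch assumes that the partition values are read as strings.

```python
from collections import Counter


def summarize_partitions(values, tvh_mapping=None):
    """Count rows per partition for a partition feature (hypothetical helper).

    values: the partition column values, one per row, as strings.
    tvh_mapping: for TVH, a dict naming the column value chosen for each
        partition, e.g. {"training": "T", "validation": "V", "holdout": "H"}.
        None means cross-validation, where each distinct value is one fold.
    """
    counts = Counter(values)
    if tvh_mapping is None:
        return {f"fold {value}": n for value, n in sorted(counts.items())}
    summary = {}
    for partition, value in tvh_mapping.items():
        if value not in counts:
            # The value selected for a partition must actually occur in the column.
            raise ValueError(f"{partition} value {value!r} not found in the column")
        summary[partition] = counts[value]
    return summary


print(summarize_partitions(["1", "2", "1", "3"]))
print(summarize_partitions(list("TTTVH"), {"training": "T", "validation": "V", "holdout": "H"}))
```

Raising an error for a missing value is a deliberately strict choice for the sketch: it catches a mistyped partition value early instead of silently reporting an empty partition.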

Add an external model

You can add an external model to the Leaderboard either:

- As an individual model using Manual mode.

- As one of many models using full Autopilot, Quick, or Comprehensive mode. In this case, the external model is added at the end of the model recommendation process.

For example, to add a single external model:

-

From the Start page, change the modeling mode to Manual. (This allows you to select your external model from the Repository.) Click Start to begin EDA2.

-

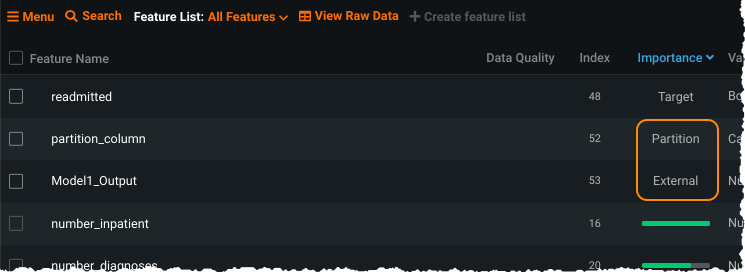

Once EDA2 finishes, open the Data page. In the Importance column, the external model prediction values column,

Model1_Output, is labeled External and the partition feature,partition_column, is labeled Partition. -

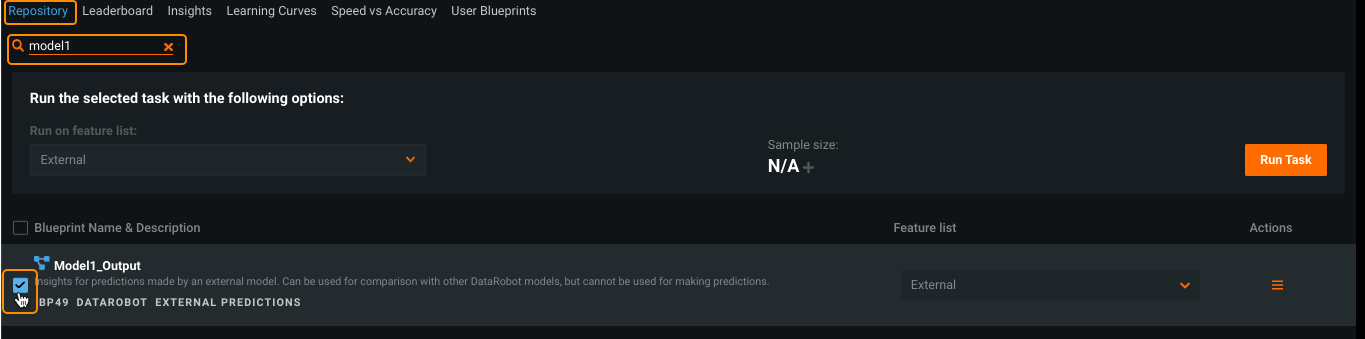

Open the model Repository, search for

Model1_Output, and select it. Notice in the resulting task setup fields, the feature list and sample size are not available for modification. This is because DataRobot cannot know which features from the training data, or what sample size, were used to train the external model. -

Click Run Task.

Evaluate the external model

When model building finishes, the model becomes available on the Leaderboard for comparison and further investigation. It is marked with the EXTERNAL PREDICTIONS label.

Note

The Leaderboard metric scores (such as LogLoss) for validation, cross-validation, and holdout are consistent with the equivalent scores calculated by scikit-learn.
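To spot-check a Leaderboard score for an external model, you can compute the metric yourself on the rows of the same partition. The Python sketch below implements binary LogLoss with only the standard library and groups rows by partition value. For probabilities strictly between 0 and 1, it should agree with scikit-learn's `sklearn.metrics.log_loss`. The clipping constant `EPS` and the helper names are assumptions made for illustration. For cross-validation, the sketch also assumes that the reported score is the mean of the per-fold scores; confirm this against your own project.

```python
import math

EPS = 1e-15  # assumed clipping constant so that log(0) is never evaluated


def log_loss(y_true, y_pred):
    """Binary LogLoss: mean of -[y*log(p) + (1 - y)*log(1 - p)]."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, EPS), 1 - EPS)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


def score_by_partition(partitions, y_true, y_pred):
    """Group rows by partition value and compute LogLoss for each group."""
    groups = {}
    for part, y, p in zip(partitions, y_true, y_pred):
        ys, ps = groups.setdefault(part, ([], []))
        ys.append(y)
        ps.append(p)
    return {part: log_loss(ys, ps) for part, (ys, ps) in groups.items()}


# TVH example: compare scores["V"] and scores["H"] with the Leaderboard values.
partitions = ["T", "V", "V", "H", "H"]
actuals = [1, 0, 1, 0, 1]
predictions = [0.9, 0.2, 0.7, 0.4, 0.8]
scores = score_by_partition(partitions, actuals, predictions)
print(scores)

# Cross-validation (assumption): average the per-fold validation scores.
fold_scores = score_by_partition(["1", "1", "2", "2"], [1, 0, 0, 1], [0.8, 0.3, 0.1, 0.6])
print(sum(fold_scores.values()) / len(fold_scores))
```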

The following insights are supported:

| Insight | Project type |
|---|---|
| Lift Chart | All |
| Residuals | Regression |
| ROC Curve | Classification |
| Profit Curve | Classification |
| Model comparison | All |
| Model compliance documentation | All; only a subset of sections is generated because DataRobot has limited knowledge of the external model. |
| Bias and Fairness | Binary classification; see below. |

Bias and fairness testing

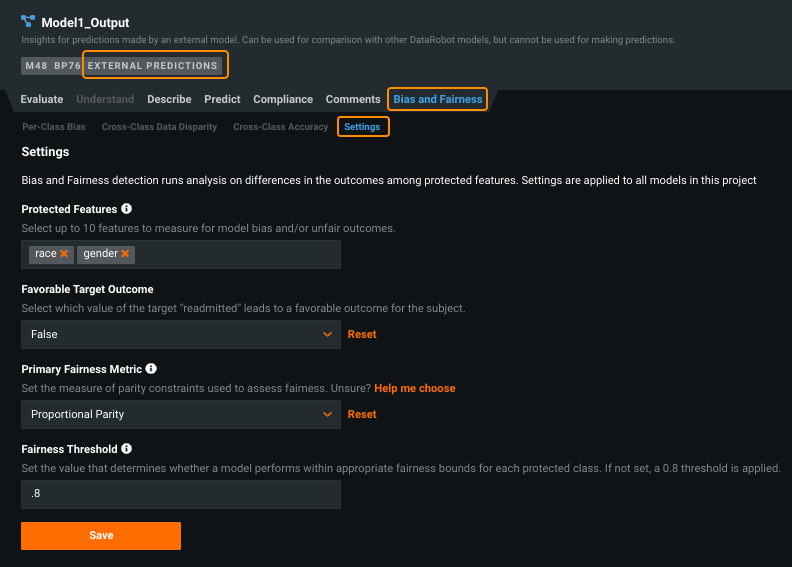

If the dataset creates a binary classification project, you can also configure the Bias and Fairness options to test the external model for bias.

- Complete the fields on the Bias and Fairness > Settings page. Click Save, and DataRobot retrieves the necessary data.

- Open the Per-Class Bias tab to help identify whether a model is biased and, if so, by how much and toward or against which classes.

- Open the Cross-Class Accuracy tab to view calculated evaluation metrics and ROC curve-related scores, segmented by class, for each protected feature.
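Per-Class Bias compares a fairness measure across the classes of a protected feature, and Cross-Class Accuracy segments evaluation metrics in the same way. The Python sketch below illustrates the general idea only. It thresholds the external predictions at an assumed 0.5 and then computes, for each class, the favorable-outcome rate, accuracy, true positive rate, and a proportional-parity style ratio (each class's favorable rate divided by the highest). The threshold, the choice of metrics, and the function name `per_class_metrics` are illustrative assumptions. DataRobot's actual fairness metric and favorable outcome are configured on the Settings page, and its calculations may differ.

```python
from collections import defaultdict


def per_class_metrics(protected, y_true, y_pred, threshold=0.5, favorable=1):
    """Hypothetical per-class metrics for one protected feature."""
    stats = defaultdict(lambda: {"n": 0, "favorable": 0, "correct": 0, "tp": 0, "pos": 0})
    for group, y, p in zip(protected, y_true, y_pred):
        label = 1 if p >= threshold else 0  # assumed decision threshold
        s = stats[group]
        s["n"] += 1
        s["favorable"] += int(label == favorable)
        s["correct"] += int(label == y)
        if y == 1:
            s["pos"] += 1
            s["tp"] += int(label == 1)
    result = {}
    for group, s in stats.items():
        result[group] = {
            "favorable_rate": s["favorable"] / s["n"],
            "accuracy": s["correct"] / s["n"],
            "tpr": s["tp"] / s["pos"] if s["pos"] else float("nan"),
        }
    # Proportional-parity style ratio: each class's favorable rate vs. the highest.
    best = max(r["favorable_rate"] for r in result.values())
    for r in result.values():
        r["proportional_parity"] = r["favorable_rate"] / best if best else float("nan")
    return result


metrics = per_class_metrics(["A", "A", "B", "B"], [1, 0, 1, 0], [0.9, 0.6, 0.4, 0.1])
for group, values in metrics.items():
    print(group, values)
```

A ratio well below 1 for a class indicates that the class receives the favorable outcome less often than the best-treated class. This is the kind of gap the Per-Class Bias tab is designed to surface.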