Manage custom model resources

After creating a custom inference model, you can configure the resources the model consumes to facilitate smooth deployment and minimize potential environment errors in production.

To configure the resource allocation and access settings:

-

Navigate to Model Registry > Custom Model Workshop.

-

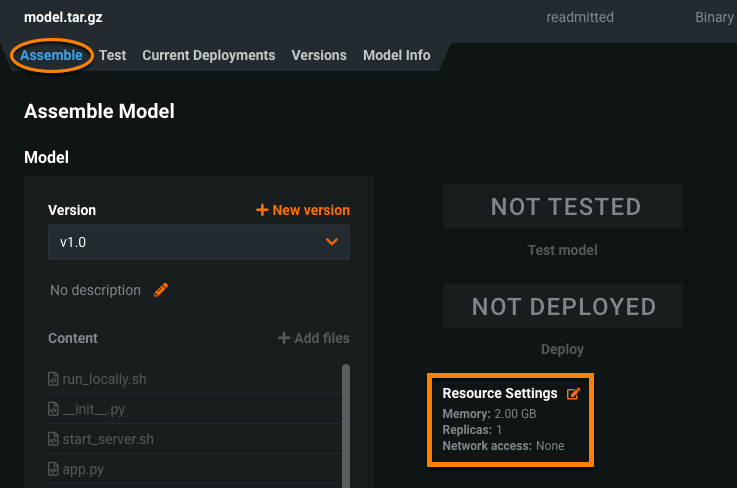

On the Models tab, click the model you want to manage and then click the Assemble tab.

- On the custom model's Assemble Model page, under the deployment status, configure the Resource Settings:

Note

You can also see these settings in the custom model's model package on the Model Registry > Model Packages page. Click the custom model package, and then, on the Package Info tab, scroll down to the Resource Allocation section.

-

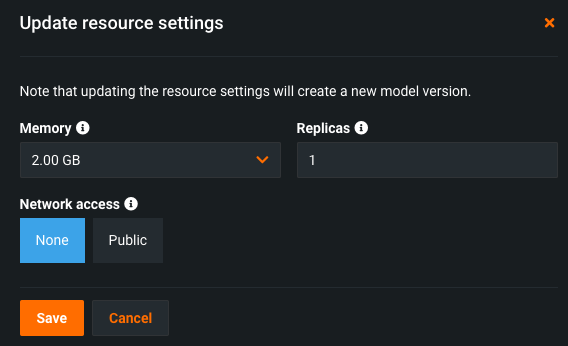

Click the edit icon and configure the custom model's resource allocation and network access settings in the Update resource settings dialog box:

Resource settings access

Users can set the maximum memory allocated for a model, but only organization admins can configure the other resource settings.

Imbalanced memory settings

DataRobot recommends configuring resource settings only when necessary. When you configure the Memory setting below, you set the Kubernetes memory "limit" (the maximum allowed memory allocation); however, you can't set the memory "request" (the minimum guaranteed memory allocation). For this reason, it is possible to set the "limit" value too far above the default "request" value. With such an imbalance, the custom model can use much more memory than it is guaranteed by its "request", which makes it a candidate for eviction whenever the node runs short of memory. As a result, you may experience unstable custom model execution due to frequent eviction and relaunching of the custom model. If you require an increased Memory setting, you can mitigate this issue by increasing the "request" at the Organization level; for more information, contact DataRobot Support.
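To make the request/limit distinction concrete, the following Python sketch classifies a container's memory usage against a memory request and a memory limit. It is a simplified model of general Kubernetes behavior, not DataRobot code: the function name, the MiB units, and the example values are hypothetical, and real eviction decisions also depend on node memory pressure and pod priority.

```python
from enum import Enum


class MemoryState(Enum):
    """Simplified outcome for a container under Kubernetes request/limit semantics."""
    WITHIN_REQUEST = "within request (guaranteed)"
    ABOVE_REQUEST = "above request (eviction candidate under node memory pressure)"
    ABOVE_LIMIT = "above limit (terminated and relaunched)"


def classify_memory(usage_mib: int, request_mib: int, limit_mib: int) -> MemoryState:
    """Hypothetical helper: compare memory usage with the request and the limit (all in MiB)."""
    if request_mib > limit_mib:
        raise ValueError("Kubernetes requires the memory request to be <= the limit")
    if usage_mib > limit_mib:
        # Exceeding the limit gets the container killed; Kubernetes then restarts it.
        return MemoryState.ABOVE_LIMIT
    if usage_mib > request_mib:
        # Allowed by the limit but not guaranteed: the node may evict the pod to reclaim memory.
        return MemoryState.ABOVE_REQUEST
    return MemoryState.WITHIN_REQUEST


# A large limit over a small default request leaves a wide "unguaranteed" band.
request, limit = 512, 4096  # hypothetical values in MiB
for usage in (400, 2048, 5000):
    print(usage, classify_memory(usage, request, limit).value)
```

In this sketch, raising only the limit widens the ABOVE_REQUEST band, which is the unstable region the note above describes; raising the request shrinks it.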

| Setting | Description |
|---|---|
| Memory | Determines the maximum amount of memory that may be allocated for a custom inference model. If a model allocates more than the configured maximum memory value, it is evicted by the system. If this occurs during testing, the test is marked as a failure. If this occurs when the model is deployed, the model is automatically launched again by Kubernetes. |
| Replicas | Sets the number of replicas executed in parallel to balance workloads when a custom model is running. Increasing the number of replicas may not result in better performance, depending on the custom model's speed. |
| Network access | Premium feature. Configures the egress traffic of the custom model. Public: The default setting. The custom model can access any fully qualified domain name (FQDN) in a public network to leverage third-party services. None: The custom model is isolated from the public network and cannot access third party services. |

DATAROBOT_ENDPOINT and DATAROBOT_API_TOKEN environment variables. These environment variables are available for any custom model using a drop-in environment or a custom environment built on DRUM.

Premium feature: Network access

Every new custom model you create has public network access by default; however, when you create new versions of any custom model created before October 2023, those new versions remain isolated from public networks (access set to None) until you enable public access for a new version (access set to Public). From this point on, each subsequent version inherits the public access definition from the previous version.
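The version rules above can be summarized in a short Python sketch. It is a hypothetical model of the described behavior, not a DataRobot API: NetworkAccess and new_version_access are illustrative names, and versions of a custom model created before October 2023 are assumed to carry an effective setting of None.

```python
from enum import Enum
from typing import List, Optional


class NetworkAccess(Enum):
    """The two egress settings listed under Network access."""
    PUBLIC = "Public"
    NONE = "None"  # isolated from public networks (not Python's None)


def new_version_access(
    previous: Optional[NetworkAccess],
    chosen: Optional[NetworkAccess] = None,
) -> NetworkAccess:
    """Hypothetical rule for the network access of a newly created version.

    previous: setting of the previous version, or Python None for a brand-new model.
    chosen:   setting explicitly selected for the new version, if any.
    """
    if chosen is not None:
        # An explicit choice for the new version takes precedence.
        return chosen
    if previous is None:
        # Brand-new custom model: public network access by default.
        return NetworkAccess.PUBLIC
    # Otherwise the new version inherits the previous version's setting.
    return previous


# A model created before October 2023 (assumed effective setting: NONE).
history: List[NetworkAccess] = [NetworkAccess.NONE]
history.append(new_version_access(history[-1]))                        # stays isolated
history.append(new_version_access(history[-1], NetworkAccess.PUBLIC))  # public access enabled
history.append(new_version_access(history[-1]))                        # inherits Public
print([access.value for access in history])
```

Running the walkthrough shows a legacy model staying isolated until public access is enabled once, after which later versions keep the Public setting.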

- Once you have configured the resource settings for the custom model, click Save.

  This creates a new minor version of the custom model with edited resource settings applied.
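The DATAROBOT_ENDPOINT and DATAROBOT_API_TOKEN environment variables mentioned with the Network access setting can be read from model code like any other environment variable. The following Python sketch collects them into connection settings using only the standard library. The helper name datarobot_api_settings is hypothetical, and sending the token as a Bearer value in an Authorization header is an assumption to confirm against the DataRobot API documentation for your installation.

```python
import os
from typing import Dict, Mapping, Optional


def datarobot_api_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Hypothetical helper: read the DataRobot API endpoint and token from the environment."""
    env = os.environ if env is None else env
    endpoint = env.get("DATAROBOT_ENDPOINT")
    token = env.get("DATAROBOT_API_TOKEN")
    if not endpoint or not token:
        raise RuntimeError("DATAROBOT_ENDPOINT and DATAROBOT_API_TOKEN must both be set")
    return {
        # Drop a trailing slash so paths can be appended consistently.
        "endpoint": endpoint.rstrip("/"),
        # Assumption: the API accepts the token as a Bearer credential.
        "authorization": f"Bearer {token}",
    }


# Usage with explicit placeholder values instead of the real process environment.
settings = datarobot_api_settings({
    "DATAROBOT_ENDPOINT": "https://example.invalid/api/v2/",
    "DATAROBOT_API_TOKEN": "placeholder-token",
})
print(settings["endpoint"])
```

Accepting an optional mapping keeps the helper testable without modifying os.environ; inside a running custom model, call it with no arguments.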