Scoring Code for time series projects

Scoring Code is a portable, low-latency way to use DataRobot models outside the DataRobot application. You can export time series models as a Java-based Scoring Code package from:

- The Leaderboard (Leaderboard > Predict > Portable Predictions)

- A deployment (Deployments > Predictions > Portable Predictions)

Time series Scoring Code considerations

For information on which time series projects, models, and capabilities support Scoring Code, see the Time series support section.

Time series parameters for CLI scoring

DataRobot supports scoring at the command line. The following table describes the time series parameters you can pass to the Scoring Code JAR:

| Field | Required? | Default | Description |
|---|---|---|---|
| `--forecast_point=<value>` | No | None | Formatted date from which to forecast. |
| `--date_format=<value>` | No | None | Date format to use for output. |
| `--predictions_start_date=<value>` | No | None | Timestamp that indicates when to start calculating predictions. |
| `--predictions_end_date=<value>` | No | None | Timestamp that indicates when to stop calculating predictions. |
| `--with_intervals` | No | None | Turns on prediction interval calculations. |
| `--interval_length=<value>` | No | None | Interval length as an integer value from 1 to 99. |
| `--time_series_batch_processing` | No | Disabled | Enables performance-optimized batch processing for time series models. |
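To illustrate how the parameters above fit together, the following is a minimal Bash sketch. It is a hypothetical wrapper, not a DataRobot tool: the function names, the JAR name `model.jar`, the CSV file names, and the ISO-style dates in the usage example are assumptions (use timestamps that match your dataset), and the `DRY_RUN` switch exists only so you can print and check the command before running it. It covers two cases: forecasting from a single forecast point, and historical range predictions bounded by `--predictions_start_date` and `--predictions_end_date`.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around a time series Scoring Code JAR.
# Assumptions: the JAR is ./model.jar and you supply the input/output CSV paths.
# Set DRY_RUN=1 to print the command instead of running it.

run_jar() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"     # print the command, space-separated
  else
    "$@"          # run the command as given
  fi
}

# Forecast from a single forecast point. The date format is an assumption;
# it should match the dates in your dataset.
score_from_forecast_point() {
  local input="$1" output="$2" forecast_point="$3"
  run_jar java -jar model.jar csv \
    --input="$input" \
    --output="$output" \
    --forecast_point="$forecast_point"
}

# Historical range predictions between a start and an end timestamp.
# --time_series_batch_processing is optional; it is included here to show
# where the flag goes.
score_historical_range() {
  local input="$1" output="$2" start="$3" end="$4"
  run_jar java -jar model.jar csv \
    --input="$input" \
    --output="$output" \
    --predictions_start_date="$start" \
    --predictions_end_date="$end" \
    --time_series_batch_processing
}
```

For example, `DRY_RUN=1 score_from_forecast_point input.csv output.csv 2024-01-15` prints the `java -jar model.jar csv ...` command instead of running it, which makes it easy to confirm flag spelling before scoring a large file.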

Scoring Code for segmented modeling projects

With segmented modeling, you can build individual models for segments of a multiseries project. DataRobot then merges these models into a Combined Model.

Note

Scoring Code support is available for segments defined by an ID column in the dataset, not segments discovered by a clustering model.

Verify that segment models have Scoring Code¶

If the champion model for a segment does not have Scoring Code, select a model that does have Scoring Code:

-

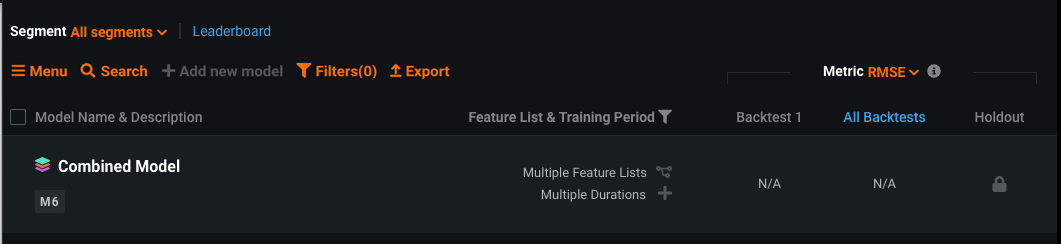

Navigate to the Combined Model on the Leaderboard.

-

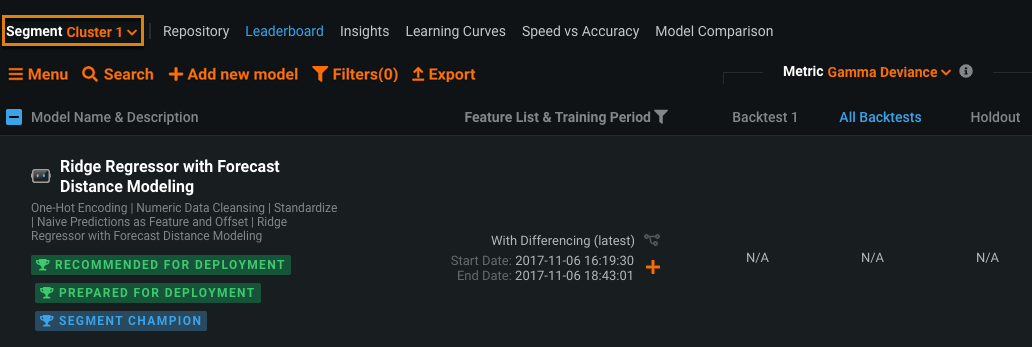

From the Segment dropdown menu, select a segment. Locate the champion for the segment (designated by the SEGMENT CHAMPION indicator).

-

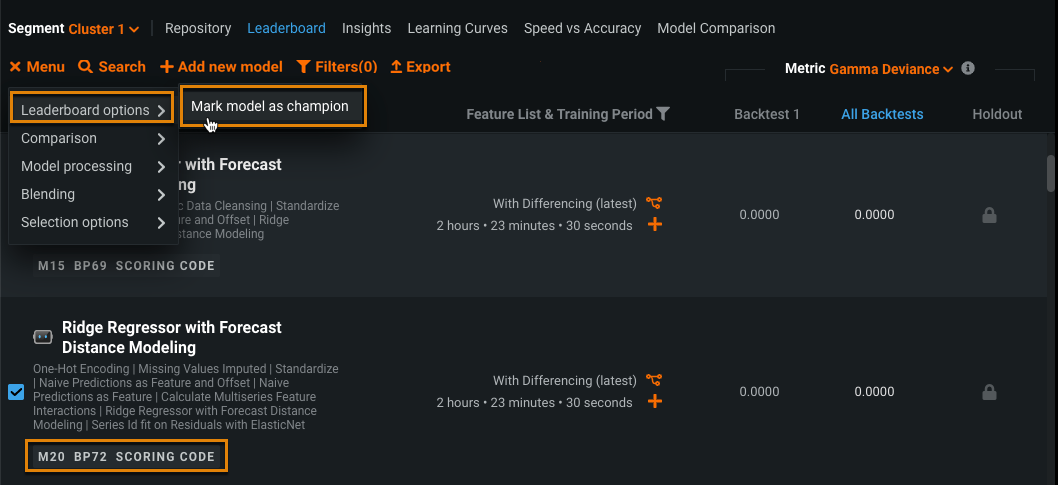

If the segment champion does not have a SCORING CODE indicator, select a new model that meets your modeling requirements and has the SCORING CODE indicator. Then select Leaderboard options > Mark Model as Champion from the Menu at the top.

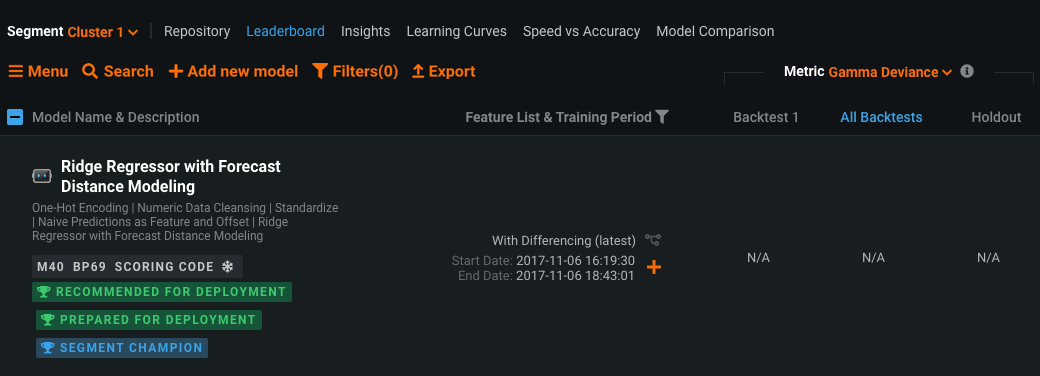

The segment now has a segment champion with Scoring Code:

-

Repeat the process for each segment of the Combined Model to ensure that all of the segment champions have Scoring Code.

Download Scoring Code for a Combined Model

To download the Scoring Code JAR for a Combined Model:

- From the Leaderboard: Download the Scoring Code from the Combined Model.

- From a deployment: Deploy your Combined Model, ensure that each segment has Scoring Code, and download the Scoring Code from the Combined Model deployment.

Prediction intervals in Scoring Code

You can include prediction intervals in the downloaded Scoring Code JAR for a time series model. Supported interval lengths range from 1 to 99.

Download Scoring Code with prediction intervals

To download the Scoring Code JAR with prediction intervals enabled:

- From the Leaderboard: Download the Scoring Code with Include Prediction Intervals enabled.

- From a deployment: Deploy your model and download the Scoring Code with Include Prediction Intervals enabled.

CLI example using prediction intervals

The following is a CLI example for scoring a model with prediction intervals:

```shell
java -jar model.jar csv \
  --input=syph.csv \
  --output=output.csv \
  --with_intervals \
  --interval_length=87
```
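Because `--interval_length` accepts only an integer from 1 to 99, a wrapper script can reject bad values before starting the JVM. The following Bash sketch is hypothetical, not a DataRobot-provided tool: the function name, `model.jar`, and the `DRY_RUN` switch (which prints the command instead of running it) are assumptions. It assumes the JAR was downloaded with Include Prediction Intervals enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: score with prediction intervals after checking that the
# interval length is an integer from 1 to 99. model.jar is an assumed file name.
score_with_intervals() {
  local input="$1" output="$2" length="$3"

  # Reject anything that is not a whole number in the supported range.
  # 10# forces base-10 so values with leading zeros are not read as octal.
  if ! [[ "$length" =~ ^[0-9]+$ ]] || (( 10#$length < 1 || 10#$length > 99 )); then
    echo "interval length must be an integer from 1 to 99, got: $length" >&2
    return 1
  fi

  local -a cmd
  cmd=(java -jar model.jar csv
    --input="$input"
    --output="$output"
    --with_intervals
    --interval_length="$length")

  # With DRY_RUN=1, print the command instead of running it.
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Calling `score_with_intervals syph.csv output.csv 87` runs the same command as the CLI example, while a value such as `100` or `12.5` fails with an error message before any scoring starts.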

Feature considerations

Consider the following when working with Scoring Code:

- Using Scoring Code in production requires additional development effort to implement model management and model monitoring, which the DataRobot API provides out of the box.

- Exportable Java Scoring Code requires extra RAM during model building, so keep your training dataset under 8GB; larger projects may fail due to memory issues. If you get an out-of-memory error, decrease the sample size and try again. This memory requirement does not apply to scoring: during scoring, the only limitation on the dataset is the RAM of the machine on which the Scoring Code is run.
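Since the Scoring Code runs inside a Java virtual machine, and the JVM typically caps its heap below the machine's physical memory by default, scoring large files may require raising the maximum heap. The sketch below assumes the standard JVM `-Xmx` option; the wrapper function, `model.jar`, the file names, and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: give the JVM that runs the Scoring Code JAR a larger
# maximum heap via the standard -Xmx option. model.jar and the CSV paths are
# assumptions. Set DRY_RUN=1 to print the command instead of running it.
score_with_heap() {
  local heap="$1" input="$2" output="$3"

  # Accept sizes such as 4096m or 16g (a number with an optional k/m/g suffix).
  if ! [[ "$heap" =~ ^[0-9]+[kKmMgG]?$ ]]; then
    echo "invalid heap size: $heap" >&2
    return 1
  fi

  local -a cmd
  cmd=(java "-Xmx$heap" -jar model.jar csv --input="$input" --output="$output")

  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `score_with_heap 16g input.csv output.csv` runs the JAR with a 16 GB maximum heap. Choose a value below the machine's physical memory so the operating system and other processes keep some headroom.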

Model support¶

Consider the following model support considerations when planning to use Scoring Code:

-

Scoring Code is available for models containing only supported built-in tasks. It is not available for custom models or models containing one or more custom tasks.

-

Scoring Code is not supported in multilabel projects.

-

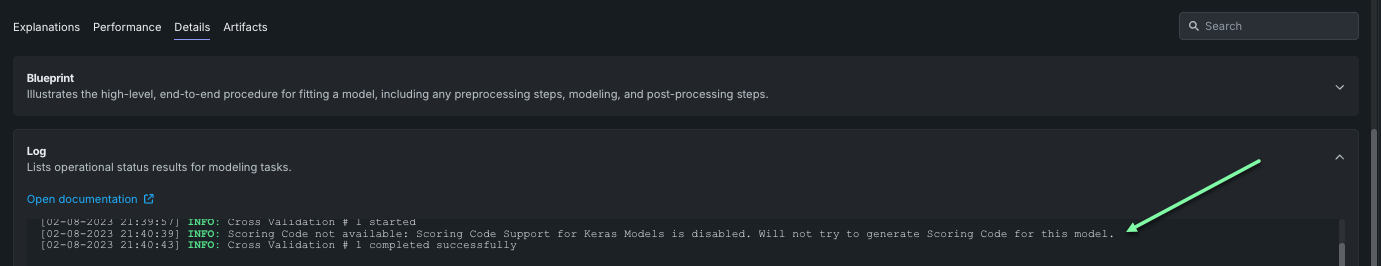

Keras models do not support Scoring Code by default; however, support can be enabled by having an administrator activate the Enable Scoring Code Support for Keras Models feature flag. Note that these models are not compatible with Scoring Code for Android and Snowflake.

Additional instances in which Scoring Code generation is not available include:

- Naive Bayes models

- Visual AI and Location AI models

- Text tokenization involving the MeCab tokenizer for Japanese text (accessed via Advanced Tuning)

Text tokenization

Japanese text is supported when using the default text tokenization configuration (char-grams).

Time series support

The following time series projects and models don't support Scoring Code:

- Time series binary classification projects

- Time series feature derivation projects resulting in datasets larger than 5GB

- Time series anomaly detection models

Anomaly detection models support

While time series anomaly detection models don't generally support Scoring Code, it is supported for IsolationForest and some XGBoost-based anomaly detection model blueprints. For a list of supported time series blueprints, see Time series blueprints with Scoring Code support.

Unsupported capabilities

The following capabilities are not supported for Scoring Code. If Scoring Code is not generated due to an unsupported task in the blueprint, the reason is shown in the Details > Log tab.

- Row-based / irregular data

- Nowcasting (single forecast point)

- Intramonth seasonality

- Time series blenders

- Autoexpansion

- Exponentially Weighted Moving Average (EWMA)

- Clustering

- Partial history / cold start

- Prediction Explanations

- Type conversions after uploading data

Supported capabilities

The following capabilities are supported for time series Scoring Code:

- Time series parameters for scoring at the command line

- Segmented modeling

- Prediction intervals

- Calendars (high resolution)

- Cross-series

- Zero inflated / naïve binary

- Nowcasting (historical range predictions)

- "Blind history" gaps

- Weighted features

Weighted features support

While weighted features are generally supported, they can make Scoring Code unavailable due to validation issues. For example, differences in rolling sum computation can cause consistency issues in projects with a weight feature and models trained on feature lists with weighted std or weighted mean.

Time series blueprints with Scoring Code support

The following blueprints typically support Scoring Code:

- AUTOARIMA with Fixed Error Terms

- ElasticNet Regressor (L2 / Gamma Deviance) using Linearly Decaying Weights with Forecast Distance Modeling

- ElasticNet Regressor (L2 / Gamma Deviance) with Forecast Distance Modeling

- ElasticNet Regressor (L2 / Poisson Deviance) using Linearly Decaying Weights with Forecast Distance Modeling

- ElasticNet Regressor (L2 / Poisson Deviance) with Forecast Distance Modeling

- Eureqa Generalized Additive Model (250 Generations)

- Eureqa Generalized Additive Model (250 Generations) (Gamma Loss)

- Eureqa Generalized Additive Model (250 Generations) (Poisson Loss)

- Eureqa Regressor (Quick Search: 250 Generations)

- eXtreme Gradient Boosted Trees Regressor

- eXtreme Gradient Boosted Trees Regressor (Gamma Loss)

- eXtreme Gradient Boosted Trees Regressor (Poisson Loss)

- eXtreme Gradient Boosted Trees Regressor with Early Stopping

- eXtreme Gradient Boosted Trees Regressor with Early Stopping (Fast Feature Binning)

- eXtreme Gradient Boosted Trees Regressor with Early Stopping (Gamma Loss)

- eXtreme Gradient Boosted Trees Regressor with Early Stopping (learning rate =0.06) (Fast Feature Binning)

- eXtreme Gradient Boosting on ElasticNet Predictions

- eXtreme Gradient Boosting on ElasticNet Predictions (Poisson Loss)

- Light Gradient Boosting on ElasticNet Predictions

- Light Gradient Boosting on ElasticNet Predictions (Gamma Loss)

- Light Gradient Boosting on ElasticNet Predictions (Poisson Loss)

- Performance Clustered Elastic Net Regressor with Forecast Distance Modeling

- Performance Clustered eXtreme Gradient Boosting on Elastic Net Predictions

- RandomForest Regressor

- Ridge Regressor using Linearly Decaying Weights with Forecast Distance Modeling

- Ridge Regressor with Forecast Distance Modeling

- Vector Autoregressive Model (VAR) with Fixed Error Terms

- IsolationForest Anomaly Detection with Calibration (time series)

- Anomaly Detection with Supervised Learning (XGB) and Calibration (time series)

While the blueprints listed above typically support Scoring Code, there are situations when Scoring Code is unavailable:

- Scoring Code might not be available for some models generated using Feature Discovery.

- For calendars that are not day-level, consistency issues can occur when an event is not in the dataset; in this case, Scoring Code is unavailable.

- Consistency issues can occur when inferring the forecast point in situations with a non-zero blind history; however, Scoring Code is still available in this scenario.

- Scoring Code might not be available for some models that use text tokenization involving the MeCab tokenizer for Japanese text (accessed via Advanced Tuning). Japanese text is supported when using the default char-gram configuration during Autopilot.

- Differences in rolling sum computation can cause consistency issues in projects with a weight feature and models trained on feature lists with weighted std or weighted mean.

Prediction Explanations support

Consider the following when working with Prediction Explanations for Scoring Code:

- To download Prediction Explanations with Scoring Code, you must select Include Prediction Explanations during Leaderboard download or Deployment download. This option is not available for Legacy download.

- Scoring Code supports only XEMP-based Prediction Explanations. SHAP-based Prediction Explanations aren't supported.

- Scoring Code doesn't support Prediction Explanations for time series models.