Monitoring agent use cases¶

Reference the use cases below for examples of how to apply the monitoring agent:

- Enable large-scale monitoring

- Perform advanced agent memory tuning for large workloads

- Report accuracy for challengers

- Report metrics

- Monitor a Spark environment

- Monitor using the MLOps CLI

Enable large-scale monitoring¶

To support large-scale monitoring, the MLOps library provides a way to calculate statistics from raw data on the client side. Then, instead of reporting raw features and predictions to the DataRobot MLOps service, the client can report anonymized statistics without the feature and prediction data. Reporting prediction data statistics calculated on the client side is the optimal (and highly performant) method compared to reporting raw data, especially at scale (billions of rows of features and predictions). In addition, because client-side aggregation only sends aggregates of feature values, it is suitable for environments where you don't want to disclose the actual feature values.

To enable the large-scale monitoring functionality, you must set one of the feature type settings. These settings provide the dataset's feature types and can be configured programmatically in your code (using setters) or by defining environment variables.

Note

If you configure these settings both programmatically in your code and through environment variables, the environment variables take precedence.

The following environment variables are used to configure large-scale monitoring:

| Variable | Description |
|---|---|
| MLOPS_FEATURE_TYPES_FILENAME | The path to the file containing the dataset's feature types in JSON format. Example: "/tmp/feature_types.json" |
| MLOPS_FEATURE_TYPES_JSON | The JSON containing the dataset's feature types. Example: [{"name": "feature_name_f1","feature_type": "date", "format": "%m-%d-%y"}] |
| Optional configuration | |
| MLOPS_STATS_AGGREGATION_MAX_RECORDS | The maximum number of records in a dataset to aggregate. Example: 10000 |
| MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_NAME | The name of the prediction timestamp column in the dataset you want to aggregate on the client side. Example: "ts" |
| MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_FORMAT | The format of the prediction timestamp values in the dataset. Example: "%Y-%m-%d %H:%M:%S.%f" |
| MLOPS_STATS_AGGREGATION_SEGMENT_ATTRIBUTES | The custom attribute used to segment the dataset for segmented analysis of data drift and accuracy. Example: "country" |
| MLOPS_STATS_AGGREGATION_AUTO_SAMPLING_PERCENTAGE | The percentage of raw data to report to DataRobot using algorithmic sampling. This setting supports challengers and accuracy tracking by providing a sample of raw features, predictions, and actuals. The rest of the data is sent in aggregate format. In addition, you must define MLOPS_ASSOCIATION_ID_COLUMN_NAME to identify the column in the input data containing the data for sampling. Example: 20 |
| MLOPS_ASSOCIATION_ID_COLUMN_NAME | The column containing the association ID required for automatic sampling and accuracy tracking. Example: "rowID" |
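As a minimal sketch of the environment-variable route, the following Python snippet writes a feature types file and exports the variables listed above using only the standard library. The helper name configure_large_scale_monitoring is hypothetical and not part of the MLOps library, the values are the examples from the table, and the sketch assumes the variables are set before the MLOps library is initialized.

```python
import json
import os
import tempfile

# The feature type entry mirrors the example given for MLOPS_FEATURE_TYPES_JSON.
FEATURE_TYPES = [
    {"name": "feature_name_f1", "feature_type": "date", "format": "%m-%d-%y"},
]


def configure_large_scale_monitoring(directory, feature_types=FEATURE_TYPES):
    """Write the feature types file and export the documented variables.

    Hypothetical helper: the name and the selection of optional settings
    are illustrative, not part of the MLOps library.
    """
    path = os.path.join(directory, "feature_types.json")
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(feature_types, handle)

    settings = {
        # One of the two feature type settings is required.
        "MLOPS_FEATURE_TYPES_FILENAME": path,
        # Optional configuration, using the example values from the table.
        "MLOPS_STATS_AGGREGATION_MAX_RECORDS": "10000",
        "MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_NAME": "ts",
        "MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_FORMAT": "%Y-%m-%d %H:%M:%S.%f",
        "MLOPS_STATS_AGGREGATION_SEGMENT_ATTRIBUTES": "country",
        "MLOPS_STATS_AGGREGATION_AUTO_SAMPLING_PERCENTAGE": "20",
        "MLOPS_ASSOCIATION_ID_COLUMN_NAME": "rowID",
    }
    os.environ.update(settings)
    return settings


# A temporary directory stands in for the real location of the file.
settings = configure_large_scale_monitoring(tempfile.mkdtemp())
```

Environment variable values are always strings, so numeric settings such as the maximum record count are written as "10000" rather than 10000.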

The following code snippets show how you can configure large-scale monitoring settings programmatically:

mlops = MLOps() \

.set_stats_aggregation_feature_types_filename("/tmp/feature_types.json") \

.set_aggregation_max_records(10000) \

.set_prediction_timestamp_column("ts", "yyyy-MM-dd HH:mm:ss") \

.set_segment_attributes("country") \

.set_auto_sampling_percentage(20) \

.set_association_id_column_name("rowID") \

.init()

mlops = MLOps() \

.set_stats_aggregation_feature_types_json([{"name": "feature_name_f1","feature_type": "date", "format": "%m-%d-%y",}]) \

.set_aggregation_max_records(10000) \

.set_prediction_timestamp_column("ts", "yyyy-MM-dd HH:mm:ss") \

.set_segment_attributes("country") \

.set_auto_sampling_percentage(20) \

.set_association_id_column_name("rowID") \

.init()

Manual sampling

To support challenger models and accuracy monitoring, you must send raw features, predictions, and actuals; however, you don't need to use the automatic sampling feature. You can manually report a small sample of raw data and then send the remaining data in aggregate format.
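One way to prepare data for manual sampling is to split each batch into a small raw sample and a remainder. The sketch below is an assumption about how you might do this with the standard library; split_for_manual_sampling is a hypothetical helper, and the actual reporting calls are left out.

```python
import random


def split_for_manual_sampling(rows, sample_fraction, seed=None):
    """Split rows into (raw_sample, remainder) without overlap.

    Hypothetical helper: raw_sample would be reported as raw data and
    remainder would be reported in aggregate format.
    """
    if not 0 <= sample_fraction <= 1:
        raise ValueError("sample_fraction must be between 0 and 1")
    rng = random.Random(seed)
    sample_size = round(len(rows) * sample_fraction)
    chosen = set(rng.sample(range(len(rows)), sample_size))
    raw_sample = [row for i, row in enumerate(rows) if i in chosen]
    remainder = [row for i, row in enumerate(rows) if i not in chosen]
    return raw_sample, remainder


# 100 placeholder rows, 20% of which are kept as raw data.
rows = list(range(100))
raw_sample, remainder = split_for_manual_sampling(rows, 0.2, seed=7)
```

Each row lands in exactly one of the two lists, so no record is reported twice.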

Prediction timestamp

If you don't provide the MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_NAME and MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_FORMAT environment variables, the timestamp is generated based on the current local time.
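Before setting the timestamp format, it can help to confirm that the values in your timestamp column actually match it. The sketch below assumes the format uses Python strftime-style directives, as the "%Y-%m-%d %H:%M:%S.%f" example suggests; matches_format is a hypothetical helper.

```python
from datetime import datetime

# Example format from the MLOPS_STATS_AGGREGATION_PREDICTION_TS_COLUMN_FORMAT row.
TS_FORMAT = "%Y-%m-%d %H:%M:%S.%f"


def matches_format(value, fmt=TS_FORMAT):
    """Return True if value parses with fmt, False otherwise."""
    try:
        datetime.strptime(value, fmt)
    except ValueError:
        return False
    return True


with_fraction = matches_format("2024-01-31 13:45:10.123456")
without_fraction = matches_format("2024-01-31 13:45:10")
```

The second value fails the check because the format requires fractional seconds.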

The large-scale monitoring functionality is available for Python, the Java Software Development Kit (SDK), and the MLOps Spark Utils Library:

In Python, replace calls to report_predictions_data() with calls to:

report_aggregated_predictions_data(

self,

features_df,

predictions,

class_names,

deployment_id,

model_id

)

In Java, replace calls to reportPredictionsData() with calls to:

reportAggregatePredictionsData(

Map<String, List<Object>> featureData,

List<?> predictions,

List<String> classNames

)

In the MLOps Spark Utils Library, replace calls to reportPredictions() with calls to predictionStatisticsParameters.report().

The predictionStatisticsParameters.report() function has the following builder constructor:

PredictionStatisticsParameters.Builder()

.setChannelConfig(channelConfig)

.setFeatureTypes(featureTypes)

.setDataFrame(df)

.build();

predictionStatisticsParameters.report();

Tip

You can find an example of this use case in the agent .tar file in examples/java/PredictionStatsSparkUtilsExample.

Map supported feature types¶

Currently, large-scale monitoring supports numeric and categorical features. When configuring this monitoring method, you must map each feature name to the corresponding feature type (either numeric or categorical). When mapping feature types to feature names, there is a method for Scoring Code models and a method for all other models.

Often, a model can output the feature name and the feature type using an existing access method; however, if access is not available, you may have to manually categorize each feature you want to aggregate as Numeric or Categorical.

Map a feature type (Numeric or Categorical) to each feature name using the setFeatureTypes method on predictionStatisticsParameters.

Map a feature type (Numeric or Categorical) to each feature name after using the getFeatures query on the Predictor object to obtain the features.

You can find an example of this use case in the agent .tar file in examples/java/PredictionStatsSparkUtilsExample/src/main/scala/com/datarobot/dr_mlops_spark/Main.scala.

Perform advanced agent memory tuning for large workloads¶

The monitoring agent's default configuration is tuned to perform well for an average workload; however, as you increase the number of records the agent groups together for forwarding to DataRobot MLOps, the agent's total memory usage increases steadily to support the increased workload. To ensure the agent can support the workload for your use case, you can estimate the agent's total memory use and then set the agent's memory allocation or configure the maximum record group size.

Estimate agent memory use¶

When estimating the monitoring agent's approximate memory usage (in bytes), assume that each feature reported requires an average of 10 bytes of memory. Then, you can estimate the memory use of each message containing raw prediction data from the number of features (represented by num_features) and the number of samples (represented by num_samples) reported. Each message uses approximately 10 bytes × num_features × num_samples of memory.

Note

The estimate of 10 bytes of memory per reported feature is most applicable to datasets containing a balanced mix of features. Text features tend to be larger, so datasets with an above-average number of text features tend to use more memory per feature.

When grouping many records at one time, consider that the agent groups messages together until reaching the limit set by the agentMaxAggregatedRecords setting. In addition, at that time, the agent will keep messages in memory up to the limit set by the httpConcurrentRequest setting.

Combining the calculations above, you can estimate the agent's memory usage (and the necessary memory allocation) with the following formula:

memory_allocation = 10 bytes × num_features × num_samples × max_group_size × max_concurrency

Where the variables are defined as:

- num_features: The number of features (columns) in the dataset.

- num_samples: The number of rows reported in a single call to the MLOps reporting function.

- max_group_size: The number of records aggregated into each HTTP request, set by agentMaxAggregatedRecords in the agent config file.

- max_concurrency: The number of concurrent HTTP requests, set by httpConcurrentRequest in the agent config file.

Once you use the dataset and agent configuration information above to calculate the required agent memory allocation, this information can help you fine-tune the agent configuration to optimize the balance between performance and memory use.
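The formula above can be sketched as a small Python function. The function name and the input values are illustrative assumptions: 100 features, 1,000 rows per reporting call, agentMaxAggregatedRecords set to 10, and httpConcurrentRequest set to 5.

```python
BYTES_PER_FEATURE = 10  # average assumed by the estimate above


def estimate_agent_memory_bytes(num_features, num_samples, max_group_size,
                                max_concurrency,
                                bytes_per_feature=BYTES_PER_FEATURE):
    """Approximate agent memory use in bytes, following the formula above."""
    return (bytes_per_feature * num_features * num_samples
            * max_group_size * max_concurrency)


# Illustrative inputs only; substitute the values for your workload.
estimate = estimate_agent_memory_bytes(
    num_features=100,
    num_samples=1000,
    max_group_size=10,   # agentMaxAggregatedRecords
    max_concurrency=5,   # httpConcurrentRequest
)
estimate_mib = estimate / (1024 * 1024)
print(f"{estimate} bytes (about {estimate_mib:.1f} MiB)")
```

With these inputs the estimate is 50,000,000 bytes. For datasets with many text features, pass a larger bytes_per_feature to reflect the higher per-feature cost.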

Set agent memory allocation¶

Once you know the agent's memory requirement for your use case, you can increase the agent’s Java Virtual Machine (JVM) memory allocation using the MLOPS_AGENT_JVM_OPT environment variable:

MLOPS_AGENT_JVM_OPT=-Xmx2G

Important

When running the agent in a container or VM, you should configure the system with at least 25% more memory than the -Xmx setting.
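As a rough sketch of the 25% guideline, the following Python snippet reads the -Xmx value from a JVM option string and computes the minimum memory to give the container or VM. Both helper names are hypothetical, and the parser only handles the plain k, m, and g suffixes.

```python
import math
import re

_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def xmx_bytes(jvm_opt):
    """Extract the -Xmx heap size from a JVM option string, in bytes."""
    match = re.search(r"-Xmx(\d+)([kKmMgG]?)", jvm_opt)
    if match is None:
        raise ValueError("no -Xmx setting found in: " + jvm_opt)
    number, unit = match.groups()
    return int(number) * _UNITS.get(unit.lower(), 1)


def min_system_memory_bytes(jvm_opt, headroom=0.25):
    """Heap size plus the recommended headroom, rounded up to whole bytes."""
    return math.ceil(xmx_bytes(jvm_opt) * (1 + headroom))


heap = xmx_bytes("-Xmx2G")
system_minimum = min_system_memory_bytes("-Xmx2G")
```

For -Xmx2G, the heap is 2,147,483,648 bytes, so the container or VM needs at least 2,684,354,560 bytes (2.5 GiB).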

Set the maximum group size¶

Alternatively, to reduce the agent's memory requirement for your use case, you can decrease the agent's maximum group size limit set by agentMaxAggregatedRecords in the agent config file:

# Maximum number of records to group together before sending to DataRobot MLOps

agentMaxAggregatedRecords: 10

Lowering this setting to 1 disables record grouping by the agent.

Report accuracy for challengers¶

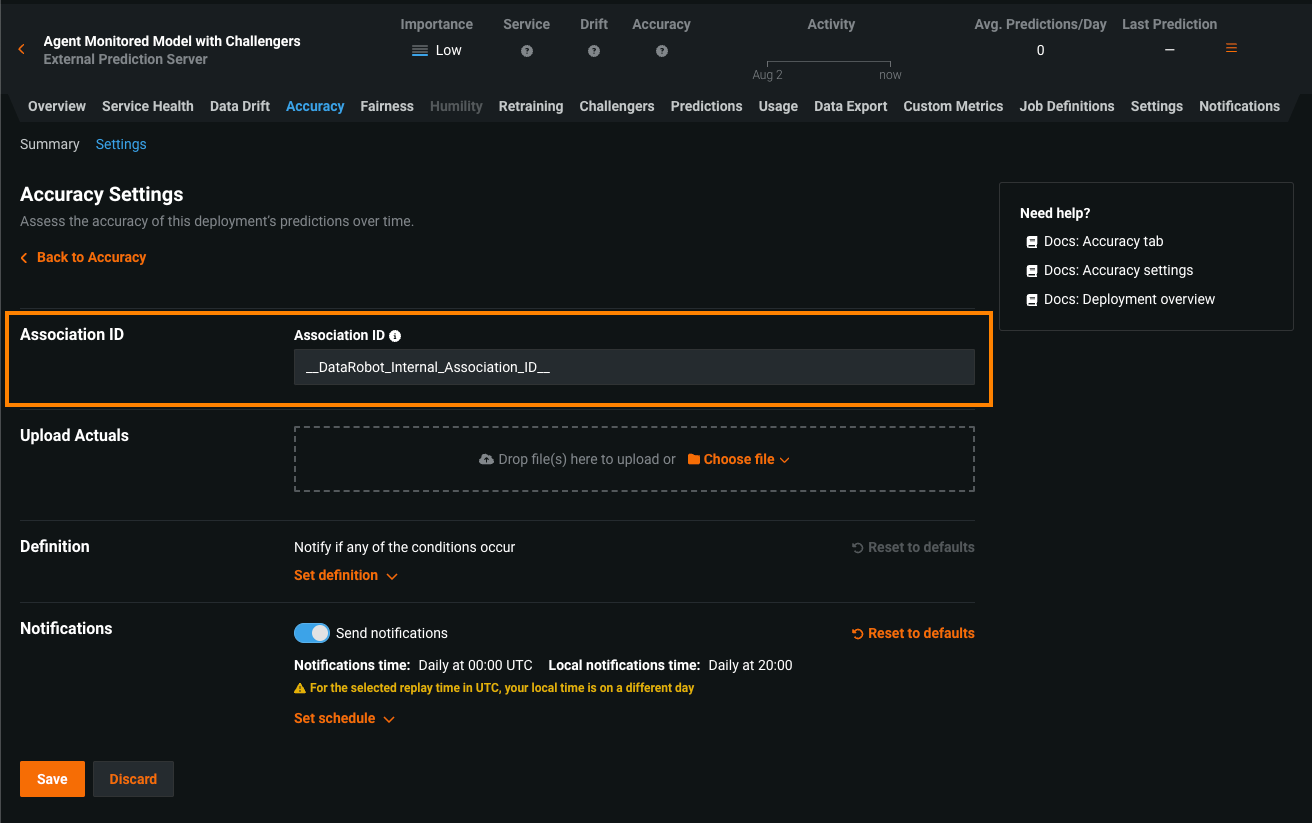

Because the agent may send predictions with an association ID, even if the deployment in DataRobot doesn’t have an association ID set, the agent's API endpoints recognize the __DataRobot_Internal_Association_ID__ column as the association ID for the deployment. This enables accuracy tracking for models reporting prediction data through the agent; however, the __DataRobot_Internal_Association_ID__ column isn't available to DataRobot when predictions are replayed for challenger models, meaning that DataRobot MLOps can't record accuracy for those models due to a missing association ID. To track accuracy for challenger models through the agent, set the association ID on the deployment to __DataRobot_Internal_Association_ID__ during deployment creation or in the accuracy settings. Once you configure the association ID with this value, all future challenger replays will record accuracy for challenger models.

Report metrics¶

If your prediction environment cannot be network-connected to DataRobot, you can instead use monitoring agent reporting in a disconnected manner.

- In the prediction environment, configure the MLOps library to use the filesystem spooler type. The MLOps library will report metrics into its configured directory, e.g., /disconnected/predictions_dir.

- Run the monitoring agent on a machine that is network-connected to DataRobot.

- Configure the agent to use the filesystem spooler type and receive its input from a local directory, e.g., /connected/predictions_dir.

- Migrate the contents of the directory /disconnected/predictions_dir to the connected environment /connected/predictions_dir.
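The migration step can be as simple as moving files between the two directories. The sketch below assumes both directories are reachable from one machine (for example, through removable or mounted storage) and that the MLOps library is not writing to the source directory during the move. The helper migrate_spool_files and the file name spool_000.bin are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def migrate_spool_files(source_dir, target_dir):
    """Move every regular file from source_dir to target_dir.

    Returns the names of the moved files in sorted order.
    """
    source = Path(source_dir)
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    moved = []
    for path in sorted(source.iterdir()):
        if path.is_file():
            shutil.move(str(path), str(target / path.name))
            moved.append(path.name)
    return moved


# Demonstration with temporary stand-ins for the two directories.
with tempfile.TemporaryDirectory() as root:
    disconnected = Path(root) / "disconnected" / "predictions_dir"
    connected = Path(root) / "connected" / "predictions_dir"
    disconnected.mkdir(parents=True)
    (disconnected / "spool_000.bin").write_bytes(b"example")

    moved = migrate_spool_files(disconnected, connected)
    remaining = list(disconnected.iterdir())
    arrived = sorted(p.name for p in connected.iterdir())
```

When the two environments share no storage, the same idea applies with whatever transfer mechanism your environment permits.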

Reports for Scoring Code models¶

You can also use monitoring agent reporting to send monitoring metrics to DataRobot for downloaded Scoring Code models. Reference an example of this use case in the MLOps agent tarball at examples/java/CodeGenExample.

Monitor a Spark environment¶

A common use case for the monitoring agent is monitoring scoring in Spark environments, where scoring happens in Spark and you want to report the predictions and features to DataRobot. Because Spark usually uses a multi-node setup, it is difficult to use the agent's filesystem spooler channel: a shared, consistent file system is uncommon in Spark installations.

To work around this, use a network-based channel like RabbitMQ or AWS SQS. These channels can work with multiple writers and single (or multiple) readers.

The following example outlines how to set up agent monitoring on a Spark system using the MLOps Spark Util module, which provides a way to report scoring results on the Spark framework. Reference the documentation for the MLOpsSparkUtils module in the MLOps Java examples directory at examples/java/SparkUtilsExample/.

The Spark example's source code performs three steps:

- Scores data using a scoring JAR file and delivers the results in a DataFrame.

- Merges the features DataFrame and the prediction results into a single DataFrame.

- Calls the mlops_spark_utils.MLOpsSparkUtils.reportPredictions helper to report the predictions using the merged DataFrame.

You can use mlops_spark_utils.MLOpsSparkUtils.reportPredictions to report predictions generated by any model as long as the function retrieves the data via a DataFrame.

This example uses RabbitMQ as the channel of communication and includes channel setup. Since Spark is a distributed framework, DataRobot requires a network-based channel like RabbitMQ or AWS SQS in order for the Spark workers to be able to send the monitoring data to the same channel regardless of the node the worker is running on.

Spark prerequisites¶

The following steps outline the prerequisites necessary to execute the Spark monitoring use case.

- Run a spooler (RabbitMQ in this example) in a container:

    - This Docker command will also run the management console for RabbitMQ.

    - You can access the console via your browser at http://localhost:15672 (username=guest, password=guest).

    - In the console, view the message queue in action when you run the ./run_example.sh script below.

    docker run -d -p 15672:15672 -p 5672:5672 --name rabbitmq-spark-example rabbitmq:3-management

- Configure and start the monitoring agent.

    - Follow the quickstart guide provided in the agent tarball.

    - Set up the agent to communicate with RabbitMQ.

    - Edit the agent channel config to match the following:

        - type: "RABBITMQ_SPOOL"
          details: {name: "rabbit", queueUrl: "amqp://localhost:5672", queueName: "spark_example"}

- If you are using mvn, install the datarobot-mlops JAR into your local mvn repository before testing the examples by running:

    ./examples/java/install_jar_into_maven.sh

    This command executes a shell script to install either the mlops-utils-for-spark_2-<version>.jar or mlops-utils-for-spark_3-<version>.jar file, depending on the Spark version you're using (where <version> represents the agent version).

- Create the example JAR files, set the JAVA_HOME environment variable, and then run make to compile.

    - For Spark2/Java8: export JAVA_HOME=$(/usr/libexec/java_home -v 1.8)

    - For Spark3/Java11: export JAVA_HOME=$(/usr/libexec/java_home -v 11)

- Install and run Spark locally.

    - Download the latest version of Spark 2 (2.x.x) or Spark 3 (3.x.x) built for Hadoop 3.3+. To download the latest Spark 3 version, see the Apache Spark downloads page.

        Note

        Replace the <version> placeholders in the command and directory below with the versions of Spark and Hadoop you're using.

    - Unarchive the tarball:

        tar xvf ~/Downloads/spark-<version>-bin-hadoop<version>.tgz

    - In the spark-<version>-bin-hadoop<version> directory, start the Spark cluster:

        sbin/start-master.sh -i localhost
        sbin/start-slave.sh -i localhost -c 8 -m 2G spark://localhost:7077

    - Ensure your installation is successful:

        bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://localhost:7077 --num-executors 1 --driver-memory 512m --executor-memory 512m --executor-cores 1 examples/jars/spark-examples_*.jar 10

    - Add the Spark bin directory to your $PATH:

        env SPARK_BIN=/opt/ml/spark-3.2.1-bin-hadoop3.*/bin ./run_example.sh

        Note

        The monitoring agent also supports Spark2.

Spark use case¶

After meeting the prerequisites outlined above, run the Spark example.

- Create the model package and initialize the deployment:

    ./create_deployment.sh

    Alternatively, use the DataRobot UI to create an external model package and deploy it.

- Set the environment variables for the deployment and the model returned from creating the deployment by copying and pasting them into your shell.

    export MLOPS_DEPLOYMENT_ID=<deployment_id>
    export MLOPS_MODEL_ID=<model_id>

- Generate predictions and report statistics to DataRobot:

    ./run_example.sh

- If you want to change the spooler type (the communication channel between the Spark job and the monitoring agent):

    - Edit the Scala code under src/main/scala/com/datarobot/dr_mlops_spark/Main.scala.

    - Modify the following line to contain the required channel configuration:

        val channelConfig = "output_type=rabbitmq;rabbitmq_url=amqp://localhost;rabbitmq_queue_name=spark_example"

    - Recompile the code by running make.
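The channel configuration in the example is a single string of key=value pairs separated by semicolons. The following Python sketch shows one way to build and inspect such a string before pasting it into the Scala source. It covers only the three keys shown in the RabbitMQ example, the keys required by other channel types are not covered here, and both helper names are hypothetical.

```python
def build_channel_config(settings):
    """Join settings into a 'key=value;key=value' string, keeping order."""
    return ";".join(f"{key}={value}" for key, value in settings.items())


def parse_channel_config(config):
    """Split a 'key=value;key=value' string back into a dictionary."""
    return dict(item.split("=", 1) for item in config.split(";") if item)


rabbitmq_settings = {
    "output_type": "rabbitmq",
    "rabbitmq_url": "amqp://localhost",
    "rabbitmq_queue_name": "spark_example",
}
channel_config = build_channel_config(rabbitmq_settings)
round_trip = parse_channel_config(channel_config)
```

Splitting on the first "=" only keeps values that themselves contain "=" intact.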

Monitor using the MLOps CLI¶

MLOps supports a command line interface (CLI) for interacting with the MLOps application. You can use the CLI for most MLOps actions, including creating deployments and model packages, uploading datasets, reporting metrics on predictions and actuals, and more.

Use the MLOps CLI help page for a list of available operations and syntax examples:

mlops-cli [-h]

Monitoring agent vs. MLOps CLI

Like the monitoring agent, the MLOps CLI is also able to post prediction data to the MLOps service, but its usage is slightly different. MLOps CLI is a Python app that can send an HTTP request to the MLOps service with the current contents of the spool file. It does not run the monitoring agent or call any Java process internally, and it does not continuously poll or wait for new spool data; once the existing spool data is consumed, it exits. The monitoring agent, on the other hand, is a Java process that continuously polls for new data in the spool file as long as it is running, and posts that data in an optimized form to the MLOps service.