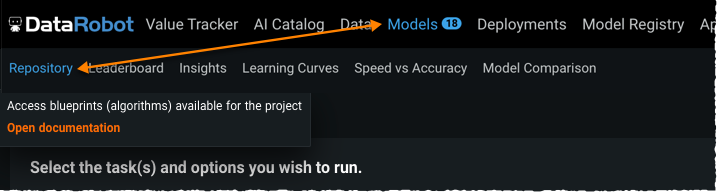

# Model Repository

The Repository is a library of modeling blueprints available for a selected project. These blueprints describe the algorithms used to build a model (the preprocessing steps, the selected estimators, and, for some models, postprocessing steps), not the model itself. Blueprints listed in the Repository have not necessarily been built yet, but any of them can be built in any of the modeling modes. When you create a project in Manual mode, you select the specific blueprints to run from the Repository.

When you choose any version of Autopilot as the modeling mode, DataRobot runs a subset of blueprints that provides a good balance of accuracy and runtime. Blueprints that may improve accuracy but can also increase runtime (many deep learning models, for example) are available from the Repository but are not run as part of Autopilot.

A good practice is to run Autopilot, identify the blueprint (algorithm) that performed best on your data, and then run all variants of that algorithm from the Repository. Comprehensive mode, by contrast, runs all models from the Repository at the maximum sample size, which can be very time-consuming.

From the Repository you can:

- Search to limit the list of models displayed by type.

- Use Preview to display a model's blueprint or code.

- Start a model run using new parameters.

- Start a batch run using new parameters applied to all selected blueprints.

## Search the Repository

To find a particular model type more easily, or to filter the list by model type, use the Search function:

Click in the search box and begin typing a model/blueprint family, blueprint name, any node, or a badge name. As you type, the list automatically narrows to the blueprints matching your search criteria. To return to the complete model listing, remove all characters from the search box.
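The following minimal Python sketch illustrates the narrowing behavior described above. It assumes case-insensitive substring matching against each blueprint's family, name, nodes, and badges. The `Blueprint` record, the `search_repository` function, and the sample entries are hypothetical illustrations, not DataRobot's implementation or API.

```python
from dataclasses import dataclass, field


@dataclass
class Blueprint:
    """Hypothetical record for one Repository entry (not a DataRobot client type)."""
    name: str
    family: str
    nodes: list[str] = field(default_factory=list)
    badges: list[str] = field(default_factory=list)


def search_repository(blueprints, query):
    """Return blueprints whose family, name, any node, or any badge contains the query.

    Matching is case-insensitive substring matching (an assumption).
    An empty query returns the full list, like clearing the search box.
    """
    q = query.strip().lower()
    if not q:
        return list(blueprints)
    matches = []
    for bp in blueprints:
        searchable = [bp.family, bp.name, *bp.nodes, *bp.badges]
        if any(q in text.lower() for text in searchable):
            matches.append(bp)
    return matches


# Hypothetical Repository contents, for illustration only.
repo = [
    Blueprint("Gradient Boosted Trees Classifier", "Tree-based",
              ["One-Hot Encoding", "Missing Values Imputed"], ["DataRobot model"]),
    Blueprint("Elastic-Net Classifier", "Linear", ["Standardize", "One-Hot Encoding"]),
    Blueprint("Neural Network Classifier", "Deep learning", ["Ordinal encoding"]),
]

print([bp.name for bp in search_repository(repo, "one-hot")])
print(len(search_repository(repo, "")))  # 3: an empty query restores the full list
```

Matching on every node and badge, rather than only on the name, is what lets a query such as a preprocessing step name surface every blueprint that contains that step.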

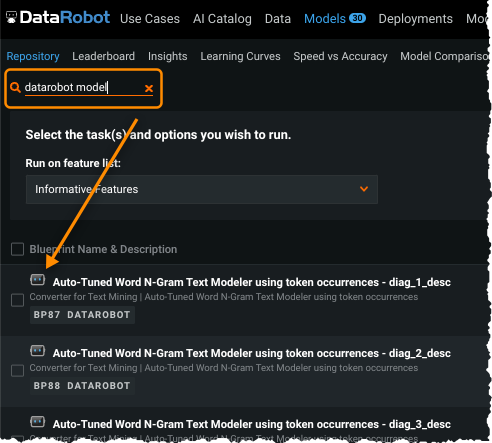

## DataRobot models

DataRobot models are built using massively parallel processing to train and evaluate thousands of candidate models, most of them based on open-source algorithms (because open source offers some of the best algorithms available). DataRobot searches through millions of possible combinations of algorithms, preprocessing steps, features, transformations, and tuning parameters to find the best-performing models for your dataset and prediction target. It is this preprocessing and tuning that produces the strongest models. DataRobot models are marked with the DataRobot icon.

List DataRobot models

You can view a list of DataRobot models from the Repository (or the Leaderboard) by using the search term `datarobot model`:

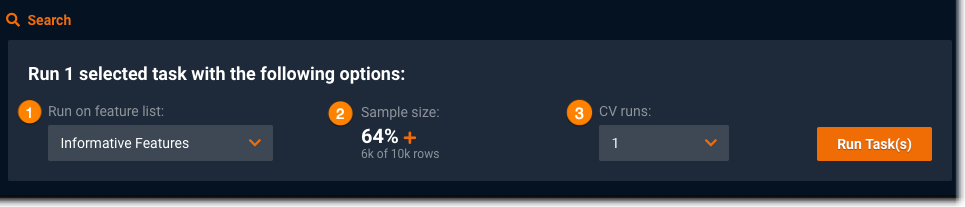

## Create a new model

To create a new model from a blueprint in the Repository:

-

Select the blueprint to run by marking the check box next to the name.

-

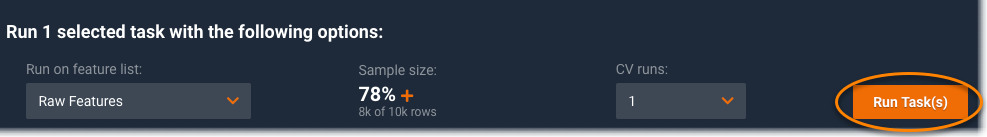

Once selected, modify one or more of the fields in the now-enabled dialog box:

Element Description 1 Run on feature list From the dropdown select a new feature list. The options include the default lists and any lists you created. 2 Sample size Modify the sample size, making it either larger or smaller than the sample size used by Autopilot. Remember that when increasing the sample size, you must set values that leave data available for validation. 3 CV runs Set the number of folds used in cross-validation. -

After verifying the parameter settings, click Run Task(s) to launch the new model run.

## Launch a batch run

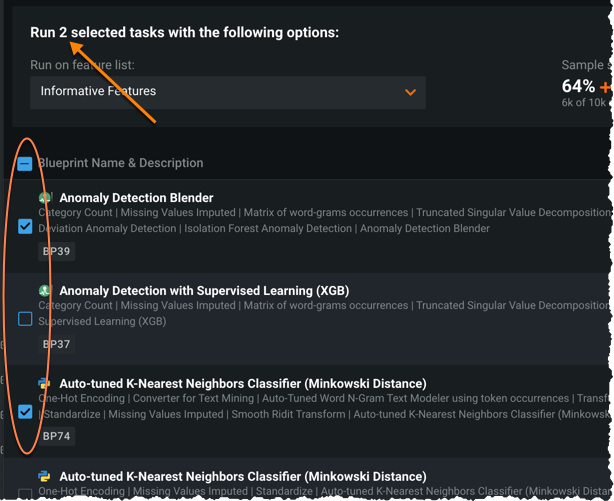

The Repository batch run capability allows you to set parameters and apply them to a group of selected models. To launch a batch run, select the blueprints to include, either by clicking the box next to each model name or by clicking the box next to Blueprint Name & Description to select them all.

To deselect all selected blueprints, click the minus sign (-) next to Blueprint Name & Description.

If you have already built any of the models in the batch using the same sample size and feature list, you must change at least one of the run parameters described in Create a new model. This is not required if every model in the batch is new. Click Run Task(s) to start the build.
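As an illustration of this rule, the minimal sketch below flags batch entries that repeat an already-built model. It assumes a model run is identified by its blueprint, sample size, and feature list, as the rule above states. `RunSpec`, `find_duplicates`, and the blueprint IDs are hypothetical and not part of any DataRobot API.

```python
from typing import NamedTuple


class RunSpec(NamedTuple):
    """Hypothetical identity of one model run (not a DataRobot client type)."""
    blueprint_id: str
    sample_pct: float
    featurelist: str


def find_duplicates(batch, built):
    """Return batch entries whose blueprint, sample size, and feature list
    all match a model that has already been built."""
    built_keys = set(built)
    return [spec for spec in batch if spec in built_keys]


# Hypothetical project state: one model already built at 64% on "Informative Features".
built = [RunSpec("bp-1", 64.0, "Informative Features")]
batch = [
    RunSpec("bp-1", 64.0, "Informative Features"),  # repeats an existing model
    RunSpec("bp-2", 64.0, "Informative Features"),  # new blueprint, no conflict
]
print(find_duplicates(batch, built))
# [RunSpec(blueprint_id='bp-1', sample_pct=64.0, featurelist='Informative Features')]

# Changing one parameter (here, the sample size) resolves the conflict.
batch[0] = batch[0]._replace(sample_pct=32.0)
print(find_duplicates(batch, built))  # []
```

Using an immutable `NamedTuple` as the key makes each run hashable, so the membership check against already-built models is a simple set lookup.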

## Notes on sample size

The sample size available when adding models from the Repository depends on the size of the dataset. By default, the sample size reflects the size used in the last Autopilot stage (you can change this value to any valid size). DataRobot caps the sample size at 64% of the data or 500MB, whichever is smaller.

To determine stage sizes, DataRobot first calculates the final stage (64% or 500MB, whichever is smaller) and then builds models according to the selected mode. For full Autopilot, the stages are 1/4, 1/2, and all of that final amount. For datasets smaller than 500MB, the full Autopilot stages are therefore 64%/4, 64%/2, and 64% (that is, 16%, 32%, and 64%). (See the calculations below for results under other partitioning settings.)

If the dataset is larger than 500MB, DataRobot first reduces it to the 500MB threshold using random sampling. It then calculates the percentage of the data that 500MB represents and creates stages that are 1/4, 1/2, and all of that percentage. For example, if 500MB is 40% of the data, DataRobot runs 10%, 20%, and 40% stages.
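The stage arithmetic described above can be sketched in Python as follows. This is a minimal illustration that only computes the stage percentages; it does not perform the random downsampling step, and the function name `autopilot_stages` is hypothetical.

```python
CAP_PCT = 64.0   # final-stage cap as a percentage of the data (from the text)
CAP_MB = 500.0   # final-stage cap in megabytes (from the text)


def autopilot_stages(dataset_mb):
    """Return full-Autopilot stage sizes as percentages of the dataset.

    The final stage is 64% of the data or 500MB, whichever is smaller;
    the earlier stages are 1/4 and 1/2 of that final stage.
    """
    final_pct = min(CAP_PCT, CAP_MB / dataset_mb * 100)
    return [final_pct / 4, final_pct / 2, final_pct]


print(autopilot_stages(200))   # [16.0, 32.0, 64.0]
print(autopilot_stages(1250))  # 500MB is 40% of 1250MB -> [10.0, 20.0, 40.0]
```

Note that the 64% cap still applies to some datasets larger than 500MB: for a 700MB dataset, 500MB is about 71% of the data, so the smaller 64% value is used.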

In addition to dataset size, the range available for selection also depends on the partitioning parameters. For example, if you have 20% holdout with five-fold cross-validation (CV), the calculation is as follows:

- Take 100% of the data minus the 20% holdout.

- From the remaining 80%, reserve 1/5 (16% of the full data) as the validation fold. By default, the maximum sample size is therefore calculated as:

100% - 20% - 80%/5 = 64%.

If you configured custom training/validation/holdout (TVH) partitions, the maximum sample size is calculated as:

100% - custom % for holdout - custom % for validation

Or, if you declined to include holdout (0%) with five-fold CV, the result is:

100% - 100%/5 for validation = 80%
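The three calculations above can be reproduced with a short Python sketch. The function names are hypothetical, and the 16% validation value in the TVH example is an assumed custom setting chosen for illustration.

```python
def max_sample_pct_cv(holdout_pct, folds):
    """Largest training sample (% of all data) with k-fold cross-validation.

    Holdout is removed first; one fold of the remainder is reserved for validation.
    """
    remaining = 100.0 - holdout_pct
    return remaining - remaining / folds


def max_sample_pct_tvh(holdout_pct, validation_pct):
    """Largest training sample (% of all data) with custom TVH partitions."""
    return 100.0 - holdout_pct - validation_pct


print(max_sample_pct_cv(20, 5))    # 64.0: 100% - 20% - 80%/5
print(max_sample_pct_cv(0, 5))     # 80.0: no holdout, 100% - 100%/5
print(max_sample_pct_tvh(20, 16))  # 64.0: assumed 20% holdout, 16% validation
```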

## Menu actions

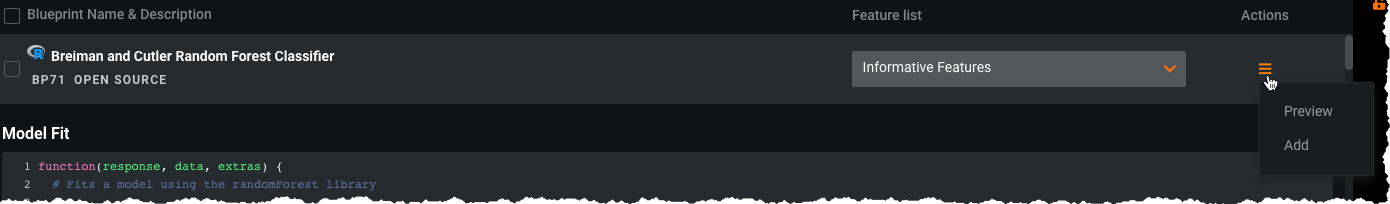

Use the menu to preview a model: either its blueprint or, for open-source models, its code.

Use the Add function to select the model and add it to the task list; it runs when you click Run Task(s).