Predictions on test and training data

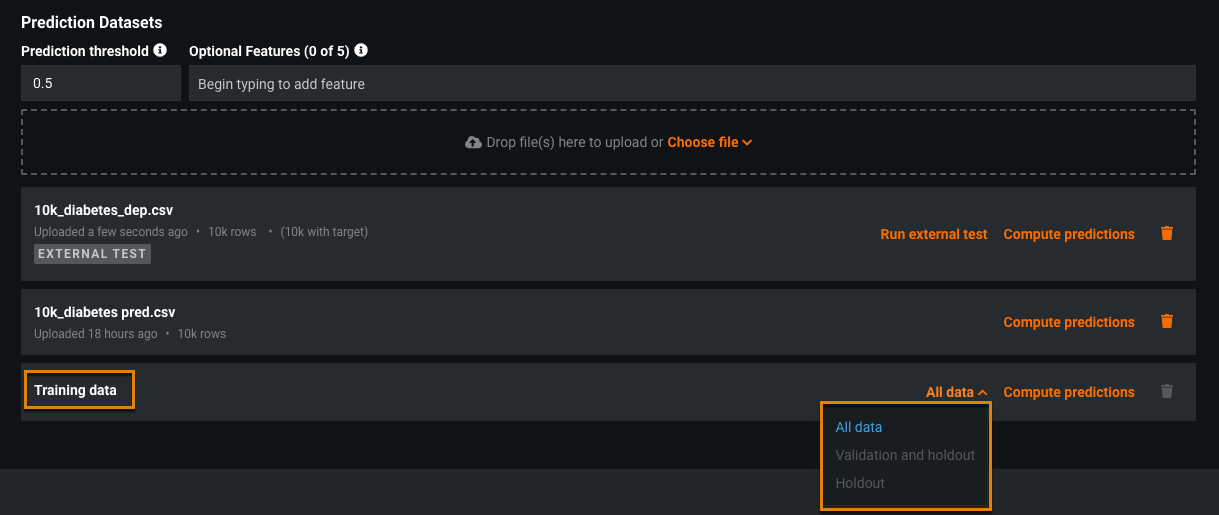

Use the Make Predictions tab to make predictions and assess model performance prior to deployment. You can make predictions on an external test dataset (i.e., external holdout) or you can make predictions on training data (i.e., validation and/or holdout).

Make predictions on an external test dataset

To better evaluate model performance, you can upload any number of additional test datasets after project data has been partitioned and models have been trained. An external test dataset is one that:

- Contains actuals (values for the target).

- Is not part of the original dataset (you didn't train on any part of it).

Because an external test dataset contains actuals, you can compare the model's predictions against them to measure accuracy on data the model has never seen.

By uploading an external dataset and using the original model's dataset partitions, you can compare metric scores and visualizations to ensure consistent performance prior to deployment. Select the external test set as if it were a partition in the original project data.

Note

Support for external test sets is available for all project types except supervised time series. Unsupervised time series supports external test sets for anomaly detection but not clustering.
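For supervised learning, an external test set must contain the target column and every column present in the training dataset (extra columns are allowed), so it can be worth checking a file's header before uploading it. The following is a minimal sketch of such a check and is not part of DataRobot; the function name `missing_columns` and the sample column names are hypothetical.

```python
def missing_columns(training_columns, target, external_columns):
    """Return the required columns that the external test set lacks.

    Required = every training column plus the target (actuals) column.
    Extra columns in the external set are allowed and ignored.
    """
    required = list(training_columns)
    if target not in required:
        required.append(target)
    present = set(external_columns)
    return [col for col in required if col not in present]


# Hypothetical column names, for illustration only.
training = ["age", "income", "is_default"]
external = ["age", "income", "is_default", "branch_id"]  # extra column is fine

print(missing_columns(training, "is_default", external))               # []
print(missing_columns(training, "is_default", ["age", "branch_id"]))   # ['income', 'is_default']
```

An empty list means the header satisfies the column requirement; any names returned would need to be added before the file can serve as an external test set.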

To make predictions on an external test set:

-

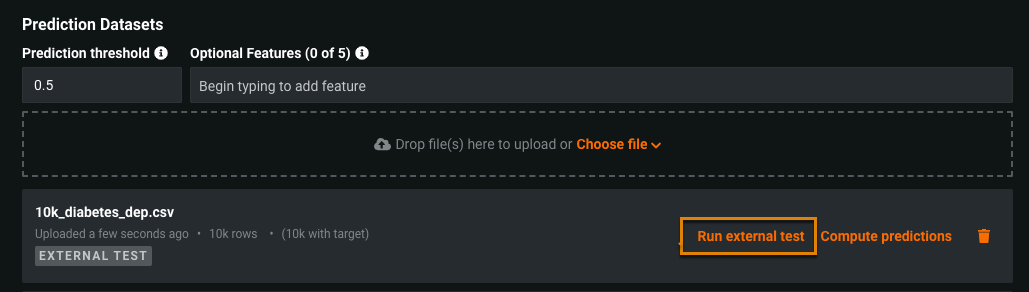

Upload new test data in the same way you would upload a prediction dataset. For supervised learning, the external set must contain the target column and all columns present in the training dataset (although additional columns can be added). The workflow is slightly different for anomaly detection projects.

- Once uploaded, you'll see the label EXTERNAL TEST below the dataset name. Click Run external test to calculate predicted values and compute statistics that compare the actual target values to the predicted values. The external test is queued and job status appears in the Worker Queue on the right sidebar.

-

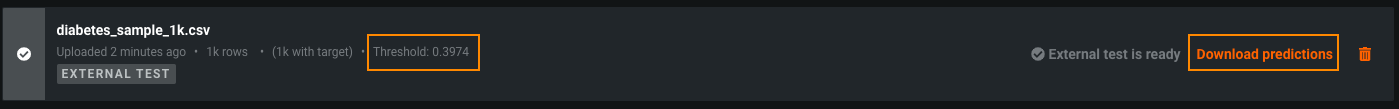

When calculations complete, click Download predictions to save prediction results to a CSV file.

Note

In a binary classification project, when you click Run external test, the current value of the Prediction Threshold is used for computation of the predicted labels. In the downloaded predictions, the labels correspond to that threshold, even if you updated the threshold between computing and downloading. DataRobot displays the threshold that was used in the calculation in the dataset listing.

-

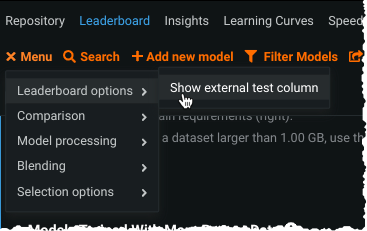

To view external test scores, from the Leaderboard menu select Show external test column.

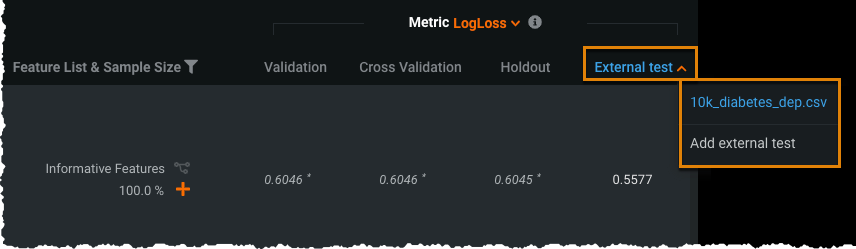

The Leaderboard now includes an External test column.

- From the External test column, choose the test data to display results for or click Add external test to return to the Make Predictions tab to add additional test data.

You can now sort models by external test scores or calculate scores for more models.
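The note on the Prediction Threshold in the steps above can be illustrated with a short sketch: labels are derived from the predicted probability and the threshold in effect when the external test ran, so changing the threshold afterward does not change the labels already computed. The function name, the sample probabilities, and the use of `>=` as the comparison are assumptions made for illustration, not DataRobot's documented behavior.

```python
def labels_at_threshold(probabilities, threshold):
    """Assign the positive label (1) when the probability meets the threshold."""
    return [1 if p >= threshold else 0 for p in probabilities]


probabilities = [0.20, 0.45, 0.55, 0.90]  # hypothetical positive-class scores

# Threshold in effect when "Run external test" was clicked.
computed = labels_at_threshold(probabilities, 0.5)

# Labels the same scores would get under a threshold chosen later.
later = labels_at_threshold(probabilities, 0.4)

print(computed)  # [0, 0, 1, 1]  -> what the download contains
print(later)     # [0, 1, 1, 1]  -> not reflected in the download
```

To get labels for a different threshold, the external test would have to be run again after the threshold is changed.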

Supply actual values for anomaly detection projects

In anomaly detection (non-time series) projects, you must set an actuals column that identifies the outcome or future results to compare to predicted results. This provides a measure of accuracy for the event you are predicting on. The prediction dataset must contain the same columns as those in the training set with at least one column for known anomalies. Select the known anomaly column as the Actuals value.

Compare insights with external test sets

Expand the Data Selection dropdown to select an external test set as if it were a partition in the original project data.

This option is available when using the following insights:

- Lift Chart

- ROC Curve

- Profit Curve

- Confusion Matrix

- Accuracy Over Time (OTV only)

- Stability (OTV only)

- Residuals

Note the following:

- Insights are not computed if an external dataset has fewer than 10 rows; however, metric scores are still computed and displayed on the Leaderboard.

- The ROC Curve insight is disabled if the external dataset contains only single-class actuals.
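The two rules above can be expressed as a quick pre-check on the actuals column of an external dataset. This is a minimal sketch of the stated rules only, not DataRobot's implementation; the function name and sample values are hypothetical.

```python
MIN_ROWS_FOR_INSIGHTS = 10  # from the note above: fewer than 10 rows -> no insights


def insight_availability(actuals):
    """Apply the two rules above to a list of actual target values."""
    insights = len(actuals) >= MIN_ROWS_FOR_INSIGHTS
    # The ROC Curve additionally needs more than one class among the actuals.
    roc_curve = insights and len(set(actuals)) > 1
    return {"insights": insights, "roc_curve": roc_curve}


print(insight_availability([0, 1, 0, 1, 1]))  # too few rows
print(insight_availability([1] * 12))         # enough rows, single class only
print(insight_availability([0, 1] * 6))       # both rules satisfied
```

Metric scores are outside the scope of this check, since they are computed regardless of the row count.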

Make predictions on training data

Less commonly, you may want to download predictions for your original training data, which DataRobot automatically imports (see Why use training data for predictions?). From the dropdown, select the partition(s) to use when generating predictions.

For small datasets, predictions are calculated by doing stacked predictions and therefore can use all partitions. Because those calculations are too computationally expensive to run on large datasets (750 MB or larger by default), predictions for large datasets are based on the holdout and/or validation partitions, as long as that data wasn’t used in training.

Training data predictions vs. Leaderboard cross-validation

Prediction values generated on training data from the Leaderboard are not expected to match the predictions used to generate cross-validation scores on the Leaderboard, because predictions on training data use stacked predictions within the training data. A more detailed comparison follows:

- Training data prediction values: Generated using stacked predictions to make predictions on training data without overfitting caused by in-sample predictions. To make out-of-sample predictions on training data, stacked predictions build multiple models on 5 different folds, or sections, of the training data, preventing misleadingly high accuracy scores. This process occurs within the training data.

- Cross-validation prediction values: Generated using K-fold cross-validation (5-fold by default) to make predictions on multiple subsets of data, ensuring your model generalizes well when presented with new data. On the Leaderboard, the cross-validation score represents the mean of the K-fold cross-validation scores calculated for each fold. This process occurs on training and validation data.
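To make the idea of out-of-sample predictions on training data concrete, here is a minimal sketch of out-of-fold prediction with 5 folds. It is not DataRobot's implementation: the "model" is simply the mean of the target over the rows it was fit on, the fold assignment is a plain round-robin, and all names and values are hypothetical.

```python
from statistics import mean


def out_of_fold_predictions(targets, n_folds=5):
    """Predict each row with a model fit only on the other folds."""
    folds = [i % n_folds for i in range(len(targets))]  # round-robin assignment
    predictions = [None] * len(targets)
    for fold in range(n_folds):
        # "Train" on every row outside the current fold.
        train = [t for t, f in zip(targets, folds) if f != fold]
        fitted_value = mean(train)
        # Predict only the rows this model has not seen.
        for i, f in enumerate(folds):
            if f == fold:
                predictions[i] = fitted_value
    return predictions


targets = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]  # hypothetical target values

in_sample = mean(targets)  # a model fit on all rows has seen every target
print(in_sample)
print(out_of_fold_predictions(targets))
```

Each row's prediction comes from a model that never saw that row's target, so the values differ from the in-sample fit and are not inflated by in-sample information.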

| Dropdown option | Description for small datasets | Description for large datasets |
|---|---|---|
| All data | Predictions are calculated by doing stacked predictions on training, validation, and holdout partitions, regardless of whether they were used for training the model or if holdout has been unlocked. | Not available |
| Validation and holdout | Predictions are calculated using the validation and holdout partitions. If validation was used in training, this option is disabled. | Predictions are calculated using the validation and holdout partitions. If validation was used in training or the project was created without a holdout partition, this option is not available. |
| Validation | If the project was created without a holdout partition, this option replaces the Validation and holdout option. | If the project was created without a holdout partition, this option replaces the Validation and holdout option. |
| Holdout | Predictions are calculated using the holdout partition only. If holdout was used in training, this option is not available (only the All data option is valid). | Predictions are calculated using the holdout partition only. If holdout was used in training, predictions are not available for the dataset. |

Note

For OTV projects, holdout predictions are generated using a model retrained on the holdout partition. If you upload the holdout as an external test dataset instead, the predictions are generated using the model from backtest 1. In this case, the predictions from the external test will not match the holdout predictions.

Select Compute predictions to generate predictions for the selected partition on the existing dataset. Select Download predictions to save results as a CSV.

Note

The Partition field of the exported results indicates the source partition name or fold number of the cross-validation partition. The value -2 indicates the row was "discarded" (not used in TVH). This could be because the target was missing, the partition column (Date/Time-, Group, or Partition Feature-partitioned projects) was missing, or smart downsampling was enabled, and those rows were discarded from the majority class as part of downsampling.
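When post-processing a downloaded predictions file, discarded rows can be filtered out by checking the Partition field for -2. The sketch below assumes the file is a CSV with a column named `Partition`; the other column names, the sample rows, and the helper name `is_discarded` are hypothetical and only illustrate the idea.

```python
import csv
import io


def is_discarded(partition_value):
    """True when the Partition field holds -2 (row not used in TVH)."""
    try:
        return float(partition_value) == -2
    except ValueError:
        # Non-numeric values are partition names such as "Holdout".
        return False


# Hypothetical excerpt of a downloaded predictions file.
sample_csv = """\
row_id,Partition,Prediction
0,Holdout,0.12
1,1,0.80
2,-2,0.33
3,4,0.51
"""

rows = list(csv.DictReader(io.StringIO(sample_csv)))
kept = [row for row in rows if not is_discarded(row["Partition"])]

print(len(rows), len(kept))  # 4 3
```

Parsing the value as a number rather than comparing strings keeps the check working if the export writes the value as `-2.0`.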

Why use training data for predictions?

Although less common, there are times when you want to make predictions on your original training dataset. The most common application of this functionality is for large datasets. Because running stacked predictions on large datasets is often too computationally expensive, the Make Predictions tab allows you to download predictions using data from the validation and/or holdout partitions (as long as they weren’t used in training).

Some sample use cases:

Clark the software developer needs to know the full distribution of his predictions, not just the mean. His dataset is large enough that stacked predictions are not available. With weekly modeling using the R API, he downloads holdout and validation predictions onto his local machine and loads them into R to produce the report he needs.

Lois the data scientist wants to verify that she can reproduce model scores exactly as well in DataRobot as when using an in-house metric. She partitions the data, specifying holdout during modeling. After modeling completes, she unlocks holdout, selects the top model, and computes and downloads predictions for just the holdout set. She then compares predictions of that brief exercise to the result of her previous many-month-long project.