Data quality checks

The sections below detail the checks DataRobot runs for potential data quality issues. The data quality check logic summary table condenses this information.

Data quality check logic summary

The following table summarizes the logic behind each data quality check:

| Check / Run | Detection logic | Handling | Reported in... |
|---|---|---|---|
| Outliers / EDA2 | Ueda's algorithm | Linear: flag added to feature in blueprint. Tree: handled automatically | Data > Histogram |
| Multicategorical format error / EDA1 | Value fails a format requirement: it is not valid JSON, or it does not represent a list of non-empty strings | Feature is not identified as multicategorical | Data Quality Assessment log |
| Inliers / EDA1 | Value is not an outlier; frequency is an outlier | Flag added to feature in blueprint | Data > Frequent Values |
| Excess zeros / EDA1 | Frequency is an outlier; value is 0 | Flag added to feature in blueprint | Data > Frequent Values |
| Disguised missing values / EDA1 | Meets the following three conditions: value is an outlier; frequency is an outlier; value matches one of the disguised missing value heuristics | Median imputed; flag added to feature in blueprint | Data > Frequent Values |
| Target leakage / EDA2 | Importance score for each feature, calculated using Gini Norm metric. Threshold levels for reporting are moderate-risk (0.85) or high-risk (0.975). | High-risk leaky features excluded from Autopilot (using "Leakage Removed" feature list) | Data page; optionally, filter by issue type |
| Missing images / EDA1 | Empty cell, missing file, broken link | Links are fixed automatically | Data Quality Assessment log |
| Imputation leakage / EDA2 (pre-feature derivation) | Target leakage check with is_imputed as the target, applied to KA features. Only checked for projects with time series data prep applied to the dataset. | Feature removed from KA features | Data page; optionally, filter by issue type |
| Pre-derived lagged features / EDA2 | Features equal to target(t-1), target(t-2) ... target(t-8) | Excluded from derivation | Data page; optionally, filter by issue type |
| Inconsistent gaps / EDA2 | Irregular time steps | Model runs in row-based mode | Message in the time-aware modeling configuration |
| Leading/trailing zeros / EDA2 | For series starting/ending with 0, compute probability of consecutive 0s; flag series with <5% probability | User correction | Data page; optionally, filter by issue type |
| Infrequent negative values / EDA1 | Fewer than 2% of values are negative | User correction | Warning message |
| New series in validation / EDA1 | More than 20% of series not seen in training data | User correction | Informational message |

Outliers

Outliers, the observation points that lie far from the sample mean, may be the result of data variability. DataRobot automatically creates blueprints that handle outliers. Each blueprint applies an appropriate method for handling outliers, depending on the modeling algorithm used in the blueprint. For linear models, DataRobot adds a binary column inside the blueprint to flag rows with outliers. Tree models handle outliers automatically.

DataRobot uses its own implementation of Ueda's algorithm for automatic detection of discordant outliers.

The data quality tool checks for outliers; to view outliers use the feature's histogram.

Multicategorical format errors

Multilabel modeling is a classification task that allows each row to contain one, several, or zero labels. To create a training dataset that can be used for multilabel modeling, you must follow the requirements for multicategorical features.

From a sampling of 100 random rows, DataRobot checks every feature that might qualify as multicategorical, looking for at least one value with the proper multicategorical format. If found, each row is checked to determine whether it complies with the multicategorical format. If there is at least one row that does not, the "multicategorical format error" is reported for the feature. The logic for the check is:

- Value must be a valid JSON.

- Value must represent a list of non-empty strings.
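The two requirements above can be sketched in a few lines of Python. This is an illustration only, not DataRobot's implementation: the function names `is_valid_multicategorical` and `has_format_error` are hypothetical, and the sketch assumes that an empty list (zero labels) is acceptable and that the values passed in are the rows already sampled for the check.

```python
import json


def is_valid_multicategorical(value):
    """Return True if `value` is valid JSON that represents a list of non-empty strings."""
    try:
        parsed = json.loads(value)
    except (TypeError, ValueError):
        # Not a string at all, or not parseable as JSON.
        return False
    if not isinstance(parsed, list):
        return False
    # Assumption: an empty list (a row with zero labels) is acceptable.
    return all(isinstance(item, str) and item != "" for item in parsed)


def has_format_error(values):
    """Report an error when at least one value is well formed and at least one is not."""
    flags = [is_valid_multicategorical(v) for v in values]
    # A feature with no well-formed value is simply not a multicategorical candidate.
    return any(flags) and not all(flags)


print(has_format_error(['["red", "blue"]', '["red", ""]']))  # True
```

A feature whose values never parse as a list of strings returns `False` here because it never qualifies as a multicategorical candidate in the first place.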

Inliers

Inliers are values that are neither above nor below the range of common values for a feature; however, they are anomalously frequent compared to nearby values (for example, 55555 as a zip code value, entered by people who don't want to disclose their real zip code). If not handled, they could negatively affect model performance.

For each value recorded for a feature, DataRobot computes the value's frequency for that feature and makes an array of the results. Inlier candidates are the outliers in that array. To reduce false positives, DataRobot then applies another condition, keeping as inliers only those values for which:

frequency > 50 * (number of non-missing rows in the feature) / (number of unique non-missing values in the feature)

The algorithm allows inlier detection in numeric features with many unique values where, due to the number of values, inliers wouldn’t be noticeable in a histogram plot. Note that this is a conservative approach for features with a smaller number of unique values. Additionally, it does not detect inliers in features with fewer than 50 unique values.

A binary column is automatically added inside of a blueprint to flag rows with inliers. This allows the model to incorporate possible patterns behind abnormal values. No additional user action is required.
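As a rough illustration of the frequency condition described above, the following Python sketch applies only the threshold `50 * rows / unique values`. It does not implement the first step (finding outliers in the frequency array), and it assumes missing values are represented as `None`. The function names are hypothetical.

```python
from collections import Counter


def inlier_frequency_threshold(values):
    """Threshold from the text: 50 * (non-missing rows) / (unique non-missing values)."""
    present = [v for v in values if v is not None]
    return 50 * len(present) / len(set(present))


def frequency_condition_candidates(values):
    """Return the values whose frequency exceeds the threshold, in sorted order."""
    present = [v for v in values if v is not None]
    if not present:
        return []
    threshold = inlier_frequency_threshold(present)
    counts = Counter(present)
    return sorted(v for v, count in counts.items() if count > threshold)


# 100 distinct zip codes seen once each, plus a placeholder entered 200 times.
zip_codes = list(range(10000, 10100)) + [55555] * 200
print(frequency_condition_candidates(zip_codes))  # [55555]
```

The sketch also shows why features with fewer than 50 unique values never produce inliers: in that case the threshold is larger than the number of non-missing rows, so no single value can exceed it.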

Excess zeros

Repeated zeros in a column could be regular values but could also represent missing values. For example, sales could be zero for a given item either because there was no demand for the item or due to no stock. Using 0s to impute missing values is often suboptimal, potentially leading to decreased model accuracy.

Using the frequency array described for inliers, if the frequency of the value 0 is an outlier, DataRobot flags the feature.

A binary column is automatically added inside of a blueprint to flag rows with excess zeros. This allows the model to incorporate possible patterns behind abnormal values. No additional user action is required.

Disguised missing values

A "disguised missing value" is the term applied to a situation when a value (for example, -999) is inserted to encode what would otherwise be a missing value. Because machine learning algorithms do not treat them automatically, these values could negatively affect model performance if not handled.

DataRobot finds values that both repeat with greater frequency than other values and are also detected outliers. To be considered a disguised missing value, repeated outliers must meet one of the following heuristics:

- All digits in the value are the same and repeat at least twice (e.g., 99, 88, 9999).

- The value begins with 1 and is then followed by two or more zeros.

- The value is equal to -1, 98, or 97.

Disguised missing values are handled in the same way as standard missing values—a median value is imputed and inserted and a binary column flags the rows where imputation occurred.
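The pattern heuristics listed above can be expressed as a small Python predicate. This is a sketch under stated assumptions rather than DataRobot's code: the function name is hypothetical, only integer-valued numbers are considered, and the sign is ignored for the digit patterns (so that a value such as -999 matches). The sketch also does not cover the preceding requirement that the value be a frequently repeated outlier.

```python
def matches_disguised_missing_pattern(value):
    """Return True if `value` matches one of the three pattern heuristics."""
    if isinstance(value, bool):
        return False
    if isinstance(value, float):
        # Assumption: only integer-valued numbers are considered.
        if not value.is_integer():
            return False
        value = int(value)
    if not isinstance(value, int):
        return False

    # Heuristic 3: the value is equal to -1, 98, or 97.
    if value in (-1, 98, 97):
        return True

    # Assumption: the sign is ignored when inspecting digits.
    digits = str(abs(value))

    # Heuristic 1: all digits are the same and repeat at least twice.
    if len(digits) >= 2 and len(set(digits)) == 1:
        return True

    # Heuristic 2: the value begins with 1, followed by two or more zeros.
    if len(digits) >= 3 and digits[0] == "1" and set(digits[1:]) == {"0"}:
        return True

    return False


print([v for v in (99, 1000, -1, 10, 42, -999) if matches_disguised_missing_pattern(v)])
# [99, 1000, -1, -999]
```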

Target leakage

The goal of predictive modeling is to develop a model that makes accurate predictions on new data, unseen during training. Because you cannot evaluate the model on data you don’t have, DataRobot estimates model performance on unseen data by saving off a portion of the historical dataset to use for evaluation.

A problem can occur, however, if the dataset uses information that is not known until the event occurs, causing target leakage. Target leakage refers to a feature whose value cannot be known at the time of prediction (for example, using the value for “churn reason” from the training dataset to predict whether a customer will churn). Including the feature in the model’s feature list would incorrectly influence the prediction and can lead to overly optimistic models.

DataRobot checks for target leakage during EDA2 by calculating ACE importance scores (Gini Norm metric) for each feature with regard to the target. Features that exceed the moderate-risk (0.85) threshold are flagged; features that exceed the high-risk (0.975) threshold are removed. See also the ELI5 on ACE scores and target leakage.

If the advanced option for leakage removal is enabled (which it is by default), DataRobot automatically creates a feature list (Informative Features - Leakage Removed) that removes the high-risk problematic columns. Moderate-risk features are marked with a yellow warning to alert you that you may want to investigate further.
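To make the two thresholds concrete, here is a minimal Python sketch that classifies features by an already computed Gini Norm score and builds a list without the high-risk features. The function names are hypothetical, the scores are illustrative, and the use of a strict "greater than" comparison is an assumption based on the wording "exceed"; the sketch does not compute ACE scores themselves.

```python
MODERATE_RISK_THRESHOLD = 0.85
HIGH_RISK_THRESHOLD = 0.975


def leakage_risk(gini_norm):
    """Classify a feature's Gini Norm importance score into a leakage risk level."""
    # Assumption: "exceeds" means strictly greater than the threshold.
    if gini_norm > HIGH_RISK_THRESHOLD:
        return "high"
    if gini_norm > MODERATE_RISK_THRESHOLD:
        return "moderate"
    return "none"


def leakage_removed_list(scores):
    """Keep every feature except those rated high risk."""
    return [name for name, score in scores.items() if leakage_risk(score) != "high"]


# Illustrative scores only.
scores = {"churn_reason": 0.99, "last_contact_days": 0.90, "tenure": 0.40}
print({name: leakage_risk(score) for name, score in scores.items()})
print(leakage_removed_list(scores))  # ['last_contact_days', 'tenure']
```

Note that the moderate-risk feature stays in the list; it is only marked for review.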

After DataRobot detects leakage and creates Informative Features - Leakage Removed, it behaves according to the Advanced Option “Run Autopilot on feature list with target leakage removed” setting. If enabled (the default):

- Quick, full, or Comprehensive Autopilot: DataRobot runs the newly created feature list unless you specified a user-created list. To run on one of the other default lists, rebuild models after the initial build with any list you select.

- Manual mode: DataRobot makes the list available so that you can apply it, at your discretion, from the Repository.

- The target leakage list will be available when adding models after the initial build.

If disabled, DataRobot applies the above to Informative Features (with potential target leakage remaining) or any user-created list you specified.

Imputation leakage

The time series data prep tool can impute target and feature values for dates that are missing in the original dataset. The data quality check ensures that the imputed features are not leaking the imputed target. This is only a potential problem for features that are known in advance (KA), since the feature value is concurrent with the target value DataRobot is predicting.

DataRobot derives a binary classification target is_imputed = (aggregated_row_count == 0). Prior to deriving time series features, it applies the target leakage check to each KA feature, using is_imputed as the target.

Any features identified as high or moderate risk for imputation leakage are removed from the set of KA features. Subsequently, time series feature derivation proceeds as normal.
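The derivation of `is_imputed` and the removal step can be sketched as follows. This is an illustration with hypothetical function names; it assumes the leakage risk level of each KA feature against `is_imputed` has already been computed elsewhere and is supplied as a dictionary.

```python
def derive_is_imputed(aggregated_row_counts):
    """is_imputed is True for rows that aggregate zero original rows."""
    return [count == 0 for count in aggregated_row_counts]


def filter_ka_features(ka_features, risk_by_feature):
    """Drop KA features rated high or moderate risk for imputation leakage."""
    return [
        feature
        for feature in ka_features
        # Assumption: features without a recorded risk level are kept.
        if risk_by_feature.get(feature, "none") not in ("high", "moderate")
    ]


# Dates 2 and 4 were missing in the original data, so their rows were imputed.
print(derive_is_imputed([3, 0, 5, 0]))  # [False, True, False, True]
print(filter_ka_features(["holiday", "promo"], {"promo": "moderate"}))  # ['holiday']
```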

Pre-derived lagged feature

When a time series project starts, DataRobot automatically creates multiple date/time-related features, like lags and rolling statistics. There are times, however, when you do not want to automate time-based feature engineering (for example, if you have extracted your own time-oriented features and do not want further derivation performed on them). In this case, you should flag those features as Excluded from derivation or Known in advance. The “Lagged feature” check helps to detect whether features that should have been flagged were not, which would lead to duplication of columns.

DataRobot compares each non-target feature with target(t-1), target(t-2) ... target(t-8).

All features detected as lags are automatically set as excluded from derivation to prevent "double derivation." Best practice suggests reviewing other uploaded features and setting all pre-derived features as “Excluded from derivation” or “Known in advance”, if applicable.
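The comparison against target(t-1) through target(t-8) can be illustrated with a short Python function. This is a sketch, not DataRobot's detection code: the function name is hypothetical, it assumes a single series sorted by time with equal-length lists, and it uses exact equality while ignoring the first k rows, where a lag of k has no defined value.

```python
def detect_target_lag(feature, target, max_lag=8):
    """Return the lag k (1..max_lag) for which feature[t] == target[t - k], else None."""
    for k in range(1, max_lag + 1):
        if len(target) <= k:
            # Not enough rows to compare at this lag or any larger one.
            break
        if all(feature[t] == target[t - k] for t in range(k, len(target))):
            return k
    return None


target = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]
sales_two_steps_ago = [None, None] + target[:-2]
print(detect_target_lag(sales_two_steps_ago, target))  # 2
```

A feature for which the function returns a lag would be a candidate for the "Excluded from derivation" setting.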

Irregular time steps

The “inconsistent gaps” check is flagged when a time series model has irregular time steps. These gaps cause inaccurate rolling statistics. Some examples:

- Transactional data is not aggregated for a time series project and raw transactional data is used.

- Transactional data is aggregated into a daily sales dataset, and dates with zero sales are not added to the dataset.

DataRobot detects when there are expected timestamps missing.

It is important to understand that gaps could be consistent (for example, no sales for each weekend). DataRobot accounts for that and only detects inconsistent or unexpected gaps.

Because missing time steps distort rolling statistics, the project runs in row-based mode (that is, as a regular project with out-of-time validation, or OTV) if more than 20% of expected time steps are missing. If that is not the intended behavior, make corrections in the dataset and recreate the project.
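The 20% rule can be illustrated with a simplified Python sketch. It assumes a single series, a known fixed time step, and a non-empty list of timestamps, and it does not model the consistent gaps (such as weekends) that DataRobot accounts for. The function names are hypothetical.

```python
from datetime import datetime, timedelta


def missing_step_fraction(timestamps, step):
    """Fraction of expected steps between the first and last timestamp that are absent."""
    observed = set(timestamps)
    start, end = min(observed), max(observed)
    expected = []
    current = start
    while current <= end:
        expected.append(current)
        current += step
    missing = [ts for ts in expected if ts not in observed]
    return len(missing) / len(expected)


def runs_in_row_based_mode(timestamps, step, threshold=0.20):
    """True when more than `threshold` of the expected time steps are missing."""
    return missing_step_fraction(timestamps, step) > threshold


day = timedelta(days=1)
start = datetime(2024, 1, 1)
# Daily data over 10 days with only 5 of the 10 dates present.
sparse = [start + i * day for i in (0, 1, 2, 5, 9)]
print(missing_step_fraction(sparse, day))   # 0.5
print(runs_in_row_based_mode(sparse, day))  # True
```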

Leading or trailing zeros

Just as for excess zeros, this check works to detect zeros that are used to fill in missing values. It works for the special case where 0s are used to fill in missing values in the beginning or end of series that started later or finished earlier than others.

DataRobot estimates a total rate for zeros in each series and performs a statistical test to identify the number of consecutive zeros that cannot be considered a natural sequence of zeros.

DataRobot does not correct flagged series automatically. If the flagged zeros do not represent real values, make corrections in the dataset and recreate the project.
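One simple way to picture this kind of test is shown below. It is a loose sketch and not DataRobot's statistical test: it assumes zeros occur independently, estimates the zero rate over the whole series, and treats a run of k zeros as having probability rate^k, flagging the series when that probability falls below 5%. All function names are hypothetical.

```python
def leading_zero_run(series):
    """Count the consecutive zeros at the start of the series."""
    run = 0
    for value in series:
        if value != 0:
            break
        run += 1
    return run


def flag_leading_zeros(series, alpha=0.05):
    """Flag a series whose leading run of zeros is improbable given its zero rate."""
    run = leading_zero_run(series)
    if run == 0:
        return False
    # Assumption: the zero rate is estimated over the entire series.
    zero_rate = sum(1 for value in series if value == 0) / len(series)
    # Assumption: zeros are independent, so a run of `run` zeros has probability rate ** run.
    return zero_rate ** run < alpha


def flag_trailing_zeros(series, alpha=0.05):
    """Apply the same test to the end of the series."""
    return flag_leading_zeros(list(reversed(series)), alpha)


late_starter = [0] * 10 + [5, 7, 6, 8] * 20
print(flag_leading_zeros(late_starter))               # True
print(flag_leading_zeros([0, 5, 0, 3, 0, 0, 4, 0]))   # False
```

In the second call, zeros are common throughout the series, so a single leading zero is not surprising and the series is not flagged.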

Infrequent negative values

Data with excess zeros in the target can be modeled with a special two-stage model for zero-inflated cases. This model is only available when the minimum value of the target is zero (that is, a single negative value invalidates its use). In sales data, for example, negative values can appear when returns are recorded along with sales. This data quality check identifies negative values in cases where two-stage models would otherwise be appropriate and warns you to correct the target if you want to enable zero-inflated modeling and other additional blueprints.

If DataRobot detects that fewer than 2% of values are negative, it treats the project as zero-inflated.

DataRobot surfaces a warning message.
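The 2% rule amounts to a simple share calculation, sketched here in Python. The function name is hypothetical, missing values are assumed to be represented as `None`, and the sketch assumes the warning applies only when at least one negative value is present.

```python
def has_infrequent_negatives(target, threshold=0.02):
    """True when negative values exist but make up less than `threshold` of the target."""
    values = [v for v in target if v is not None]
    if not values:
        return False
    negative_share = sum(1 for v in values if v < 0) / len(values)
    return 0 < negative_share < threshold


# 1 return recorded among 100 sales rows: 1% of values are negative.
print(has_infrequent_negatives([10] * 99 + [-1]))  # True
```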

New series in validation

Depending on the project settings (training and validation partition sizes), a multiseries project might be configured so that a new series is introduced at the end of the dataset and therefore isn't part of the training data. For example, this could happen when a new store opens. This check returns an information message indicating that the new series is not within the training data.

If DataRobot detects that more than 20% of series are new (meaning that they are not in the training data), it surfaces an informational message.
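As an illustration of the 20% rule, the following Python sketch compares the series identifiers found in each partition. The function names are hypothetical, and the choice of denominator (all series in the dataset rather than only those in validation) is an assumption, since the text does not specify it.

```python
def new_series_share(training_ids, validation_ids):
    """Share of all series that appear in validation but not in training."""
    train = set(training_ids)
    valid = set(validation_ids)
    all_series = train | valid
    if not all_series:
        return 0.0
    # Assumption: the share is measured against every series in the dataset.
    return len(valid - train) / len(all_series)


def has_many_new_series(training_ids, validation_ids, threshold=0.20):
    """True when more than `threshold` of series are unseen in training."""
    return new_series_share(training_ids, validation_ids) > threshold


# Three existing stores plus one store that opened during the validation period.
print(new_series_share(["a", "b", "c"], ["a", "b", "c", "d"]))     # 0.25
print(has_many_new_series(["a", "b", "c"], ["a", "b", "c", "d"]))  # True
```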

Missing images

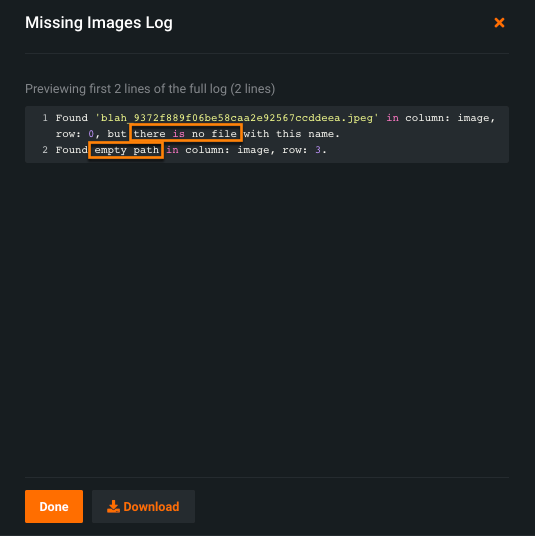

When an image dataset is used to build a Visual AI project, the CSV contains paths to images contained in the provided ZIP archive. These paths can be missing, refer to an image that does not exist, or refer to an invalid image. A missing path is not necessarily an issue as a row could contain a variable number of images or simply not have an image for that row and column. Click Preview Log for a more detailed view:

In this example, row 1 reports a file name referenced that did not exist in the uploaded file (1). Row 2 reports that a row was missing an image path (2). The log provides both the nature of the issue as well as the row in which the problem occurred. The log previews up to 100 rows; choose Download to export the log and view additional rows.

DataRobot checks each image path provided to ensure it refers to an image that exists and is valid.

For paths that fail to resolve, DataRobot attempts to find the intended image and replace the problematic path. In the event that an auto-correction is not possible, the problematic path is removed. If the image was invalid, the path is removed.

All missing images, paths that fail to resolve (even when automatically fixed), and invalid images are logged and available for viewing.