Monitoring agent

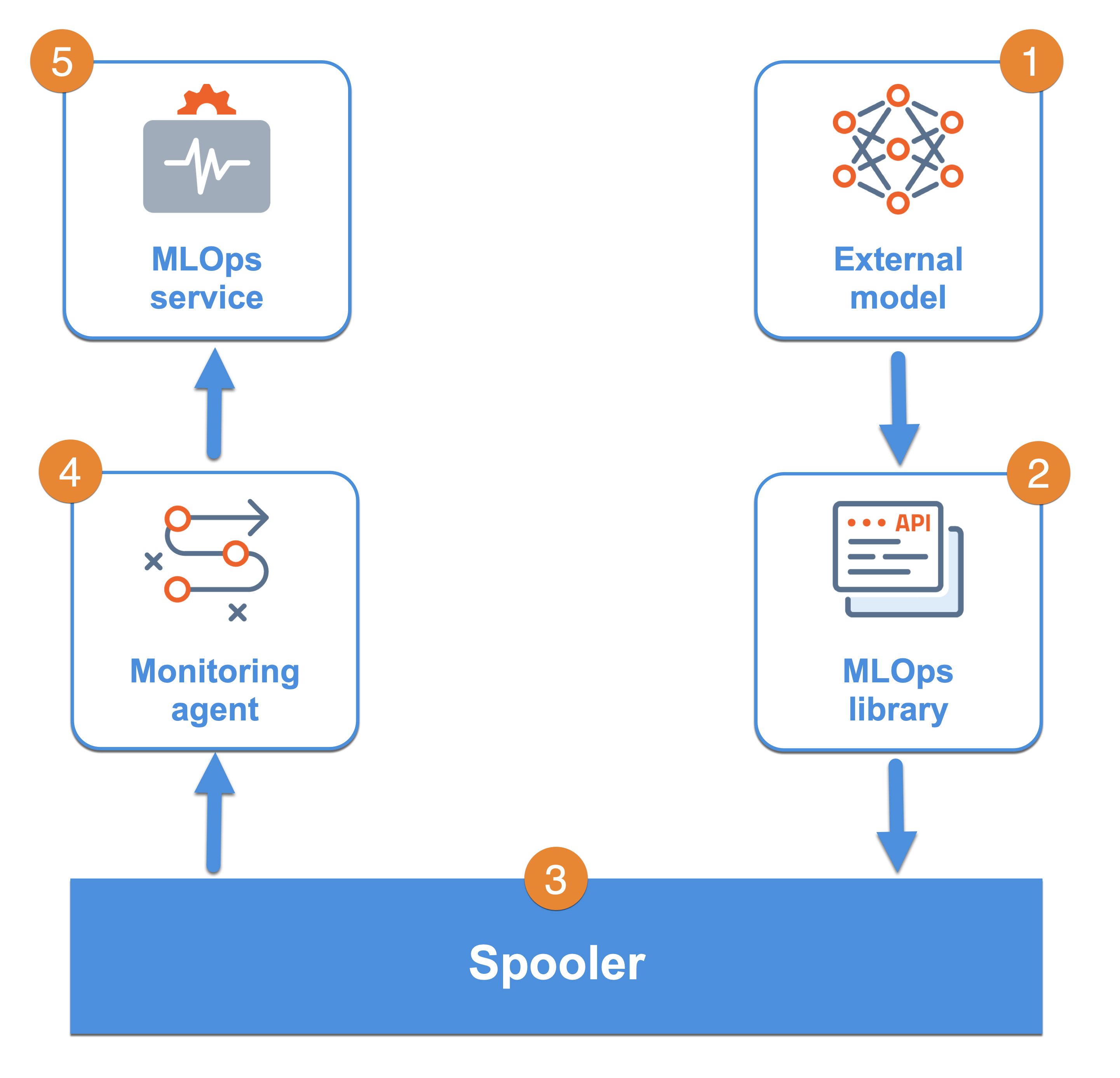

When you enable the monitoring agent feature, you have access to the agent installation and MLOps components, all packaged within a single tarball. The image below illustrates the roles of these components in enabling DataRobot MLOps to monitor external models.

Java requirement

The MLOps monitoring library requires Java 11 or higher. Without monitoring, a model's Scoring Code JAR file requires Java 8 or higher; when you use the MLOps library to instrument monitoring, the Scoring Code JAR file requires Java 11 or higher. For Self-Managed AI Platform installations, the Java 11 requirement applies to DataRobot v11.0 and higher.

| | Component | Description |
|---|---|---|
| 1 | External model | External models are machine learning models running outside of DataRobot, within your environment. The deployments (running in Python or Java) score data and generate predictions along with other information, such as the number of predictions generated and the time taken to generate each. |
| 2 | DataRobot MLOps library | The MLOps library, available in Python (v2 and v3) and Java, provides APIs to report prediction data and information from a specified deployment (identified by deployment ID and model ID). Supported library calls for the MLOps client let you specify which data to report to the MLOps service, including prediction time, number of predictions, and other metrics and deployment statistics. |
| 3 | Spooler (buffer) | The library-provided APIs pass messages to a configured spooler (or buffer). |
| 4 | Monitoring agent | The monitoring agent detects data written to the target buffer location and reports it to the MLOps service. |
| 5 | DataRobot MLOps service | The MLOps service receives the data reported by the monitoring agent. If the agent is running as a service, it retrieves and reports the data as soon as it's available; otherwise, it does so when it is run manually. |
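To make the data flow in the table concrete, here is a toy Python model of components 2 through 5: a hypothetical filesystem buffer that the "library" side writes to and the "agent" side drains and forwards. The class, the one-JSON-file-per-message format, the message fields, and the `report` callback are all assumptions for illustration; the real MLOps library and agent use their own spooler formats and configuration.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, List


class FilesystemSpool:
    """Toy buffer: one JSON file per message in a directory (hypothetical format)."""

    def __init__(self, directory: Path) -> None:
        self.directory = directory
        self._counter = 0  # zero-padded sequence keeps files in write order (single writer assumed)

    def write(self, message: dict) -> None:
        """Library side (2 -> 3): buffer a message in a local file."""
        self._counter += 1
        (self.directory / f"msg-{self._counter:08d}.json").write_text(json.dumps(message))

    def drain(self) -> List[dict]:
        """Agent side (3 -> 4): read buffered messages in order, then delete them."""
        messages = []
        for path in sorted(self.directory.glob("msg-*.json")):
            messages.append(json.loads(path.read_text()))
            path.unlink()
        return messages


def run_agent_once(spool: FilesystemSpool, report: Callable[[dict], None]) -> int:
    """One agent pass (4 -> 5): forward every buffered message, return how many were sent."""
    messages = spool.drain()
    for message in messages:
        report(message)  # stand-in for the call to the DataRobot MLOps service
    return len(messages)


with tempfile.TemporaryDirectory() as tmp:
    spool = FilesystemSpool(Path(tmp))
    # The external model (1) reports stats for a placeholder deployment ID.
    spool.write({"deployment_id": "dep-123", "num_predictions": 10, "execution_time_ms": 42.0})
    received: List[dict] = []  # plays the role of the MLOps service
    forwarded = run_agent_once(spool, received.append)

print(forwarded, received[0]["num_predictions"])  # 1 10
```

The point of the split is that the model only ever writes to the buffer; delivering data to DataRobot MLOps is the agent's job, which keeps reporting from slowing down scoring.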

If models run in isolation, disconnected from the network, data written to the buffer directory cannot be transferred over the network. For these deployments, you can manually copy prediction data from the buffer location (for example, via USB drive) as needed. The agent then accesses that data as configured and reports it to the MLOps service.

Additional monitoring agent configuration settings specify where to read data from and report data to, how frequently to report the data, and so forth. The flexible monitoring agent design ensures support for a variety of deployment and environment requirements.
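As an illustration only, the three kinds of settings named above (where to read from, where to report to, and how often) could be modeled and sanity-checked as follows. The field names and validation rules are hypothetical; they are not the monitoring agent's actual configuration keys, which ship with the agent.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class AgentSettings:
    """Hypothetical settings: where to read, where to report, and how often."""

    buffer_location: str    # where the agent reads buffered data from
    service_url: str        # where the agent reports data to (DataRobot MLOps)
    report_interval_s: int  # how frequently to report, in seconds

    def validate(self) -> None:
        """Raise ValueError on settings that could never work."""
        if not self.buffer_location:
            raise ValueError("buffer_location must not be empty")
        parsed = urlparse(self.service_url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"service_url is not an HTTP(S) URL: {self.service_url!r}")
        if self.report_interval_s <= 0:
            raise ValueError("report_interval_s must be positive")


AgentSettings("/tmp/mlops-spool", "https://my-server-name", 60).validate()  # passes silently
```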

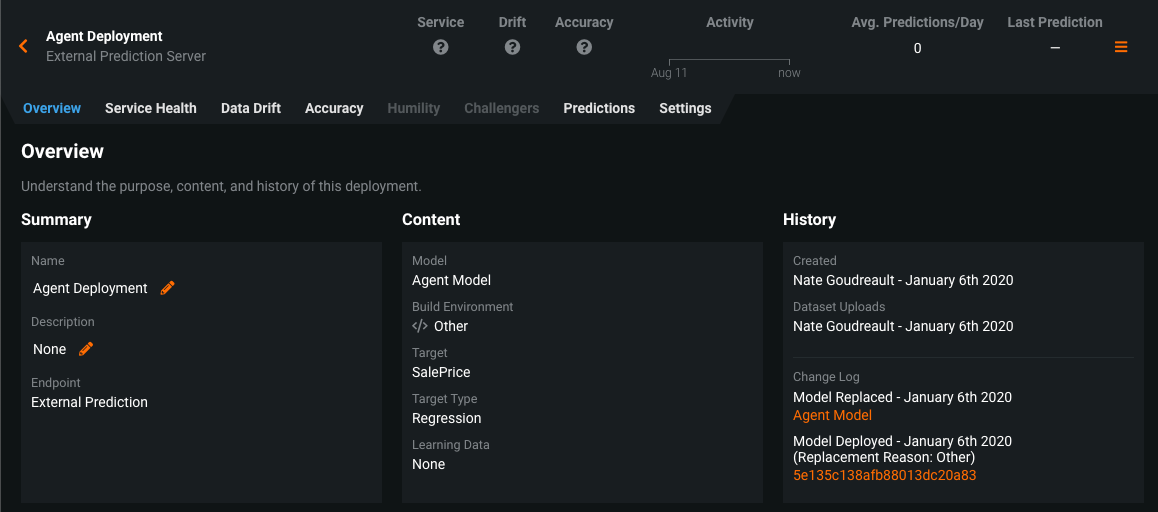

Finally, from the deployment inventory, you can view your deployments and manage their prediction statistics and metrics.

Monitoring agent requirements

To use the monitoring agent with a remote deployment environment, you must provide:

- The URL of DataRobot MLOps. (For Self-Managed AI Platform installations, this is typically of the form `https://10.0.0.1` or `https://my-server-name`.)

- An API key from DataRobot. You can create one in the UI: under account settings, open the API keys and tools tab and find the API Keys section.
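Rather than hard-coding these two values in scripts, you might read them from environment variables. A minimal sketch, assuming the variable names `MLOPS_SERVICE_URL` and `MLOPS_API_TOKEN` (chosen for illustration; use whatever names your own scripts expect). The `masked` helper is likewise hypothetical and keeps the full API key out of logs.

```python
import os
from typing import Mapping, Optional, Tuple


def load_mlops_credentials(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Read the MLOps URL and API key from the environment (variable names assumed)."""
    env = os.environ if env is None else env
    url = env.get("MLOPS_SERVICE_URL", "").rstrip("/")
    token = env.get("MLOPS_API_TOKEN", "")
    missing = [name for name, value in (("MLOPS_SERVICE_URL", url), ("MLOPS_API_TOKEN", token)) if not value]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    if not url.startswith(("https://", "http://")):
        raise ValueError(f"MLOPS_SERVICE_URL must include a scheme: {url!r}")
    return url, token


def masked(token: str) -> str:
    """Show only the last four characters so the key is never logged in full."""
    return "*" * max(len(token) - 4, 0) + token[-4:]


url, token = load_mlops_credentials(
    {"MLOPS_SERVICE_URL": "https://my-server-name/", "MLOPS_API_TOKEN": "abcd1234"}
)
print(url, masked(token))  # https://my-server-name ****1234
```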

Additionally, refer to the documentation for creating and deploying a model package.

MLOps agent tarball

You can download the MLOps agent tarball from two locations:

- The API keys and tools page

- The Predictions > Monitoring tab of a deployment configured to monitor an external model

The MLOps agent tarball contains the MLOps libraries for you to install. See monitoring agent and prediction reporting setup to configure the monitoring agent.

Python library public download

You can download the MLOps Python libraries from the public Python Package Index site. Download and install the DataRobot MLOps metrics reporting library and the DataRobot MLOps Connected Client. These pages include instructions for installing the libraries.

Java library public download

You can download the MLOps Java library and agent from the public Maven Repository with a groupId of com.datarobot and an artifactId of datarobot-mlops (library) and mlops-agent (agent). In addition, you can access the DataRobot MLOps Library and DataRobot MLOps Agent artifacts in the Maven Repository to view all versions and download and install the JAR file.

In addition to the MLOps library, the tarball includes Python and Java API examples and accompanying datasets to:

- Create a deployment that generates (example) predictions for both regression and classification models.

- Report metrics from deployments using the MLOps library.

The tarball also includes scripts to:

- Start and stop the agent, as well as retrieve the current agent status.

- Create a remote deployment that uploads a training dataset and returns the deployment ID and model ID for the deployment.

How the agent works

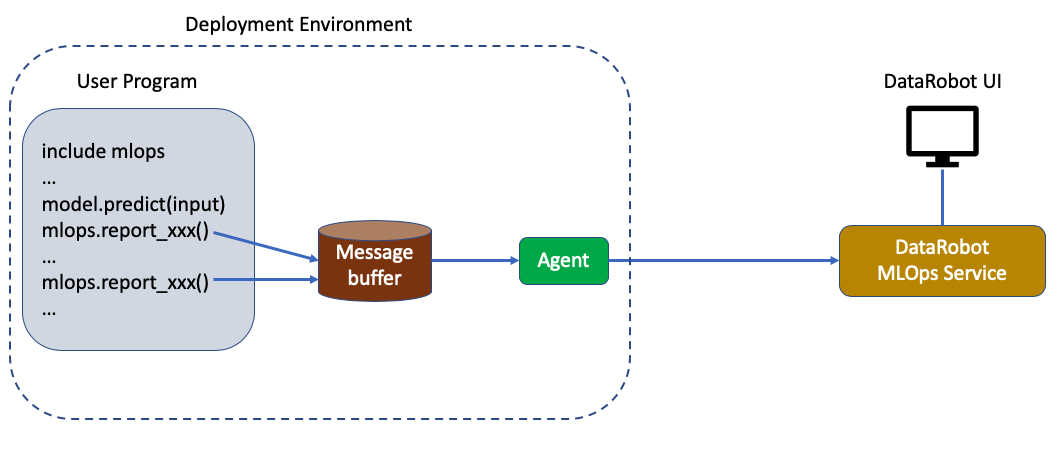

This section outlines the basic workflow for using the monitoring agent from different environments.

Using DataRobot MLOps:

- Use the Model Registry to create a model package with information about your model's metadata.

- Deploy the model package. Create a deployment to display metrics about the running model.

- Use the deployment Predictions tab to view a code snippet demonstrating how to instrument your prediction code with the monitoring agent to report metrics.

Using a remote deployment environment:

- Install the monitoring agent.

- Use the MLOps library to report metrics from your prediction code, as demonstrated by the snippet. The MLOps library buffers the metrics in a spooler (for example, the filesystem, RabbitMQ, or Kafka), which enables high throughput without slowing down the deployment.

- The monitoring agent forwards the metrics to DataRobot MLOps.

- You can view the reported metrics via the DataRobot MLOps deployment inventory.
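The instrumentation step in this workflow amounts to wrapping your scoring call so that each batch's prediction count and scoring time get reported. The sketch below is hypothetical: `predict_and_report`, `toy_model`, and the `report_stats` callback are illustrative stand-ins, not the MLOps library API; take the actual calls from the snippet on your deployment's Predictions tab.

```python
import time
from typing import Callable, List, Sequence


def predict_and_report(
    predict: Callable[[Sequence[dict]], List[float]],
    rows: Sequence[dict],
    report_stats: Callable[[int, float], None],
) -> List[float]:
    """Score a batch, then report the prediction count and scoring time in milliseconds."""
    start = time.perf_counter()
    predictions = predict(rows)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    # Stand-in for the MLOps library call from the deployment's code snippet.
    # In the real setup, that call hands the data to the configured spooler,
    # not to DataRobot MLOps directly.
    report_stats(len(predictions), elapsed_ms)
    return predictions


def toy_model(rows: Sequence[dict]) -> List[float]:
    """Hypothetical regression model: doubles the "x" feature."""
    return [2.0 * row["x"] for row in rows]


buffered = []  # stands in for the spooler
preds = predict_and_report(toy_model, [{"x": 1.0}, {"x": 2.5}], lambda n, ms: buffered.append((n, ms)))
print(preds, buffered[0][0])  # [2.0, 5.0] 2
```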

Monitoring agent and prediction reporting setup

The following sections outline how to configure both the machine using the monitoring agent to upload data, and the machine using the MLOps library to report predictions.

Monitoring agent configuration

Complete the following workflow for each machine using the monitoring agent to upload data to DataRobot MLOps. This setup only needs to be performed once for each deployment environment.

- Ensure that Java is installed (see the Java requirement above for the minimum version).

- Download the MLOps agent tarball, available through the API keys and tools tab. The tarball includes the monitoring agent and library software, example code, and associated scripts.

- Unpack the tarball and change to the unpacked directory.

- Install the monitoring agent.

- Configure the monitoring agent.

- Run the agent service.
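To confirm the Java step, you can parse the version that `java -version` reports and compare it with the Java 11 minimum that applies when the MLOps library instruments monitoring. A minimal sketch follows; the helper names are hypothetical, and it expects only the version string itself (for example, `17.0.1` or `1.8.0_292`), not the full command output. Java 8 and earlier use the legacy `1.x` numbering, which the parser maps to the major version.

```python
import re

MIN_JAVA_FOR_MLOPS = 11  # minimum Java major version when using the MLOps library


def java_major_version(version: str) -> int:
    """Return the major version from a Java version string.

    Java 8 and earlier use the legacy "1.<major>" scheme (e.g. "1.8.0_292"),
    while Java 9+ start with the major version itself (e.g. "11.0.2", "17").
    """
    match = re.match(r"(\d+)(?:\.(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unrecognized Java version: {version!r}")
    first, second = int(match.group(1)), match.group(2)
    if first == 1 and second is not None:
        return int(second)  # legacy scheme: "1.8" means Java 8
    return first


def supports_mlops_monitoring(version: str) -> bool:
    """True if this Java version meets the MLOps library's Java 11 minimum."""
    return java_major_version(version) >= MIN_JAVA_FOR_MLOPS


print(supports_mlops_monitoring("1.8.0_292"))  # False
print(supports_mlops_monitoring("11.0.2"))     # True
```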

Host predictions

For each machine using the MLOps library to report predictions, ensure that appropriate libraries and requirements are installed. There are two locations where you can obtain the libraries:

Download the MLOps agent tarball and install the libraries:

- Java: The Java library is included in the .tar file in `lib/datarobot-mlops-<version>.jar`.

- Python: The Python version of the library is included in the .tar file in `lib/datarobot_mlops-*-py2.py3-none-any.whl`. This works for both Python 2 and Python 3. You can install it using: `pip install lib/datarobot_mlops-*-py2.py3-none-any.whl`

Download the MLOps Python libraries from the Python Package Index site:

- DataRobot MLOps metrics reporting library. Download and then install: `pip install datarobot-mlops`

- DataRobot MLOps Connected Client (mlops-cli). Download and then install: `pip install datarobot-mlops-connected-client`

The MLOps agent .tar file includes several end-to-end examples in various languages.

Create and deploy a model package

A model package stores metadata about your external model: the problem type (e.g., regression), the training data used, and more. You can create a model package using the Model Registry and deploy it.

In the deployment's Integrations tab, you can view example code as well as the values for the MLOPS_DEPLOYMENT_ID and MLOPS_MODEL_ID that are necessary to report statistics from your deployment.

If you prefer to create a model package using the API, you can follow the pattern used in the helper scripts in the examples directory. Each example has its own create_deployment.sh script that creates the related model package and deployment. This script interacts with DataRobot MLOps directly, so it must be run on a machine with connectivity to it. When run, each script outputs a deployment ID and model ID that are then used by the run_example.sh script, where the model inference and subsequent metrics reporting take place.
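Whichever path you take, your prediction code needs the deployment ID and model ID. A minimal sketch, assuming you export them as environment variables named after the `MLOPS_DEPLOYMENT_ID` and `MLOPS_MODEL_ID` values shown in the Integrations tab; the `DeploymentIds` type and `deployment_ids_from_env` function are hypothetical helpers, not part of the MLOps library.

```python
import os
from typing import Mapping, NamedTuple, Optional


class DeploymentIds(NamedTuple):
    deployment_id: str
    model_id: str


def deployment_ids_from_env(env: Optional[Mapping[str, str]] = None) -> DeploymentIds:
    """Read the deployment and model IDs, failing early with a clear message if one is unset."""
    env = os.environ if env is None else env
    try:
        return DeploymentIds(env["MLOPS_DEPLOYMENT_ID"], env["MLOPS_MODEL_ID"])
    except KeyError as exc:
        raise RuntimeError(f"set {exc.args[0]} before reporting metrics") from exc


# Placeholder values; use the IDs printed by create_deployment.sh or shown in the UI.
ids = deployment_ids_from_env({"MLOPS_DEPLOYMENT_ID": "<deployment-id>", "MLOPS_MODEL_ID": "<model-id>"})
print(ids.deployment_id, ids.model_id)
```

Failing at startup is deliberate: without both IDs, nothing the code reports can be attributed to a deployment.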

When creating and deploying an external model package, you can upload the data used to train the model: the training dataset, the holdout dataset, or both. When you upload this data, it is used to monitor the model. The datasets you upload provide the following functionality:

- Training dataset: Provides the baseline for feature drift monitoring.

- Holdout dataset: Provides the predictions used as a baseline for accuracy monitoring.
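To show how a training baseline feeds drift monitoring, the sketch below computes the Population Stability Index (PSI), one common drift measure, for a single numeric feature: PSI is the sum over bins of (actual share minus expected share) times ln(actual share / expected share), where the expected shares come from the training data. This illustrates the general technique with hand-picked bin edges; it is not a description of how DataRobot computes drift.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Fraction of values per bin; edges are interior cut points, giving len(edges) + 1 bins."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # eps keeps empty bins from producing log(0) or division by zero
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], scoring: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index of scoring data against the training baseline."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(scoring, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


training = [0.1, 0.2, 0.4, 0.6, 0.8, 0.9]
print(psi(training, training, edges=[0.5]))                   # 0.0 (no drift)
print(psi(training, [0.7, 0.8, 0.9, 0.95], edges=[0.5]) > 0)  # True (shifted upward)
```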

You can find examples of the expected data format for holdout datasets in the examples/data folder of the agent tar file:

- mlops-example-lending-club-holdout.csv: Demonstrates the holdout data format for regression models.

- mlops-example-iris-holdout.csv: Demonstrates the holdout data format for classification models.

Note

For classification models, you must provide the predictions for all classes.
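Before uploading, you can sanity-check a classification holdout file. This sketch assumes one prediction column per class holding that class's probability, so each row's values should sum to 1; the column names, the probability interpretation, and the sum check are assumptions, so compare against `mlops-example-iris-holdout.csv` for the exact expected layout. The function name is hypothetical.

```python
import csv
import io
import math
from typing import List, Sequence


def check_classification_holdout(csv_text: str, prob_columns: Sequence[str], tol: float = 1e-3) -> List[str]:
    """Return problems found; prob_columns must name one prediction column per class."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [c for c in prob_columns if c not in header]
    if missing:
        return [f"missing prediction column(s): {', '.join(missing)}"]
    problems = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        total = sum(float(row[c]) for c in prob_columns)
        if not math.isclose(total, 1.0, abs_tol=tol):
            problems.append(f"line {line_no}: class predictions sum to {total:.3f}, not 1")
    return problems


# Hypothetical three-class holdout data; the second data row is missing probability mass.
sample = "sepal_length,setosa,versicolor,virginica\n5.1,0.9,0.05,0.05\n6.3,0.1,0.2,0.6\n"
print(check_classification_holdout(sample, ["setosa", "versicolor", "virginica"]))
# ['line 3: class predictions sum to 0.900, not 1']
```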

Instrument deployments with the monitoring agent

To configure the monitoring agent with each deployment:

- Locate the MLOps library and sample code. These are included in the MLOps .tar file distribution.

- Configure the deployment ID and model ID in your environment.

- Instrument your code with MLOps calls as shown in the sample code provided for your programming language.

- To report results to DataRobot MLOps, you must configure the library to use the same channel that is configured in the agent. For testing, you can configure the library to output to stdout; however, these calls are not forwarded to the agent or DataRobot MLOps. Configure the library via the MLOps API.

- You can view your deployment in the DataRobot MLOps UI under the Deployments tab.
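The channel rule in the list above can be expressed as a small check. In this hypothetical sketch, channels are identified by plain strings such as `"filesystem"` or `"stdout"`, and `StdoutSpool` mimics test output by writing each message as a JSON line; neither the names nor the classes are the MLOps library's actual configuration API.

```python
import io
import json
import sys
from typing import Optional, TextIO


class StdoutSpool:
    """Test-only output: writes each message as one JSON line and forwards nothing."""

    def __init__(self, stream: Optional[TextIO] = None) -> None:
        self.stream = sys.stdout if stream is None else stream

    def write(self, message: dict) -> None:
        self.stream.write(json.dumps(message, sort_keys=True) + "\n")


def reaches_mlops(library_channel: str, agent_channel: str) -> bool:
    """Reports reach DataRobot MLOps only if the library writes where the agent reads."""
    return library_channel != "stdout" and library_channel == agent_channel


buf = io.StringIO()  # capture instead of printing, to inspect the test output
StdoutSpool(buf).write({"num_predictions": 2, "execution_time_ms": 3.5})
print(buf.getvalue(), end="")  # {"execution_time_ms": 3.5, "num_predictions": 2}
print(reaches_mlops("filesystem", "filesystem"), reaches_mlops("stdout", "stdout"))  # True False
```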