Make predictions with the API

This section describes how to use DataRobot's Prediction API to make predictions on a dedicated prediction server. If you need Prediction API reference documentation, it is available here.

You can use DataRobot's Prediction API for making predictions on a model deployment (by specifying the deployment ID). This provides access to advanced model management features like target or data drift detection. DataRobot's model management features are safely decoupled from the Prediction API so that you can gain their benefit without sacrificing prediction speed or reliability. See the deployment section for details on creating a model deployment.

Before generating predictions with the Prediction API, review the recommended best practices to ensure the fastest predictions.

Making predictions

To generate predictions on new data using the Prediction API, you need:

- The model's deployment ID. You can find the ID in the sample code output of the Deployments > Predictions > Prediction API tab (with Interface set to "API Client").

- Your API key.

Warning

If your model is an open-source R script, it will run considerably slower.

Prediction requests are submitted as POST requests to the resource, for example:

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions" \

-H "Authorization: Bearer <API key>" -F \

file=@~/.home/path/to/dataset.csv

Availability information

Managed AI Platform (SaaS) users must include the datarobot-key header in the cURL request (for example, curl -H "Content-Type: application/json" -H "datarobot-key: xxxx"). Find the key by displaying secrets on the Predictions > Prediction API tab or by contacting your DataRobot representative.

The order of the prediction response rows is the same as the order of the sent data.

The response is similar to:

HTTP/1.1 200 OK

Content-Type: application/json

X-DataRobot-Execution-Time: 38

X-DataRobot-Model-Cache-Hit: true

{"data":[...]}

Note

The example above shows an arbitrary hostname (example.datarobot.com) as the Prediction API URL; be sure to use the correct hostname of your dedicated prediction server. The configured (predictions) URL is displayed in the sample code of the Deployments > Predictions > Prediction API tab. See your system administrator for more assistance if needed.
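As a rough Python counterpart to the cURL request above, the following sketch builds (but does not send) a JSON prediction request using only the standard library. The function name `build_prediction_request` and all argument values are hypothetical placeholders; the URL pattern and header names are taken from the examples in this section.

```python
import json
import urllib.request


def build_prediction_request(host, deployment_id, api_key, rows, datarobot_key=None):
    """Build (but do not send) a JSON prediction request for a deployment."""
    url = f"https://{host}/predApi/v1.0/deployments/{deployment_id}/predictions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    if datarobot_key is not None:
        # Only needed on the Managed AI Platform (SaaS), per the note above.
        headers["datarobot-key"] = datarobot_key
    body = json.dumps(rows).encode("utf-8")  # JSON array of row objects
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_prediction_request(
    host="example.datarobot.com",      # placeholder hostname
    deployment_id="<deploymentId>",    # placeholder
    api_key="<API key>",               # placeholder
    rows=[{"a": 1, "b": 2, "c": 3}],
)
print(request.get_method(), request.full_url)
```

To send the request, pass it to `urllib.request.urlopen` and parse the response body with `json.load`.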

Using persistent HTTP connections

All prediction requests are served over a secure connection (SSL/TLS), which can result in significant connection setup time. Depending on your network latency to the prediction instance, this can be anywhere from 30ms to upwards of 100-150ms.

To address this, the Prediction API supports HTTP Keep-Alive, enabling your systems to keep a connection open for up to a minute after the last prediction request.

Using the Python requests module, run your prediction requests from requests.Session:

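    import json

    import requests

    # Each request body is a JSON array of row objects.
    data = [
        json.dumps([{'Feature1': 42, 'Feature2': 'text value 1'}]),
        json.dumps([{'Feature1': 60, 'Feature2': 'text value 2'}]),
    ]

    api_key = '...'
    api_endpoint = '...'

    # A Session reuses the underlying connection across requests (HTTP Keep-Alive).
    session = requests.Session()
    # update() keeps the session's default headers and adds these two.
    session.headers.update({
        'Authorization': 'Bearer {}'.format(api_key),
        'Content-Type': 'application/json',
    })

    for row in data:
        print(session.post(api_endpoint, data=row).json())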

Check the documentation of your favorite HTTP library for how to use persistent connections in your integration.

Prediction inputs

The API supports both JSON- and CSV-formatted input data (JSON can be the safer choice if it is produced by a good-quality JSON library rather than assembled by hand). Data can be posted either in the request body or as a file upload (multipart form).

Note

When using the Prediction API, the only supported column separator in CSV files and request bodies is the comma (,).

JSON input

The JSON input is formatted as an array of objects where the key is the feature name and the value is the value in the dataset.

For example, a CSV file that looks like:

a,b,c

1,2,3

7,8,9

would be represented in JSON as:

[

{

"a": 1,

"b": 2,

"c": 3

},

{

"a": 7,

"b": 8,

"c": 9

}

]

Submit a JSON array to the Prediction API by sending the data to the /predApi/v1.0/deployments/<deploymentId>/predictions endpoint. For example:

curl -H "Content-Type: application/json" -X POST --data '[{"a": 4, "b": 5, "c": 6}]' \

-H "Authorization: Bearer <API key>" \

https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions
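The CSV-to-JSON mapping described above can be sketched with the standard library. The helper names `csv_to_records` and `_convert` are hypothetical, and the numeric conversion is an assumption made for illustration: `csv.DictReader` yields strings, so the sketch casts cells to `int` or `float` when possible to reproduce the JSON shown earlier.

```python
import csv
import io
import json


def _convert(value):
    """Cast a CSV cell to int or float when possible; otherwise keep it as-is."""
    for cast in (int, float):
        try:
            return cast(value)
        except (TypeError, ValueError):
            continue
    return value


def csv_to_records(csv_text):
    """Turn CSV text with a header row into a list of {feature: value} objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{name: _convert(cell) for name, cell in row.items()} for row in reader]


records = csv_to_records("a,b,c\n1,2,3\n7,8,9\n")
payload = json.dumps(records)  # request body for Content-Type: application/json
print(payload)
# [{"a": 1, "b": 2, "c": 3}, {"a": 7, "b": 8, "c": 9}]
```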

File input

This example assumes a CSV file, dataset.csv, that contains a header and the rows of data to predict on. cURL automatically sets the content type.

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions" \

-H "Authorization: Bearer <API key>" -F \

file=@~/.home/path/to/dataset.csv

HTTP/1.1 200 OK

Date: Fri, 08 Feb 2019 10:00:00 GMT

Content-Type: application/json

Content-Length: 60624

Connection: keep-alive

Server: nginx/1.12.2

X-DataRobot-Execution-Time: 39

X-DataRobot-Model-Cache-Hit: true

Access-Control-Allow-Methods: OPTIONS, POST

Access-Control-Allow-Credentials: true

Access-Control-Expose-Headers: Content-Type,Content-Length,X-DataRobot-Execution-Time,X-DataRobot-Model-Cache-Hit,X-DataRobot-Model-Id,X-DataRobot-Request-Id

Access-Control-Allow-Headers: Content-Type,Authorization,datarobot-key

X-DataRobot-Request-ID: 9e61f97bf07903b8c526f4eb47830a86

{

"data": [

{

"predictionValues": [

{

"value": 0.2570950924,

"label": 1

},

{

"value": 0.7429049076,

"label": 0

}

],

"predictionThreshold": 0.5,

"prediction": 0,

"rowId": 0

},

{

"predictionValues": [

{

"value": 0.7631880558,

"label": 1

},

{

"value": 0.2368119442,

"label": 0

}

],

"predictionThreshold": 0.5,

"prediction": 1,

"rowId": 1

}

]

}
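Because response rows come back in the same order as the submitted data, predictions can be joined back to the input rows. The sketch below assumes that `rowId` is the zero-based position of the row in the submitted data, as in the sample response above; the function name `attach_predictions` is hypothetical.

```python
def attach_predictions(input_rows, response_body):
    """Pair each submitted row with its prediction, matching on rowId."""
    by_row_id = {item["rowId"]: item for item in response_body["data"]}
    merged = []
    for index, row in enumerate(input_rows):
        item = by_row_id[index]  # assumption: rowId == position in the input
        merged.append({**row, "prediction": item["prediction"]})
    return merged


sample_response = {
    "data": [
        {"prediction": 0, "rowId": 0, "predictionThreshold": 0.5},
        {"prediction": 1, "rowId": 1, "predictionThreshold": 0.5},
    ]
}
rows = [{"a": 1, "b": 2, "c": 3}, {"a": 7, "b": 8, "c": 9}]
print(attach_predictions(rows, sample_response))
```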

In-body text input

This example includes the CSV file content in the request body. With this format, you must set the Content-Type of the request to text/plain.

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions" --data-binary $'a,b,c\n1,2,3\n7,8,9\n' \

-H "content-type: text/plain" \

-H "Authorization: Bearer <API key>"

Prediction outputs

The Content-Type header value must be set appropriately for the type of data being sent (text/csv or application/json); the raw API request responds with JSON by default. Reference the output format for more information about the structure of the output schema. The output schema shares the same format for real-time and batch predictions.

CSV output

To return CSV instead of JSON from the Prediction API for real-time predictions, use -H "Accept: text/csv".

Prediction objects

The following sections describe the content of the various prediction objects.

Request schema

The request schema is the same for all prediction types. The following headers are accepted:

| Name | Value(s) |
|---|---|
| Content-Type | text/csv;charset=utf8, application/json, or multipart/form-data |
| Content-Encoding | gzip or bz2 |
| Authorization | Bearer |

Note the following:

- If you are submitting predictions as a raw stream of data, you can specify an encoding by adding ;charset=<encoding> to the Content-Type header. See the Python standard encodings for a list of valid values. DataRobot uses utf8 by default.

- If you are sending an encoded stream of data, you should specify the Content-Encoding header.

- The Authorization field uses the Bearer HTTP authentication scheme, which relies on security tokens called bearer tokens. While it is possible to authenticate with a username and API token pair (Basic auth) or with an API token alone, these authentication methods are deprecated and not recommended.
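As an illustration of the encoding-related headers, the following sketch prepares a gzip-compressed CSV body together with matching Content-Type and Content-Encoding headers. The function name `build_gzip_csv_request_parts` is hypothetical, and the sketch only prepares the request parts; how you send them depends on your HTTP library.

```python
import gzip


def build_gzip_csv_request_parts(csv_text, api_key, encoding="utf8"):
    """Return (headers, body) for a gzip-compressed raw CSV stream."""
    body = gzip.compress(csv_text.encode(encoding))
    headers = {
        "Authorization": f"Bearer {api_key}",
        # The charset must describe the text encoding used before compression.
        "Content-Type": f"text/csv;charset={encoding}",
        # Tells the server that the body is an encoded (compressed) stream.
        "Content-Encoding": "gzip",
    }
    return headers, body


headers, body = build_gzip_csv_request_parts("a,b,c\n1,2,3\n7,8,9\n", "<API key>")
print(headers["Content-Type"], headers["Content-Encoding"], len(body))
```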

You can parameterize a request using URI query parameters:

| Parameter name | Type | Notes |
|---|---|---|
| passthroughColumns | string | List of columns from a scoring dataset to return in the prediction response. |
| passthroughColumnsSet | string | If passthroughColumnsSet=all is passed, all columns from the scoring dataset are returned in the prediction response. |

Note the following:

- The passthroughColumns and passthroughColumnsSet parameters cannot both be passed in the same request.

- While there is no limit on the number of column names you can pass with the passthroughColumns query parameter, there is a limit on the HTTP request line (currently 8192 bytes).

The following example illustrates the use of multiple passthrough columns:

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions?passthroughColumns=Latitude&passthroughColumns=Longitude" \

-H "Authorization: Bearer <API key>" \

-H "datarobot-key: <DataRobot key>" -F \

file=@~/.home/path/to/dataset.csv
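The repeated passthroughColumns parameter can also be generated programmatically. This sketch builds the query string and checks the resulting request line against the 8192-byte limit mentioned above; the function name `build_passthrough_url` and the exact way the request line is estimated are assumptions for illustration.

```python
from urllib.parse import urlencode, urlsplit

MAX_REQUEST_LINE_BYTES = 8192  # limit quoted in the notes above


def build_passthrough_url(base_url, columns):
    """Append one passthroughColumns parameter per requested column name."""
    query = urlencode([("passthroughColumns", name) for name in columns])
    url = f"{base_url}?{query}"
    # The HTTP request line has the form "<method> <path>?<query> <protocol>".
    request_line = f"POST {urlsplit(url).path}?{query} HTTP/1.1"
    if len(request_line.encode("ascii")) > MAX_REQUEST_LINE_BYTES:
        raise ValueError(f"request line exceeds {MAX_REQUEST_LINE_BYTES} bytes")
    return url


base = "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions"
print(build_passthrough_url(base, ["Latitude", "Longitude"]))
```

If the column list is too long for the request line, passthroughColumnsSet=all is the alternative described in the table above.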

Response schema

The following is a sample prediction response body (also see the additional example of a time series response body):

{

"data": [

{

"predictionValues": [

{

"value": 0.6856798909,

"label": 1

},

{

"value": 0.3143201091,

"label": 0

}

],

"predictionThreshold": 0.5,

"prediction": 1,

"rowId": 0,

"passthroughValues": {

"Latitude": -25.433508,

"Longitude": 22.759397

}

},

{

"predictionValues": [

{

"value": 0.765656753,

"label": 1

},

{

"value": 0.234343247,

"label": 0

}

],

"predictionThreshold": 0.5,

"prediction": 1,

"rowId": 1,

"passthroughValues": {

"Latitude": 41.051128,

"Longitude": 14.49598

}

}

]

}

The table below lists custom DataRobot headers:

| Name | Value | Note |
|---|---|---|
| X-DataRobot-Execution-Time | numeric | Time to compute predictions (ms). |
| X-DataRobot-Model-Cache-Hit | true or false | Indication of in-memory presence of model (bool). |
| X-DataRobot-Model-Id | ObjectId | ID of the model used to serve the prediction request (only returned for predictions made on model deployments). |
| X-DataRobot-Request-Id | uuid | Unique identifier of a prediction request. |
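The custom response headers (X-DataRobot-Execution-Time, X-DataRobot-Model-Cache-Hit, X-DataRobot-Model-Id, and X-DataRobot-Request-Id) arrive as strings, so a client usually converts them before logging or monitoring. The following is a minimal sketch; the function name and the output keys are hypothetical, and header lookup is made case-insensitive because HTTP header names are not case-sensitive.

```python
def parse_datarobot_headers(headers):
    """Convert DataRobot's custom response headers into typed Python values."""
    lowered = {name.lower(): value for name, value in headers.items()}
    execution_time = lowered.get("x-datarobot-execution-time")
    cache_hit = lowered.get("x-datarobot-model-cache-hit")
    return {
        "execution_time_ms": float(execution_time) if execution_time is not None else None,
        "model_cache_hit": (cache_hit.strip().lower() == "true") if cache_hit is not None else None,
        "model_id": lowered.get("x-datarobot-model-id"),
        "request_id": lowered.get("x-datarobot-request-id"),
    }


sample_headers = {
    "X-DataRobot-Execution-Time": "39",
    "X-DataRobot-Model-Cache-Hit": "true",
    "X-DataRobot-Request-ID": "9e61f97bf07903b8c526f4eb47830a86",
}
print(parse_datarobot_headers(sample_headers))
```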

The following table describes the Response Prediction Rows of the JSON array:

| Name | Type | Note |
|---|---|---|
| predictionValues | array | An array of predictionValues (schema described below). |
| predictionThreshold | float | The threshold used for predictions (applicable to binary classification projects only). |
| prediction | float | The output of the model for this row. |
| rowId | int | The row described. |
| passthroughValues | object | A JSON object where key is a column name and value is a corresponding value for a predicted row from the scoring dataset. This JSON item is only returned if either passthroughColumns or passthroughColumnsSet is passed. |
| adjustedPrediction | float | The exposure-adjusted output of the model for this row if the exposure was used during model building. The adjustedPrediction is included in responses if the request parameter excludeAdjustedPredictions is false. |
| adjustedPredictionValues | array | An array of exposure-adjusted PredictionValue (schema described below). The adjustedPredictionValues is included in responses if the request parameter excludeAdjustedPredictions is false. |
| predictionExplanations | array | An array of PredictionExplanations (schema described below). This JSON item is only returned with Prediction Explanations. |

Prediction values schema

The following table describes the predictionValues schema in the JSON Response array:

| Name | Type | Note |
|---|---|---|
| label | - | Describes what the model output corresponds to. For regression projects, it is the name of the target feature. For classification projects, it is a label from the target feature. |
| value | float | The output of the prediction. For regression projects, it is the predicted value of the target. For classification projects, it is the probability associated with the label that is predicted to be most likely (implying a threshold of 0.5 for binary classification problems). |
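For classification responses, the predictionValues array can be turned into a label-to-probability mapping. The sketch below uses a row from the sample response earlier in this section; both function names are hypothetical, and `probability_of_prediction` assumes a classification row in which the prediction field matches one of the labels.

```python
def class_probabilities(row):
    """Map each class label to its probability for one prediction row."""
    return {item["label"]: item["value"] for item in row["predictionValues"]}


def probability_of_prediction(row):
    """Return the probability reported for the label in the prediction field."""
    return class_probabilities(row)[row["prediction"]]


row = {
    "predictionValues": [
        {"value": 0.2570950924, "label": 1},
        {"value": 0.7429049076, "label": 0},
    ],
    "predictionThreshold": 0.5,
    "prediction": 0,
    "rowId": 0,
}
print(class_probabilities(row), probability_of_prediction(row))
```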

Extra custom model output schema

Availability information

Additional output in prediction responses for custom models is off by default. Contact your DataRobot representative or administrator for information on enabling this feature.

Feature flag: Enable Additional Custom Model Output in Prediction Responses

In some cases, the prediction response from your model may contain extra model output. This is possible for custom models with additional output columns defined in the score() hook and for Generative AI (GenAI) models. The score() hook can return any number of extra columns, containing data of types string, int, float, bool, or datetime. When additional columns are returned through the score() method, the prediction response is as follows:

- For a tabular response (CSV), the additional columns are returned as part of the response table or dataframe.

- For a JSON response, the extraModelOutput key is returned alongside each row. This key is a dictionary containing the values of each additional column in the row.

As custom models, deployed GenAI models can return extra columns through the extraModelOutput key to provide information about the text generation model (citations, latency, confidence, LLM blueprint ID, token counts, etc.), as shown in the example below:

Citations in prediction response

For citations to be included in a GenAI model's prediction response, the LLM deployed from the playground must have a vector database (VDB) associated with it.

{

"data": [

{

"rowId": 0,

"prediction": "In the field of biology, there have been some exciting new discoveries made through research conducted on the International Space Station (ISS). Here are three examples:\n\n1. Understanding Plant Root Orientation: Scientists have been studying the growth and development of plants in microgravity. They found that plants grown in space exhibit different root orientation compared to those grown on Earth. This discovery helps us understand how plants adapt and respond to the absence of gravity. This knowledge can be applied to improve agricultural practices and develop innovative techniques for growing plants in challenging environments on Earth.\n\n2. Tissue Damage and Repair: One fascinating area of research on the ISS involves studying how living organisms respond to injuries in space. Scientists have investigated tissue damage and repair mechanisms in various organisms, including humans. By studying the healing processes in microgravity, researchers gained insights into how wounds heal differently in space compared to on Earth. This knowledge has implications for developing new therapies and treatments for wound healing and tissue regeneration.\n\n3. Bubbles, Lightning, and Fire Dynamics: The ISS provides a unique laboratory environment for studying the behavior of bubbles, lightning, and fire in microgravity. Scientists have conducted experiments to understand how these phenomena behave differently without the influence of gravity. These studies have practical applications, such as improving combustion processes, enhancing fire safety measures, and developing more efficient cooling systems.\n\nThese are just a few examples of the exciting discoveries that have been made in the field of biology through research conducted on the ISS. The microgravity environment of space offers a unique perspective and enables researchers to uncover new insights into the workings of living organisms and their interactions with the environment.",

"predictionValues": [

{

"label": "resultText",

"value": "In the field of biology, there have been some exciting new discoveries made through research conducted on the International Space Station (ISS). Here are three examples:\n\n1. Understanding Plant Root Orientation: Scientists have been studying the growth and development of plants in microgravity. They found that plants grown in space exhibit different root orientation compared to those grown on Earth. This discovery helps us understand how plants adapt and respond to the absence of gravity. This knowledge can be applied to improve agricultural practices and develop innovative techniques for growing plants in challenging environments on Earth.\n\n2. Tissue Damage and Repair: One fascinating area of research on the ISS involves studying how living organisms respond to injuries in space. Scientists have investigated tissue damage and repair mechanisms in various organisms, including humans. By studying the healing processes in microgravity, researchers gained insights into how wounds heal differently in space compared to on Earth. This knowledge has implications for developing new therapies and treatments for wound healing and tissue regeneration.\n\n3. Bubbles, Lightning, and Fire Dynamics: The ISS provides a unique laboratory environment for studying the behavior of bubbles, lightning, and fire in microgravity. Scientists have conducted experiments to understand how these phenomena behave differently without the influence of gravity. These studies have practical applications, such as improving combustion processes, enhancing fire safety measures, and developing more efficient cooling systems.\n\nThese are just a few examples of the exciting discoveries that have been made in the field of biology through research conducted on the ISS. The microgravity environment of space offers a unique perspective and enables researchers to uncover new insights into the workings of living organisms and their interactions with the environment."

}

],

"deploymentApprovalStatus": "APPROVED",

"extraModelOutput": {

"CITATION_CONTENT_8": "3\nthe research study is received by others and how the \nknowledge is disseminated through citations in other \njournals. For example, six ISS studies have been \npublished in Nature, represented as a small node in the \ngraph. Network analysis shows that findings published \nin Nature are likely to be cited by other similar leading \njournals such as Science and Astrophysical Journal \nLetters (represented in bright yellow links) as well as \nspecialized journals such as Physical Review D and New \nJournal of Physics (represented in a yellow-green link). \nSix publications in Nature led to 512 citations according \nto VOSviewer\u2019s network map (version 1.6.11), an \nincrease of over 8,000% from publication to citation. \nFor comparison purposes, 6 publications in a small \njournal like American Journal of Botany led to 185 \ncitations and 107 publications in Acta Astronautica, \na popular journal among ISS scientists, led to 1,050 \ncitations (Figure 3, panel B). This count of 1,050",

"CITATION_CONTENT_9": "Introduction\n4\nFigure 3. Count of publications reported in journals ranked in the top 100 according to global standards of Clarivate. A total of 567 top-tier publications \nthrough the end of FY-23 are shown by year and research category.\nIn this year\u2019s edition of the Annual Highlights of Results, we report findings from a \nwide range of topics in biology and biotechnology, physics, human research, Earth and \nspace science, and technology development \u2013 including investigations about plant root \norientation, tissue damage and repair, bubbles, lightning, fire dynamics, neutron stars, \ncosmic ray nuclei, imaging technology improvements, brain and vascular health, solar \npanel materials, grain flow, as well as satellite and robot control. \nThe findings highlighted here are only a small sample representative of the research \nconducted by the participating space agencies \u2013 ASI (Agenzia Spaziale Italiana), CSA \n(Canadian Space Agency), ESA (European Space Agency), JAXA (Japanese Aerospace",

"CITATION_PAGE_3": 4,

"CITATION_PAGE_8": 6,

"CITATION_CONTENT_5": "23\nPUBLICATION HIGHLIGHTS: \nEARTH AND SPACE SCIENCE\nThe ISS laboratories enable scientific experiments in the biological sciences \nthat explore the complex responses of living organisms to the microgravity \nenvironment. The lab facilities support the exploration of biological systems \nranging from microorganisms and cellular biology to integrated functions \nof multicellular plants and animals. Several recent biological sciences \nexperiments have facilitated new technology developments that allow \ngrowth and maintenance of living cells, tissues, and organisms.\nThe Alpha Magnetic \nSpectrometer-02 (AMS-02) is \na state-of-the-art particle \nphysics detector constructed, \ntested, and operated by an \ninternational team composed \nof 60 institutes from \n16 countries and organized \nunder the United States \nDepartment of Energy (DOE) sponsorship. \nThe AMS-02 uses the unique environment of \nspace to advance knowledge of the universe \nand lead to the understanding of the universe\u2019s",

"CITATION_SOURCE_5": "Space_Station_Annual_Highlights/iss_2017_highlights.pdf",

"CITATION_CONTENT_3": "Introduction\n2\nExtensive international collaboration in the \nunique environment of LEO as well as procedural \nimprovements to assist researchers in the collection \nof data from the ISS have produced promising \nresults in the areas of protein crystal growth, tissue \nregeneration, vaccine and drug development, 3D \nprinting, and fiber optics, among many others. In \nthis year\u2019s edition of the Annual Highlights of Results, \nwe report findings from a wide range of topics in \nbiotechnology, physics, human research, Earth \nand space science, and technology development \n\u2013 including investigations about human retinal cells, \nbacterial resistance, black hole detection, space \nanemia, brain health, Bose-Einstein condensates, \nparticle self-assembly, RNA extraction technology, \nand more. The findings highlighted here represent \nonly a sample of the work ISS has contributed to \nsociety during the past 12 months.\nAs of Oct. 1, 2022, we have identified a total of 3,679",

"CITATION_SOURCE_8": "Space_Station_Annual_Highlights/iss_2021_highlights.pdf",

"CITATION_PAGE_7": 8,

"CITATION_PAGE_6": 8,

"CITATION_PAGE_2": 4,

"CITATION_CONTENT_7": "Biology and Biotechnology Earth and Space Science Educational and Cultural Activities\nHuman Research Physical Science Technology Development and Demonstration",

"CITATION_SOURCE_9": "Space_Station_Annual_Highlights/iss_2023_highlights.pdf",

"datarobot_latency": 3.1466632366,

"blocked_resultText": false,

"CITATION_SOURCE_2": "Space_Station_Annual_Highlights/iss_2023_highlights.pdf",

"CITATION_SOURCE_6": "Space_Station_Annual_Highlights/iss_2021_highlights.pdf",

"CITATION_SOURCE_7": "Space_Station_Annual_Highlights/iss_2023_highlights.pdf",

"datarobot_confidence_score": 0.6524822695,

"CITATION_PAGE_9": 7,

"CITATION_CONTENT_4": "Molecular Life Sciences. 2021 October 29; DOI: \n10.1007/s00018-021-03989-2.\nFigure 7. Immunoflourescent images of human retinal \ncells in different conditions. Image adopted from \nCialdai, Cellular and Molecular Life Sciences.\nThe ISS laboratory provides a platform for investigations in the biological sciences that \nexplores the complex responses of living organisms to the microgravity environment. Lab \nfacilities support the exploration of biological systems, from microorganisms and cellular \nbiology to the integrated functions of multicellular plants and animals.",

"CITATION_SOURCE_1": "Space_Station_Annual_Highlights/iss_2023_highlights.pdf",

"CITATION_SOURCE_0": "Space_Station_Annual_Highlights/iss_2018_highlights.pdf",

"CITATION_SOURCE_3": "Space_Station_Annual_Highlights/iss_2022_highlights.pdf",

"CITATION_PAGE_5": 26,

"CITATION_PAGE_0": 7,

"CITATION_PAGE_1": 11,

"LLM_BLUEPRINT_ID": "662ba0062ade64c4fc4c1a1f",

"CITATION_PAGE_4": 9,

"datarobot_token_count": 320,

"CITATION_CONTENT_0": "more effectively in space by addressing \nsuch topics as understanding radiation effects on \ncrew health, combating bone and muscle loss, \nimproving designs of systems that handle fluids \nin microgravity, and determining how to maintain \nenvironmental control efficiently. \nResults from the ISS provide new \ncontributions to the body of scientific \nknowledge in the physical sciences, life \nsciences, and Earth and space sciences \nto advance scientific discoveries in multi\u0002disciplinary ways. \nISS science results have Earth-based \napplications, including understanding our \nclimate, contributing to the treatment of \ndisease, improving existing materials, and inspiring \nthe future generation of scientists, clinicians, \ntechnologists, engineers, mathematicians, artists, \nand explorers.\nBENEFITS\nFOR HUMANITY\nDISCOVERY\nFigure 4. A heat map of all of the countries whose authors have cited scientific results publications from ISS Research through October 1, 2018.\nEXPLORATION",

"CITATION_SOURCE_4": "Space_Station_Annual_Highlights/iss_2022_highlights.pdf",

"CITATION_CONTENT_2": "capabilities (i.e., facilities), and data delivery are critical to the effective operation \nof scientific projects for accurate results to be shared with the scientific community, \nsponsors, legislators, and the public. \nOver 3,700 investigations have operated since Expedition 1, with more than 250 active \nresearch facilities, the participation of more than 100 countries, the work of more than \n5,000 researchers, and over 4,000 publications. The growth in research (Figure 1) and \ninternational collaboration (Figure 2) has prompted the publication of over 560 research \narticles in top-tier scientific journals with about 75 percent of those groundbreaking studies \noccurring since 2018 (Figure 3). \nBibliometric analyses conducted through VOSviewer1\n measure the impact of space station \nresearch by quantifying and visualizing networks of journals, citations, subject areas, and \ncollaboration between authors, countries, or organizations. Using bibliometrics, a broad",

"CITATION_CONTENT_1": "technologists, engineers, mathematicians, artists, and explorers.\nEXPLORATION\nDISCOVERY\nBENEFITS\nFOR HUMANITY",

"CITATION_CONTENT_6": "control efficiently. \nResults from the ISS provide new \ncontributions to the body of scientific \nknowledge in the physical sciences, life \nsciences, and Earth and space sciences \nto advance scientific discoveries in multi\u0002disciplinary ways. \nISS science results have Earth-based \napplications, including understanding our \nclimate, contributing to the treatment of \ndisease, improving existing materials, and \ninspiring the future generation of scientists, \nclinicians, technologists, engineers, \nmathematicians, artists and explorers.\nBENEFITS\nFOR HUMANITY\nDISCOVERY\nEXPLORATION"

}

}

]

}
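In the example above, citation fields arrive as flat keys such as CITATION_CONTENT_0, CITATION_SOURCE_0, and CITATION_PAGE_0, in no particular order. The following sketch groups them by their numeric suffix. The function name `collect_citations` and the output shape are hypothetical, and the key pattern is inferred from this one example rather than from a documented contract.

```python
import re

# Key pattern inferred from the sample response above (an assumption).
CITATION_KEY = re.compile(r"^CITATION_(CONTENT|SOURCE|PAGE)_(\d+)$")


def collect_citations(extra_model_output):
    """Group CITATION_* keys by index into one dictionary per citation."""
    citations = {}
    for key, value in extra_model_output.items():
        match = CITATION_KEY.match(key)
        if match is None:
            continue  # skip non-citation keys such as datarobot_latency
        field, index = match.group(1).lower(), int(match.group(2))
        citations.setdefault(index, {})[field] = value
    return [{"index": i, **citations[i]} for i in sorted(citations)]


extra = {
    "CITATION_PAGE_1": 11,
    "CITATION_SOURCE_0": "Space_Station_Annual_Highlights/iss_2018_highlights.pdf",
    "CITATION_SOURCE_1": "Space_Station_Annual_Highlights/iss_2023_highlights.pdf",
    "CITATION_PAGE_0": 7,
    "datarobot_token_count": 320,
}
for citation in collect_citations(extra):
    print(citation)
```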

Making predictions with time series

Tip

Time series predictions are specific to time series projects, not all time-aware modeling projects. Specifically, the CSV file must follow a specific format, described in the predictions section of the time series modeling pages.

If you are making predictions with the forecast point, you can skip the forecast window in your prediction data as DataRobot generates a forecast point automatically. This is called autoexpansion. Autoexpansion applies automatically if:

- Predictions are made for a specific forecast point and not a forecast range.

- The time series project has a regular time step and does not use Nowcasting.

When using autoexpansion, note the following:

- If you have Known in Advance features that are important for your model, it is recommended that you manually create a forecast window to increase prediction accuracy.

- If you plan to use an association ID other than the primary date/time column in your deployment to track accuracy, create a forecast window manually.

The URL for making predictions with time series deployments and regular non-time series deployments is the same. The only difference is that you can optionally specify forecast point, prediction start/end date, or some other time series specific URL parameters. Using the deployment ID, the server automatically detects the deployed model as a time series deployment and processes it accordingly:

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions" \

-H "Authorization: Bearer <API key>" -F \

file=@~/.home/path/to/dataset.csv

The following is a sample Response body for a multiseries project:

HTTP/1.1 200 OK

Content-Type: application/json

X-DataRobot-Execution-Time: 1405

X-DataRobot-Model-Cache-Hit: false

{

"data": [

{

"seriesId": 1,

"forecastPoint": "2018-01-09T00:00:00Z",

"rowId": 365,

"timestamp": "2018-01-10T00:00:00.000000Z",

"predictionValues": [

{

"value": 45180.4041874386,

"label": "target (actual)"

}

],

"forecastDistance": 1,

"prediction": 45180.4041874386

},

{

"seriesId": 1,

"forecastPoint": "2018-01-09T00:00:00Z",

"rowId": 366,

"timestamp": "2018-01-11T00:00:00.000000Z",

"predictionValues": [

{

"value": 47742.9432499386,

"label": "target (actual)"

}

],

"forecastDistance": 2,

"prediction": 47742.9432499386

},

{

"seriesId": 1,

"forecastPoint": "2018-01-09T00:00:00Z",

"rowId": 367,

"timestamp": "2018-01-12T00:00:00.000000Z",

"predictionValues": [

{

"value": 46394.5698978878,

"label": "target (actual)"

}

],

"forecastDistance": 3,

"prediction": 46394.5698978878

},

{

"seriesId": 2,

"forecastPoint": "2018-01-09T00:00:00Z",

"rowId": 697,

"timestamp": "2018-01-10T00:00:00.000000Z",

"predictionValues": [

{

"value": 39794.833199375,

"label": "target (actual)"

}

]

}

]

}
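A multiseries response interleaves rows from several series, so a common first step is to group rows by seriesId and order them by forecastDistance. The sketch below is illustrative only: the function name `forecasts_by_series` is hypothetical, and it assumes every row carries the forecastDistance, timestamp, and prediction fields.

```python
from collections import defaultdict


def forecasts_by_series(response_body):
    """Group forecast rows by seriesId, ordered by forecastDistance."""
    grouped = defaultdict(list)
    for row in response_body["data"]:
        series_id = row.get("seriesId")  # may be absent or None for single-series projects
        grouped[series_id].append(
            (row["forecastDistance"], row["timestamp"], row["prediction"])
        )
    return {series_id: sorted(rows) for series_id, rows in grouped.items()}


sample = {
    "data": [
        {"seriesId": 1, "forecastDistance": 2,
         "timestamp": "2018-01-11T00:00:00.000000Z", "prediction": 47742.9432499386},
        {"seriesId": 1, "forecastDistance": 1,
         "timestamp": "2018-01-10T00:00:00.000000Z", "prediction": 45180.4041874386},
    ]
}
print(forecasts_by_series(sample))
```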

Request parameters

You can parameterize the time series prediction request using URI query parameters. For example, overriding the default inferred forecast point can look like this:

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions?forecastPoint=1961-01-01T00:00:00&relaxKnownInAdvanceFeaturesCheck=true" \

-H "Authorization: Bearer <API key>" -F \

file=@~/.home/path/to/dataset.csv

For the full list of time series-specific parameters, see Time series predictions for deployments.
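When the forecast point comes from application code, the query string can be assembled from a `datetime` value. In this sketch the function name `build_time_series_url` is hypothetical; the parameter names are the two shown in the cURL example. Note that `urlencode` percent-encodes the colons in the timestamp, which is standard URL encoding for query values, and that multiple parameters are joined with `&`.

```python
from datetime import datetime
from urllib.parse import urlencode


def build_time_series_url(base_url, forecast_point, relax_known_in_advance_check=False):
    """Add time series query parameters to a deployment predictions URL."""
    params = {"forecastPoint": forecast_point.strftime("%Y-%m-%dT%H:%M:%S")}
    if relax_known_in_advance_check:
        params["relaxKnownInAdvanceFeaturesCheck"] = "true"
    return f"{base_url}?{urlencode(params)}"


base = "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions"
print(build_time_series_url(base, datetime(1961, 1, 1), relax_known_in_advance_check=True))
```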

Response schema

The Response schema is consistent with standard predictions but adds a number of columns for each PredictionRow object:

| Name | Type | Notes |
|---|---|---|
| seriesId | string, int, or None | A multiseries identifier of a predicted row that identifies the series in a multiseries project. |
| forecastPoint | string | An ISO 8601 formatted DateTime string corresponding to the forecast point for the prediction request, either user-configured or selected by DataRobot. |
| timestamp | string | An ISO 8601 formatted DateTime string corresponding to the DateTime column of the predicted row. |
| forecastDistance | int | A forecast distance identifier of the predicted row, or how far it is from forecastPoint in the scoring dataset. |
| originalFormatTimestamp | string | A DateTime string corresponding to the DateTime column of the predicted row. Unlike the timestamp column, this column will keep the same DateTime formatting as the uploaded prediction dataset. (This column is shown if enabled by your administrator.) |

Making Prediction Explanations

The DataRobot Prediction Explanations feature gives insight into which attributes of a particular input cause it to have exceptionally high or exceptionally low predicted values.

Tip

You must complete the following two prerequisites before running Prediction Explanations:

- You must compute Feature Impact for the model.

- You must generate predictions on the dataset using the selected model.

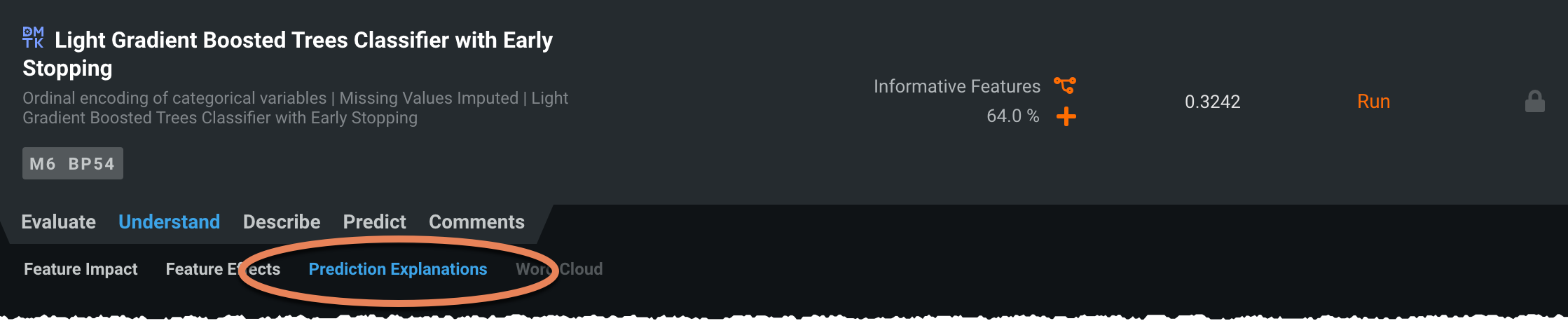

To initialize Prediction Explanations, use the Prediction Explanations tab.

Requesting Prediction Explanations is very similar to making a standard prediction request. Prediction Explanations requests are submitted as POST requests to the predictionExplanations resource:

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictionExplanations" \

-H "Authorization: Bearer <API key>" -F \

file=@~/.home/path/to/dataset.csv

The following is a sample Response body:

HTTP/1.1 200 OK

Content-Type: application/json

X-DataRobot-Execution-Time: 841

X-DataRobot-Model-Cache-Hit: true

{

"data": [

{

"predictionValues": [

{

"value": 0.6634830442,

"label": 1

},

{

"value": 0.3365169558,

"label": 0

}

],

"prediction": 1,

"rowId": 0,

"predictionExplanations": [

{

"featureValue": 49,

"strength": 0.6194461777,

"feature": "driver_age",

"qualitativeStrength": "+++",

"label": 1

},

{

"featureValue": 1,

"strength": 0.3501610895,

"feature": "territory",

"qualitativeStrength": "++",

"label": 1

},

{

"featureValue": "M",

"strength": -0.171075409,

"feature": "gender",

"qualitativeStrength": "--",

"label": 1

}

]

},

{

"predictionValues": [

{

"value": 0.3565584672,

"label": 1

},

{

"value": 0.6434415328,

"label": 0

}

],

"prediction": 0,

"rowId": 1,

"predictionExplanations": []

}

]

}
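A response like the one above can be walked row by row. The following is a minimal sketch, assuming the body has already been decoded from JSON; the helper name `summarize_explanations` is hypothetical and is not part of any DataRobot client. The sample data is a trimmed copy of the response shown above.

```python
import json


def summarize_explanations(body):
    """Return one (rowId, prediction, explanations) tuple per response row.

    Each explanation is reduced to (feature, featureValue, qualitativeStrength).
    """
    rows = []
    for row in body["data"]:
        explanations = [
            (e["feature"], e["featureValue"], e["qualitativeStrength"])
            for e in row.get("predictionExplanations", [])
        ]
        rows.append((row["rowId"], row["prediction"], explanations))
    return rows


# Trimmed copy of the sample response body (predictionValues omitted).
sample = json.loads("""
{"data": [
  {"prediction": 1, "rowId": 0, "predictionExplanations": [
    {"featureValue": 49, "strength": 0.6194461777, "feature": "driver_age",
     "qualitativeStrength": "+++", "label": 1},
    {"featureValue": 1, "strength": 0.3501610895, "feature": "territory",
     "qualitativeStrength": "++", "label": 1},
    {"featureValue": "M", "strength": -0.171075409, "feature": "gender",
     "qualitativeStrength": "--", "label": 1}]},
  {"prediction": 0, "rowId": 1, "predictionExplanations": []}
]}
""")

for row_id, prediction, explanations in summarize_explanations(sample):
    print(row_id, prediction, explanations)
```

Note that the second row carries an empty predictionExplanations array, so client code should not assume every row has explanations.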

Request parameters¶

You can parameterize the Prediction Explanations prediction request using URI query parameters:

| Parameter name | Type | Notes |
|---|---|---|
| maxExplanations | int | Maximum number of explanations generated per prediction. Default is 3. Previously called maxCodes. |
| thresholdLow | float | Prediction Explanation low threshold. Predictions must be below this value (or above the thresholdHigh value) for Prediction Explanations to compute. This value can be null. |
| thresholdHigh | float | Prediction Explanation high threshold. Predictions must be above this value (or below the thresholdLow value) for Prediction Explanations to compute. This value can be null. |
| excludeAdjustedPredictions | string | Includes or excludes exposure-adjusted predictions in prediction responses if exposure was used during model building. The default value is 'true' (exclude exposure-adjusted predictions). |

The following is an example of a parameterized request:

curl -i -X POST "https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictionExplanations?maxExplanations=2&thresholdLow=0.2&thresholdHigh=0.5" \

-H "Authorization: Bearer <API key>" -F \

file=@~/.home/path/to/dataset.csv
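The same parameterized URL can be assembled programmatically. This is a rough sketch using only the Python standard library; `build_explanations_url` is a hypothetical helper, and dropping parameters set to None (rather than sending an explicit null) is an assumption about how an unset threshold should be expressed.

```python
from urllib.parse import urlencode


def build_explanations_url(host, deployment_id, **params):
    """Build a predictionExplanations URL with optional URI query parameters."""
    # Assumption: parameters left as None are simply omitted from the query.
    query = urlencode({k: v for k, v in params.items() if v is not None})
    url = f"{host}/predApi/v1.0/deployments/{deployment_id}/predictionExplanations"
    return f"{url}?{query}" if query else url


url = build_explanations_url(
    "https://example.datarobot.com",
    "<deploymentId>",
    maxExplanations=2,
    thresholdLow=0.2,
    thresholdHigh=0.5,
)
print(url)
```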

The response headers schema is the same as for standard prediction responses. The response body schema is also consistent with standard predictions, but adds "predictionExplanations", an array of PredictionExplanations objects, to each PredictionRow object.

PredictionExplanations schema¶

Response JSON Array of Objects:

| Name | Type | Notes |
|---|---|---|
| label | - | Describes which output was driven by this Prediction Explanation. For regression projects, it is the name of the target feature. For classification projects, it is the class whose probability increasing would correspond to a positive strength of this Prediction Explanation. |
| feature | string | Name of the feature contributing to the prediction. |
| featureValue | - | Value the feature took on for this row. |
| strength | float | Amount this feature's value affected the prediction. |
| qualitativeStrength | string | Human-readable description of how strongly the feature affected the prediction (e.g., +++, --, +). |

Tip

The prediction explanation strength value is not bounded to the values [-1, 1]; its interpretation may change as the number of features in the model changes. For normalized values, use qualitativeStrength instead. qualitativeStrength expresses the [-1, 1] range with visuals, with --- representing -1 and +++ representing 1. For explanations with the same qualitativeStrength, you can then use the strength value for ranking.

See the section on interpreting Prediction Explanation output for more information.
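The ranking described in the tip above could be sketched as follows. This is only an illustration: `rank_explanations` is a hypothetical helper, and treating the number of + or - symbols in qualitativeStrength as the normalized magnitude is an assumption based on the description above (--- for -1, +++ for 1). The sample values come from the response shown earlier.

```python
def rank_explanations(explanations):
    """Sort explanations strongest first.

    Primary key: magnitude of qualitativeStrength (count of + or - symbols).
    Tie-breaker: absolute value of the unbounded strength field.
    """
    def sort_key(explanation):
        symbols = explanation["qualitativeStrength"]
        # Assumption: more symbols means a stronger normalized effect.
        magnitude = symbols.count("+") + symbols.count("-")
        return (magnitude, abs(explanation["strength"]))

    return sorted(explanations, key=sort_key, reverse=True)


explanations = [
    {"feature": "gender", "strength": -0.171075409, "qualitativeStrength": "--"},
    {"feature": "driver_age", "strength": 0.6194461777, "qualitativeStrength": "+++"},
    {"feature": "territory", "strength": 0.3501610895, "qualitativeStrength": "++"},
]

for e in rank_explanations(explanations):
    print(e["feature"], e["qualitativeStrength"], e["strength"])
```

Here territory and gender share the same qualitative magnitude, so the strength value decides their relative order.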

Making predictions with humility monitoring¶

Predictions with humility monitoring allow you to monitor predictions using user-defined humility rules.

When a prediction falls outside the thresholds provided for the "Uncertain Prediction" Trigger, it will default to the action assigned to the trigger. The humility key is added to the body of the prediction response when the trigger is activated.

The following is an example of a Response body for a regression model deployment. It uses the "Uncertain Prediction" trigger with the "No Operation" action:

{

"data": [

{

"predictionValues": [

{

"value": 122.8034057617,

"label": "length"

}

],

"prediction": 122.8034057617,

"rowId": 99,

"humility": [

{

"ruleId": "5ebad4735f11b33a38ff3e0d",

"triggered": true,

"ruleName": "Uncertain Prediction Trigger"

}

]

}

]

}

The following is an example of a Response body for a regression model deployment. It uses the "Uncertain Prediction" trigger with the "Throw Error" action:

480 Error: {"message":"Humility ReturnError action triggered."}

The following is an example of a Response body for a regression model deployment. It uses the "Uncertain Prediction" trigger with the "Override Prediction" action:

{

"data": [

{

"predictionValues": [

{

"value": 122.8034057617,

"label": "length"

}

],

"prediction": 5220,

"rowId": 99,

"humility": [

{

"ruleId": "5ebad4735f11b33a38ff3e0d",

"triggered": true,

"ruleName": "Uncertain Prediction Trigger"

}

]

}

]

}
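Client code may want to know which rows triggered a humility rule. The sketch below assumes a decoded JSON body shaped like the samples above; `triggered_humility_rules` is a hypothetical helper name, and rows without a humility key are assumed to have triggered nothing.

```python
def triggered_humility_rules(body):
    """Map rowId to the names of the humility rules that fired for that row."""
    hits = {}
    for row in body["data"]:
        # Assumption: a missing "humility" key means no rule was triggered.
        names = [
            rule["ruleName"]
            for rule in row.get("humility", [])
            if rule.get("triggered")
        ]
        if names:
            hits[row["rowId"]] = names
    return hits


# Copy of the "Override Prediction" sample, plus a hypothetical untriggered row.
body = {
    "data": [
        {
            "predictionValues": [{"value": 122.8034057617, "label": "length"}],
            "prediction": 5220,
            "rowId": 99,
            "humility": [
                {
                    "ruleId": "5ebad4735f11b33a38ff3e0d",
                    "triggered": True,
                    "ruleName": "Uncertain Prediction Trigger",
                }
            ],
        },
        {
            "predictionValues": [{"value": 101.5, "label": "length"}],
            "prediction": 101.5,
            "rowId": 100,
        },
    ]
}

print(triggered_humility_rules(body))
```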

Response schema¶

The response schema is consistent with standard predictions but adds a humility array to each row. Each Humility object in the array has the following fields:

| Name | Type | Notes |
|---|---|---|
| ruleId | string | The ID of the humility rule assigned to the deployment. |
| triggered | boolean | Returns true if the rule was triggered; otherwise, false. |
| ruleName | string | The name of the rule, either defined by the user or auto-generated with a timestamp. |

Error responses¶

Any error is indicated by a non-200 status code. 4XX codes indicate request errors (e.g., missing columns, wrong credentials, unknown model ID). For a 4XX code, the message attribute of the response body gives a detailed description of the error. For example:

curl -H "Content-Type: application/json" -X POST --data '' \

-H "Authorization: Bearer <API key>" \

"https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>"

HTTP/1.1 400 BAD REQUEST

Date: Fri, 08 Feb 2019 11:00:00 GMT

Content-Type: application/json

Content-Length: 53

Connection: keep-alive

Server: nginx/1.12.2

X-DataRobot-Execution-Time: 332

X-DataRobot-Request-ID: fad6a0b62c1ff30db74c6359648d12fd

{

"message": "The requested URL was not found on the server. If you entered the URL manually, please check your spelling and try again."

}

Codes starting with 5XX indicate server-side errors. Retry the request or contact your DataRobot representative.
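The handling described above might be sketched as follows. The names `PredictionRequestError` and `check_response` are hypothetical, and the decision to return "retry" for 5XX codes (rather than retrying inside the function) is a design choice made for this illustration only.

```python
import json


class PredictionRequestError(Exception):
    """Raised for 4XX responses; carries the server-provided message."""


def check_response(status_code, body_text):
    """Return 'ok' for 200 and 'retry' for 5XX; raise for 4XX request errors."""
    if status_code == 200:
        return "ok"
    if 400 <= status_code < 500:
        message = body_text
        try:
            parsed = json.loads(body_text)
            if isinstance(parsed, dict):
                message = parsed.get("message", body_text)
        except ValueError:
            # Body was not JSON; fall back to the raw text.
            pass
        raise PredictionRequestError(f"{status_code}: {message}")
    if 500 <= status_code < 600:
        # Server-side error: the caller may retry or contact support.
        return "retry"
    return "unexpected"


print(check_response(200, '{"data": []}'))
print(check_response(503, ""))
try:
    check_response(400, '{"message": "The requested URL was not found on the server."}')
except PredictionRequestError as exc:
    print("request error:", exc)
```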

Knowing the limitations¶

The following describes the size and timeout boundaries for real-time deployment predictions:

- Maximum data submission size is 50MB.

- There is no limit on the number of rows, but timeout limits are as follows:

    - Self-Managed AI Platform: configuration-dependent

    - Managed AI Platform: 600s

  If your request exceeds the timeout, or you are trying to score a large file using dedicated predictions, consider using the batch scoring package.

- There is a limit on the size of the HTTP request line (currently 8192 bytes).

- For Managed AI Platform deployments, dedicated Prediction API servers automatically close persistent HTTP connections if they are idle for more than 600 seconds. To use persistent connections, the client side must be able to handle these disconnects correctly. The following example configures the Python HTTP library requests to automatically retry HTTP requests on transport failure:

import requests
import urllib3

# Create a transport adapter that automatically retries GET/POST/HEAD requests up to 3 times on failure.
# Note: allowed_methods requires urllib3 1.26 or later; it replaces method_whitelist, which was removed in urllib3 2.0.
adapter = requests.adapters.HTTPAdapter(
    max_retries=urllib3.Retry(
        total=3,
        allowed_methods=frozenset(['GET', 'POST', 'HEAD'])
    )
)

# Create a Session (a pool of connections) and make it use the given adapter for HTTP and HTTPS requests.
session = requests.Session()
session.mount('http://', adapter)
session.mount('https://', adapter)

# Execute a prediction request that will be retried on transport failures, if needed.
api_token = '<your api token>'
dr_key = '<your datarobot key>'
response = session.post(
    'https://example.datarobot.com/predApi/v1.0/deployments/<deploymentId>/predictions',
    headers={
        'Authorization': 'Bearer %s' % api_token,
        'DataRobot-Key': dr_key,
        'Content-Type': 'text/csv',
    },
    data='<your scoring data>',
)
print(response.content)

Model caching¶

The dedicated prediction server fetches models, as needed, from the DataRobot cluster. To speed up subsequent predictions that use the same model, DataRobot stores a certain number of models in memory (cache). When the cache fills, each new model request will require that one of the existing models in the cache be removed. DataRobot removes the least recently used model (which is not necessarily the model that has been in the cache the longest).

For Self-Managed AI Platform installations, the default size for the cache is 16 models, but it can vary from installation to installation. Please contact DataRobot support if you have questions regarding the cache size of your specific installation.

A prediction server runs multiple prediction processes, each of which has its own exclusive model cache. Prediction processes do not share their caches with each other. Because of this, two consecutive requests to the same prediction server may be handled by different processes, and each may have to download the model data.

Each response from the prediction server includes a header, X-DataRobot-Model-Cache-Hit, indicating whether the model used was in the cache. If the model was in the cache, the value of the header is true; if the value is false, the model was not in the cache.
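A client can read this header to track how often requests hit the cache. The following is a small sketch, assuming the headers are available as a mapping (for example, the headers attribute of a requests response); `model_cache_hit` is a hypothetical helper name.

```python
def model_cache_hit(headers):
    """Return True or False from X-DataRobot-Model-Cache-Hit, or None if absent."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    value = normalized.get("x-datarobot-model-cache-hit")
    if value is None:
        return None
    return value.strip().lower() == "true"


sample_headers = {
    "Content-Type": "application/json",
    "X-DataRobot-Execution-Time": "38",
    "X-DataRobot-Model-Cache-Hit": "true",
}
print(model_cache_hit(sample_headers))
```

A run of False values for the same deployment may simply reflect requests landing on different prediction processes, each with its own cache.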

Best practices for the fastest predictions¶

The following checklist summarizes the suggestions above to help deliver the fastest predictions possible:

- Implement persistent HTTP connections: This reduces network round-trips, and thus latency, to the Prediction API.

- Use CSV data: Because JSON serialization of large amounts of data can take longer than using CSV, consider using CSV for your prediction inputs.

- Keep the number of requested models low: This allows the Prediction API to make use of model caching.

- Batch data together in chunks: Batch as many rows together as possible without going over the 50MB real-time deployment prediction request limit. If scoring larger files, consider using the Batch Prediction API which, in addition to scoring local files, also supports scoring to and from S3 and databases.
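The chunking suggestion in the checklist above could be sketched like this. It is an illustration only: `chunk_csv` is a hypothetical helper, it assumes each CSV row sits on a single line (no quoted fields with embedded newlines), and it interprets 50MB as 50 * 1024 * 1024 bytes, which is an assumption about how the limit is measured.

```python
def chunk_csv(lines, max_bytes=50 * 1024 * 1024):
    """Yield CSV payloads (header plus rows) whose UTF-8 size stays within max_bytes.

    `lines` is a list of text lines including their newline characters;
    the first line is treated as the header and repeated in every chunk.
    """
    header, rows = lines[0], lines[1:]
    header_size = len(header.encode("utf-8"))
    chunk, size = [], header_size
    for row in rows:
        row_size = len(row.encode("utf-8"))
        if header_size + row_size > max_bytes:
            raise ValueError("a single row exceeds the size limit")
        # Start a new chunk when adding this row would exceed the limit.
        if chunk and size + row_size > max_bytes:
            yield header + "".join(chunk)
            chunk, size = [], header_size
        chunk.append(row)
        size += row_size
    if chunk:
        yield header + "".join(chunk)


# Tiny demonstration with an artificially small limit of 12 bytes.
lines = ["a,b\n", "1,2\n", "3,4\n", "5,6\n"]
for payload in chunk_csv(lines, max_bytes=12):
    print(repr(payload))
```

Each yielded payload can then be sent as the body of a separate prediction request with a text/csv content type.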