Configure evaluation and moderation

Premium

Evaluation and moderation guardrails are a premium feature. Contact your DataRobot representative or administrator for information on enabling this feature.

Feature flags: Enable Moderation Guardrails (Premium), Enable Global Models in the Model Registry (Premium), Enable Additional Custom Model Output in Prediction Responses

Evaluation and moderation guardrails help your organization block prompt injection and hateful, toxic, or inappropriate prompts and responses. They can also prevent hallucinations or low-confidence responses and, more generally, keep the model on topic. In addition, these guardrails can safeguard against the sharing of personally identifiable information (PII). Many evaluation and moderation guardrails connect a deployed text generation model (LLM) to a deployed guard model. These guard models make predictions on LLM prompts and responses and then report these predictions and statistics to the central LLM deployment.

To use evaluation and moderation guardrails, first create and deploy guard models to make predictions on an LLM's prompts or responses; for example, a guard model could identify prompt injection or toxic responses. Then, when you create a custom model with the Text Generation or Agentic Workflow target type, define one or more evaluation and moderation guardrails.

Important prerequisites

Before configuring evaluation and moderation guardrails for an LLM, follow these guidelines while deploying guard models and configuring your LLM deployment:

- If using a custom guard model, before deployment, define moderations.input_column_name and moderations.output_column_name as tag-type key values on the registered model version. If you don't set these key values, any users of the guard model will have to enter the input and output column names manually.

- Deploy the global or custom guard models you intend to use to monitor the central LLM before configuring evaluation and moderation.

- Deploy the central LLM on a different prediction environment than the deployed guard models.

- Set an association ID and enable prediction storage before you start making predictions through the deployed LLM. If you don't set an association ID and provide association IDs alongside the LLM's predictions, the metrics for the moderations won't be calculated on the Custom metrics tab.

- After you define the association ID, you can enable automatic association ID generation to ensure these metrics appear on the Custom metrics tab. You can enable this setting during or after deployment.
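Because moderation metrics depend on association IDs arriving with each prediction, it can help to generate them on the client side before calling the deployment. The following is a minimal sketch, assuming hypothetical column names association_id and promptText (substitute the names configured on your own deployment); how the rows are then sent to the deployment is out of scope here:

```python
import uuid
from typing import Dict, List

# Hypothetical names: replace with the association ID column and prompt
# column configured on your own deployment.
ASSOCIATION_ID_COLUMN = "association_id"
PROMPT_COLUMN = "promptText"


def build_scoring_rows(prompts: List[str]) -> List[Dict[str, str]]:
    """Pair every prompt with a unique association ID before scoring."""
    return [
        {PROMPT_COLUMN: prompt, ASSOCIATION_ID_COLUMN: str(uuid.uuid4())}
        for prompt in prompts
    ]


rows = build_scoring_rows(["What is our refund policy?", "Summarize this ticket."])
for row in rows:
    print(row[ASSOCIATION_ID_COLUMN], row[PROMPT_COLUMN])
```

Keep the generated IDs if you plan to attach feedback or other data to these predictions later. If you enable automatic association ID generation on the deployment instead, this client-side step may be unnecessary.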

Prediction method considerations

When making predictions outside a chat generation Q&A application, evaluations and moderations are only compatible with real-time predictions, not batch predictions. In addition, when requesting streaming responses using the Bolt-on Governance API, evaluation and moderation negates the effect of streaming. Guardrails evaluate only the complete response of the LLM and therefore return the response text in one chunk.
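The streaming limitation follows from the order of operations: a response-side guardrail can only score text it has in full. The following sketch (a hypothetical helper, not DataRobot's implementation; the block message is placeholder text) buffers a streamed response, evaluates it once, and emits a single chunk, which is why a moderated stream arrives in one piece:

```python
from typing import Callable, Iterable, Iterator

BLOCK_MESSAGE = "This response was blocked by a moderation guardrail."  # placeholder text


def moderated_stream(
    chunks: Iterable[str],
    is_allowed: Callable[[str], bool],
) -> Iterator[str]:
    """Buffer a streamed response, evaluate it once, and yield a single chunk."""
    full_response = "".join(chunks)  # waits until the LLM has finished streaming
    yield full_response if is_allowed(full_response) else BLOCK_MESSAGE


stream = iter(["Hello", ", ", "world"])
print(list(moderated_stream(stream, is_allowed=lambda text: "secret" not in text)))
# ['Hello, world']
```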

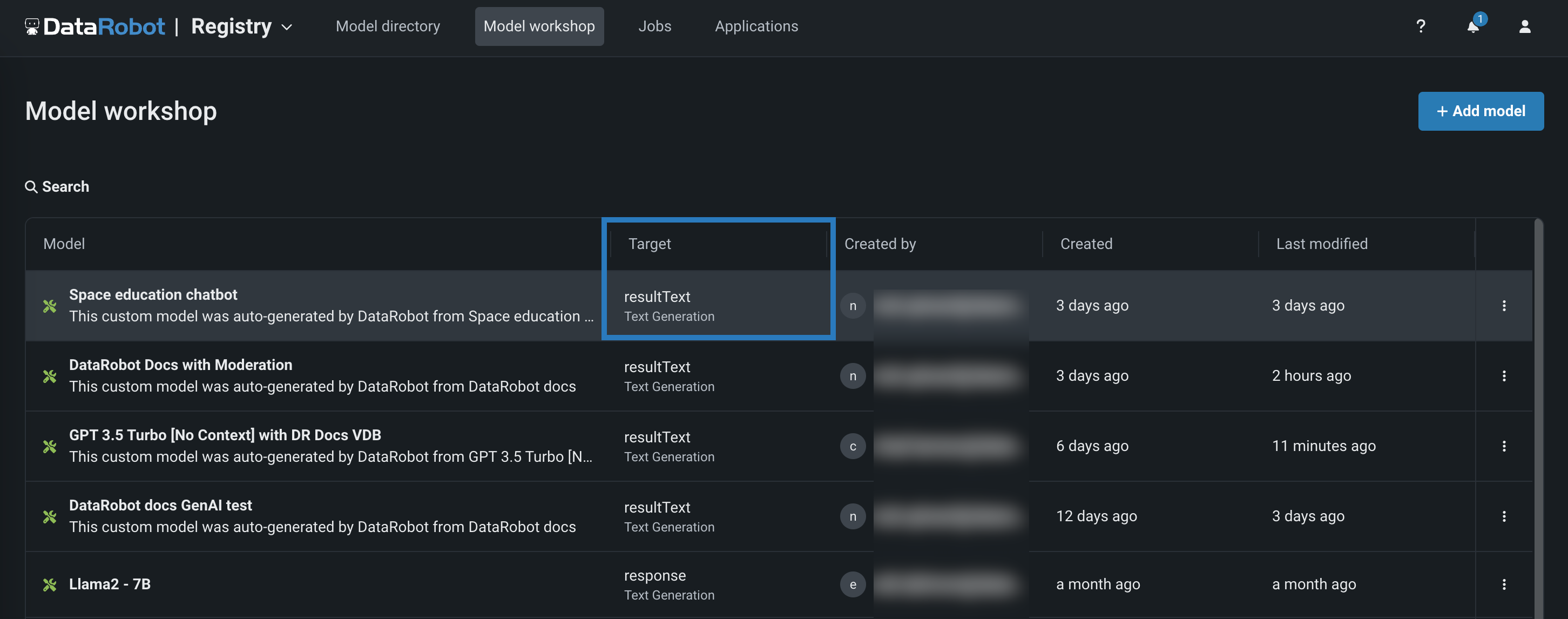

Select evaluation and moderation guardrails

When you create a custom model with the Text Generation or Agentic Workflow target type, define one or more evaluation and moderation guardrails.

To select and configure evaluation and moderation guardrails:

-

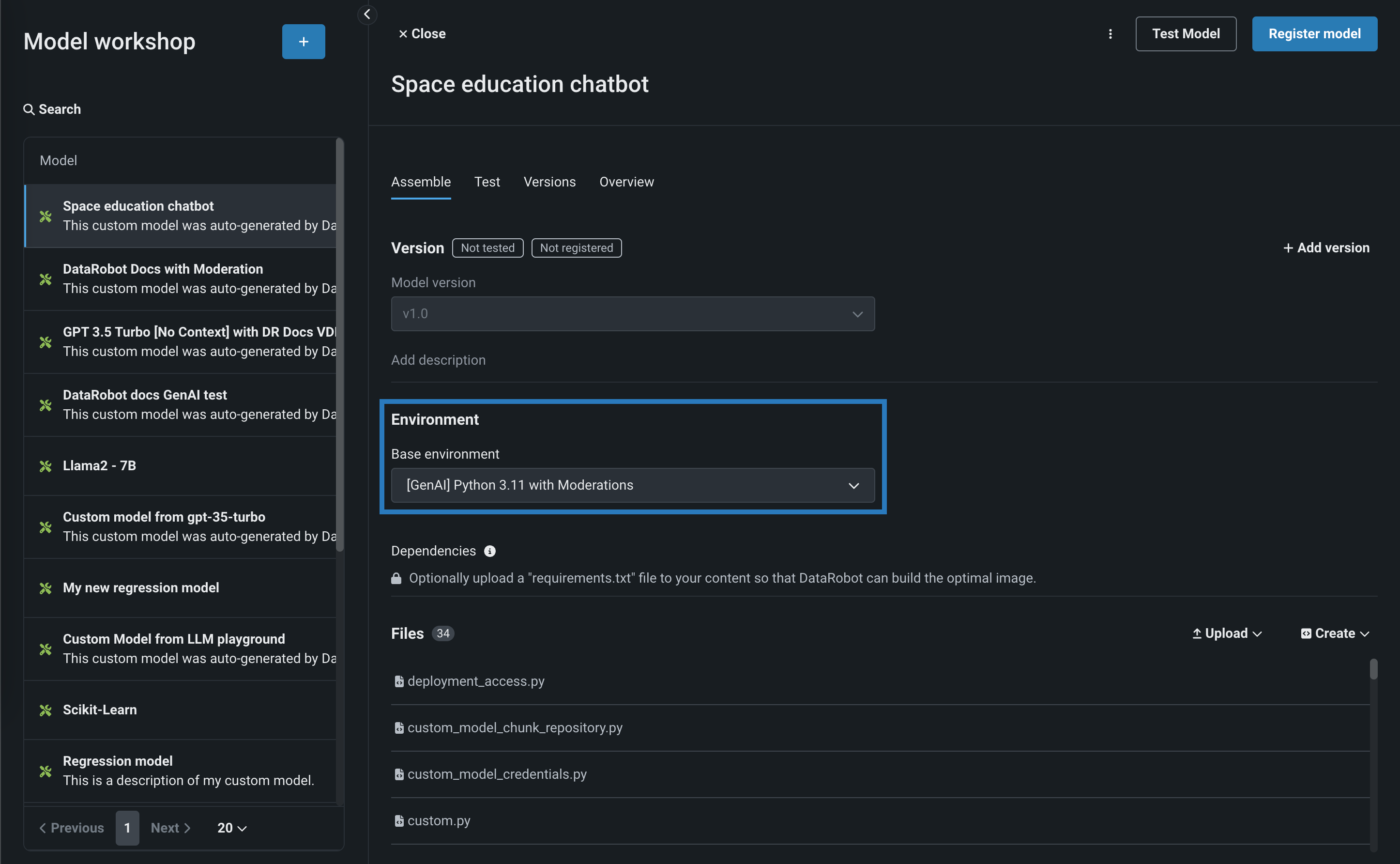

In the Workshop, open the Assemble tab of a custom model with the Text Generation or Agentic Workflow target type and assemble a model, either manually from a custom model you created outside of DataRobot or automatically from a model built in a Use Case's LLM playground:

When you assemble a text generation model with moderations, ensure you configure any required runtime parameters (for example, credentials) or resource settings (for example, public network access). Finally, set the Base environment to a moderation-compatible environment; for example, [GenAI] Python 3.12 with Moderations:

Resource settings

DataRobot recommends creating the LLM custom model using larger resource bundles with more memory and CPU resources.

-

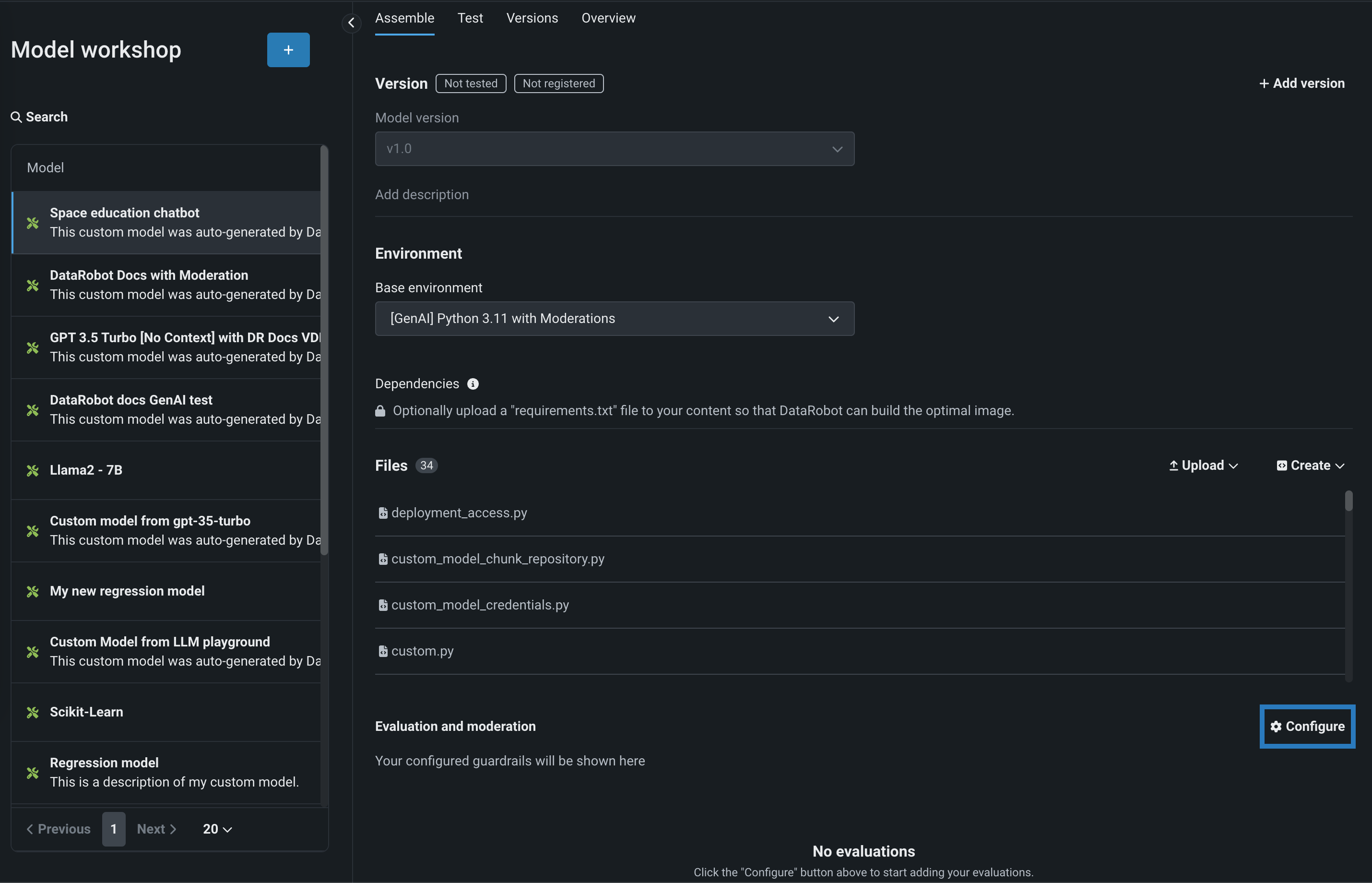

After you've configured the custom model's required settings, navigate to the Evaluation and moderation section and click Configure:

- In the Configuration summary, do either of the following:

  - Click View lineage to review how evaluations are executed in DataRobot. All evaluations and their respective moderations run in parallel.

  - Click General configuration to set the following:

| Setting | Description |
|---|---|
| Set moderation timeout | Configure the maximum wait time (in seconds) for moderations before the system automatically times out. |
| Timeout action | Define what happens if the moderation system times out: Score prompt / response or Block prompt / response. |

-

-

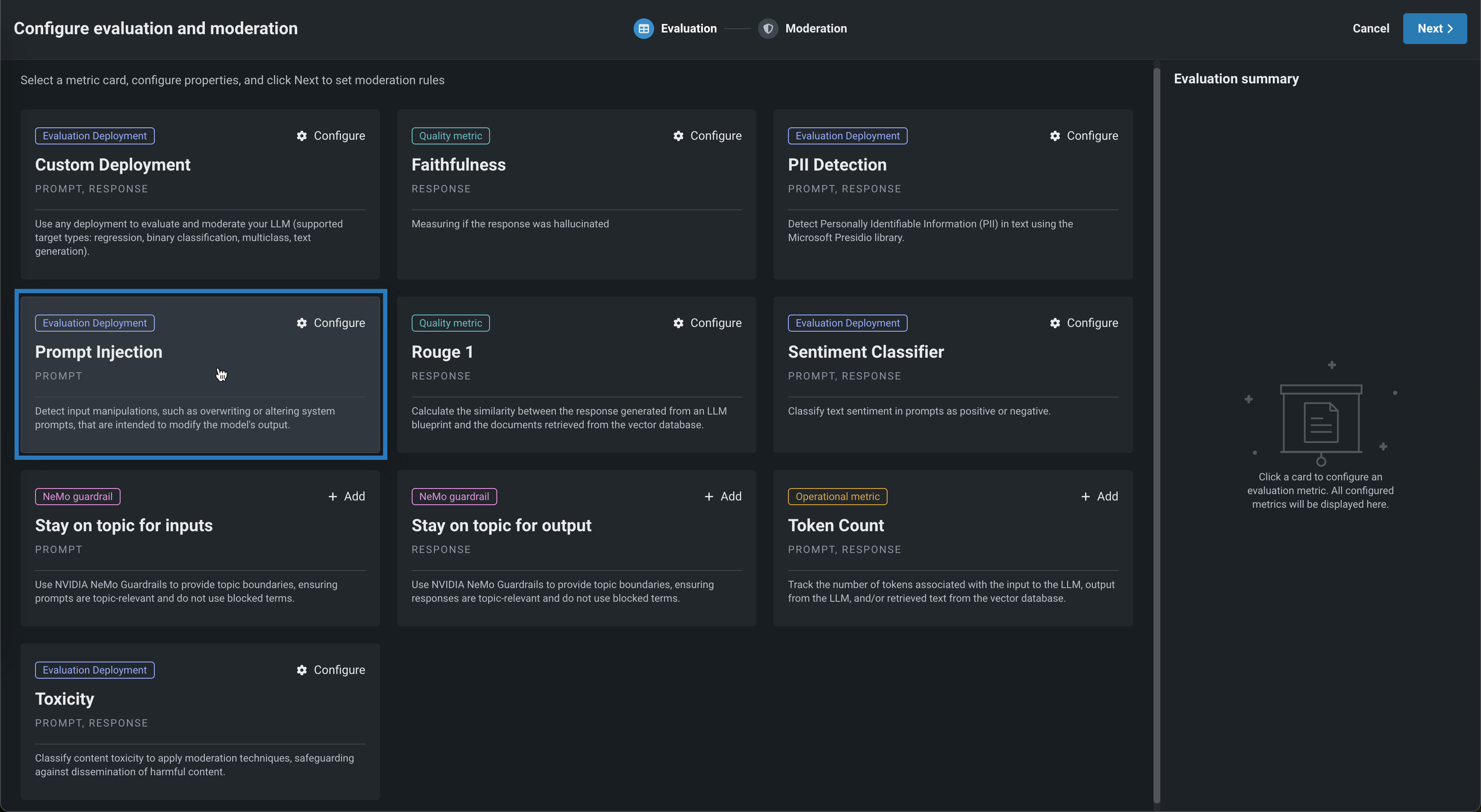

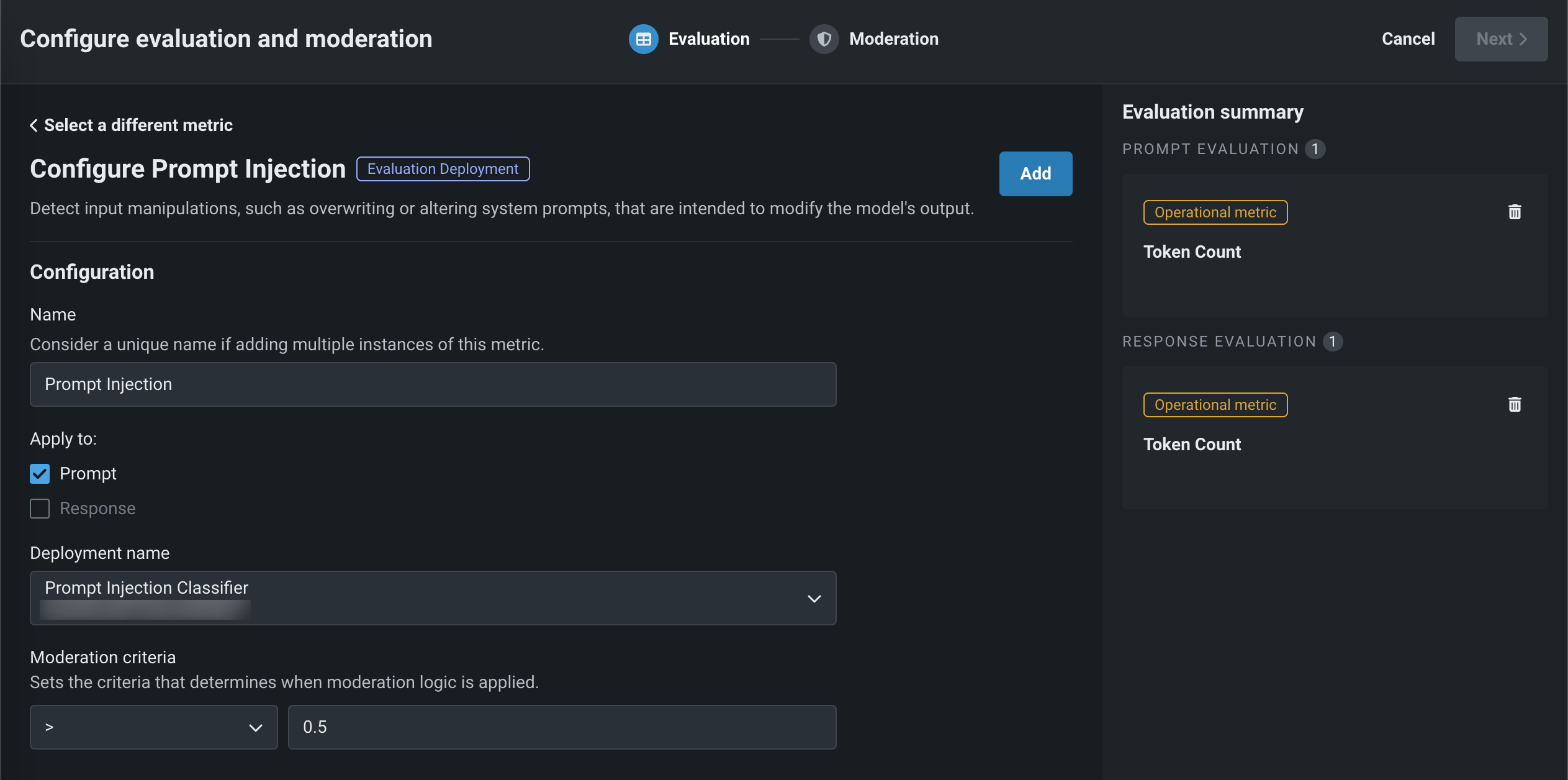

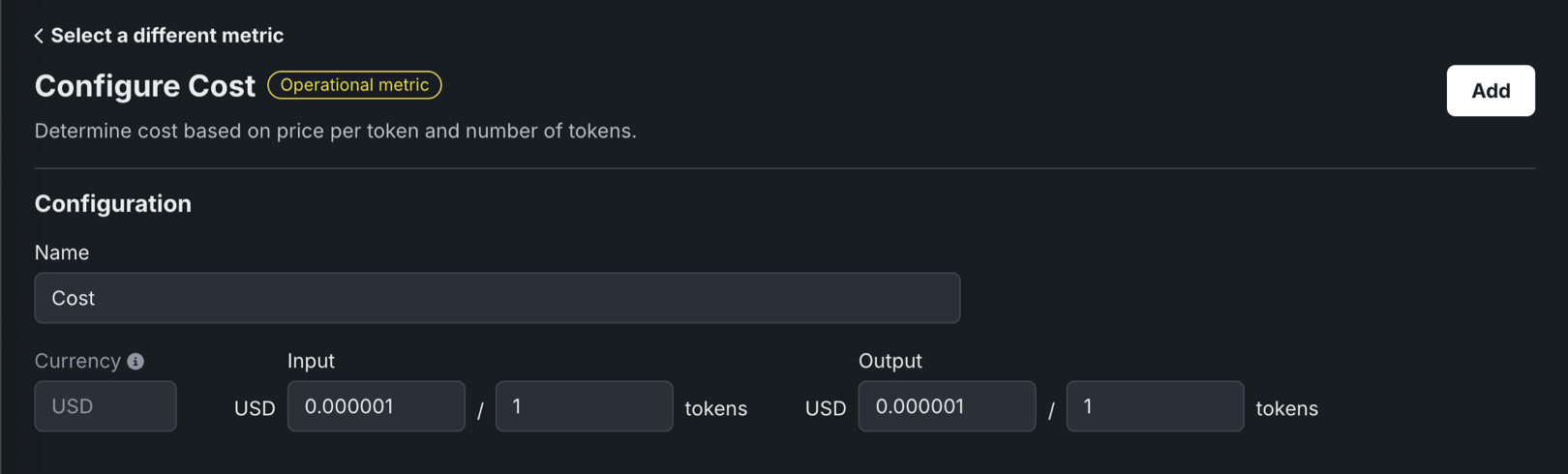

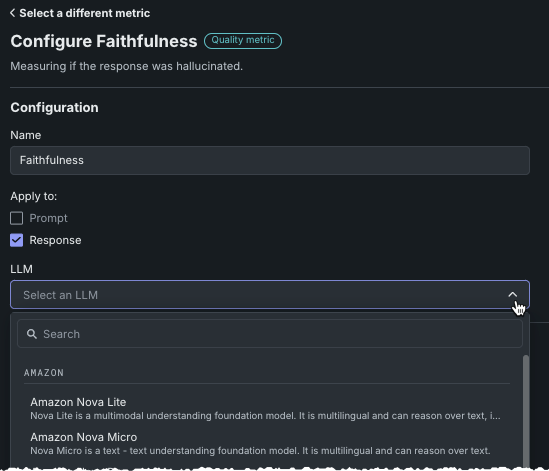

In the Configure evaluation and moderation panel, click one of the following metric cards to configure the required properties:

| Evaluation metric | Requires | Description |
|---|---|---|
| Content safety | A deployed NIM model, llama-3.1-nemoguard-8b-content-safety, imported from NGC | Classify prompts and responses as safe or unsafe; return a list of any unsafe categories detected. |
| Cost | LLM cost settings | Calculate the cost of generating the LLM response using the provided input cost-per-token and output cost-per-token values. The cost calculation also includes the cost of citations. For more information, see Cost metric settings. |
| Custom Deployment | Custom deployment | Use any deployment to evaluate and moderate your LLM (supported target types: regression, binary classification, multiclass, text generation). |
| Emotions Classifier | Emotions Classifier deployment | Classify prompt or response text by emotion. |
| Faithfulness | Playground LLM, vector database | Measure whether the LLM response matches the source to identify possible hallucinations. |
| Jailbreak | A deployed NIM model, nemoguard-jailbreak-detect, imported from NGC | Classify jailbreak attempts using NemoGuard JailbreakDetect. |
| PII Detection | Presidio PII Detection | Detect Personally Identifiable Information (PII) in text using the Microsoft Presidio library. |
| Prompt Injection | Prompt Injection Classifier | Detect input manipulations, such as overwriting or altering system prompts, intended to modify the model's output. |
| Prompt tokens | N/A | Track the number of tokens associated with the input to the LLM. |
| Response tokens | N/A | Track the number of tokens associated with the output from the LLM. |
| Rouge 1 | Vector database | Calculate the similarity between the response generated from an LLM blueprint and the documents retrieved from the vector database. |
| Stay on topic for inputs | NVIDIA NeMo guardrails configuration | Use NVIDIA NeMo Guardrails to provide topic boundaries, ensuring prompts are topic-relevant and do not use blocked terms. |
| Stay on topic for output | NVIDIA NeMo guardrails configuration | Use NVIDIA NeMo Guardrails to provide topic boundaries, ensuring responses are topic-relevant and do not use blocked terms. |
| Token Count | N/A | Track the number of tokens associated with the input to the LLM, output from the LLM, and/or retrieved text from the vector database. |
| Toxicity | Toxicity Classifier | Classify content toxicity to apply moderation techniques, safeguarding against dissemination of harmful content. |
| Agentic workflow metrics | | |
| Agent Goal Accuracy | Playground LLM | Evaluate the performance of the LLM in identifying and achieving the goals of the user, without reference. |
| Task Adherence | Playground LLM | Measure how well the agent adheres to its assigned tasks or predefined goals. |

The deployments required for PII detection, prompt injection detection, emotion classification, and toxicity classification are available as global models in Registry.

Multiclass custom deployment metric limits

Multiclass custom deployment metrics can have:

- Up to 10 classes defined in the Matches list for moderation criteria.

- Up to 100 class names in the guard model.

- Depending on the metric selected above, configure the following fields:

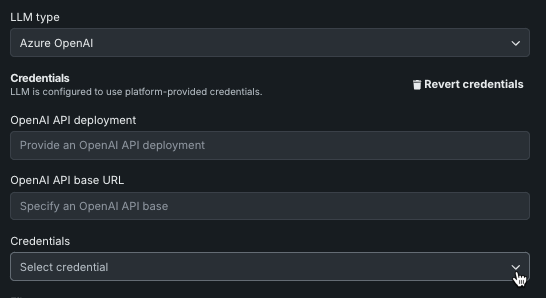

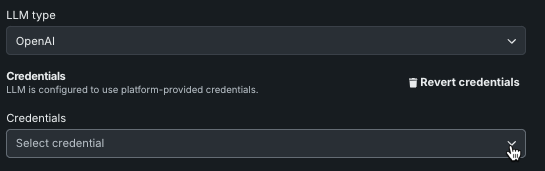

| Field | Description |
|---|---|
| General settings | |
| Name | Enter a unique name if adding multiple instances of the evaluation metric. |
| Apply to | Select one or both of Prompt and Response, depending on the evaluation metric. Note that when you select Prompt, the user prompt, not the final LLM prompt, is used for metric calculation. |
| Custom Deployment, PII Detection, Prompt Injection, Emotions Classifier, and Toxicity settings | |
| Deployment name | For evaluation metrics calculated by a guard model, select the custom model deployment. |
| Custom Deployment settings | |
| Input column name | This name is defined by the custom model creator. For global models created by DataRobot, the default input column name is text. If the guard model for the custom deployment has the moderations.input_column_name key value defined, this field is populated automatically. |
| Output column name | This name is defined by the custom model creator and must refer to the target column for the model. The target name is listed on the deployment's Overview tab (and often has _PREDICTION appended to it). You can confirm the column names by exporting and viewing the CSV data from the custom deployment. If the guard model for the custom deployment has the moderations.output_column_name key value defined, this field is populated automatically. |
| Faithfulness, Agent Goal Accuracy, and Task Adherence settings | |
| LLM | Select a playground LLM to evaluate the selected metric. For Faithfulness, once you select an LLM, you can use your own user-provided credentials instead of DataRobot-provided credentials. |
| Stay on topic for input/output settings | |
| LLM Type | Select Azure OpenAI, OpenAI, or NIM. For the Azure OpenAI LLM type, additionally enter an OpenAI API deployment; for NIM, enter a NIM deployment. If you use the LLM gateway (the default experience), DataRobot-supplied credentials are provided. When the LLM type is Azure OpenAI or OpenAI, click Change credentials to provide your own authentication. |
| Files | For the Stay on topic evaluations, next to a file, click to modify the NeMo guardrails configuration files. In particular, update prompts.yml with allowed and blocked topics and blocked_terms.txt with the blocked terms, providing rules for NeMo guardrails to enforce. The blocked_terms.txt file is shared between the input and output stay on topic metrics; therefore, modifying blocked_terms.txt in the input metric modifies it for the output metric and vice versa. Only two NeMo stay on topic metrics can exist in a custom model: one for input and one for output. |
| Moderation settings | |
| Configure and apply moderation | Enable this setting to expand the Moderation section and define the criteria that determine when moderation logic is applied. |

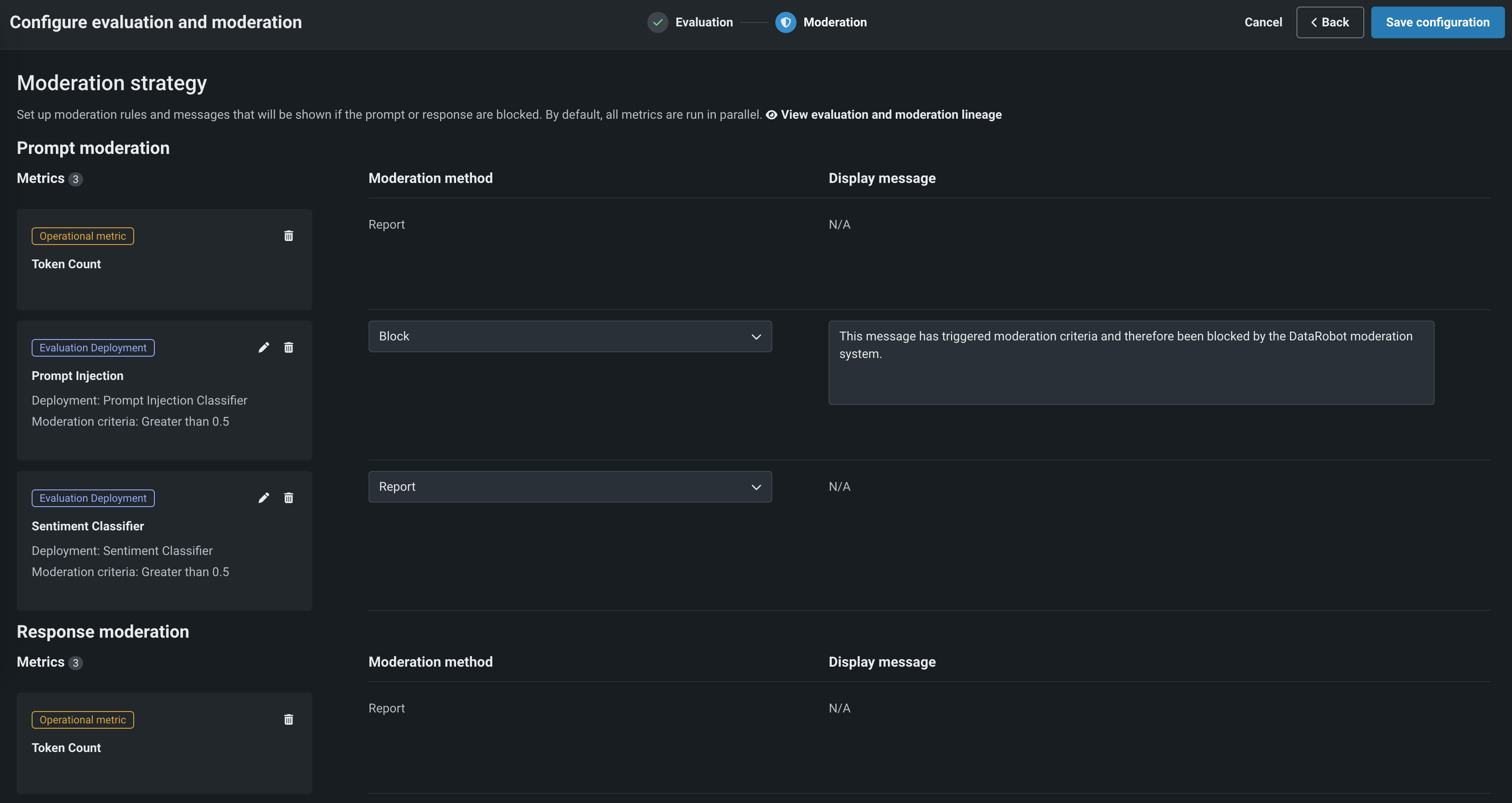

In the Moderation section, with Configure and apply moderation enabled, for each evaluation metric, set the following:

| Setting | Description |
|---|---|
| Moderation criteria | If applicable, set the threshold that triggers moderation logic. For the Emotions Classifier, select Matches or Does not match and define a list of classes (emotions) that triggers moderation logic. |
| Moderation method | Select Report, Report and block, or Replace (if applicable). |
| Moderation message | If you select Report and block, you can optionally modify the default message. |

- After configuring the required fields, click Add to save the evaluation and return to the evaluation selection page. Then, select and configure another metric, or click Save configuration.
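The General configuration settings (moderation timeout and timeout action), combined with the fact that all evaluations and their moderations run in parallel, can be pictured with the following sketch. It is an illustration under stated assumptions, not DataRobot's implementation: each guard is a hypothetical local function that returns True when the text should be blocked, and the "score" action is assumed to mean the text proceeds using whatever results finished in time.

```python
import concurrent.futures as cf
import time
from typing import Callable, Dict, List


def run_guards(
    text: str,
    guards: Dict[str, Callable[[str], bool]],
    timeout_s: float,
    timeout_action: str = "score",  # "score" or "block" (hypothetical labels)
) -> dict:
    """Run all guards in parallel and apply the timeout action to late ones.

    Each guard returns True when it wants the text blocked.
    """
    pool = cf.ThreadPoolExecutor(max_workers=max(1, len(guards)))
    futures = {pool.submit(guard, text): name for name, guard in guards.items()}
    done, not_done = cf.wait(futures, timeout=timeout_s)
    # Stop waiting for stragglers; their results are ignored.
    # (cancel_futures requires Python 3.9+.)
    pool.shutdown(wait=False, cancel_futures=True)

    results = {futures[f]: f.result() for f in done}
    timed_out: List[str] = sorted(futures[f] for f in not_done)
    blocked = any(results.values()) or (bool(timed_out) and timeout_action == "block")
    return {"blocked": blocked, "results": results, "timed_out": timed_out}


def slow_guard(text: str) -> bool:
    time.sleep(0.5)  # simulates a guard deployment that misses the deadline
    return False


print(run_guards("Hello", {"toxicity": lambda t: False, "slow": slow_guard},
                 timeout_s=0.1, timeout_action="block"))
```

With timeout_action="block", the late guard alone is enough to block the text; with "score", only guards that finished in time influence the outcome.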

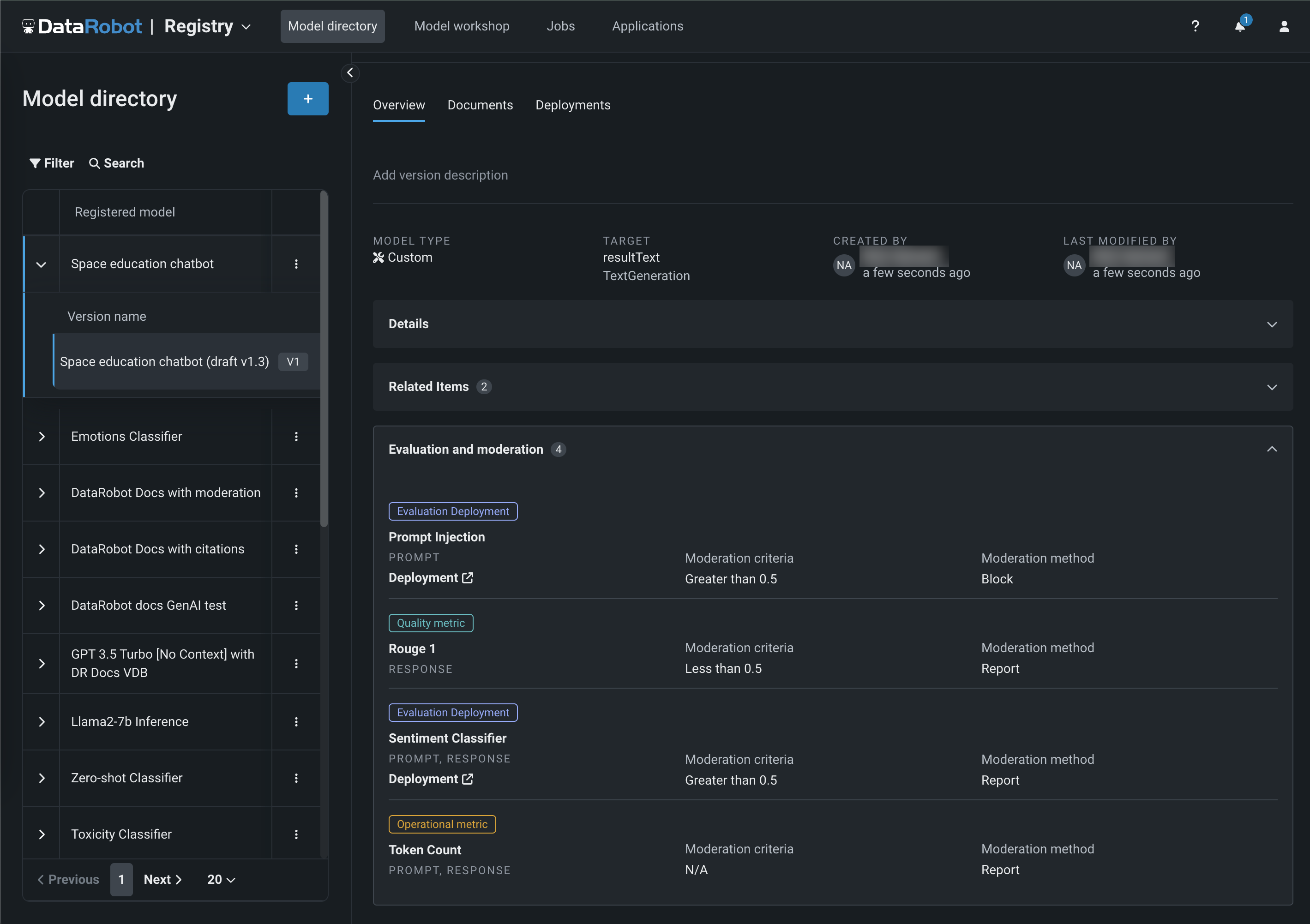

The guardrails you selected appear in the Evaluation and moderation section of the Assemble tab.
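The moderation criteria and moderation methods described in the moderation settings can be summarized as a small decision function. This is a hypothetical sketch: the class and field names are invented for illustration, a threshold is assumed to trigger when the score is greater than or equal to it, the Replace method is assumed to also be reported, the block message is placeholder text, and the 10-class cap on the Matches list mirrors the multiclass limit noted earlier.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

MAX_MATCH_CLASSES = 10  # documented cap on the Matches list for multiclass guards


@dataclass
class ModerationRule:
    """Hypothetical container for one metric's moderation settings."""
    method: str                        # "report", "report_and_block", or "replace"
    threshold: Optional[float] = None  # score-based guards (for example, toxicity)
    match_classes: Sequence[str] = ()  # class-based guards (for example, emotions)
    match_mode: str = "matches"        # "matches" or "does_not_match"
    message: str = "This content was blocked by a moderation guardrail."  # placeholder

    def __post_init__(self) -> None:
        if len(self.match_classes) > MAX_MATCH_CLASSES:
            raise ValueError(f"Matches list is limited to {MAX_MATCH_CLASSES} classes")

    def triggered(self, score: Optional[float], classes: Sequence[str]) -> bool:
        if self.match_classes:
            hit = any(c in self.match_classes for c in classes)
            return hit if self.match_mode == "matches" else not hit
        return score is not None and self.threshold is not None and score >= self.threshold


def moderate(
    text: str,
    rule: ModerationRule,
    score: Optional[float] = None,
    classes: Sequence[str] = (),
    replacement: Optional[str] = None,
) -> Tuple[str, bool]:
    """Return (text passed downstream, whether the event is reported)."""
    if not rule.triggered(score, classes):
        return text, False
    if rule.method == "report":
        return text, True
    if rule.method == "report_and_block":
        return rule.message, True
    if rule.method == "replace" and replacement is not None:
        return replacement, True
    raise ValueError(f"cannot apply method {rule.method!r}")


rule = ModerationRule(method="report_and_block", threshold=0.7)
print(moderate("some prompt", rule, score=0.92))
```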

After you add guardrails to a text generation custom model, you can test, register, and deploy the model to make predictions in production. After making predictions, you can view the evaluation metrics on the Custom metrics tab and prompts, responses, and feedback (if configured) on the Data exploration tab.
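The Cost metric in the metric table multiplies token counts by the per-token prices you enter. A minimal worked sketch of that arithmetic, using hypothetical prices; it leaves out the cost of citations, which the Cost metric also includes:

```python
def llm_cost(prompt_tokens: int, response_tokens: int,
             input_cost_per_token: float, output_cost_per_token: float) -> float:
    """Cost of one LLM call: input tokens at the input rate, output tokens at the output rate."""
    return prompt_tokens * input_cost_per_token + response_tokens * output_cost_per_token


# Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
cost = llm_cost(1_200, 300, 3 / 1_000_000, 15 / 1_000_000)
print(f"${cost:.4f}")  # $0.0081
```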

Tracing tab

When you add moderations to an LLM deployment, you can't view custom metric data by row on the Data exploration > Tracing tab.

Change credentials

DataRobot provides credentials for available LLMs through the LLM gateway. With certain metrics and LLMs or LLM types, you can instead use your own credentials for authentication. Before proceeding, define user-specified credentials on the credentials management page.

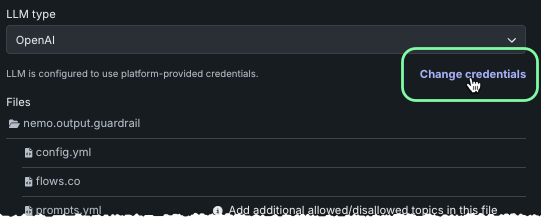

Stay on topic metrics

To change credentials for either Stay on topic for inputs or Stay on topic for output, choose the LLM type and click Change credentials.

To revert to DataRobot-provided credentials, click Revert credentials.
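The Stay on topic metrics enforce the rules in the NeMo guardrails configuration files, including the blocked_terms.txt file shared by the input and output metrics. NeMo Guardrails applies these rules itself; the following is only a simplified, hypothetical illustration of the kind of check a blocked-terms list drives, assuming one term per line and whole-word, case-insensitive matching:

```python
import re
from typing import Iterable, List


def load_blocked_terms(lines: Iterable[str]) -> List[str]:
    """Parse blocked_terms.txt-style content, assuming one term per line."""
    return [line.strip() for line in lines if line.strip()]


def find_blocked_terms(text: str, terms: Iterable[str]) -> List[str]:
    """Return the terms that occur in text as whole words, ignoring case."""
    return [
        term for term in terms
        if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE)
    ]


terms = load_blocked_terms(["competitor x\n", "\n", "internal roadmap\n"])
print(find_blocked_terms("What do you think of Competitor X?", terms))
# ['competitor x']
```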

Faithfulness metric

To change credentials for Faithfulness, select the LLM and click Change credentials.

The following table lists the required fields:

| Provider | Fields |
|---|---|
| Amazon | |
| Azure OpenAI | |
| OpenAI | |

To revert to DataRobot-provided credentials, click Revert credentials.

Global models for evaluation metric deployments

The deployments required for PII detection, prompt injection detection, emotion classification, and toxicity classification are available as global models in Registry. The following global models are available:

| Model | Type | Target | Description |
|---|---|---|---|
| Prompt Injection Classifier | Binary | | Classifies text as prompt injection or legitimate. This guard model requires one column named text, containing the text to classify. For more information, see the deberta-v3-base-injection model details. |
| Toxicity Classifier | Binary | | Classifies text as toxic or non-toxic. This guard model requires one column named text, containing the text to classify. For more information, see the toxic-comment-model details. |
| Sentiment Classifier | Binary | | Classifies text sentiment as positive or negative. This model requires one column named text, containing the text to classify. For more information, see the distilbert-base-uncased-finetuned-sst-2-english model details. |
| Emotions Classifier | Multiclass | | Classifies text by emotion. This is a multilabel model, meaning that multiple emotions can be applied to the text. This model requires one column named text, containing the text to classify. For more information, see the roberta-base-go_emotions-onnx model details. |
| Refusal Score | Regression | | Outputs a maximum similarity score, comparing the input to a list of cases where an LLM has refused to answer a query because the prompt is outside the limits of what the model is configured to answer. |
| Presidio PII Detection | Binary | | Detects and replaces Personally Identifiable Information (PII) in text. This guard model requires one column named text, containing the text to classify. Optionally, specify the types of PII to detect as a comma-separated string in a column named entities; if this column is not provided, all supported entities are detected. For the available entity types, see the PII entities supported by Presidio documentation. In addition to the detection result, the model returns an anonymized_text column containing a version of the input with detected PII replaced by placeholders. For more information, see the Presidio: Data Protection and De-identification SDK documentation. |
| Zero-shot Classifier | Binary | | Performs zero-shot classification on text with user-specified labels. This model requires the text to classify in a column named text and the class labels, as a comma-separated string, in a column named labels. It expects the same set of labels for all rows; therefore, only the labels provided in the first row are used. For more information, see the deberta-v3-large-zeroshot-v1 model details. |
| Python Dummy Binary Classification | Binary | | Always yields 0.75 for the positive class. For more information, see the python3_dummy_binary model template. |
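The input requirements in the global models table translate directly into the scoring data you send to a guard model deployment. The following minimal sketch builds CSV scoring data with the standard library; the helper name is hypothetical, and the entity types shown (PERSON, PHONE_NUMBER) are examples of Presidio entity names:

```python
import csv
import io
from typing import Optional, Sequence


def guard_input_csv(
    texts: Sequence[str],
    entities: Optional[Sequence[str]] = None,
    labels: Optional[Sequence[str]] = None,
) -> str:
    """Build CSV scoring data for a global guard model (hypothetical helper).

    text: required by every global guard model.
    entities: optional, Presidio PII Detection only (comma-separated entity types).
    labels: Zero-shot Classifier only; the model uses the first row's labels,
            so the same labels are simply repeated on every row.
    """
    fieldnames = ["text"]
    if entities is not None:
        fieldnames.append("entities")
    if labels is not None:
        fieldnames.append("labels")

    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for text in texts:
        row = {"text": text}
        if entities is not None:
            row["entities"] = ",".join(entities)
        if labels is not None:
            row["labels"] = ",".join(labels)
        writer.writerow(row)
    return buf.getvalue()


print(guard_input_csv(["Call Jane at 555-0100"], entities=["PERSON", "PHONE_NUMBER"]))
```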

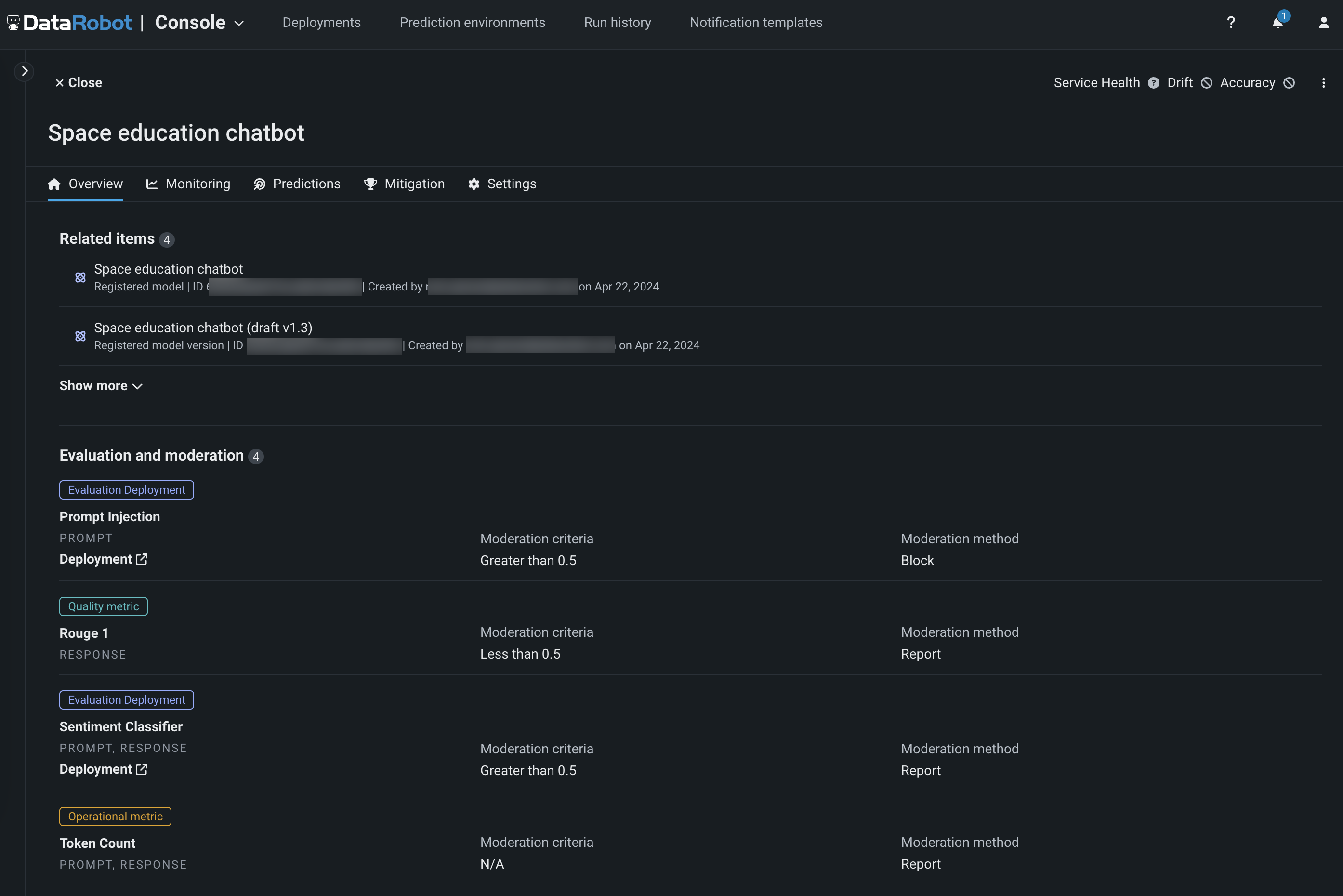
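The Presidio PII Detection global model returns an anonymized_text column in which detected PII is replaced with placeholders. The following minimal sketch shows that replacement step, assuming detection has already produced character spans; the <ENTITY_TYPE> placeholder style mirrors Presidio's default replace behavior, but the exact placeholders the global model emits are an assumption:

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end, entity_type), end is exclusive


def anonymize(text: str, spans: List[Span]) -> str:
    """Replace each detected PII span with an <ENTITY_TYPE> placeholder."""
    out = []
    cursor = 0
    for start, end, entity in sorted(spans):
        if start < cursor:  # skip spans that overlap one already replaced
            continue
        out.append(text[cursor:start])
        out.append(f"<{entity}>")
        cursor = end
    out.append(text[cursor:])
    return "".join(out)


print(anonymize("Call Jane at 555-0100", [(5, 9, "PERSON"), (13, 21, "PHONE_NUMBER")]))
# Call <PERSON> at <PHONE_NUMBER>
```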

View evaluation and moderation guardrails

When a text generation model with guardrails is registered and deployed, you can view the configured guardrails on the registered model's Overview tab and the deployment's Overview tab.

Evaluation and moderation logs

On the Activity log > Moderation tab of a deployed LLM with evaluation and moderation configured, you can view a history of evaluation- and moderation-related events to diagnose issues with the deployment's configured evaluations and moderations.