Service health

The Service health tab tracks metrics about a deployment's ability to respond to prediction requests quickly and reliably. This helps identify bottlenecks and assess capacity, which is critical to proper provisioning. For example, if a model's response times seem to have slowed, the Service health tab for the model's deployment can help you find the cause. You might notice in the tab that median latency rises as the number of prediction requests increases. If latency instead increases when a new model is switched in, you can consult with your team to determine whether the new model can be replaced with one offering better performance.

To access Service health, select an individual deployment from the deployment inventory page and then, from the Overview, click Monitoring > Service health. The tab provides informational tiles and a chart to help assess the activity level and health of the deployment.

Time of Prediction

The Time of Prediction value differs between the Data drift and Accuracy tabs and the Service health tab:

- On the Service health tab, the "time of prediction request" is always the time the prediction server received the prediction request. This method of prediction request tracking accurately represents the prediction service's health for diagnostic purposes.

- On the Data drift and Accuracy tabs, the "time of prediction request" is, by default, the time you submitted the prediction request, which you can override with the prediction timestamp in the Prediction History and Service Health settings.

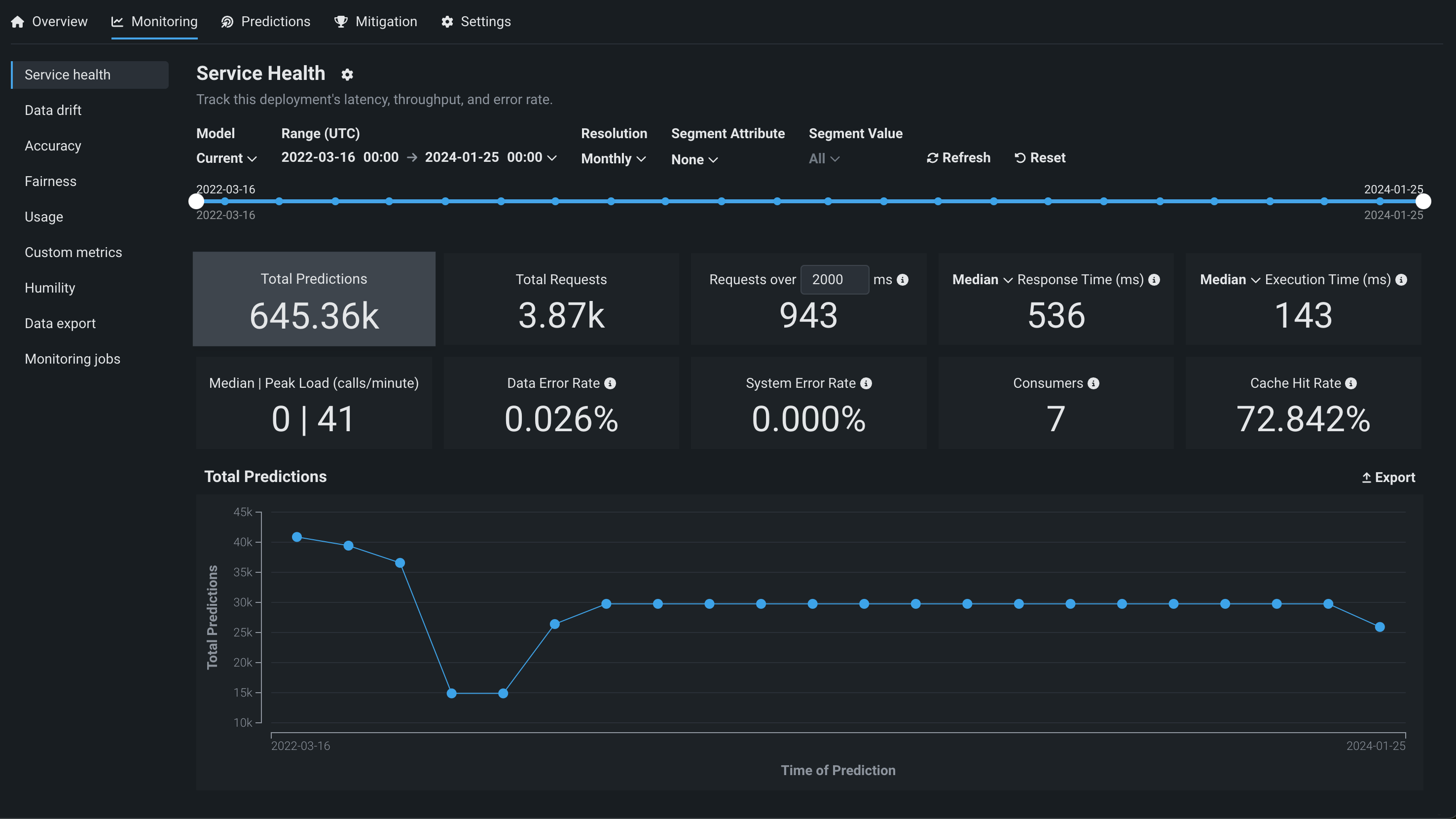

Understand metric tiles and chart

DataRobot displays informational statistics based on your current settings for model and time frame. That is, tile values correspond to the same units as those selected on the slider. If the slider interval values are weekly, the displayed tile metrics show values corresponding to weeks. Clicking a metric tile updates the chart below.

The Service health tab reports the following metrics on the dashboard:

Service health information for external models and monitoring jobs

Service health information is unavailable for external agent-monitored deployments and deployments with predictions uploaded through a prediction monitoring job.

| Statistic | Reports (for selected time period) |

|---|---|

| Total Predictions | The number of predictions the deployment has made (per prediction node). |

| Total Requests | The number of prediction requests the deployment has received (a single request can contain multiple prediction requests). |

Requests over x ms |

The number of requests where the response time was longer than the specified number of milliseconds. The default is 2000 ms; click in the box to enter a time between 10 and 100,000 ms or adjust with the controls. |

| Response Time | The time (in milliseconds) DataRobot spent receiving a prediction request, calculating the request, and returning a response to the user. The report does not include time due to network latency. Select the median prediction request time or 90th, 95th, or 99th percentile. The display reports a dash if you have made no requests against it or if it's an external deployment. |

| Execution Time | The time (in milliseconds) DataRobot spent calculating a prediction request. Select the median prediction request time or 90th, 95th, or 99th percentile. |

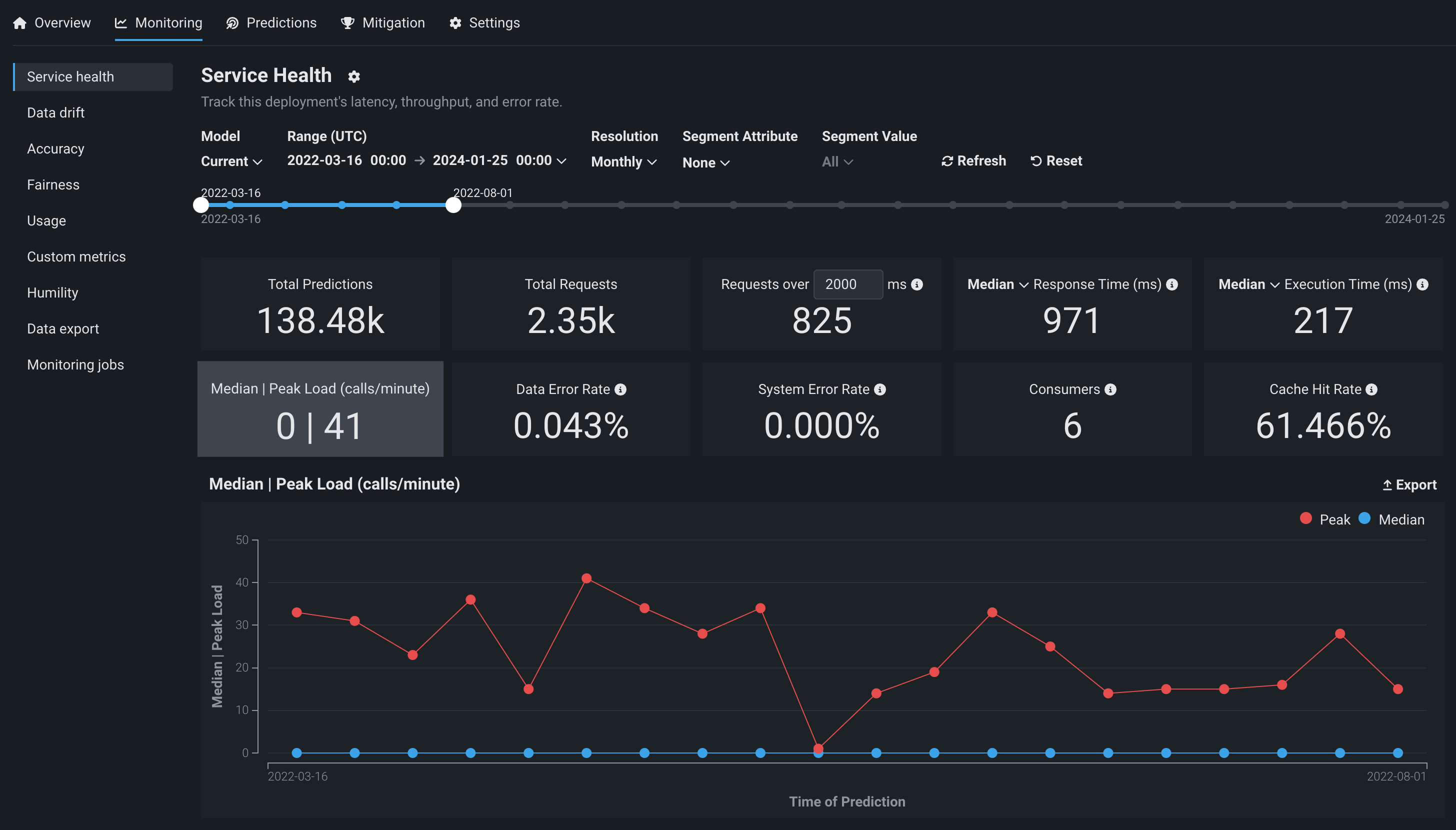

| Median/Peak Load | The median and maximum number of requests per minute. |

| Data Error Rate | The percentage of requests that result in a 4xx error (problems with the prediction request submission). This is a component of the value reported as the Service Health Summary on the Deployments dashboard top banner. |

| System Error Rate | The percentage of well-formed requests that result in a 5xx error (problem with the DataRobot prediction server). This is a component of the value reported as the Service Health Summary on the Deployments dashboard top banner. |

| Consumers | The number of distinct users (identified by API key) who have made prediction requests against this deployment. |

| Cache Hit Rate | The percentage of requests that used a cached model (the model was recently used by other predictions). If not cached, DataRobot has to look the model up, which can cause delays. The prediction server cache holds 16 models by default, dropping the least-used model when the limit is reached. |
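To make the tile definitions concrete, the following minimal sketch derives several of them from a list of request records. It is not DataRobot's implementation: the `RequestRecord` layout, the `summarize` helper, the nearest-rank percentile method, and the reading of "well-formed" as "did not return a 4xx error" are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One prediction request (hypothetical record layout)."""
    status_code: int         # HTTP status returned to the caller
    response_time_ms: float  # time spent receiving, scoring, and responding
    n_predictions: int       # rows scored in this request


def percentile(values, pct):
    """Nearest-rank percentile; pct=50 gives the lower median."""
    if not values:
        return None  # mirrors the dash shown when no requests were made
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records, slow_threshold_ms=2000):
    """Compute tile-style statistics for one time period."""
    total = len(records)
    data_errors = sum(1 for r in records if 400 <= r.status_code < 500)
    system_errors = sum(1 for r in records if 500 <= r.status_code < 600)
    # Assumption: a "well-formed" request is one that did not return 4xx.
    well_formed = total - data_errors
    times = [r.response_time_ms for r in records]
    return {
        "total_requests": total,
        "total_predictions": sum(r.n_predictions for r in records),
        "requests_over_threshold": sum(1 for t in times if t > slow_threshold_ms),
        "median_response_ms": percentile(times, 50),
        "p90_response_ms": percentile(times, 90),
        "data_error_rate_pct": 100 * data_errors / total if total else 0.0,
        "system_error_rate_pct": (
            100 * system_errors / well_formed if well_formed else 0.0
        ),
    }


# Illustrative data only; these values do not come from a real deployment.
sample = [
    RequestRecord(200, 100.0, 10),
    RequestRecord(200, 2500.0, 5),
    RequestRecord(400, 50.0, 0),
    RequestRecord(500, 3000.0, 1),
]
stats = summarize(sample)
```

The default `slow_threshold_ms` of 2000 matches the default for the Requests over x ms tile; pass a different value to model a custom threshold.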

You can configure the dashboard to focus the visualized statistics on specific segments and time frames. The following controls are available:

| Control | Description |
|---|---|
| Model | Updates the dashboard displays to reflect the model you selected from the dropdown. |
| Range (UTC) | Sets the date range displayed for the deployment date slider. You can also drag the date slider to set the range. The range selector only allows you to select dates and times between the start date of the deployment's current version of a model and the current date. |
| Resolution | Sets the time granularity of the deployment date slider. The available resolution settings depend on the selected range. |
| Segment Attribute | Sets the segment to filter the dashboard by. |
| Segment Value | Sets a specific value within a segment to filter the dashboard by. |
| Refresh | Initiates an on-demand update of the dashboard with new data. Otherwise, DataRobot refreshes the dashboard every 15 minutes. |
| Reset | Reverts the dashboard controls to the default settings. |

The chart below the metric tiles displays individual metrics over time, helping to identify patterns in the quality of service. Clicking a metric tile updates the chart to show that metric; adjusting the date range slider focuses the chart on a specific period.

Export charts

Click Export to download a .csv or .png file of the currently selected chart, or a .zip archive containing both files along with a .json file.

The Median | Peak Load (calls/minute) chart displays two lines over time, one for Peak load and one for Median load.
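As a rough illustration of how median and peak load in calls per minute could be derived from raw request times, the sketch below buckets timestamps by minute. The `load_per_minute` helper is hypothetical, and it assumes that minutes with no requests are left out of the median rather than counted as zero; DataRobot's actual calculation may differ.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def load_per_minute(timestamps):
    """Return (median, peak) requests per minute for the given request times."""
    # Truncate each timestamp to the start of its minute, then count per bucket.
    buckets = Counter(ts.replace(second=0, microsecond=0) for ts in timestamps)
    if not buckets:
        return None, None
    counts = list(buckets.values())
    # Assumption: only minutes that received at least one request are counted.
    return median(counts), max(counts)


# Illustrative request times: 3 in the first minute, 1 in the second, 2 in the third.
requests = [
    datetime(2024, 1, 1, 10, 0, 5),
    datetime(2024, 1, 1, 10, 0, 20),
    datetime(2024, 1, 1, 10, 0, 59),
    datetime(2024, 1, 1, 10, 1, 30),
    datetime(2024, 1, 1, 10, 2, 1),
    datetime(2024, 1, 1, 10, 2, 45),
]
median_load, peak_load = load_per_minute(requests)
```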

Service health status indicators

Service health tracks metrics about a deployment’s ability to respond to prediction requests quickly and reliably. You can view the service health status in the deployment inventory and visualize service health on the Service health tab. Service health monitoring represents the occurrence of 4xx and 5xx errors in your prediction requests or prediction server:

- 4xx errors indicate problems with the prediction request submission.

- 5xx errors indicate problems with the DataRobot prediction server.

| Color | Service Health | Action |
|---|---|---|
| Green / Passing | Zero 4xx or 5xx errors. | No action needed. |
| Yellow / At risk | At least one 4xx error and zero 5xx errors. | Concerns found, but no immediate action needed; monitor. |
| Red / Failing | At least one 5xx error. | Immediate action needed. |
| Gray / Disabled | Unmonitored deployment. | Enable monitoring and make predictions. |
| Gray / Not started | No service health events recorded. | Make predictions. |
| Gray / Unknown | No predictions made. | Make predictions. |
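The color rules for a monitored deployment that has received predictions can be expressed as a small decision function. This is a sketch of the rules in the table only: `service_health_status` is a hypothetical name, and the three gray states are deliberately not modeled because they depend on monitoring configuration rather than error counts.

```python
def service_health_status(n_4xx: int, n_5xx: int) -> str:
    """Map error counts for the selected period to a status label."""
    if n_4xx < 0 or n_5xx < 0:
        raise ValueError("error counts cannot be negative")
    if n_5xx > 0:
        # Any 5xx error means failing, regardless of how many 4xx errors occurred.
        return "Red / Failing"
    if n_4xx > 0:
        return "Yellow / At risk"
    return "Green / Passing"


# Illustrative counts only.
statuses = [
    service_health_status(0, 0),
    service_health_status(3, 0),
    service_health_status(3, 1),
]
```

Checking for 5xx errors first reflects the table's precedence: a single server-side error outranks any number of request-side errors.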

Explore deployment data tracing

Premium

Tracing is a premium feature. Contact your DataRobot representative or administrator for information on enabling this feature.

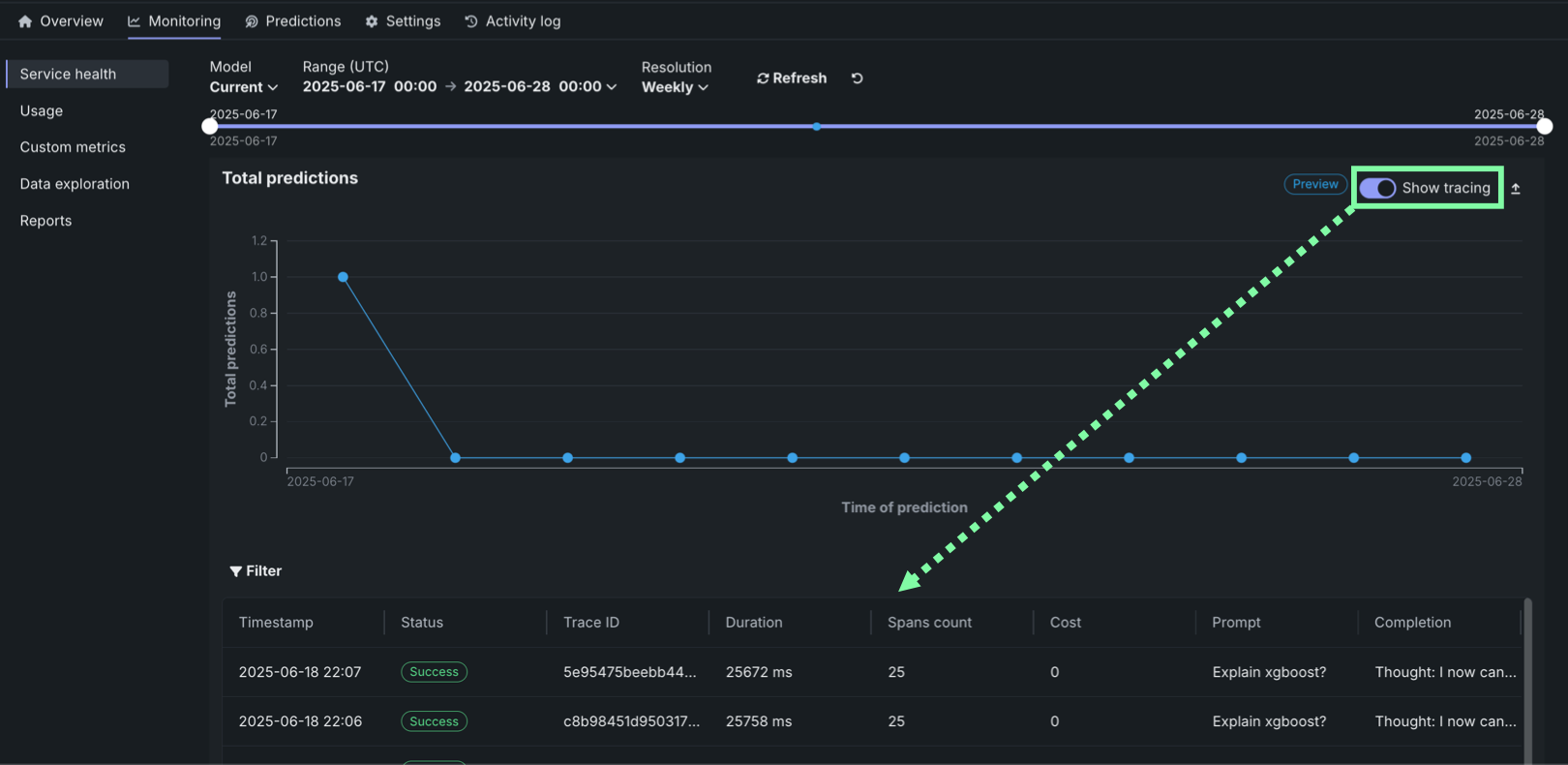

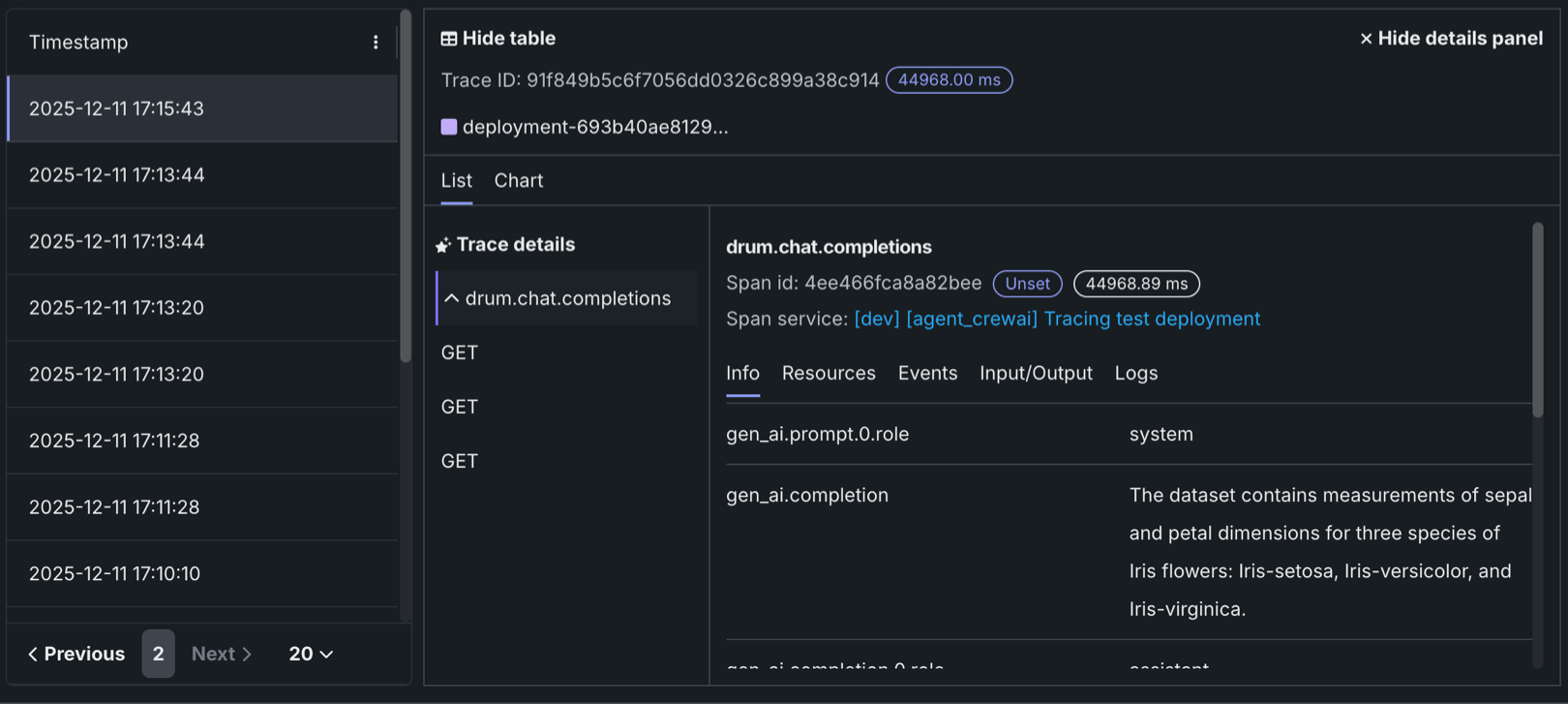

On the Service health tab of a custom or external model deployment, you can view the tracing table below the Total predictions chart. To view the tracing table, in the upper-right corner of the Total predictions chart, click Show tracing.

Traces represent the path taken by a request to a model or agentic workflow. DataRobot uses the OpenTelemetry framework for tracing. A trace follows the entire end-to-end path of a request, from origin to resolution. Each trace contains one or more spans, starting with the root span. The root span represents the entire path of the request and contains a child span for each individual step in the process. The root (or parent) span and each child span share the same Trace ID.

In the Tracing table, you can review the following fields related to each trace:

| Column | Description |
|---|---|
| Timestamp | The date and time of the trace in YYYY-MM-DD HH:MM format. |
| Status | The overall status of the trace, including all spans. The Status will be Error if any dependent task fails. |
| Trace ID | A unique identifier for the trace. |
| Duration | The amount of time, in milliseconds, it took for the trace to complete. This value is equal to the duration of the root span (rounded) and includes all actions represented by child spans. |
| Spans count | The number of completed spans (actions) included in the trace. |
| Cost | If cost data is provided, the total cost of the trace. |
| Prompt | The user prompt related to the trace. |
| Completion | The agent or model response (completion) associated with the prompt for the trace. |
| Tools | The tool or tools called during the request represented by the trace. |
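The relationship between spans and the Status, Duration, and Spans count columns can be sketched with a simple data model. The `Span` class and `summarize_trace` function below are hypothetical and do not use the OpenTelemetry SDK; the `"OK"` label for a trace without failures is also an assumption, since the table names only the Error status.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """Simplified span record (hypothetical, not the OpenTelemetry data model)."""
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    start_ns: int
    end_ns: int
    ok: bool = True


def summarize_trace(spans):
    """Derive table-style fields for a single trace from its spans."""
    if len({s.trace_id for s in spans}) != 1:
        raise ValueError("all spans in a trace must share one trace ID")
    roots = [s for s in spans if s.parent_id is None]
    if len(roots) != 1:
        raise ValueError("expected exactly one root span")
    root = roots[0]
    return {
        "trace_id": root.trace_id,
        # Duration is the root span's duration, rounded to whole milliseconds.
        "duration_ms": round((root.end_ns - root.start_ns) / 1_000_000),
        "spans_count": len(spans),
        # The trace is in error if any span failed; "OK" is an assumed label.
        "status": "OK" if all(s.ok for s in spans) else "Error",
    }


# Illustrative trace: a root span with two child steps, one of which failed.
trace = [
    Span("t1", "root", None, 0, 12_400_000),
    Span("t1", "retrieve", "root", 1_000_000, 4_000_000),
    Span("t1", "generate", "root", 4_500_000, 12_000_000, ok=False),
]
summary = summarize_trace(trace)
```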

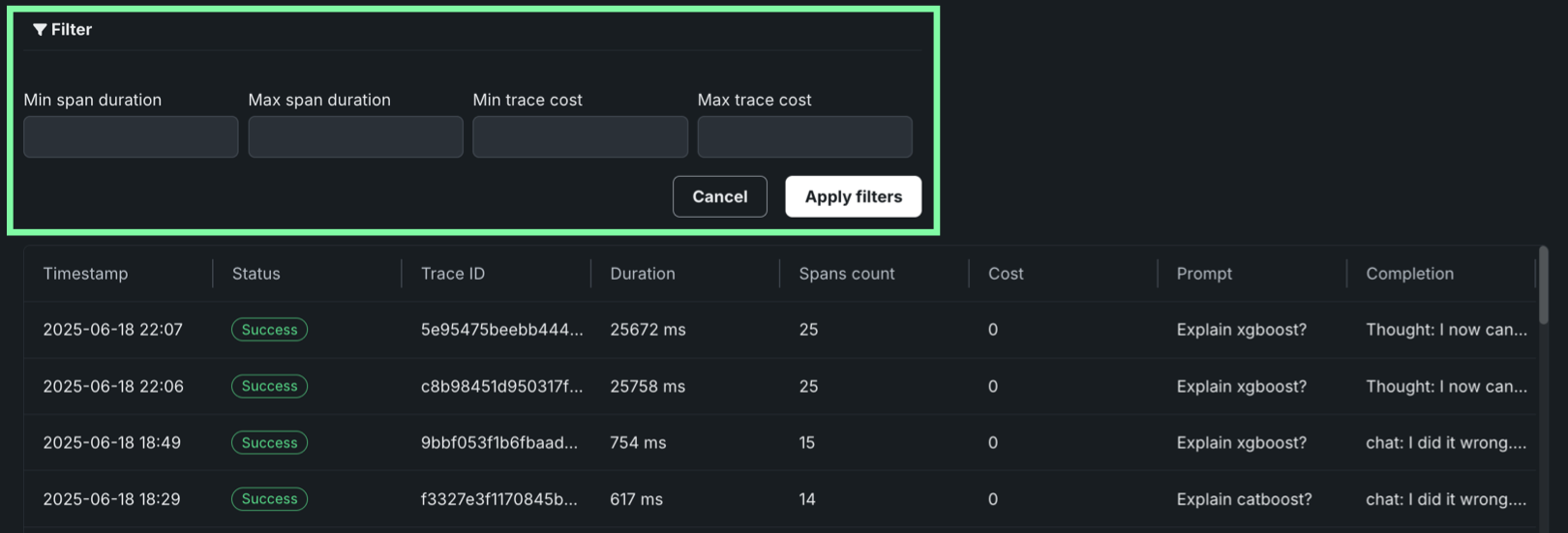

Click Filter to filter by Min span duration, Max span duration, Min trace cost, and Max trace cost. Span duration filters are specified in nanoseconds (ns), whereas the chart displays spans in milliseconds (ms).
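Because the filter and the chart use different units, it can help to convert a duration read from the chart before entering it in the filter. The helpers below are hypothetical convenience functions, not part of any DataRobot client.

```python
NS_PER_MS = 1_000_000  # 1 millisecond = 1,000,000 nanoseconds


def ms_to_ns(ms: float) -> int:
    """Convert a chart duration in milliseconds to a filter value in nanoseconds."""
    return int(round(ms * NS_PER_MS))


def ns_to_ms(ns: int) -> float:
    """Convert a filter value in nanoseconds back to milliseconds."""
    return ns / NS_PER_MS


# To show only spans that took at least 250 ms, enter this as Min span duration.
min_span_duration_ns = ms_to_ns(250)
```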

Filter accessibility

The Filter button is hidden when a span is expanded to detail view. To return to the chart view with the filter, click Hide details panel.

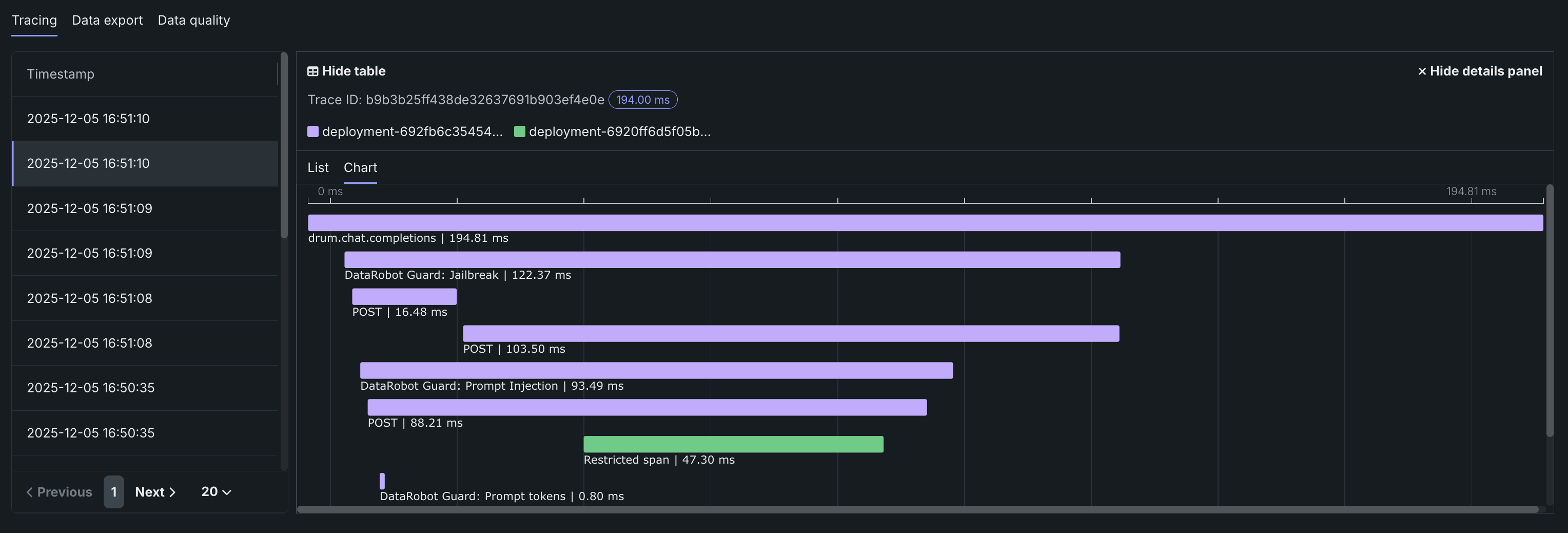

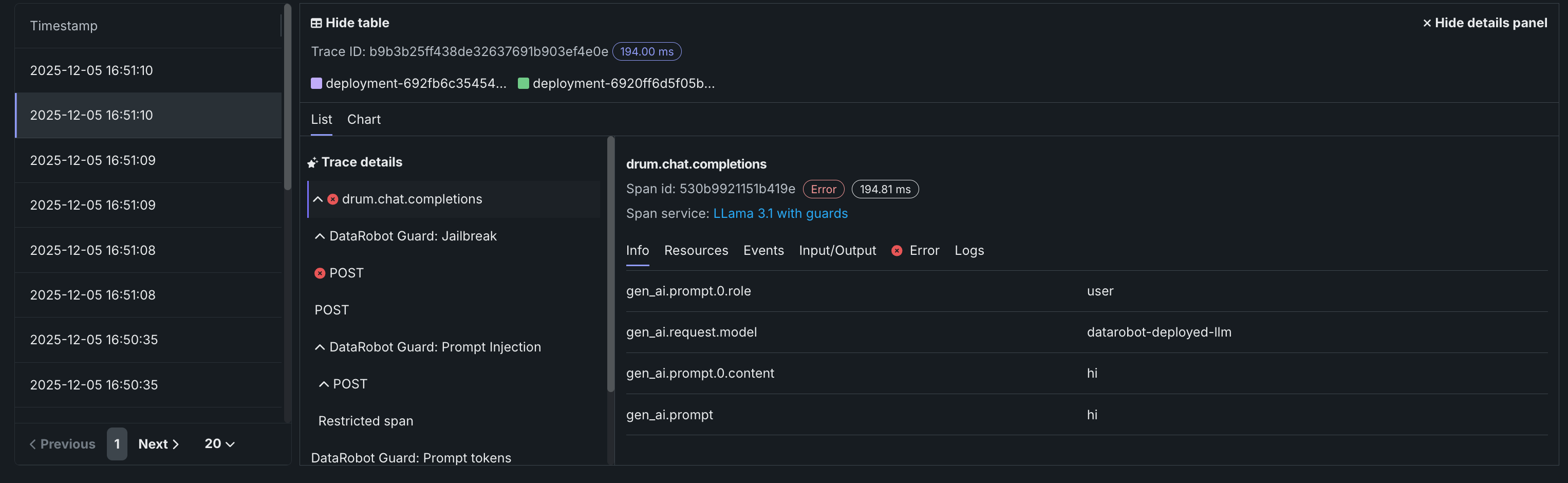

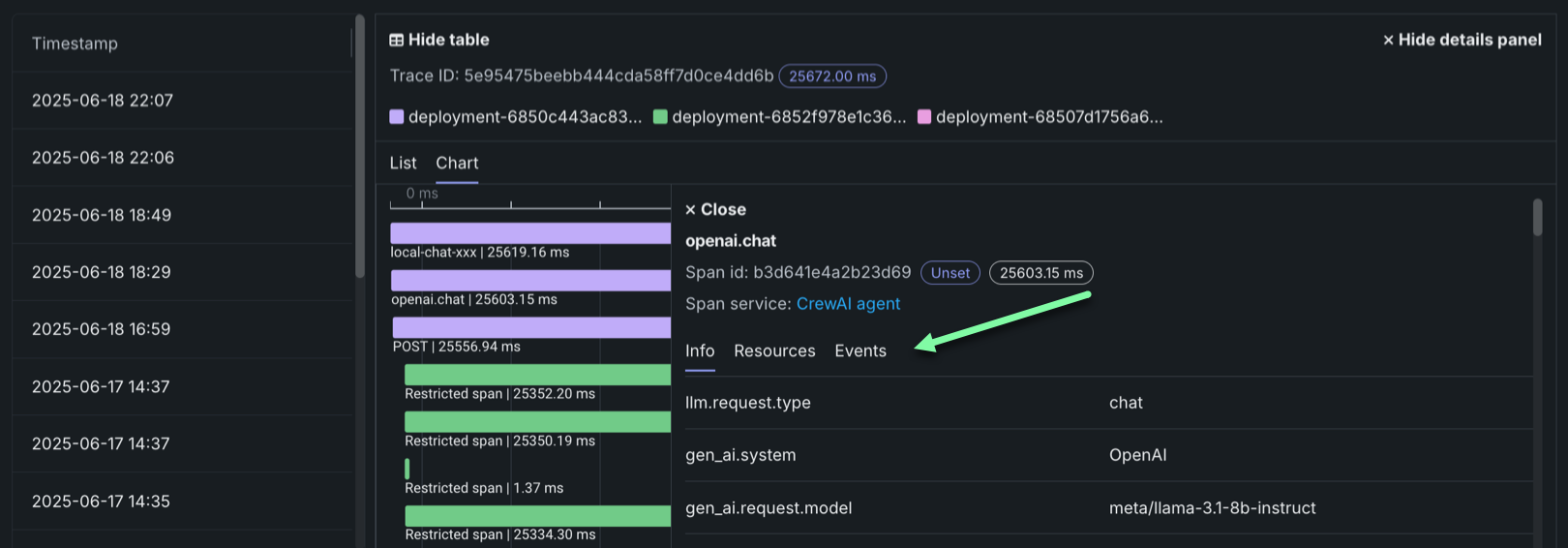

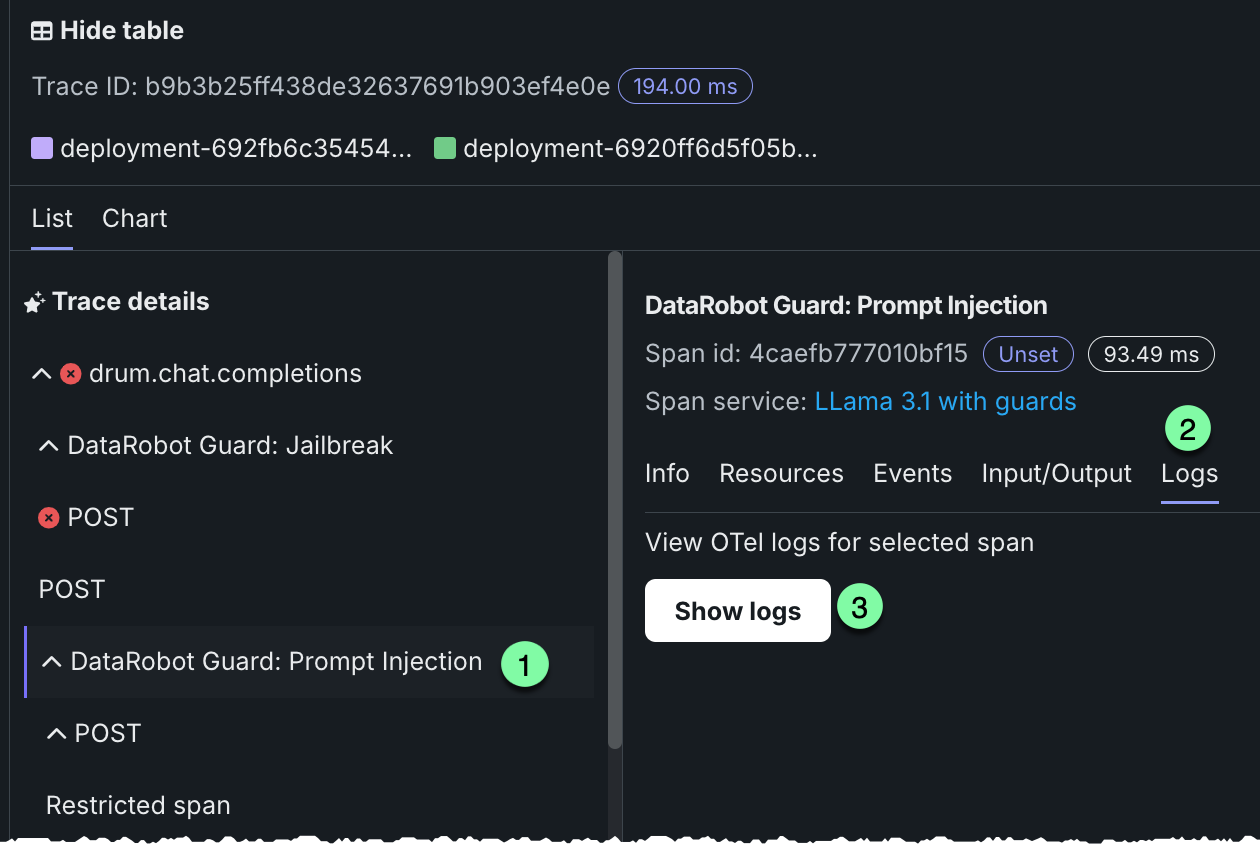

To review the spans contained in a trace, along with trace details, click a trace row in the Tracing table. The span colors correspond to a Span service, usually a deployment. Restricted span appears when you don’t have access to the deployment or service associated with the span. You can view spans in Chart format or List format.

Span detail controls

From either view, you can click Hide table to collapse the Timestamps table or Hide details panel to return to the expanded Tracing table view.

For either view, click the Span service name to access the deployment or resource (if you have access). Additional information, dependent on the configuration of the generative AI model or agentic workflow, is available on the Info, Resources, Events, Input/Output, Error, and Logs tabs. The Error tab only appears when an error occurs in a trace.

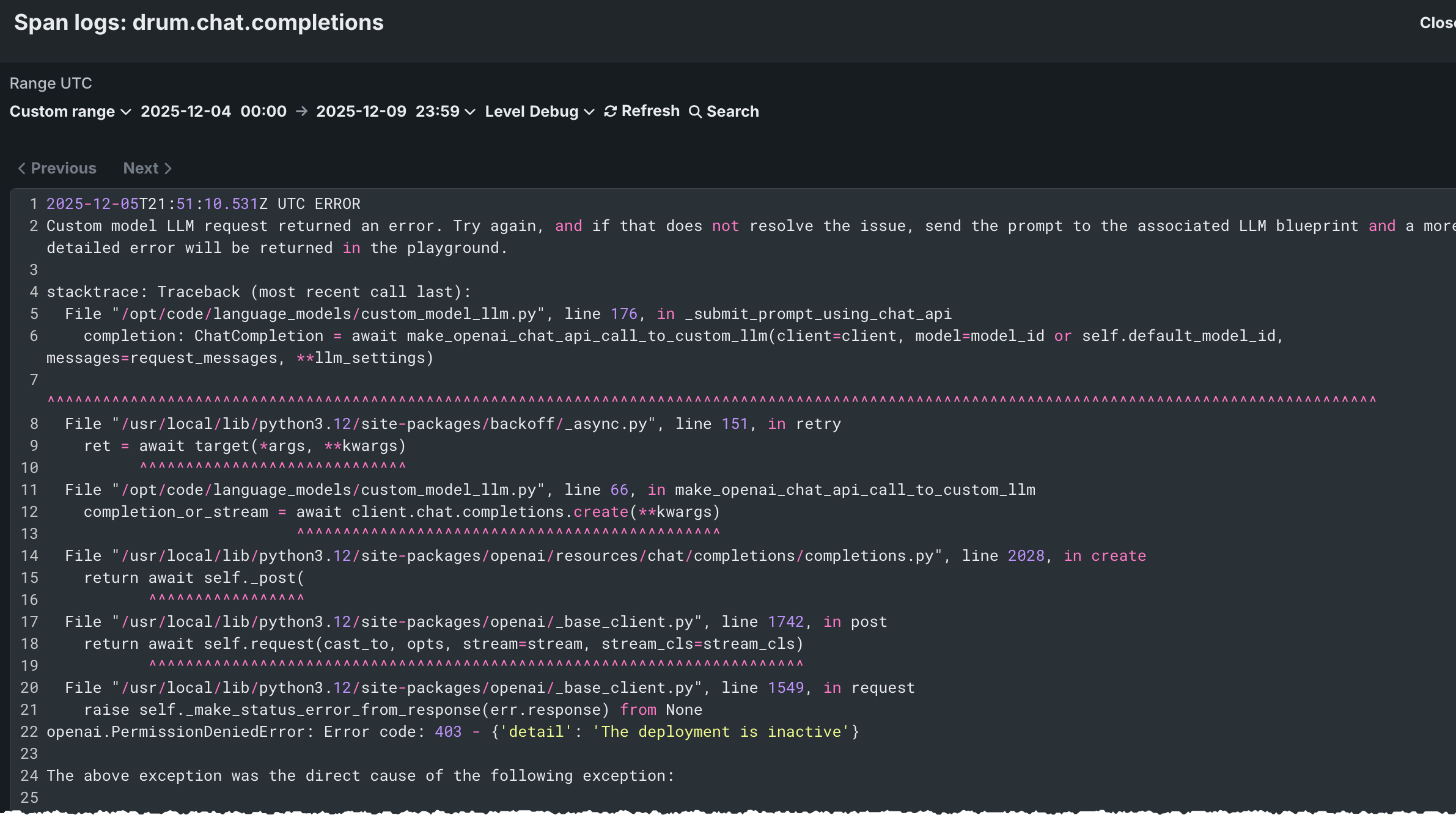

Filter tracing logs

From the list view, you can display OTel logs for a span. The results shown are a subset of the full deployment logs, and are accessed as follows:

- Open the list view and select a span under Trace details.

- Click the Logs tab.

- Click Show logs.