Automated deployment and replacement of Scoring Code in AzureML

Premium

Automated deployment and replacement of Scoring Code in AzureML is a premium feature. Contact your DataRobot representative or administrator for information on enabling this feature.

Feature flag: Enable the Automated Deployment and Replacement of Scoring Code in AzureML (Premium feature)

Create a DataRobot-managed AzureML prediction environment to deploy DataRobot Scoring Code in AzureML. With DataRobot management enabled, the external AzureML deployment has access to MLOps features, including automatic Scoring Code replacement.

Service health information for external models and monitoring jobs

Service health information is unavailable for external agent-monitored deployments and deployments with predictions uploaded through a prediction monitoring job.

Create an AzureML prediction environment

To deploy a model in AzureML, first create a custom AzureML prediction environment:

-

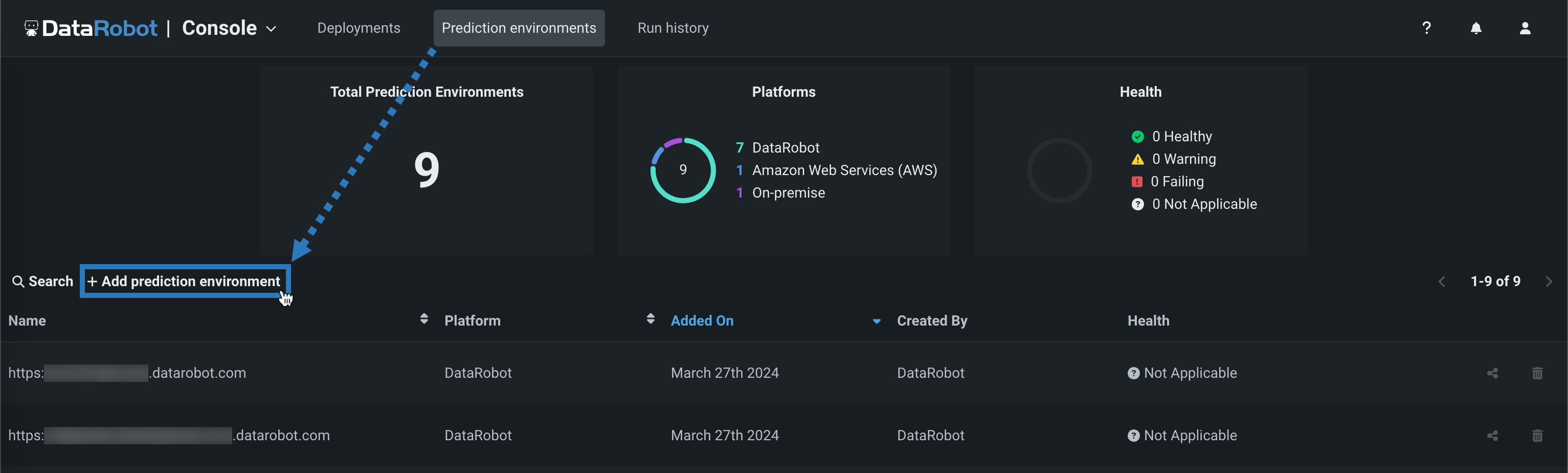

Open the Console > Prediction environments page and click + Add prediction environment.

-

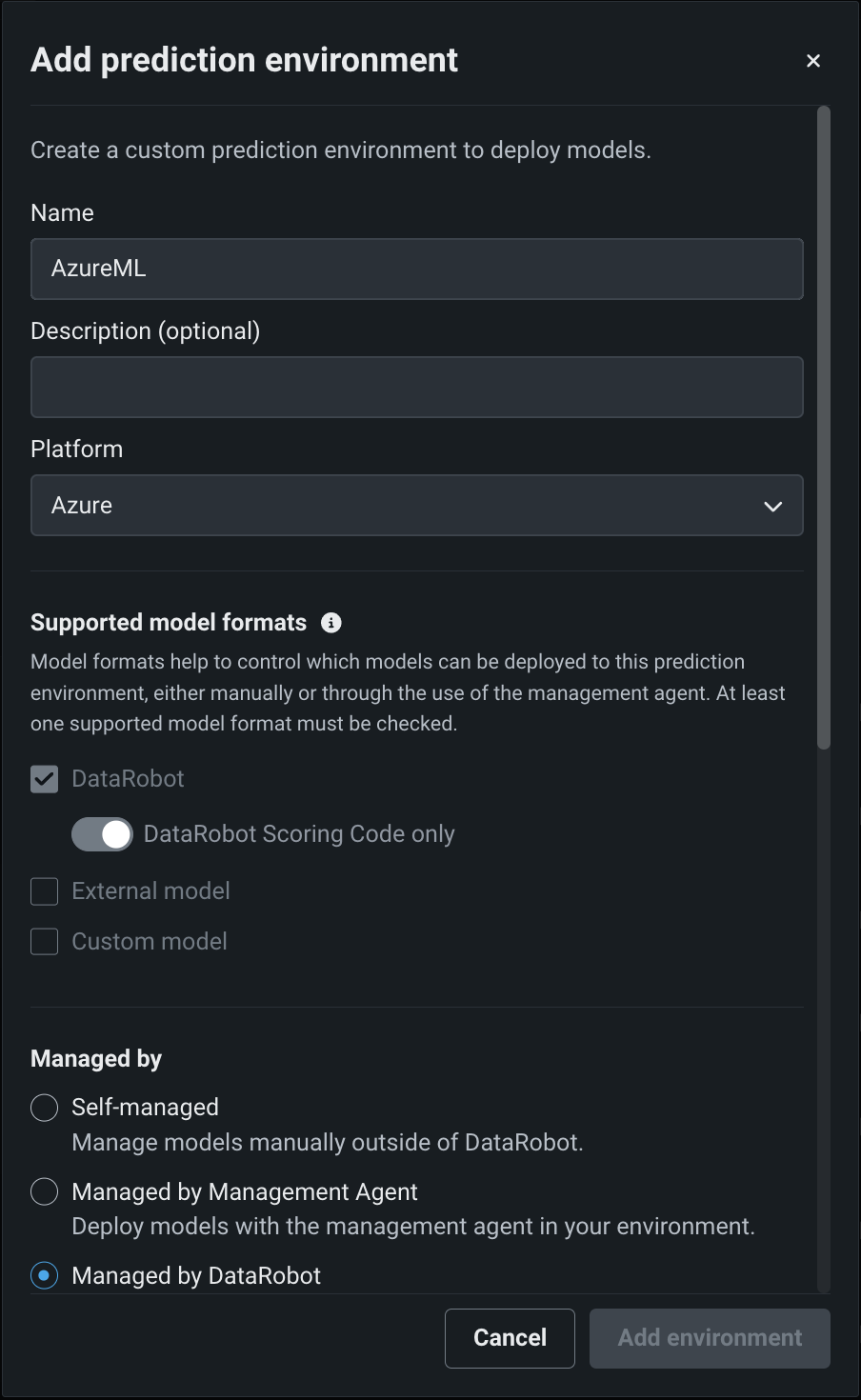

In the Add prediction environment dialog box, configure the prediction environment settings:

-

Enter a descriptive Name and an optional Description of the prediction environment.

-

Select Azure from the Platform dropdown.

-

Select the Managed by DataRobot option to allow this prediction environment to automatically package and deploy DataRobot Scoring Code models through the Management Agent.

The Supported model formats settings are automatically set to DataRobot and DataRobot Scoring Code only and can't be changed, as this is the only model format supported by AzureML.

-

-

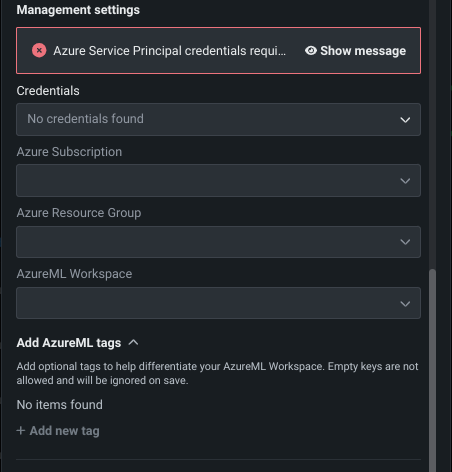

In the Management settings section, select the related Azure service principal Credentials. Configure the Azure Subscription, Azure Resource Group, and AzureML Workspace fields accessible using the provided Credentials.

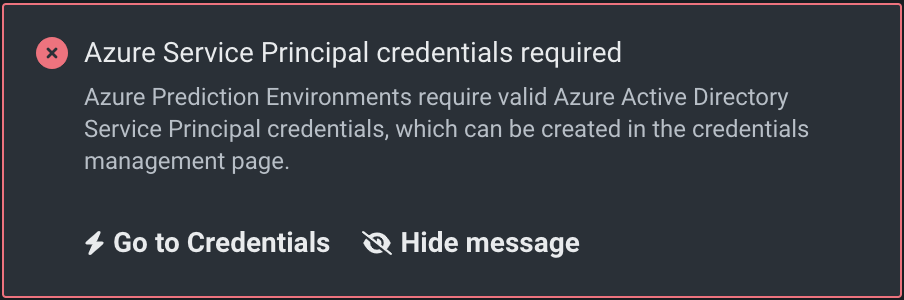

Azure service principal credentials required

DataRobot management of Scoring Code in AzureML requires existing Azure Service Principal Credentials. If you don't have existing credentials, the Azure Service Principal credentials required alert appears, directing you to Go to Credentials to create Azure Service Principal credentials.

To create the required credentials, for Credential type, select Azure Service Principal. Then, enter a Client ID, Client Secret, Azure Tenant ID, and a Display name. To validate and save the credentials, click Save and sign in.

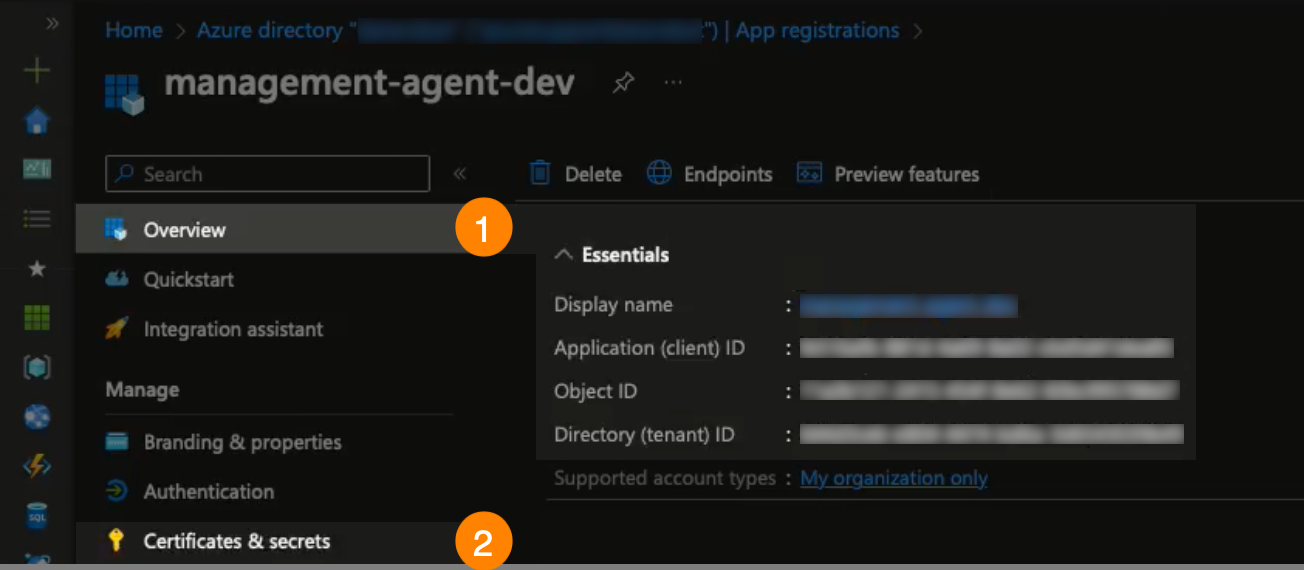

You can find these IDs and the display name on Azure's App registrations > Overview tab (1) and generate secrets on the App registration > Certificates and secrets tab(2):

In addition, if you are using tags for governance and resource management in AzureML, click AzureML tags and then + Add new tag to add the required tags to the prediction environment.

- (Optional) To connect to and retrieve data from Azure Event Hubs for monitoring, in the Monitoring settings section, click Enable monitoring and configure the Event Hubs Namespace, Event Hubs Instance, and Managed Identities fields. This requires valid Credentials, an Azure Subscription ID, and an Azure Resource Group.

  You can also click Environment variables and then + New environment variables to add environment variables to the prediction environment.

- After configuring the environment settings, click Add environment.

The AzureML environment is now available from the Prediction environments page.
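The Azure Subscription, Azure Resource Group, and AzureML Workspace fields in the Management settings identify one nested Azure resource: a workspace lives in a resource group, which lives in a subscription. As a minimal sketch that is not part of DataRobot, the hypothetical helper below assembles the standard Azure Resource Manager ID of an AzureML workspace from those three values, which can help when checking that the service principal has a role assignment at the right scope. The function name and example values are illustrative assumptions.

```python
import re

# Azure subscription IDs are GUIDs: 8-4-4-4-12 hexadecimal characters.
_GUID = re.compile(r"^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$")


def workspace_resource_id(subscription_id: str, resource_group: str, workspace: str) -> str:
    """Build the ARM resource ID of an AzureML workspace (hypothetical helper).

    Layout: /subscriptions/{sub}/resourceGroups/{rg}/providers/
            Microsoft.MachineLearningServices/workspaces/{ws}
    """
    if not _GUID.match(subscription_id):
        raise ValueError(f"subscription ID is not a GUID: {subscription_id!r}")
    if not resource_group or not workspace:
        raise ValueError("resource group and workspace names must be non-empty")
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        "/providers/Microsoft.MachineLearningServices"
        f"/workspaces/{workspace}"
    )


if __name__ == "__main__":
    # Placeholder values; substitute the ones selected in the Management settings.
    print(workspace_resource_id(
        "00000000-0000-0000-0000-000000000000", "my-resource-group", "my-workspace"
    ))
```

The resulting string is the kind of value you could pass as the --scope argument of az role assignment list to see which roles the service principal holds on that workspace.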

Deploy a model to the AzureML prediction environment

Once you've created an AzureML prediction environment, you can deploy a model to it:

-

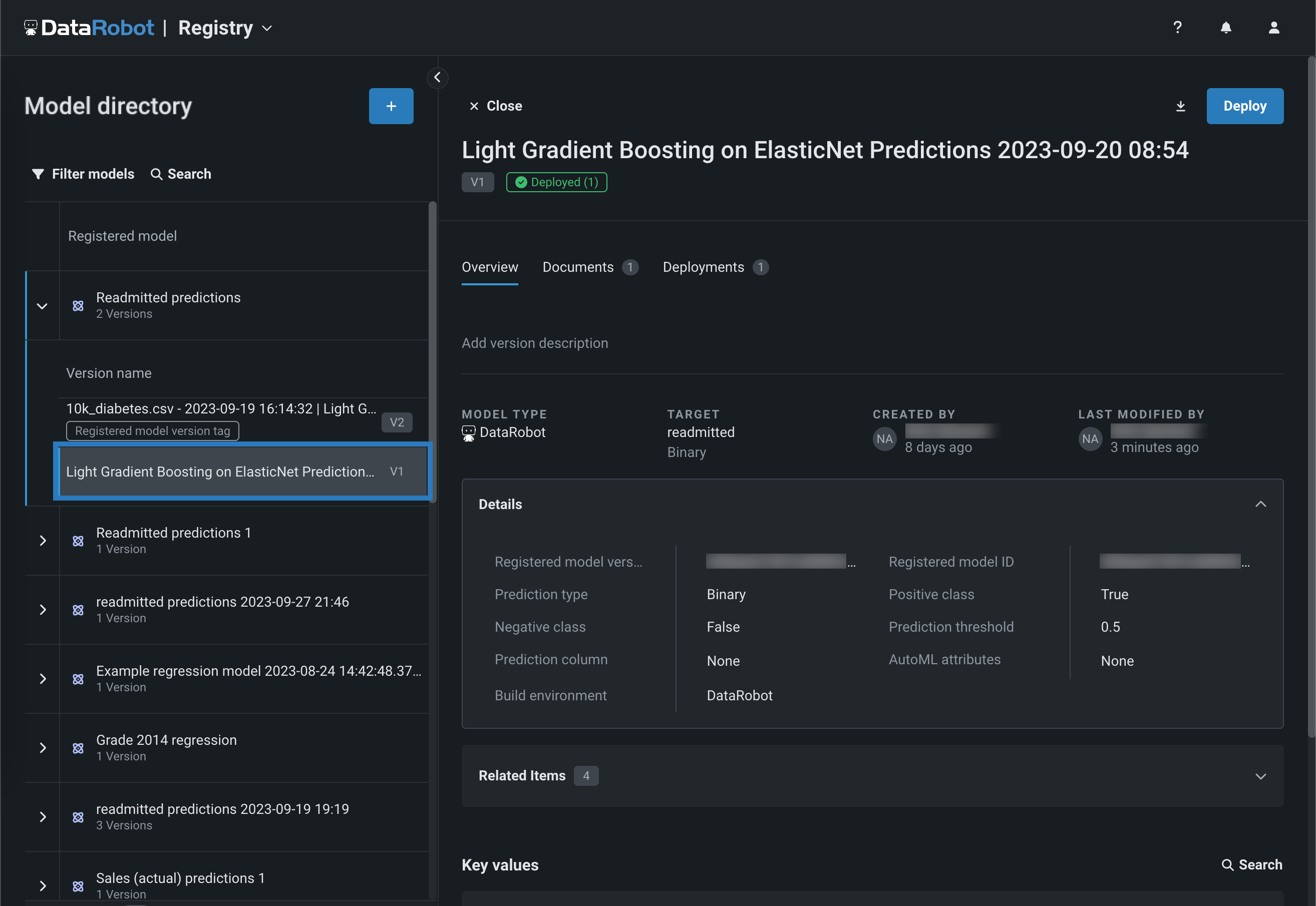

On the Registry > Models tab, in the table of registered models, click the registered model containing the version you want to deploy, opening the list of versions.

Model support

AzureML prediction environments do not support models without Scoring Code support.

- From the list of versions, click the Scoring Code-enabled version you want to deploy. This opens the registered model version panel.

-

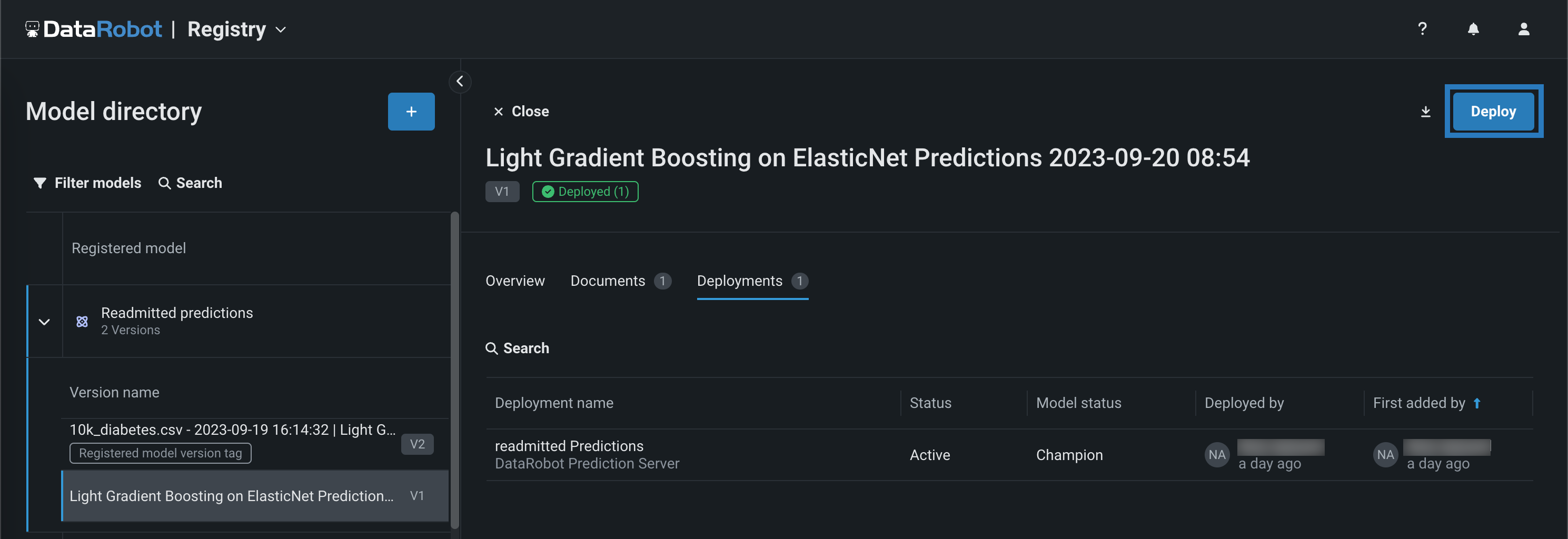

In the upper-right corner of any tab in the registered model version panel, click Deploy.

-

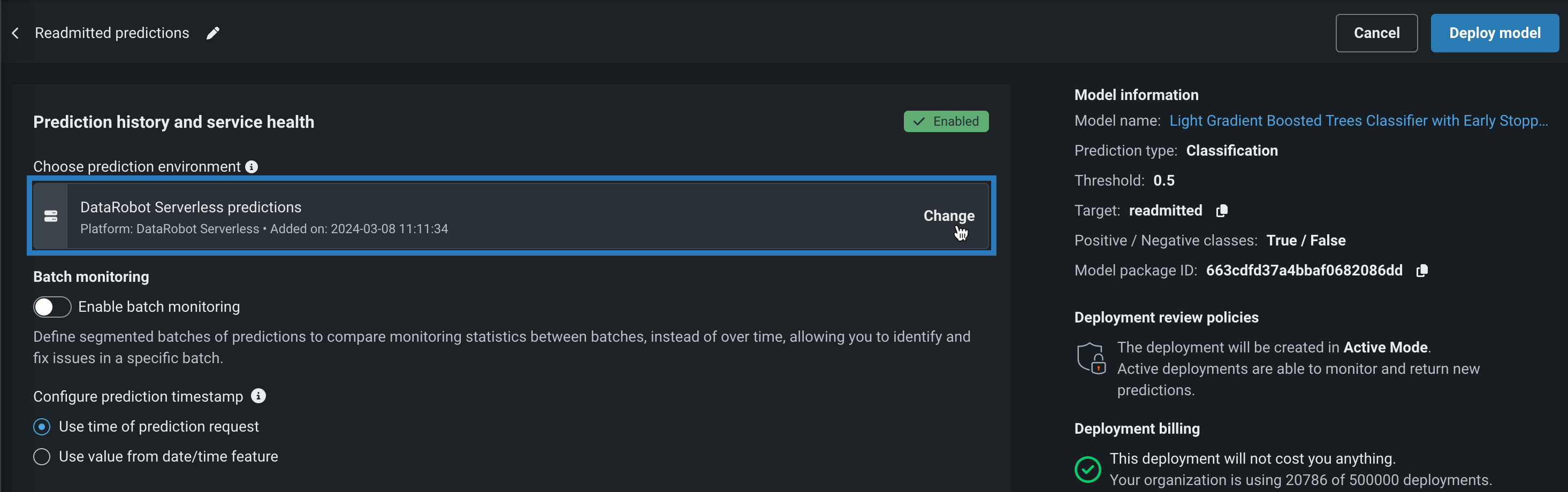

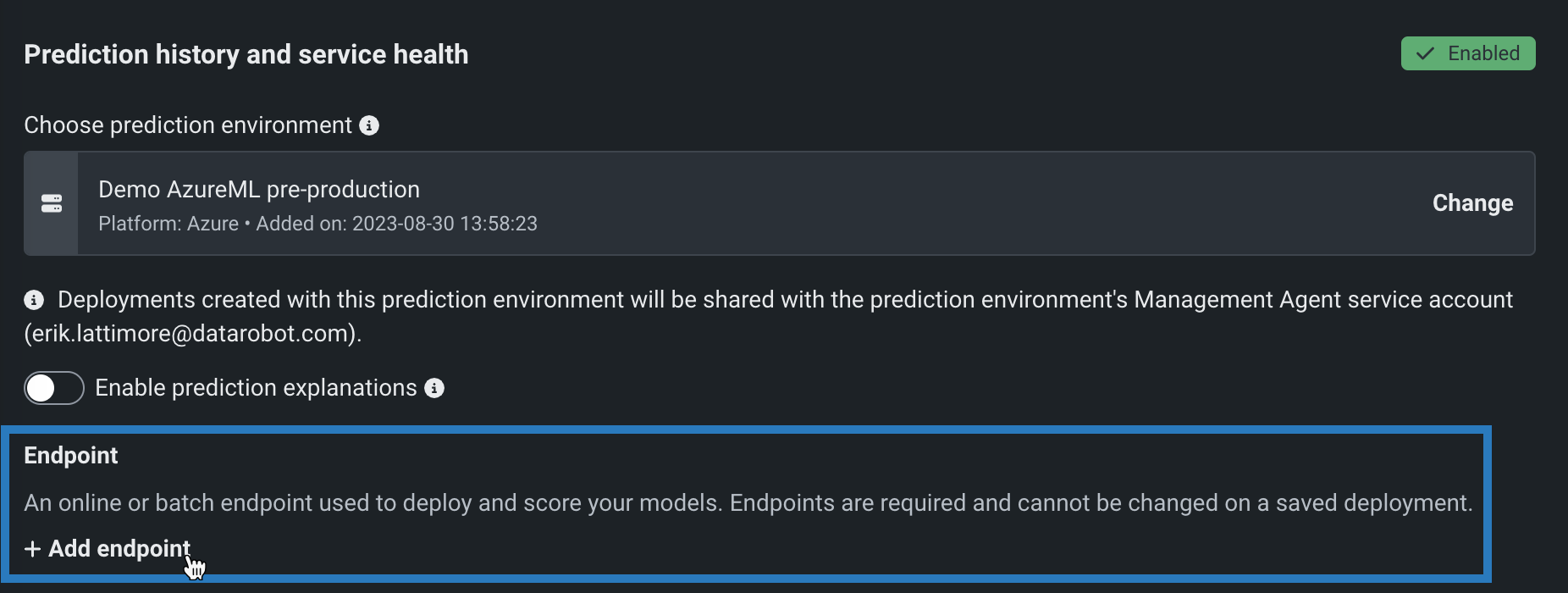

In the Prediction history and service health settings, under Choose prediction environment, click Change.

-

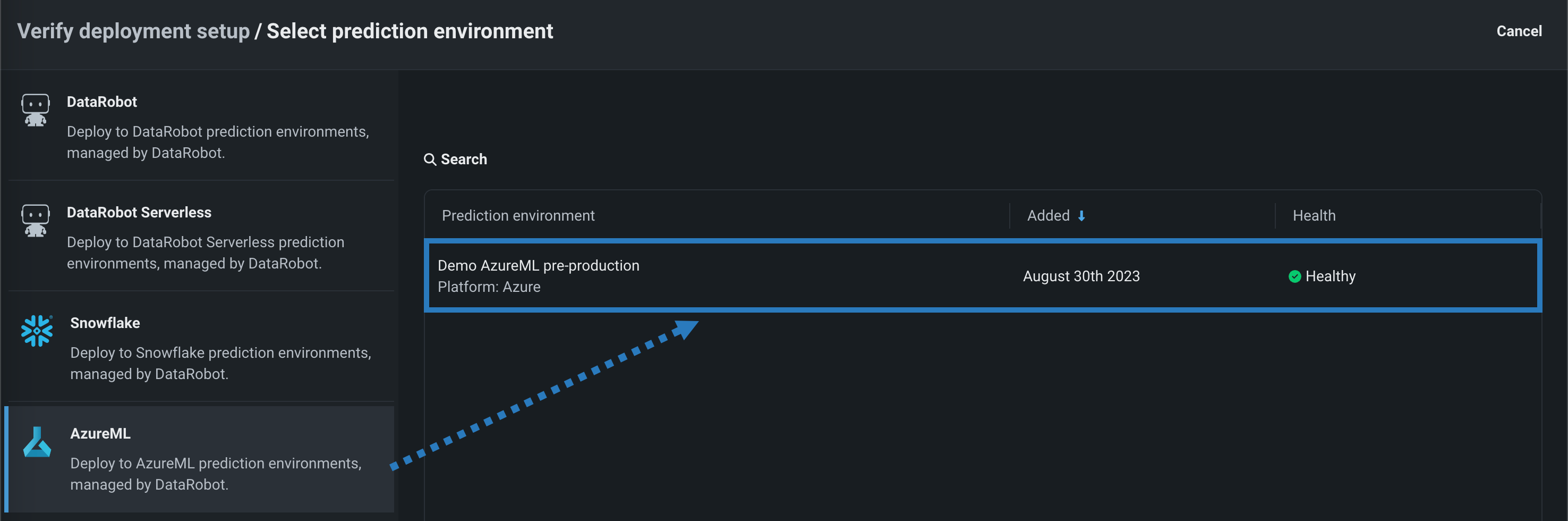

In the Select prediction environment panel, click AzureML, and then click the prediction environment you want to deploy to.

- With an AzureML environment selected, in the Prediction history and service health section, under Endpoint, click + Add endpoint.

-

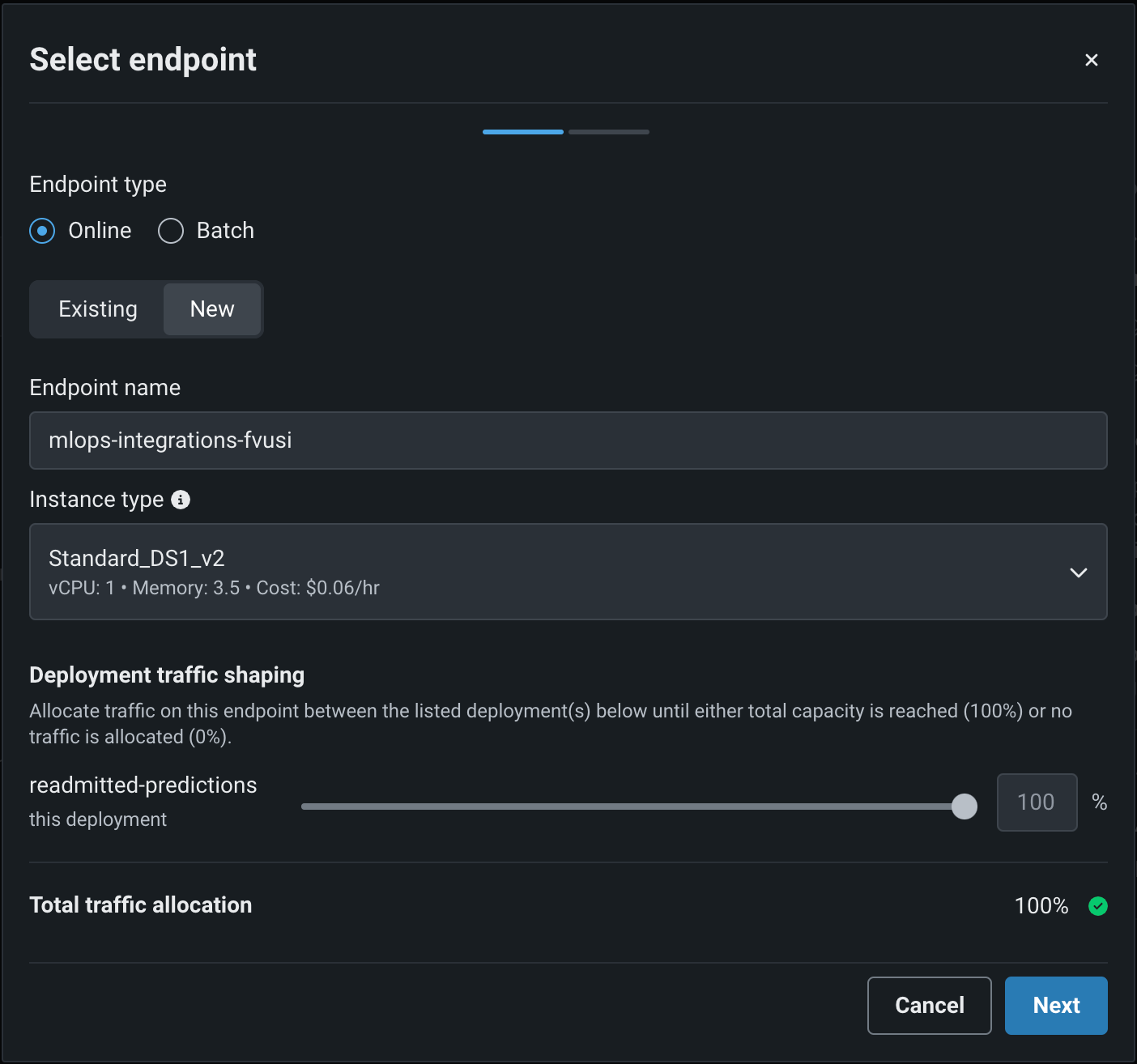

In the Select endpoint dialog box, define an Online or Batch endpoint, depending on your expected workload, and then click Next.

- On the next page, optionally define additional Environment key-value pairs to provide extra parameters to the Azure deployment interface, and then click Confirm.

- Configure the remaining deployment settings and click Deploy model.

While the deployment is Launching, you can monitor the status events on the deployment's Monitoring > Service health tab under Recent activity > Agent activity.

Make predictions in AzureML

After deploying a model to an AzureML prediction environment, you can use the code snippet from the Predictions > Portable predictions tab to score data in AzureML. Before running the code snippet, you must provide the required credentials in either of the following ways:

- Export the Azure Service Principal’s secrets as environment variables locally before running the snippet:

  | Environment variable | Description |
  |---|---|
  | AZURE_CLIENT_ID | The Application (client) ID on the App registrations > Overview tab. |
  | AZURE_TENANT_ID | The Directory (tenant) ID on the App registrations > Overview tab. |
  | AZURE_CLIENT_SECRET | The client secret value generated on the App registrations > Certificates and secrets tab. |

- Install the Azure CLI and run the az login command to allow the portable predictions snippet to use your personal Azure credentials.
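These two options match credential sources in the Azure Identity library for Python: its EnvironmentCredential reads AZURE_CLIENT_ID, AZURE_TENANT_ID, and AZURE_CLIENT_SECRET, and its AzureCliCredential reuses an az login session. Assuming the portable predictions snippet authenticates through one of these (an assumption; check the snippet itself), the hypothetical stdlib-only helper below reports which option appears to be in place before you run it. It only inspects environment variables and PATH; it does not validate anything with Azure.

```python
import os
import shutil
from typing import List, Mapping, Optional

# Variables a service principal login expects (names from the table above).
SERVICE_PRINCIPAL_VARS = ("AZURE_CLIENT_ID", "AZURE_TENANT_ID", "AZURE_CLIENT_SECRET")


def missing_service_principal_vars(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the service principal variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in SERVICE_PRINCIPAL_VARS if not env.get(name)]


def credential_source(env: Optional[Mapping[str, str]] = None) -> str:
    """Report which credential option appears to be configured (hypothetical helper).

    Returns "service-principal" when all three variables are set,
    "azure-cli" when they are incomplete but the az executable is on PATH,
    and "none" otherwise. Whether az login has actually been run is not checked.
    """
    if not missing_service_principal_vars(env):
        return "service-principal"
    if shutil.which("az") is not None:
        return "azure-cli"
    return "none"


if __name__ == "__main__":
    source = credential_source()
    print(f"Credential source: {source}")
    if source == "none":
        print("Missing:", ", ".join(missing_service_principal_vars()))
```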

Important

Deployments to AzureML Batch and Online endpoints use different APIs than standard DataRobot deployments:

- Online endpoints accept JSON or CSV input and return results as JSON.

- Batch endpoints accept CSV input and write the results to a CSV file.
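To illustrate these input and output formats, here is a minimal stdlib sketch: it serializes records to CSV (usable as an online request body or a batch input file), prepares but does not send a request to an online endpoint, decodes a JSON online response without assuming its schema, and reads a CSV results file from a batch job. The scoring URI pattern, the endpoint key, the text/csv content type, and the Bearer authorization header are assumptions about a typical AzureML managed online endpoint, not details confirmed on this page; the portable predictions snippet remains the reference for the exact request your deployment expects.

```python
import csv
import io
import json
import urllib.request
from typing import Dict, Iterable, List


def rows_to_csv(rows: Iterable[Dict[str, object]], columns: List[str]) -> str:
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        writer.writerow(row)
    return buffer.getvalue()


def build_online_request(scoring_uri: str, key: str, csv_text: str) -> urllib.request.Request:
    """Prepare a POST with a CSV body for an online endpoint (assumed headers; not sent)."""
    return urllib.request.Request(
        scoring_uri,
        data=csv_text.encode("utf-8"),
        headers={
            "Content-Type": "text/csv",  # assumption: CSV input declared this way
            "Authorization": f"Bearer {key}",  # assumption: key-based auth
        },
        method="POST",
    )


def parse_online_response(body: bytes) -> object:
    """Online endpoints return JSON; decode it without assuming a schema."""
    return json.loads(body.decode("utf-8"))


def read_batch_results(csv_text: str) -> List[Dict[str, str]]:
    """Batch endpoints write CSV; read the results file back as dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


if __name__ == "__main__":
    payload = rows_to_csv([{"age": 42, "income": 55000}], ["age", "income"])
    # Hypothetical endpoint name, region, and key.
    request = build_online_request(
        "https://my-endpoint.eastus.inference.ml.azure.com/score", "my-endpoint-key", payload
    )
    print(request.get_method(), request.full_url)
    # urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```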